
Comments

-

@windlessuser If you try it at low settings, I don't mind, as long as you let me know when you're going to do it and wait for an okay :). It's good to do security tests! (I just have to make sure I'm at my monitoring system, haha.)

-

@windlessuser Testing it myself right now: 800 connections sending in hellfire, and the CPU load doesn't go above 1 percent xD

-

@MrJimmy Of course! One thing I've learned from pentesting since I was 15 is that the best way to test security is with real-life attacks.

I don't mind if people perform security tests, hell, I'd do it myself!

For DDoS tests, I do like to watch live to see whether everything holds. Of course, in a real attack I probably won't get to watch it happen live, but it's a responsible way of testing imo.

Beyond that, the only thing I ask is that you report any security flaws you find instead of abusing them. If you do that, I'll thank you rather than get angry :)

-

@MrJimmy Right now I'm actually under a real DDoS, but it looks like the server is holding up very well :)

-

I went with NetData; let me upload a partial screenshot of what I just witnessed :) @MrJimmy

-

hacker: @linuxxx Holy shit! That must be so fun to watch, hahah! Knowing that the system you built/configured is working perfectly as intended. Cheers!

-

@hacker Normally I get around 50 connections. My own DDoS attack made it to nearly 1K connections :)

-

hacker: @linuxxx What about the anonymous analytics you have going on? I'd love to implement something like that for my site. Any suggestions?

-

@MrJimmy I think it's the server itself that easily keeps up. I'm running a heavy load test again right now. (I rent this server solely for devRant stuff and it has 14 GB of RAM, so I think I'll be fine for now :)

-

@MrJimmy @hacker Just flooded myself with about 100K connections and started getting time-outs, haha. Going to look into how to block those kinds of attacks now :)

-
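One common way to blunt this kind of flood (not necessarily what was done here) is per-client rate limiting: remember each client's recent requests and refuse new ones once a threshold is reached. A minimal sliding-window sketch in Python, assuming requests are keyed by client IP; the class name and the 3-requests-per-second demo limit are hypothetical. It only helps against floods from a few sources; a distributed attack from many addresses needs filtering further upstream, and limits like this are often enforced in the firewall or reverse proxy rather than in application code.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests per `window` seconds for each client key.

    Hypothetical helper: keys are assumed to be client IP strings.
    """

    def __init__(self, limit, window, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock
        self._hits = defaultdict(deque)  # client key -> timestamps of accepted requests

    def allow(self, client):
        now = self.clock()
        hits = self._hits[client]
        # Forget requests that have slid out of the time window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            return False  # over the limit: the caller should refuse or drop the request
        hits.append(now)
        return True


if __name__ == "__main__":
    fake_now = [0.0]  # controllable clock so the demo is deterministic
    limiter = SlidingWindowLimiter(limit=3, window=1.0, clock=lambda: fake_now[0])
    print([limiter.allow("203.0.113.7") for _ in range(5)])  # [True, True, True, False, False]
    fake_now[0] = 1.5  # 1.5 s later the earlier requests have left the window
    print(limiter.allow("203.0.113.7"))  # True
```

The `_hits` map keeps an entry for every client ever seen, so a long-running version would also need to evict idle keys.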

hacker: @linuxxx Oh haha! That's cool. Also, look into Client.js (you can store the info you get in a db).

You can get more information about visitors this way if you want, anonymously of course.

-

@MrJimmy Please do, that would be awesome! In the meantime I'm trying to fine-tune the firewall/IDS, haha.

-

@Awlex I've currently fucked something up in nginx's config, so my whole web server is down; I'm working as fast as possible to find this freaking issue 😅

-

ace48: Are you using a CDN/caching system?

If not, you should look into it... It can lower the number of DB connections during high traffic or DDoS attacks.

Edit: Care to share the URL? :D

-

@bas1948 The entire CMS runs on Redis, which is a caching system :). No CDN; I want to keep the requested files etc. under my own control for privacy/security reasons.

-
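The point that a cache lowers DB connections under heavy traffic comes from the cache-aside pattern: reads go to the cache first, and only a miss touches the database, so a burst of identical requests costs a single query. A minimal Python sketch; `TTLCache` is a hypothetical in-process stand-in for Redis so the example runs without a server, and `get_post`, `load_from_db` and the 300-second TTL are illustrative, not the blog's actual CMS code.

```python
import time


class TTLCache:
    """In-process stand-in for a Redis-style cache: string keys with an expiry time."""

    def __init__(self, clock=time.monotonic):
        self.clock = clock
        self._store = {}  # key -> (expires_at, value)

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._store[key]  # expired entries behave like misses
            return None
        return value

    def set(self, key, value, ttl):
        self._store[key] = (self.clock() + ttl, value)


def get_post(post_id, cache, load_from_db, ttl=300):
    """Cache-aside read: serve from the cache, touch the database only on a miss."""
    key = f"post:{post_id}"
    post = cache.get(key)
    if post is None:
        post = load_from_db(post_id)  # the only code path that needs a DB connection
        cache.set(key, post, ttl)
    return post


if __name__ == "__main__":
    db_calls = []

    def load_from_db(post_id):
        db_calls.append(post_id)  # record every trip to the "database"
        return {"id": post_id, "title": "Introduction"}

    cache = TTLCache()
    for _ in range(1000):  # a burst of 1000 requests for the same post
        get_post(1, cache, load_from_db)
    print(len(db_calls))  # 1
```

With the redis-py client, `cache.get(key)` and `cache.set(key, value, ttl)` would map roughly onto `r.get(key)` and `r.set(key, serialized_value, ex=ttl)`, with the post serialized (for example as JSON) because Redis stores strings and bytes.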

@hacker I'm wondering how it gathers the IP address and the GeoIP data. Both of those are a no-go for me ;)

-

hacker: @linuxxx Hahah, I knew you'd say something about that. Well, first of all, don't worry. I specifically made it so the client is anonymous. No one but you can see that information (not even me).

I made my own Promise function to fetch that data from a public API :)

-

ace48: @linuxxx Your resources aren't returning a caching header. Correct me if I'm wrong, but I believe that makes the browser fetch them every time.

Anyway, I also host my own files on S3 but serve them through CloudFront... A win-win solution :)

May I also ask why you're fetching data through a GET parameter?

-
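Servers tell browsers how long they may reuse a resource through the `Cache-Control` response header. Without it, browsers fall back on their own heuristics (for example based on `Last-Modified`), so behaviour is unpredictable rather than strictly "fetch every time". A sketch of one possible policy in Python; the extension list, the one-hour fallback and the `v` query parameter used for versioned assets are assumptions for illustration, and the same policy could equally be expressed in the nginx config that serves these files.

```python
from urllib.parse import parse_qs, urlsplit

# Illustrative policy values; tune them for the actual site.
STATIC_EXTENSIONS = (".css", ".js", ".png", ".jpg", ".svg", ".woff2")
ONE_YEAR = 365 * 24 * 60 * 60  # seconds


def cache_headers(url):
    """Return the Cache-Control header to send for a requested URL."""
    parts = urlsplit(url)
    if parts.path.endswith(STATIC_EXTENSIONS):
        if "v" in parse_qs(parts.query):
            # Versioned asset: the URL changes when the file changes, so cache it long-term.
            return {"Cache-Control": f"public, max-age={ONE_YEAR}, immutable"}
        # Unversioned asset: cache briefly so edits still reach visitors within an hour.
        return {"Cache-Control": "public, max-age=3600"}
    # Pages: browsers may store them but must revalidate with the server before reuse.
    return {"Cache-Control": "no-cache"}


if __name__ == "__main__":
    print(cache_headers("/css/style.css?v=3"))  # {'Cache-Control': 'public, max-age=31536000, immutable'}
    print(cache_headers("/css/style.css"))      # {'Cache-Control': 'public, max-age=3600'}
    print(cache_headers("/?post=42"))           # {'Cache-Control': 'no-cache'}
```

Note that `no-cache` does not forbid storing a response; it forces revalidation, which keeps pages fresh while still allowing cheap `304 Not Modified` replies.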

@bas1948 I honestly have no fucking clue.

I'm a Linux engineer/backend programmer and I never deal with this kind of stuff xD

-

hacker: @linuxxx Would you be interested in caching the site's static content (CSS and whatnot)? Doing so would greatly improve the performance/speed of the blog.

-

ace48: Well, now you have something to research, I guess (no pun intended :D).

I suggest using POST parameters instead of GET, and definitely use post IDs to fetch data rather than a string... Strings are a recipe for disaster.

-

@hacker Yeah, sure! But would caching still make sure that new versions get downloaded from the server?

-

ace48: @linuxxx Yep, caching means static resources are saved on the client's PC until the header expires, or until you change the file name or URL, e.g. style.css?v=1.

As for strings, I meant plain text... Sorry.

For instance, a unique hash shouldn't cause problems, but let's say you want to add another introduction... Would you fetch it as ?post=introduction2?

That doesn't make sense, and it's confusing.

PS: Sorry if my explanations aren't clear, I haven't slept for the past 3 days.

-
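The advice to fetch posts by ID instead of by a plain-text name largely comes down to input handling: if the parameter can only ever be a positive integer, it is trivial to validate, and malformed or malicious values (such as a directory-traversal path) are rejected up front. A minimal sketch in Python; the `post` parameter name follows the `?post=introduction2` example above, while the function name and the 10-digit limit are assumptions.

```python
import re
from urllib.parse import parse_qs, urlsplit

# A positive integer of at most 10 ASCII digits, no leading zeros (assumed ID format).
POST_ID_PATTERN = re.compile(r"[1-9][0-9]{0,9}")


def post_id_from_url(url):
    """Return the numeric ?post= ID from a URL, or None if it is missing or malformed."""
    values = parse_qs(urlsplit(url).query).get("post", [])
    # Exactly one value, and it must look like an ID; anything else is rejected
    # before it can reach the data layer.
    if len(values) != 1 or not POST_ID_PATTERN.fullmatch(values[0]):
        return None
    return int(values[0])


if __name__ == "__main__":
    for url in ["/blog?post=42", "/blog?post=introduction2", "/blog?post=../../etc/passwd", "/blog"]:
        print(url, "->", post_id_from_url(url))
```

The explicit `[0-9]` class is used instead of `\d` because, for `str` patterns, `\d` also matches non-ASCII Unicode digits.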

hacker: @linuxxx I agree with @bas1948. You can update your static files by giving them different file names. Look up "cache busting"; people have come up with pretty clever ways.

And also, @bas1948, you really should go to sleep, man.

-
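Cache busting (changing the URL whenever a file changes, like `style.css?v=1`) is often automated by deriving the version from the file's contents, so it can never be forgotten after an edit. A minimal Python sketch, assuming the blog's templates can call a helper when rendering asset links; `versioned_url` and the 8-character hash length are illustrative choices, not something from the blog's code.

```python
import hashlib


def versioned_url(url_path, content, length=8):
    """Append ?v=<short SHA-256 of the content> so the URL changes whenever the file does."""
    digest = hashlib.sha256(content).hexdigest()[:length]
    return f"{url_path}?v={digest}"


if __name__ == "__main__":
    old = versioned_url("/css/style.css", b"body { color: black; }")
    new = versioned_url("/css/style.css", b"body { color: navy; }")
    print(old)  # same path, hash of the old stylesheet
    print(new)  # same path, different hash after the edit
```

In a template this might be called as `versioned_url("/css/style.css", Path("static/css/style.css").read_bytes())`, where the `static/` path is hypothetical. Because every edit produces a new URL, the files can safely be served with a long `max-age`.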

Related Rants

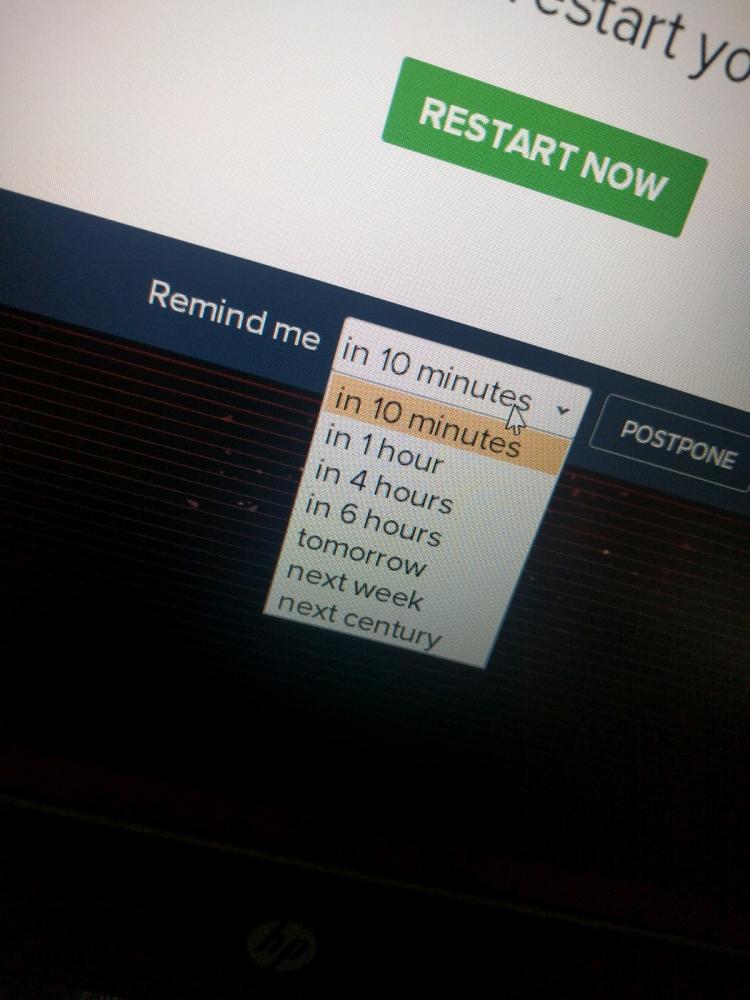

Did you say security?

Did you say security? 10 points for next century option.

10 points for next century option.

Just looked at the anonymous analytics I collect on the security/privacy blog.

No SQL injection attacks yet (they'd be useless anyway, as I don't use MySQL/MariaDB for the database).

Directory Traversal attacks. Really? 🤣

Nice try, guys.

rant

security

really

attacks

much security