
Search - "it's all just layers"

-

A couple of years ago, I was working in a computer shop as a "technician", I was 15, first job I ever had.

One day an elderly lady came into the shop, probably 50'ish, she and her whole family "suffered" from electromagnetic radiation, and the mother had the worst suffering. She complained about her TV box that just had died.

I accept the tuner and see it's wrapped with 10 layers of aluminium foil, with a tiny hole for the IR receiver.

The whole box smells like burnt electronics, and the foil gets darker with each layer I unwrap. I try to explain to her that the box gets warm and overheats when it's wrapped like this, and she's lucky that it didn't catch fire.

I further explain to her that she will not get a new box, because the warranty does not cover _this_. The mother tells me she has to wrap it like this, because she gets headaches when she's watching the news.

She then proceeds to go into rage mode and gets her whole family into the shop, where all of them start yelling at me, the younger kids start throwing stuff down from the shelves and touching the TVs with sticky fingers (literally, sticky, like yuck!).

Unsure what to do, boss is in a meeting, and my colleague is busy in the back.

So I calmly tell them that in this building there are 4 wireless networks, 3 wireless phones, high voltage cables run in the wall behind me, there are factory tracks 20 meters behind the building, the business next door is an electrician, and they're standing in front of a wall with 30-40 TVs, 5 HDMI splitters, 3 TV boxes and a Blu-ray player. And they've all been standing in front of them for the last 10 minutes.

They all suddenly feel really sick and run out of the store, never to be seen again. From that day, I decided I'll never work in a shop again, and pursued my dreams to become a developer.

TL;DR: Family is "sensitive" to electromagnetic radiation, almost burnt down their house because of stupidity, yelled at me. I decided to pursue my dream as a developer.

-

I realize I've ranted about this before, but...

Fuck APIs.

First the fact that external services can throw back 500 errors or timeouts when their maintainer did a drunk deploy (but you properly handled that using caching, workers, retry handlers, etc, right? RIGHT?)...
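For anyone who did not, in fact, handle that: a minimal sketch of a retry handler with exponential backoff. The `TransientError` class, the `call_with_retry` name, and the default attempt count and delays are all made up for illustration; a real client would map its own 5xx and timeout errors onto something like this.

```python
import time


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (HTTP 5xx, timeouts)."""


def call_with_retry(fetch, attempts=3, base_delay=0.5, sleep=time.sleep):
    """Call fetch(); on TransientError wait and retry with exponential backoff."""
    for attempt in range(attempts):
        try:
            return fetch()
        except TransientError:
            if attempt == attempts - 1:
                raise  # out of retries; the caller can fall back to a cache
            # Delay doubles each round: base_delay, 2 * base_delay, ...
            sleep(base_delay * 2 ** attempt)
```

The `sleep` parameter is injectable only so the backoff can be tested without actually waiting.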

Then the fact that they all speak a variety of languages and dialects (Oh fuck why does that endpoint return a JSON object with int keys instead of a simple array... wait the params are separated with pipe characters? And the other endpoint uses SOAP? Fuck I need to write another wrapper class around the client...)
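The kind of wrapper code this forces on you looks roughly like the sketch below. The helper names `as_list` and `split_pipe_params` are hypothetical, and the payload shapes are just the ones complained about above, not any particular service's format.

```python
import json


def as_list(payload):
    """Return a list whether the API sent an array or an object with int keys."""
    if isinstance(payload, list):
        return payload
    if isinstance(payload, dict):
        # JSON object keys are always strings, so sort numerically, not lexically.
        return [payload[key] for key in sorted(payload, key=int)]
    raise TypeError(f"unexpected payload type: {type(payload).__name__}")


def split_pipe_params(raw):
    """Split a pipe-separated parameter string such as 'a|b|c'."""
    return raw.split("|") if raw else []


if __name__ == "__main__":
    print(as_list(json.loads('{"0": "a", "2": "b", "10": "c"}')))  # ['a', 'b', 'c']
```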

But the worst thing: It makes developers live in this happy imaginary universe where "malicious" is not a word.

"I found this cloud service which checks our code style" - hmm ok, they seem trustworthy. Hope they don't sell our code, but whatever.

"And look at this thing, it automatically makes database backups, just have to connect it to DigitalOcean" - uhhh wait...

"And I just built this API client which sends these forms to be OCR processed" - Fuck... stop it... there are bank account numbers on those forms... Where's that API even located? What company?

* read their privacy policy *

"We can not guarantee the safety of your personal data, use at your own risk [...] we are located in Russia".

I fucking hate these millennial devs who literally fail to get their head out of the cloud.

Somehow they think it's easier to write all these NodeJS handlers and layers around some API, which probably just calls ImageMagick + Tesseract on the other side.

If I wasn't so fucking exhausted, I'd chop off their heads... but they're like hydra, you seal one privacy breach and another is waiting to be merged, these kids just keep spewing their crap into easy packages, they keep deploying shitty heroku apps... ugh.

😖

-

3 rants for the price of 1, isn't that a great deal!

1. HP, you braindead fucking morons!!!

So recently I disassembled this HP laptop of mine to unfuck it at the hardware level. Some issues with the hinge that I had to solve. So I had to disassemble not only the bottom of the laptop but also the display panel itself. Turns out that HP - being the certified enganeers they are - made the following fuckups, with probably many more that I didn't even notice yet.

- They used fucking glue to ensure that the bottom of the display frame stays connected to the panel. Cheap solution to what should've been "MAKE A FUCKING DECENT FRAME?!" but a royal pain in the ass to disassemble. Luckily I was careful and didn't damage the panel, but the chance of that happening was most certainly nonzero.

- They connected the ribbon cables for the keyboard in such a way that you have to reach all the way into the spacing between the keyboard and the motherboard to connect the bloody things. And some extra spacing on the ribbon cables to enable servicing with some room for actually connecting the bloody things easily.. as Carlos Mantos would say it - M-m-M, nonoNO!!!

- Oh and let's not forget an old flaw that I noticed ages ago in this turd. The CPU goes straight to 70°C during boot-up but turning on the fan.. again, M-m-M, nonoNO!!! Let's just get the bloody thing to overheat, freeze completely and force the user to power cycle the machine, right? That's gonna be a great way to make them satisfied, RIGHT?! NO MOTHERFUCKERS, AND I WILL DISCONNECT THE DATA LINES OF THIS FUCKING THING TO MAKE IT SPIN ALL THE TIME, AS IT SHOULD!!! Certified fucking braindead abominations of engineers!!!

Oh and not only that, this laptop is outperformed by a Raspberry Pi 3B in performance, thermals, price and product quality.. A FUCKING SINGLE BOARD COMPUTER!!! Isn't that a great joke. Someone here mentioned earlier that HP and Acer seem to have been competing for a long time to make the shittiest products possible, and boy they fucking do. If there's anything that makes both of those shitcompanies remarkable, that'd be it.

2. If I want to conduct a pentest, I don't want to have to relearn the bloody tool!

Recently I did a Burp Suite test to see how the devRant web app logs in, but due to my Burp Suite being the community edition, I couldn't save it. Fucking amazing, thanks PortSwigger! And I couldn't recreate the results anymore due to what I think is a change in the web app. But I'll get back to that later.

So I fired up bettercap (which works at lower network layers and can conduct ARP poisoning and DNS cache poisoning) with the intent to ARP poison my phone and get the results straight from the devRant Android app. I haven't used this tool since around 2017 due to the fact that I kinda lost interest in offensive security. When I fired it up again a few days ago in my PTbox (which is a VM somewhere else on the network) and today again in my newly recovered HP laptop, I noticed that both hosts now have an updated version of bettercap, in which the options completely changed. It's now got different command-line switches and some interactive mode. Needless to say, I have no idea how to use this bloody thing anymore and don't feel like learning it all over again for a single test. Maybe this is why users often dislike changes to the UI, and why some sysadmins refrain from updating their servers? When you have users of any kind, you should at all times honor their installations, give them time to change their individual configurations - tell them that they should! - in other words give them a grace time, and allow for backwards compatibility for as long as feasible.

3. devRant web app!!

As mentioned earlier I tried to scrape the web app's login flow with Burp Suite but every time that I try to log in with its proxy enabled, it doesn't open the login form but instead just makes a GET request to /feed/top/month?login=1 without ever allowing me to actually log in. This happens in both Chromium and Firefox, in Windows and Arch Linux. Clearly this is a change to the web app, and a very undesirable one. Especially considering that the login flow for the API isn't documented anywhere as far as I know.

So, can this update to the web app be rolled back, merged back to an older version of that login flow or can I at least know how I'm supposed to log in to this API in order to be able to start developing my own client?

-

Just give me a link to the web font man. Oh, there isn't one? You used a font that we can't legally use? Do you understand how that works? I don't want your 300MB Photoshop document. I don't want to comb through your ridiculous stack of insane layers and artboards and deal with the images you didn't bundle into the project or try and make sense out of your arbitrary spacing and random font sizes. You're not an artist, you're just a crappy visual designer handing off an unthoughtful glorified wire-frame - and now I have to sort out all the things that you were paid to do. It's really easy. 1. Pick a color, 2. Pick 2 fonts that are legal and available to use on the web, 3. Build a few patterns for font sizes and weights - write them down, 4. Pick your images. Make them double the size you expect them to be on the site + put them in a folder, 5. Add a readme and list the font patterns and the link to the webfont, 6. Quickly scribble the wire-frame out, 7. Take a photo of it, 8. Put it all in a folder and send it to me.

-

Help.

I'm a hardware guy. If I do software, it's bare-metal (almost always). I need to fully understand my build system and tweak it exactly to my needs. I'm the sorta guy that needs memory alignment and bitwise operations on a daily basis. I'm always cautious about processor cycles, memory allocation, and power consumption. I think twice if I really need to use a float there and I consider exactly what cost the abstraction layers I build come at.

I had done some web design and development, but that was back in the day when you knew all the workarounds for IE 5-7 by heart and when people were disappointed there wasn't going to be a XHTML 2.0. I didn't build anything large until recently.

Since that time, a lot has happened. Web development has evolved in a way I didn't really fancy, to say the least. Client-side rendering for everything the server could easily do? Of course. Wasting precious energy on mobile devices because it works well enough? Naturally. Solving the simplest problems with a gigantic mess of dependencies you don't even bother to inspect? Well, how else are you going to handle all your sensitive data?

I was going to compare this to the Arduino culture of using modules you don't understand in code you don't understand. But then again, you don't see consumer products or customer-specific electronics powered by an Arduino (at least not that I'm aware of).

I'm just not fit for that shooting-drills-at-walls methodology for getting holes. I'm not against either easy or pretty-to-look-at solutions, but it just comes across as wasteful to me nowadays.

So, after my hiatus from web development, I've now been in a sort of internet platform project for a few months. I'm now directly confronted with all that you guys love and hate, frontend frameworks and Node for the backend and whatever. I deliberately didn't voice my opinion when the stack was chosen, because I didn't want to interfere with the modern ways and instead get some experience out of it (and I am).

And now, I'm slowly starting to feel like it was OKAY to work like this.

-

For almost twenty years I have sheltered in the protective, safe, warm bosom of Debian. For a long time, it had the largest body of available software of all the distros, and by far when Ubuntu rose to prominence. So I used Ubuntu for years for the depth of package availability, and because if something esoteric was released, it would almost certainly come out first on Ubuntu, and sometimes only on Ubuntu. I was happy. Things were good.

But over time, Ubuntu and even Debian started to lean harder and harder on gnome, which I've always hated, along with all desktop environments, as they obscure the system from the user, and introduce graphical layers of abstraction, so the actual job of getting things done becomes a black art, hidden behind gnome-specific tools. This is my preference, and it's been disheartening in recent years to see the direction the desktop appears to be taking.

Then I joined devrant in 2017, and until then, I had heard peripherally about Arch, but never more than that. I had not heard of Manjaro at all. People started posting success stories and happy screenshots, and I was intrigued.

In 2018 I built a windows machine to use for parsec streaming games that wouldn't run on my linux rig. For not a great deal of money, I built a solid machine that's unequivocally better than any machine I've ever used, and installed windows on it. For a while, I was pleased. I had the best of both worlds: a windows box to stream some games from, and a linux desktop for everything else.

But after a couple months, as proton matured, I found fewer and fewer reasons to use my windows machine. My use of it declined to where I was last week: it had been months since I'd even powered it on. It was the most powerful machine I've ever used, and it was just collecting dust behind the TV in the living room. The full realization came to me while I was fighting a battle in the Gnome Takeover War, and I realized: I don't have to do this.

I pulled the newer machine out from behind the TV and installed Manjaro architect edition on it. The flexibility in the install was staggering. I am using nilfs2 for my /boot and / partitions: an option that Ubuntu has never offered. Normally they just default you into the garbage ext4 filesystem, and if you can dig deep enough, you can install with something else, though you have to really want it, in my opinion.

But Manjaro has been a dream-come-true. Pacman is easily the best package manager I have ever used, and pamac's intuitive and easy commands are a great view into AUR. Booting into the virtual console instead of a display manager has been wonderful too. On Ubuntu, I had to disable systemd's version of runlevel 5 to even get it working. But I just popped my xrandr script into my .xinitrc, and X opens with startx in less than a second. On Ubuntu, it takes about 5-10 seconds.

This has nothing to do with Manjaro, but I also switched to Radeon for this install, and I couldn't be happier about that. No more "installing" nvidia's drivers.

No more gnome. No more PPAs. No more settling. I am a Manjaro user now. Full stop. Thank you, devrant, for bringing it to my attention.

-

Well... I had in over 15 years of programming a lot of PHP / HTML projects where I asked myself: What psychopath could have written this?

(PHP haters: Just go trolling somewhere else...)

In my current project I've "inherited" a project which had been running for around 15 years. The code base looked solid to me... (Article system for ERP, huge company / branches system, lots of other modules for internal use... All in all: Not small.)

The original goal was to port to PHP 7 and to give it a fresh layout. Seemed doable...

The first days passed by - porting to an asset system, cleaning up the base system (login / logout / session & cookies... you know the drill).

And that was where it all went haywire.

I really have no clue how someone could have been so ignorant to not even think twice before setting cookies or doing other "header related" stuff without at least checking the result codes...

Basically the authentication / permission system was fully fucked up. It relied on redirecting the user via header modification to the login page with an error set in a GET variable...

Uh boy. That ain't funny.

Ported to session flash messages, checked if headers were sent, hard exit otherwise - redirect.

But then I got to the first layers of the whole "OOP class" related shit...

It's basically "whack a mole".

Whoever wrote this, was as dumb and as ignorant to build up a daisy chain of commands for fixing corner cases of corner cases of the regular command... If you don't understand what I mean, take the following example:

Permissions are based on group (accumulation of single permissions) and single permissions - to get all permissions from a user, you need to fetch both and build a unique array.

Well... The "names" for permissions are not unique. I'd never expected someone to be so stupid. Yes. You could have two permissions named "article_search" - while relying on uniqueness.

All in all all permissions are fetched once for lifetime of script and stored to a cache...

To fix this corner case… There is another function that fetches the results from the cache and returns simply "one" of the rights (getting permission array).

In case you need to get the ID of the other (yes... two identifiers used in the project for permissions - name and ID (auto increment key))...

Let's write another function on top of the function on top of the function.
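For contrast, a rough sketch of what the lookup could look like if the auto-increment ID were the only key. This is not the project's code (that is PHP); the function names and the `(id, name)` tuple shape are assumptions for illustration.

```python
from collections import Counter


def effective_permissions(group_perms, single_perms):
    """Merge (id, name) pairs from groups and direct grants, keyed by unique ID."""
    merged = {}
    for perm_id, name in list(group_perms) + list(single_perms):
        merged[perm_id] = name
    return merged


def duplicate_names(permissions):
    """Return names that map to more than one ID, i.e. names unsafe as lookup keys."""
    counts = Counter(permissions.values())
    return sorted(name for name, n in counts.items() if n > 1)
```

With the ID as the key, a second "article_search" is just another entry, and `duplicate_names` lists the names that need cleaning up instead of hiding them behind yet another function.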

My brain is seriously in deep fried mode.

Untangling this mess is basically like getting pumped up with pain killers and trying to solve logic riddles - it just doesn't work....

So... From redesigning and porting to PHP 7, I've gone to basically rewriting the whole base system to MVC, porting and touching every script, untangling this dumb shit of "functions" / "OOP" [or whatever you call this garbage] and then hoping everything works...

A huge thanks to AURA. http://auraphp.com/

It's incredibly useful in this case, as it has no dependencies and makes it very easy to get a solid ground without writing a whole framework by myself.

Amen.

-

Biggest challenge I overcame as dev? One of many.

Avoiding a life sentence when the 'powers that be' targeted one of my libraries for the root cause of system performance issues and I didn't correct that accusation with a flame thrower.

What was the accusation? What I named the library. Yep. The *name* was causing every single problem in the system.

Panorama (a very, very expensive APM system at the time) identified my library in its analysis: the calls to/from SQL Server were the bottleneck.

We had one of Panorama's engineers on-site and he asked what (not the actual name) MyLibrary was and (I'll preface this by saying I did not know about, and wasn't involved in, any of the so-called 'research') a crack team of developers+managers researched the system thoroughly and found MyLibrary was used in just about every project. I wrote the .Net 1.1 MyLibrary as a mini-ORM to simplify the execution of database code (stored procs, etc) and gracefully handle+log database exceptions (auto-logged details such as the target db, stored procedure name, parameter values, etc, everything you'd need to troubleshoot database errors). This was before Dapper and the other fancy tools used by kids these days.

By the time the news got to me, there was a team cobbled together whose only focus was to remove any/every trace of MyLibrary from the code base. Using Waterfall, they calculated it would take at least a year to remove+replace MyLibrary with the equivalent ADO.Net plumbing.
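To give an idea of how thin a wrapper like that is, here is a minimal sketch in Python with `sqlite3` standing in for ADO.Net and SQL Server. It is not MyLibrary; `run_query` and the logger name are invented for the example.

```python
import logging
import sqlite3
import time

log = logging.getLogger("mylibrary_sketch")


def run_query(conn, sql, params=()):
    """Run a query; on failure log the statement and parameters, then re-raise."""
    started = time.perf_counter()
    try:
        return conn.execute(sql, params).fetchall()
    except sqlite3.Error:
        # Everything needed to troubleshoot: statement, parameter values, traceback.
        log.exception("query failed: sql=%r params=%r", sql, params)
        raise
    finally:
        # The measured time covers the database call plus the wrapper's own overhead.
        log.debug("query took %.3fs: %s", time.perf_counter() - started, sql)
```

The only code added around the database call is a timer and an exception handler, so a profiler that attributes the whole call to the wrapper is really measuring the query underneath it.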

In a department wide meeting:

DeptMgr: "This day forward, no one is to use MyLibrary to access the database! It's slow, unprofessionally named, and the root cause of all the database issues."

Me: "What about MyLibrary is slow? It's executing the standard ADO.Net code. The only extra bit of code is the exception handling to capture the details when the exception is logged."

DeptMgr: "We've spent the last 6 weeks with the Panorama engineer and he's identified MyLibrary as the cause. Company has spent over $100,000 on this software and we have to make fact based decisions. Look at this slide ... "

<DeptMgr shows a histogram of the stacktrace, showing MyLibrary as the slowest>

Me: "You do realize that the execution time is the database call itself, not the code. In that example, the invoice call, it's the stored procedure that's taking 5 seconds, not MyLibrary."

<at this point, DeptMgr is getting red-face mad>

AreaMgr: "Yes...yes...but if we stopped using MyLibrary, removing the unnecessary layers, will make the code run faster."

<typical headknodd-ers knod their heads in agreement>

Dev01: "The loading of MyLibrary takes CPU cycles away from code that supports our customers. Every CPU cycle counts."

<headknod-ding continues>

Me: "I'm really confused. Maybe I'm looking at the data wrong. On the slide where you highlighted all the bottlenecks, the histogram shows the latency is the database, I mean...it's right there, in red. Am I looking at it wrong?"

<this was meeting with 20+ other devs, mgrs, a VP, the Panorama engineer>

DeptMgr: "Yes you are! I know MyLibrary is your baby. You need to check your ego at the door and face the facts. Your MyLibrary is a failed experiment and needs to be exterminated from this system!"

Fast forward 9 months, with maybe 50% of the projects updated, I come across the documentation left by the Panorama engineer. Even after the removal of MyLibrary, there was zero increase in performance. The engineer recommended the DBAs start optimizing their indexes and the other N+1 problems discovered. I decide to ask the developer who led the re-write.

Me: "I see that removing MyLibrary did nothing to improve performance."

Dev: "Yes, DeptMgr was pissed. He was ready to throw the Panorama engineer out a window when he said the problems were in the database all along. Didn't you say that?"

Me: "Um, so is this re-write project dead?"

Dev: "No. Removing MyLibrary introduced all kinds of bugs. All the boilerplate ADO.Net code caused a lot of unhandled exceptions, then we had to go back and write exception handling code."

Me: "What a failure. What dipshit would think writing more code leads to less bugs?"

Dev: "I know, I know. We're so far behind schedule. We had to come up with something. I ended up writing a library to make replacing MyLibrary easier. I called it KnightRider. Like the TV show. Everyone is excited to speed up their code with KnightRider. Same method names, same exception handling. All we have to do is replace MyLibrary with KnightRider and we're done."

Me: "Won't the bottlenecks then point to KnightRider?"

Dev: "Meh, not my problem. Panorama meets primarily with the DBAs and the networking team now. I doubt we ever use Panorama to look at our C# code."

Needless to say, I was (still) pissed that they had used MyLibrary as a dirty word and a scapegoat for months when they *knew* where the problems were. Pissed enough for a flamethrower? Maybe.

-

Don't you hate it when your co-worker does dumb things, but thinks it's the "clean code" way?

The following is a conversation between me and a co-worker, who thinks he's superior to everyone because he thinks he's the only one who read the Clean Code series. Let's call him Bill.

Me: I think the feature we need is quite simple, our application needs to call this third party API, parse the response and pass it to the next step. Why do you need to bury everything under an abstraction of 4 layers?

Bill: bEcAuSe It'S dEcOuPlInG, aNd MaKe ThE cOdE tEsTaBlE

Me: I don't know man, you only need to abstract the third party api client, and then mock it if you want. Some interfaces you define make no sense at all. For example, this interface only has 1 concrete implementation, and I don't think it will ever have another. Besides, the concrete implementation only gets the input from the upper layer and passes it down to the lower layer. Why the extra step? I feel like you're using interface just for the sake of interface.
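What I had in mind is about this much code. It's a sketch, not our actual application: `ApiClient`, `handle`, `FakeClient` and the `"name"` field are all made-up names, and the "parsing" is a stand-in.

```python
from typing import Protocol


class ApiClient(Protocol):
    """The one seam worth abstracting: the third-party API client."""

    def fetch(self, resource_id: str) -> dict: ...


def handle(client: ApiClient, resource_id: str) -> str:
    """Call the API, parse the response, return the value for the next step."""
    response = client.fetch(resource_id)
    return response["name"].strip().lower()


class FakeClient:
    """Test double; no network and no extra pass-through layers needed."""

    def fetch(self, resource_id: str) -> dict:
        return {"id": resource_id, "name": "  Widget "}
```

One interface at the boundary, one fake for tests, and `handle(FakeClient(), "42")` is testable without the other three layers.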

Bill: PrOgRaMmInG tO iNtErFaCe, NoT cOnCrEtE iMpLeMeNtAtIoN!!!

Me: You keep saying those words, I don't think they mean what you think they mean. But they certainly do not mean that every method argument must be an interface

Bill: BuT uNcLe BoB blah blah blah...

Me: *gives up all hope*

-

! exactly dev

I'd ditched Windows and spent a while exploring the Linux ecosystem for content creation. And I have to say, it was not a nice experience.

As much as I respect the Linux mantra of "free as in freedom" and "you need to roll up your sleeves and figure out stuff on your own", it just isn't good enough for non-dev work. Sorry guys, but I need software that gets out of my way and at least does what it's supposed to do. I can't stand a horrible UI or delays and random crashes, which is exactly what happens with most things under Linux.

To replace my Windows workflow I used the following:

1. Windows -> elementaryOS (because Debian/Ubuntu repositories seem to have the best software support, and elementaryOS is the least horrible looking thing that supports that) and then Arch, because, well, Arch.

2. Blender + Maya -> Blender + Maya on Linux.

3. Reaper + FL Studio -> Ardour + LMMS.

4. Photoshop -> GIMP + Krita + Inkscape.

5. ZBrush -> nothing :(

As you can see, my use cases are pretty much all over the spectrum.

Firstly, installing and configuring stuff. A pleasure on Windows, an absolute pain on Linux. Everything just worked on Windows, I had to wrestle with library versions and patches and unstable audio layers (Linux audio just sucks, except for JACK) on Linux.

Out of these, Blender and Maya were the best experience. But even then, both would suffer from random crashes that just didn't happen on Windows.

Ardour is actually really nice when it works. Its use of JACK for routing makes it really really flexible, but it just isn't stable enough to depend on. LMMS is utter crap. I'm sorry, but I just hate the UI. Can't stand it.

GIMP, Krita, and Inkscape can't beat Photoshop, even when you consider them together. Adobe software workflow is just so much better and more intuitive.

Blender 3D sculpting is not bad, but it's nowhere as good as ZBrush.

Also, if you're a C++ dev like me, nothing beats Visual Studio 2017. Nothing. That IDE just blows everything else out of the water. Even VSCode. And it's not slow at all, it handled a fairly large project (PBRTv3) just fine on my Windows development VM. Yes, a VM.

So...I ditched Linux and went back to Windows, but I keep Linux as a VM for when I actually want to mess with Blender or Ardour. Or some dev stuff which Windows sucks at (which is becoming less frequent because of WSL).

Out of all the above, the only one I'd consider ready for production use would be Blender. Developers of open source software, please learn from Blender. Kickass UI and user friendly operation is extremely important, you can't make a random window with GTK buttons and text boxes and arcane config files and expect people to use it for serious work.

Also, Windows beats Linux hands down as an everyday OS. It's always been rock solid, if you take care of it properly (and that goes for any OS). Updates hardly take any time because I run it on a SSD. As for all the advertising and marketing bullshit, you can block a large amount of stuff. And for what can't be blocked, well, I just have to live with it, because the alternative is compromising on my creative output, which is too much for me.

I still run Linux on my server, though. And on my embedded devices (Pi, BeagleBone, etc.). It absolutely rocks there.

I realize that Linux software is not going to improve unless we do something about it, so I'll be contributing fixes and code (the joys of being a C++ dev, yay). Still, I feel that the platform and software as a whole is just not mature enough.

-

The Cloud Of Bullshit

Every day I wake, and I think of my one true mission in life. To mock and ridicule paint huffing idiots. Something recently that drew my ire, like the hemorrhoids on my ass is this idea of 'the cloud', THE CLOUD and the buzzword lingo-bingo bullshit that providers use to hype and sell it.

For example, airtable is an amazing service. I love that I can insert just about anything into a row, create any of my own row datatypes, that it's flexible as all hell.

I love it.

And I hate that I'm essentially locked in to the cloud.

I fucking hate how if my internet goes down (thanks you pie eating inbred dipshits at comcast) I have no access.

If the company is bought, they'll shut down like all the rest, to be "relaunched at a later time" (or never).

I hate that if the company doesn't make enough money, or its investors change their mind, woopsie, service is shut down.

I hate that the cloud is synonymous with massive data leaks and IOT-levels of stupidity in security practices.

Every time someone says "but its in the cloud! Isn't it amazing!"

I always think 1. YEAH IF IM AN INVESTOR I GET TO MILK LOW BROW FINGER PAINTING FUCKWITS EVERY MONTH like Adobe sucking the blood from infants who are still in college.

2. Why? So I can get locked into their platform, have them segment off previously free features (fucking youtube and the 'subscribe so you can continue playing audio with your screen off' bullshit), and then have fees increase month over month?

3. Why, so every four years during the presidential selection, if I piss off some fuckstick braindead lemming literally sucking his girlfriends BFs cock, they can potentially shut me out from my own data completely?

The Cloud is built on shit-colored hype sold to knob gobbling idiots, controlling idiots, profiting at the expense of idiots, and later fucking them for buyout payola. The Cloud is a Cloud of Bullshit shat out by huckster messiahs straight into the lapping mouths of fanatics worshiping slavishly like toilet drinking scum at the porcelain altar of a neon god, invisible, untouchable, and like a spigot, easily shut off without anyone noticing. And when it happens, I'll be there, shouting "WHERE IS YOUR CLOUD NOW?"

Native any day. 100% native or I don't fucking want it

None of this node.js-gone-native bullshit either with notetaking apps taking up hundreds of megabytes of ram, where everything is bootstrap or react, in a browser, in a window container, because people are so fucking incompetent we have to hold their hand WHILE they give themselves a reach around.

Native or nothing.

For my favorite notetaking app, I use Microsoft OneNote. "OH god, a heathen, quick, stick his body up on a stake!"

But hear me out. I'll be the first one in a crowd to kick bill gates in the nuts (not because I particularly hate microsoft, just because I think hes kind of a cunt).

So when I say onenote is good, I really fucking mean it. Sure they did some cunty things like 'dumbed down' the interface, and cut out some options. But you know what they can't do?

Shut down the damn service (short of a system update completely removing the whole app, which, frankly, wouldn't surprise me).

It's so god damn good it waxed my balls, cured my cancer, fixed my relationship with my father, found my long lost brother, and replaced ALL my irl notebooks.

It's so good that if it was cocaine I'd be hospitalized for overusing it.

So god damn good it didn't just replace all my notebooks, it even replaced and sped up my mockup process three to five times. Want layers?

Built in. Just drag an image on to the notebook to import instantly.

Want to rearrange layers? Right click select "send forward/back/bring to front/send to back".

Everything snaps to grid by default and is easily resizeable.

I had all the elements for a UI sliced and diced. Wanted to try a bunch of layouts. Was gonna take me two damn days.

Did it in three hours with the notebook features of onenote.

After I started using onenote, me and my bodypillow finally conceived even.

Sweet marries mammaries I just fucking jizzed. Thank you onenote.

P.s. It really did speed up my UI design, allows annotated images, highlighted text. Shit, it can even do kanban.

And all I can think is "good job microsoft making an awesome product for free, being dumb as fuck for not charging for it, and then not marketing it at ALL."

It was sheer fucking luck that I discovered it while I was looking for vendor STD bloatware to blast off my new install.

OneNote: Worth a try even for the kick-gates-in-the-nuts fan club.

The cloud can suck my balls.

-

Full stack developer.

I know what it's supposed to mean, but I feel like it gives discredit to the devs who perfect their area (frontend, backend, db, infrastructure). It's, to me, like calling myself a chef because I can cook dinner..

The depth, analysis and customization of the domain to shape an api to a website is never appreciated. The finicky tweaks on the frontend to make those final touches. Then comes a brat who says they are full stack, and can do all those things. Bullshit. 99.9% of them have never done anything but move data through layers and present it.

Throw these wannabes an enterprise system with monoliths and microservices willy-nilly, orchestrate that shit with a vertical slice nginx ssi with disaster recovery, horizontal scaling, domain modeling, version management, a busy little bus and events flowing all decimal points of 2pi. Then, if you fully master everything going on there, I believe you are full stack.

Otherwise you just scraped the surface of what complexities software development is about. Everyone who can read a tutorial can scrape together an "in-out" website. But if your db is looking the same as your api, your highest complexity is the alignment of an infobox, I will laugh loud at your full stack.

And if you told me in an interview that you are full stack, you'd better have 10+ years experience and a good list of failed and successful projects before I'd let you stay the next two minutes..

-

Everyone and their dog is making a game, so why can't I?

1. open world (check)

2. taking inspiration from metro and fallout (check)

3. on a map roughly the size of the u.s. (check)

So I thought what I'd do is pretend to be one of those deaf mutes. While also pretending to be a programmer. Sometimes you make believe so hard that it comes true apparently.

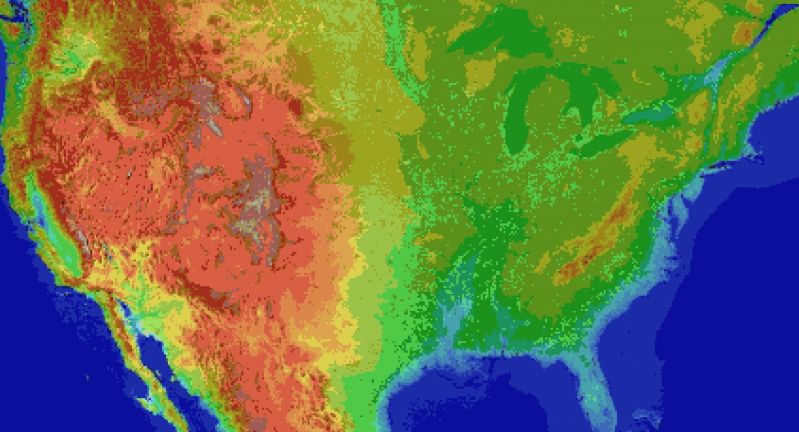

For the main map I thought I'd automate laying down the base map before hand-tweaking it. It's been a bit of a slog. Roughly 1 pixel per mile. (okay, 1973 by 1067). The u.s. is 3.1 million square miles, this would work out to 2.1 million square miles instead. Eh.

Wrote the script to filter out all the ocean pixels, based on the elevation map, and output the difference. Still had to edit around the shoreline but it sped things up a lot. Just attached the elevation map, because the actual one is an ugly cluster of death magenta to represent the ocean.
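
The actual script isn't posted, so here's only a rough sketch of what an elevation-based ocean filter could look like. It assumes both layers are already loaded as plain 2D lists, and that "ocean" just means "at or below some sea level value". The names `mask_ocean`, `OCEAN` and `sea_level` are made up for illustration.

```python
# Hypothetical placeholder colour for ocean pixels (the "death magenta").
OCEAN = (255, 0, 255)


def mask_ocean(elevation, base_map, sea_level=0):
    """Return a copy of base_map with every pixel at or below sea_level painted OCEAN.

    elevation: 2D list of numbers.
    base_map:  2D list of (r, g, b) tuples with the same shape as elevation.
    """
    if len(elevation) != len(base_map):
        raise ValueError("layers must have the same height")
    out = []
    for elev_row, colour_row in zip(elevation, base_map):
        if len(elev_row) != len(colour_row):
            raise ValueError("layers must have the same width")
        # Keep land pixels untouched, replace everything else with the ocean colour.
        out.append([OCEAN if e <= sea_level else c
                    for e, c in zip(elev_row, colour_row)])
    return out
```

A plain threshold like this would also flag any basin that sits below sea level, which may be one reason phantom in-land "lakes" turn up in places like death valley.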

Consequence of filtering is, the shoreline is messy and not entirely representative of the u.s.

The preprocessing step also added a lot of in-land 'lakes' that don't exist in some areas, like death valley. Already expected that.

But the plus side is I now have map layers for both elevation and ecology biomes. Aligning them close enough so that the heightmap wasn't displaced, and didn't cut off the shoreline in the ecology layer (at export), was a royal pain, and was super finicky. But thankfully that's done.

Next step is to go through the ecology map, copy each key color, and write down the biome id, courtesy of the 2017 ecoregions project.

From there, I write down the primary landscape features (water, plants, trees, terrain roughness, etc), anything easy to convey.

Main thing I'm interested in is tree types, because those, as tiles, convey a lot more information about the hex terrain than anything else.

Once the biomes are marked, and the tree types are written, the next step is to assign a tile to each tree type, and each density level of mountains (flat, hills, mountains, snowcapped peaks, etc).

The reference ids, colors, and numbers on the map will simplify the process.

After that, I'll write an exporter with python, and dump to csv or another format.
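
A minimal sketch of what that exporter could look like, using only the standard `csv` module. The colour key, biome ids and tile names in `BIOME_KEY` are invented placeholders. The real ones would come from the ecoregions data and whatever tile set is available.

```python
import csv
import io

# Hypothetical lookup: key colour -> (biome id, tile name).
BIOME_KEY = {
    (34, 139, 34): (1, "white_pine"),
    (210, 180, 140): (2, "desert_scrub"),
}


def export_tiles(ecology, out):
    """Write one CSV row per mapped pixel of the ecology layer.

    ecology: 2D list of (r, g, b) tuples.
    out:     any writable text file object.
    """
    writer = csv.writer(out)
    writer.writerow(["x", "y", "biome_id", "tile"])
    for y, row in enumerate(ecology):
        for x, colour in enumerate(row):
            if colour not in BIOME_KEY:
                continue  # ocean or not-yet-mapped colours are skipped
            biome_id, tile = BIOME_KEY[colour]
            writer.writerow([x, y, biome_id, tile])


# Usage: dump a tiny 2x1 map into an in-memory buffer.
buffer = io.StringIO()
export_tiles([[(34, 139, 34), (210, 180, 140)]], buffer)
```

Swapping `io.StringIO()` for `open("tiles.csv", "w", newline="")` would write the same rows to disk.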

Next steps are laying out the instances in the level editor, that'll act as the tiles in question.

There's a few naive approaches:

Spawn all the relevant instances at startup, and load the corresponding tiles.

Or setup chunks of instances, enough to cover the camera, and a buffer surrounding the camera. As the camera moves, reconfigure the instances to match the streamed in tile data.

Instances here make sense, because if there's any simulation going on (and I'd like there to be), they can detect in event code, when they are in the invisible buffer around the camera but not yet visible, and be activated by the camera, or deactivate themselves after leaving the camera and buffer's area.

The alternative is to let a global controller stream the data in, as a series of tile IDs, corresponding to the various tile sprites, and code global interaction like tile picking into a single event, which seems unwieldy and not at all manageable. I can see it turning into a giant switch case already.

So instances it is.

Actually, if I do 16^2 pixel chunks, it only works out to 124x68 chunks in all. A few thousand, mostly inactive chunks is pretty trivial, and simplifies spawning and serializing/deserializing.
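
To sanity check the chunk math and the camera-plus-buffer idea, here's a small sketch. It assumes the 1973 by 1067 map from earlier, square 16 pixel chunks, and a camera described as a pixel rectangle. `chunk_grid`, `active_chunks` and the `buffer` parameter are hypothetical names, not engine API.

```python
import math

MAP_W, MAP_H = 1973, 1067  # map size in pixels, from the post
CHUNK = 16                 # chunk edge length in pixels


def chunk_grid(width=MAP_W, height=MAP_H, chunk=CHUNK):
    """Number of chunk columns and rows needed to cover the whole map."""
    return math.ceil(width / chunk), math.ceil(height / chunk)


def active_chunks(cam_x, cam_y, cam_w, cam_h, buffer=1,
                  width=MAP_W, height=MAP_H, chunk=CHUNK):
    """Chunk coordinates covering the camera rectangle plus `buffer` chunks per side."""
    cols, rows = chunk_grid(width, height, chunk)
    # Clamp the buffered range so it never leaves the map.
    x0 = max(cam_x // chunk - buffer, 0)
    y0 = max(cam_y // chunk - buffer, 0)
    x1 = min((cam_x + cam_w - 1) // chunk + buffer, cols - 1)
    y1 = min((cam_y + cam_h - 1) // chunk + buffer, rows - 1)
    return {(cx, cy) for cx in range(x0, x1 + 1) for cy in range(y0, y1 + 1)}


# When the camera moves, only the difference needs work.
before = active_chunks(32, 32, 16, 16)
after = active_chunks(48, 32, 16, 16)
to_activate = after - before
to_deactivate = before - after
```

With ceiling division the grid comes out at 124 by 67 for these exact dimensions, a hair under the estimate above, so still only a few thousand chunks. The set difference is the part that matters: each camera move touches one strip of chunks instead of the whole map.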

All of this doesn't account for

* putting lakes back in that aren't present

* lots of islands and parts of shores that would typically have bays and parts that jut out, need reworked.

* great lakes need refinement and corrections

* elevation key map too blocky. Need a higher resolution one while reducing color count

This can be solved by introducing some noise into the elevations, varying say, within one standard deviation.

* mountains will still require refinement to individual state geography. That's for later on

* shoreline is too smooth, and needs to be less straight-line and less blocky. less corners.

* rivers need added, not just large ones but smaller ones too

* available tree assets need to be matched, as best and fully as possible, to types of trees represented in biome data, so that even if I don't have an exact match, I can still place *something* that's native or looks close enough to what you would expect in a given biome.

Ponderosa pines vs white pines for example.
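
For the blocky elevation item in the list above, the "noise within one standard deviation" idea could be sketched like this. It's an assumption-heavy version: the deviation is taken over the whole map, and the noise is uniform. `jitter_elevation` and `seed` are made-up names.

```python
import random
import statistics


def jitter_elevation(elevation, seed=0):
    """Return a copy of the elevation grid with each cell nudged by at most one
    (population) standard deviation of all elevation values."""
    rng = random.Random(seed)  # seeded so the same map comes out every run
    flat = [e for row in elevation for e in row]
    sigma = statistics.pstdev(flat)
    return [[e + rng.uniform(-sigma, sigma) for e in row] for row in elevation]
```

A map-wide deviation is probably too coarse for a continent-sized heightmap, since the spread between coast and mountain peaks is huge. Computing the deviation per chunk or per elevation band would be the obvious next tweak.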

This also doesn't account for 1. major and minor roads, 2. artificial and natural attractions, 3. other major features people in any given state are familiar with. 4. named places, 5. infrastructure, 6. cities and buildings and towns.

Also I'm pretty sure I cut off part of florida.

Woops, sorry everglades.

Guess I'll just make it a death-zone from nuclear fallout.

Take that gators!

-

web technologies rot your brain into a festering deadly biohazard mush. web technologies are the worst thing that ever happened to this world. fucking festering web shitosystem fuck this disgusting stupid fragile opaque bloated universe-sized chunk of retarded pukeshit.

I JUST WANT TO MAKE FUCKING GAMES, NOT HAVE MY BRAIN AND SOUL CONSTANTLY ROTTED BY THIS FUCKIN MONUMENT TO UTTER RETARDED LOBOTOMIZED HUMAN INCOMPETENCE FUCK YOU ALL FUCK ALL THIS SHIT FUCKFUCKFUCKFUCK DISGUSTING FUCKIN MINDRAPE PEDOPHILIACS SHOULD STOP FUCKING "INVENTING" SHITPOOLS.

WHEN

THE

FUCK

WILL

SOMEONE

COMPETENT

BE

THE

INVENTOR

OF

SOME

PIECE

OF

IT.

whoever were the rapists who "invented" php, js, html, css, SQL, and all the bullshit about how it's supposed to be configured and communicate with each other should have died of starvation in a fuckin ditch while being raped by squirrels... before they managed to "invent" any of that disgusting shit.

fuck you with your fuckin linux bullshit philosophy which keeps rotting all your brains thinking that this is fine and it can be fixed just by piling more and more layers of fucking shit on top of all this shit.

FUCK.

YOU.

ALL.

-

The one thing I've learned most about developers is that developers like their lasagna more than their spaghetti.

-

Is it just me, or has being a developer become a complete nightmare?

I mean, I never expected when I got into it to have a simple life in the first place, it's a fucking problem-solving job.

But heck, I've been in the field for more than 12 years and something has definitely changed for the worse. Believe me, I am just seeking a general consensus, not approval or anything, but it doesn't feel anything like 10 years ago.

I have worked with .NET mostly, in all its flavors from aspx and wpf up to today's .NET 10 and C# 13, and in the meanwhile it happened that I needed to do tech assistance, code in esoteric shit, use arduinos and raspberries, use perl, java, turn into full stack with databases, devops and shit.

Each year it's worse, the word "developer" gets more and more blurred into meaning "the one who must know everything".

I'm asked to know docker, kubernetes, kafka, CI/CD and devops shit, web dev, to get certifications, to learn how AI works to the level of learning math again to do matrix interpolations, to get into data science, python, numpy, pandas, pytorch and shit, to know every OS, to know about networking because APIs now have to use REST, a single verb for every action, because if routers and new communication protocols break you have to know and figure out why.

Not to mention that the marketing and sales guys shove every new technology up the big customers' ass to make our work look like bling-a-ling top notch 1% developer stuff that always uses the latest bleeding edge technology, and you're forced to learn new immature frameworks every 2 months or so (latest being various javascript/typescript diagramming libraries).

Every idiot feels entitled to puke out a new framework or supersets of existing languages. I lost count of how many supersets css has that I had to peek at and learn lol.

Every fucking simple piece of software I built from scratch and designed by myself, like web portals for big pharma, was much simpler than whatever the PMs assign me to now. And guess what: I published it and, of course, fixed some bugs, but most bugs were related to unstable customer datasets, and I never had bugs after the first few weeks, except once every few months and nothing serious.

The fucking things they let me do now are hypercomplicated and I spend days fixing other people's bugs and we get some hair pulling structural problems because they shove in all they can (mediator patterns are a must): kafka, docker, messagebus, whatever javascript clusterfuck they can, patchworks of html and css blurred out in layers of hierarchical scss or sass, slapped into angular (the most immature and crappy shit in js) that has all of its hidden ways to bury and hide DOM (::ng-deep, anyone? :host, anyone?).

And it's all like this. Wherever I put my hands, everyone wants to do his little Frankenstein experiment, cooking together a shit ton of different stuff and overcomplicated patterns in a cauldron.

it's a challenge at shooting flies with bazookas.

I'm really tired of technology at all, not only for my jobs. This fucking trend is a plague spread everywhere and now, since everyone has to deal with it, everything is unstable.

In my daily usage of a smartphone, apps crash a lot or have weird troubles and slowness; websites pretend to be full-blown apps with this shitty SPA trend and are filled with bugs and incompatibilities.

Basically every tech tool we use is 100% more prone to bugs than 10 years ago. I'm really thinking of finding a simple job like baker or shit and getting an old phone that can just call and send SMS.

I need to get out of tech for a few years to get back my sanity.

This is not a problem-solving job anymore.

10 years ago I needed to study too but once I got the tools in my hands the job was fun, you got a magic wrench and sky was the limit.

Now you got to fucking learn a ton of bullshit everyday and it's not like you see an end to it, everyday people push out new unstable and bugged shit waiting for devs to be guinea pigs for them. You gotta learn a ton of stuff of which 3/4 will be useless/obsolete/broken and considered inefficient the next month.

jeeeeeez

-

Chinese remainder theorem

So the idea is that a partial or zero knowledge proof is used for not just encryption but also for a sort of distributed ledger or proof-of-membership, in addition to being used to add new members where additional layers of distributive proofs are at it, so that rollbacks can be performed on a network to remove members or revoke content.

Data is NOT automatically distributed throughout a network, rather sharing is the equivalent of replicating and syncing data to your instance.

Therefore if you don't like something on a network or think it's a liability (hate speech for the left, violent content for the right for example), the degree to which it is not shared is the degree to which it is censored.

By automatically not showing images posted by people you're subscribed to or following, infiltrators or state level actors who post things like calls to terrorism or csam to open platforms in order to justify shutting down platforms they don't control, are cut off at the knees. There may also be a case for tools built on AI that automatically determine if something like a thumbnail should be censored or give the user an NSFW warning before clicking a link that may appear innocuous but is actually malicious.

Server nodes may be virtual in that they are merely a graph of people connected in a group by each person in the group having a piece of a shared key.

Because Chinese remainder theorem only requires a subset of all the info in the original key it also acts as a voting mechanism to decide whether a piece of content is allowed to be synced to an entire group or remain permanently.
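
The "subset of the key is enough" property can be made concrete with a threshold scheme built on the Chinese remainder theorem. The sketch below uses Mignotte's scheme, which is a swapped-in textbook construction and not necessarily what the design above has in mind. The moduli, the threshold of 3 out of 5, and the function names are all illustrative assumptions. It is not a zero knowledge proof, and individual shares do leak some information about the secret.

```python
from itertools import combinations
from math import prod

# Hypothetical parameters: 5 members, any 3 of them can rebuild the key.
MODULI = [11, 13, 17, 19, 23]  # pairwise coprime, one per member
THRESHOLD = 3


def make_shares(secret, moduli=MODULI, k=THRESHOLD):
    """Split secret into one (modulus, remainder) share per member."""
    ms = sorted(moduli)
    low = prod(ms[len(ms) - (k - 1):])  # product of the k-1 largest moduli
    high = prod(ms[:k])                 # product of the k smallest moduli
    if not low < secret < high:
        raise ValueError(f"secret must lie strictly between {low} and {high}")
    return [(m, secret % m) for m in moduli]


def combine(shares):
    """Chinese remainder theorem: the unique x below the product of the moduli
    that matches every (modulus, remainder) pair."""
    total = prod(m for m, _ in shares)
    x = 0
    for m, r in shares:
        partial = total // m
        x += r * partial * pow(partial, -1, m)  # modular inverse, Python 3.8+
    return x % total


shares = make_shares(1000)
# Any 3 members "voting" together recover the key; any 2 do not.
assert all(combine(list(c)) == 1000 for c in combinations(shares, 3))
assert all(combine(list(c)) != 1000 for c in combinations(shares, 2))
```

Read as a vote, each member who contributes a share is a yes, and the content key only becomes recoverable once the yes count reaches the threshold.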

Data that hasn't been verified yet may go into a cache for a given cluster of users who are mutually subscribed or following in a small world graph, but at the same time it doesn't get shared out of that subgraph and may expire if enough users don't hit a like button or a retain button or a share or "verify" button.

The algorithm here then is no algorithm at all but merely the natural association process between people and their likes and dislikes directly affecting the outcome of what they see via that process of association to begin with.

We can even go so far as to dog food content that's already been synced to a graph into evolutions of the existing key such that the retention of new generations of key, dependent on the previous key, also act as a store of the data that's been synced to the members of the node.

Therefore members that continually post content that doesn't get verified slowly fall out of the node such that eventually their content becomes merely temporary in the caches or index of the node members, driving index and node subgraph membership in an organic and natural process based purely on affiliation and identification.

Here I've sort of butchered the idea of the Chinese remainder theorem and shoehorned it into the idea of zero knowledge proofs but you can see where I'm going with this if you squint at the idea mentally and look at it at just the right angle.

The big idea was to remove the influence of centralized algorithms to begin with, and implement mechanisms such that third-party organizations that exist to discredit or shut down small platforms are hindered by the design of the platform itself.

I think if you look over the ideas here you'll see that's what the general design thrust achieves or could achieve if implemented into a platform.

The addition of indexes in a node or "server" or "room" (being a set of users mutually subscribed to a particular tag or topic or each other), where the index is an index of text, audio, videos and other media including user posts that are available on the given node, and the index being titled but blind links (no pictures/media, or media verified as safe through an automatic tool) would also be useful.

-

Horror story and rant time I guess...

I haven't seen the main developer of this MVC project that I've been working on but I can totally assure that his seniority isn't in frontend development 😠 and I doubt the backend too... Fucking DataTables converted to IDictionaries<string,object>

Guess who needs to build on top of the pile of shit!

Anyway, I wasn't really careful about what kind of template I was given to work on a new SPA page, so I'm doing the job given the time, but it's fucking gory:

- matryoshka style layers (n.3) without documentation

- partials everywhere

- too much inline styling

- too many <style> sections (n per layer or partial)

- too many <script> sections (n per layer or partial)

- poor CSS styling or no styling at all! (classes without any style nor js association)😠

He's just been lucky that the browser is capable of handling his shit

Now that at the end of this year I'll leave this project (solo fullstack) and need to provide documentation for the next poor souls, I was thinking to leave behind something on par with my skills and capabilities, but analysing the current mess ticks my brain in a bad way, fuck you Marco!

Fuck you

and your seniority

and the Italian way of perceiving seniority that gives you a higher living in the wrong side of the field 🤬🤬🤬

-

I fucking hate installing shit on Ubuntu via APT when it's not provided by Ubuntu itself. ONE HUNDRED PERCENT OF THE TIME this will create problems with outdated keys or whatever. Then, to solve the problems of software that was supposed to be transparent, I have to go learn about layers upon layers of its inner workings and waste my fucking time. I suppose this is the Linux experience in general. But I don't want to know about GPG whatever whatever because there's no need for me to learn it outside of solving this stupid-ass fucking problem. I don't want to learn that sources.list.d is a fucking directory. I never EVER want to touch any kind of keys or whatever shit, I just want to follow some instructions and fucking install software in a simple way. curl whatever | sh it is, I don't fucking care.

All I want is to develop software, not dive into problems with my operating system because it decided to shit the bed.

-

OK, I could maybe write a quick app in C++ and cross compile it so I can send it to my friends who use windows, what is wrong with you I am ashamed for us all.

But why do that? Let's just go the EXTREME route and do things in a very inappropriate way that is natively """portable""" so we can say that (((It Just Works™))).

So if you haven't guessed already, it's 100% js rawdogging and I'm doing the graphics in SVGOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHHH NOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOO uoykuf OoOOOOOOOOOOOOOOOOOOOOOOoOOOOOOOOOOOOOOOOOOOOO it's not so bad but here's things I've learned:

If you're using inkscape to convert your lazy 8x8 pixels per frame spritesheet.png into an svg file, and don't know how to use inkscape, you have to stack each frame on top of one another. Yes.

Erase the layers, erase everything that isn't the paths you want. Also erase invisible paths generated by the pixelart mode of the trace bitmap thingy, sometimes these ghosts exist for mysterious reasons.

Then, neatly stack everything into one square big enough to hold all the frames, select all the frames, resize to selection. OK, now double check that the names of your layers weren't changed to generic path94958509 out of the fucking blue AGAIN, all good.

Also double check that inkscape hasn't changed the name and extension of your output file AGAIN then make sure inkscape hasn't changed the dimensions of your export AGAIN and then AGAIN and AGAIN...

OK, so you've exported your svg, now we start doing even more stupid and questionable things. We go into the file and delete the header, especially the comment at the top that clearly states this file was made with inkscape, because my experience was so DELIGHTFUL that I very much require some abstract form of petty vengeance. Also a cigarette.

Hold on. Patiently erase useless tags such as defs and g and shit, all you want is the svg and paths. Then, painstakingly convert each <path id=$ .../> into <symbol id=$> <path .../>.
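
That hand conversion is scriptable. Below is a rough sketch in Python using the standard `xml.etree.ElementTree` module. It assumes a plain inkscape export where every frame is a single `<path>` with an `id`, and it throws away `defs`, `g` and every namespaced editor attribute along the way. `paths_to_symbols` is a made-up helper name.

```python
import xml.etree.ElementTree as ET

SVG_NS = "http://www.w3.org/2000/svg"
ET.register_namespace("", SVG_NS)  # serialise as <svg>, not <ns0:svg>


def _plain(attrib):
    """Drop namespaced attributes (inkscape:*, sodipodi:*), keep the rest."""
    return {k: v for k, v in attrib.items() if not k.startswith("{")}


def paths_to_symbols(svg_text):
    """Rebuild the svg so each <path id=X .../> becomes <symbol id=X><path .../></symbol>."""
    root = ET.fromstring(svg_text)
    out = ET.Element(root.tag, _plain(root.attrib))
    # iter() finds paths at any depth, so wrapper <g> elements simply vanish.
    for path in root.iter(f"{{{SVG_NS}}}path"):
        attrs = _plain(path.attrib)
        path_id = attrs.pop("id", None)  # the id moves up to the symbol
        symbol_attrs = {} if path_id is None else {"id": path_id}
        symbol = ET.SubElement(out, f"{{{SVG_NS}}}symbol", symbol_attrs)
        ET.SubElement(symbol, f"{{{SVG_NS}}}path", attrs)
    return ET.tostring(out, encoding="unicode")


# Usage on a tiny stand-in for an exported frame.
source = ('<svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 1 1">'
          '<g><path id="hp_0" d="M 0,0 H 1"/></g></svg>')
converted = paths_to_symbols(source)
```

The petty vengeance comes for free: comments are dropped by the parser, so the made-with-inkscape header never reaches the output.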

Why didn't I write a perl script for this part? Actually that's a good idea, goes on the todolist, I didn't write a todolist app though, because I have a textfile. I mean, just what kind of negative IQ troglodyte would do something like that? ;>;>;>;>;>;>;>

Anyway, now utilize your black-magic-infused devilspeak q$p e r l$ script to fasten together an entire webapp into a single html file, all done with duct tape and clown jizz of course, see previous rant for VERY technical details. Also I just time traveled and wrote the previous paragraph while writing this one everything is out of order oh noes.

No matter it works now me is happiee.

I got heart icon for health bar but no health bar implemented not a problem.

Uh also outlines. Here, let's keep it topical, this is rom.rol:

```rol

# vars:

$:%peso;>

let sprite,"$.elems.srpite";

$:/peso;>

# css:

$:%asis;>

path {

  stroke: $080808;

  stroke-width: 0.1;

  stroke-linejoin: round;

  paint-order: stroke;

}

$:/asis;>

# html:

$:%asis;>

<svg width="2.1166811mm" height="2.1166601mm" viewBox="0 0 2.1166811 2.1166601" xmlns="http://www.w3.org/2000/svg">

<symbol id="{$$.%sprite}_hp_0">

<path d="M 0.264594,0.26458 V 0.52916 H 1.1e-5 V 0.79375 1.05833 1.32291 H 0.264594 V 1.5875 H 0.529177 V 1.85208 H 0.793761 V 2.11666 H 1.058344 1.322927 V 1.85208 H 1.587511 V 1.5875 H 1.852094 V 1.32291 H 2.116677 V 1.05833 0.79375 0.52916 H 1.852094 V 0.26458 H 1.587511 1.322927 V 0.52916 H 1.058344 0.793761 V 0.26458 H 0.529177 Z"/>

</symbol>

<!--NOW DO THE OTHER NINE FRAMES-->

</svg>

$:/asis;>

```

so now I can say (in base.rol):

```rol

$:%peso;>

lib "[based]";

rol "rom.rol";

let hud,"$.elems.hdu";

$:/peso;>

$:%asis;>

<svg viewBox="0 0 23.283329 2.1166601" width="16%" height="16%" fill="#880808">

<use id="{$$.%hud}_hp" href="#{$rom.%sprite}_hp_0"/>

</svg>

<script>

document.getElementById("{$$.%hud}_hp").setAttribute('href',"#{$rom.%sprite}_hp_" + n);

</script>

$:/asis;>

```

Where `n` is just some frame counter this is duct tape now request animation frames REQUEST THEM YOU MUST.

Anyway this is immensely stupid but it made me giggle so I share.

AAA RPG with full svg graphics when?

-

Not a horror. I'm rewriting services.

It started as a help request. I was asked to help with completing a service dealing with push notifications which was a research prototype. It was suggested to keep core part of it, but it was so awful that I just removed all files and wrote the service from scratch.

The second service had been developed for more than a year by a junior and then by our manager who wanted to complete it as fast as possible, without taking care of code quality. Then I was asked to take over the project and after some time I agreed with one condition: I'll have 1 month for the takeover. But when I looked at the code, it became clear that it's much faster and better to rewrite everything except API and database than to take over existing code.

The third service dealing with file exchange was working, but the junior who wrote it advised to rewrite it because it was a very simple service. So, I initiated rewriting, designed a new API and reviewed the final result.

And now I'm dealing with the fourth one. It was developed in my team but not under control. Now, when I "inherited" this complicated project, I decided to rewrite it because it should be simple, but it isn't. It features reflection, layers inside layers, strange namespaces, strange solution structure. And that's after months of refactorings and improvements. So, wish me luck because I want to keep part of the infrastructure, but I don't know if it's possible.

-

Why do you lil' shits keep making LAYERS and LAYERS of unnecessary abstraction and then call it goddamn progress???

Dude what the fuck is this UEFI shit?!

Why the hell do I NEED to import a frigging library and read tons of boring and overly complicated documentation just so I can paint a pixel on the screen now uh??

Alright alright yeah so the BIOS is a little basic but daaaamit son if you want something a bit more complicated you make it yourself or install an OS that provides it! Like we've been doing it for years!!!

Dude, you don't get to know what a file system is until I tell you!

The PC be like:

"You wanna dereference the 0x0 pointer? There you go: it's 0xE9DF41, anything else?

You wanna write to the screen? Ok I have a perfectly convenient interrupt setup for that.

Wanna paint a pixel yellow? Ok, just call this other interrupt. Theere we go.

And it only took four bytes and a nanosecond to do it."

That shit works, and if you want something more complex, but not too much, that still runs efficiently, install DOS.

Don't mess around with the hardware pleeease.

We can still understand what's going on down there. Once UEFI steps in, it'll be like sealing a door forever. Long live BIOS damn it all!

-

OK. We've got this tiny little pet project of mine (work related)…

I rescued it from the git archive, simply put: someone hot glued an elasticsearch scroll + document processor (processing) together.

After a lot of refactoring, I had a simple, much improved (non-parallel) Akka Worker System without an Akka topology / hierarchy.

I left out the hierarchy at first, because I didn't know Akka at all.

I've worked with a lot of process workflows, and some systems that come very close to IPC, so I wasn't completely in the dark.

Topology requires knowledge / creation of a state machine / process workflow. And at that point of time I just had... Garbage. Partially working garbage.

I finished the rewrite into several actors yesterday... Compared to before, there are 8 actors vs 2... And roughly 20 more classes. Mostly since I rewrote the Receive Methods of Akka as Command DTOs... And a lot of functions needed to be separated into layers (which were nonexistent before)

Since that felt more natural than the previous chaos of passing strings or other primitive types around, or in the worst case just object....

(Yes: Previously an Actor was essentially a class with one or more functions "doEverything" and maybe a few additional functions which did everything - from Rest Client to Processing)).

Then I drew the actual state machine based on everything I've written in the last weeks and thought about how to create the actual topology and where / how parallelizing might make sense.

Innocent me stumbled in the Akka Docs on Akka Typed... (Didn't know it existed, since I'm very new to Java and Akka).

Hm, that sounds a lot like what I did. In a different way, yes. But not so different that it might be VERY hard to port to.... And I need to change (for implementation of hierarchy) a few classes....

[I should have known at this stage that my curiosity would get the best of me, but yeah. Curiosity killed the cat.]

Actually the documentation is not bad. It's just that upon reading the first more complex examples, my brain decided to go into panic state.

They've essentially combined all classes into one class in all source code examples [which makes more sense later], where it is fscking hard for a chaotic brain like mine to extract information....

https://doc.akka.io/docs/akka/...

The thing is: It's not hard to understand… actually very simple.

It was just my brain throwing a fuck you tantrum.

So I've opened more examples in other tabs and cross referenced what happened there and why...

Few frustrated hours later I got that part.... And the part why it's called Akka Typed. It was pretty simple....

Open the gates of hell, bloody satan that was too easy for fucks sake.

Nooooow.... I just need to port my stuff to Akka Typed.

Cause. Challenge accepted, bitch - eh brain. You throw tantrum, you work overtime. -.-

I just cannot decide whether to go FP or OOP.

Now... I'm curious whether FP is that hard... Hadn't dealt with it at large before.

Can someone please stop me... I'm far too curious again. -.- *cries*

-

Feature not a bug...

My work laptop has started rebooting almost every night.

It's not clear why, but I sort of think of it as a feature now.

I have an ultra-wide monitor, plus another wide next to that one, and a bunch of virtual desktops.

I often think "ok everything is where it is that's good" but in reality, with a bazillion things open across all the desktops and screens, when I come back the next day ... it's actually just a lot of mess / overhead to pick up where I was.

Sometimes I think we introduce a lot of complexity to solve a problem and ... actually it's just more complexity if you're not already 8 layers deep.