Comments

-

If possible, keep the storage code as separate as you can from the rest of the library. That way other people can use your storage module, or plug in their own, without having to change your library.

-
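To make the "keep storage separate" suggestion concrete, here is a minimal sketch of one way to do it. Everything named here (`PageStore`, `InMemoryStore`, `extract_text`, `scrape_and_store`) is hypothetical and not taken from the actual project; the in-memory store just stands in for an Elasticsearch-backed one with the same `save()` method.

```python
from typing import Dict, Protocol


class PageStore(Protocol):
    """Any object with a matching save() method can act as a storage backend."""

    def save(self, title: str, text: str) -> None: ...


class InMemoryStore:
    """Stand-in backend (assumption): a real one could write to Elasticsearch instead."""

    def __init__(self) -> None:
        self.pages: Dict[str, str] = {}

    def save(self, title: str, text: str) -> None:
        self.pages[title] = text


def extract_text(raw_page: str) -> str:
    """Placeholder for the real scraping/parsing step; it only collapses whitespace."""
    return " ".join(raw_page.split())


def scrape_and_store(title: str, raw_page: str, store: PageStore) -> str:
    """Parse a page, then hand the result to whichever store the caller supplies."""
    text = extract_text(raw_page)
    store.save(title, text)
    return text


store = InMemoryStore()
result = scrape_and_store("Python", "  Python  is\n a language ", store)
```

With this shape the scraper never imports a storage client itself, so the Elasticsearch module could ship as an optional extra and users could pass in their own store.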

A bit off-topic, but doesn't Wikipedia have an API, so you would not need to use a scraper?

-

@commanderkeen I am using the Wikipedia API for searching and fetching pages, but I rely on the scraper to extract and parse the data. This lets me extract only the relevant text and ignore the rest. It also lets me extract data from tables, image descriptions, and so on...

-

Polarina: You know that it is possible to download everything on Wikipedia, officially. There is no need for scraping.

https://en.wikipedia.org/wiki/... -

@StanTheMan @Polarina I understand why you would suggest this, though, since either way I am querying Wikipedia pages and storing them in Elasticsearch...

-

py2js: Why not just use the Wikipedia data dump? It's around 10 GB. Parse that directly; there is no need for a scraper, which will take forever.
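For what parsing a dump could look like, here is a rough sketch using the standard library's streaming XML parser, so the file is never loaded into memory in one piece. The tiny `SAMPLE` document is made up for illustration and only mimics the page/title/text nesting; a real dump is much larger and namespaced, which is why the tag names are compared with the namespace stripped. `local_name` and `iter_pages` are hypothetical helper names.

```python
import io
import xml.etree.ElementTree as ET


def local_name(tag: str) -> str:
    """Drop an XML namespace prefix, e.g. '{http://...}page' -> 'page'."""
    return tag.rsplit("}", 1)[-1]


def iter_pages(source):
    """Yield (title, text) pairs one page at a time from a file-like object."""
    for _event, elem in ET.iterparse(source, events=("end",)):
        if local_name(elem.tag) != "page":
            continue
        title, text = None, ""
        for child in elem.iter():
            name = local_name(child.tag)
            if name == "title":
                title = child.text
            elif name == "text":
                text = child.text or ""
        yield title, text
        elem.clear()  # release this page's children once it has been processed


# Made-up sample (assumption): simplified structure, no namespace, two pages.
SAMPLE = b"""<mediawiki>
  <page><title>Alpha</title><revision><text>First page.</text></revision></page>
  <page><title>Beta</title><revision><text>Second page.</text></revision></page>
</mediawiki>"""

pages = list(iter_pages(io.BytesIO(SAMPLE)))
```

For a real dump you would pass an open file (or a decompressing file object) instead of `io.BytesIO`, and feed each pair to your own text extraction and storage steps.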

Related Rants

No questions asked

No questions asked As a Python user and the fucking unicode mess, this is sooooo mean!

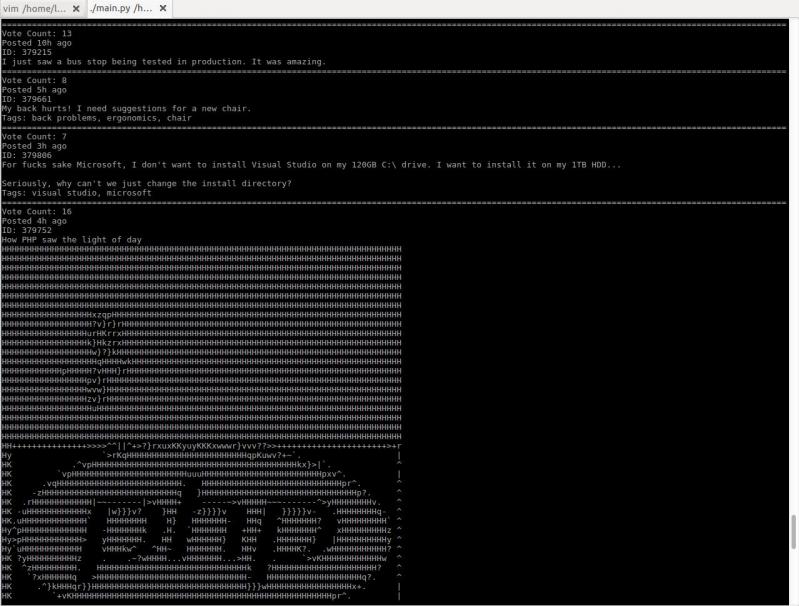

As a Python user and the fucking unicode mess, this is sooooo mean! I just started working on a little project to browse devrant from terminal. It converts images to ascii art!

I just started working on a little project to browse devrant from terminal. It converts images to ascii art!

Hi, I am using a Wikipedia scraper in one of my open source projects. The data extracted with it is then stored in Elasticsearch... Now I have decided to create a library out of it so that other people can use it too... My question is: should I also include the Elasticsearch storage module in the library, or just the scraper? Please let me know your thoughts.

rant

wikipedia

python

scraper