
Comments

-

dfox421348y @Condor yeah, I mean, like I said, it was definitely a bad practice. But I also think it’s a bad practice for test servers to include production data, since test servers tend to have more relaxed security protocols and mistakes can lead to real users getting sent emails, among other issues. Generally I think it’s good to have fake data that mimics prod usage, but either way you still sometimes run into issues with things like third-party integrations and having a proper test flow.

Something similar nearly happened to me last night (I had been working for hours and was very tired): I forgot that I had changed a line for testing, and I nearly went crazy trying to figure out why other code that relied on the changed line didn't work, even though it was absolutely correct.

-

totoxto2448y I was just starting out with Jest for testing and wanted to mock out a class that sent metrics. I did the mocking and added a console log to the method that sent the metrics, to make sure it didn't run. I don't know what happened; probably I rushed off to help with some other task or something. But after a heavy rebase some weeks later to get the tests ready to be merged, I fired up the app locally and sent a request to it to check that the response looked right and nothing crashed. Reading the output afterwards, I was greeted with a "Shit, this is called" console log. Good thing I noticed before it was merged.
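A log line inside the real method is easy to forget; an assertion in the test fails loudly instead and leaves no debug code behind. In Jest that check is `expect(mockFn).not.toHaveBeenCalled()`. The sketch below expresses the same idea with Python's standard `unittest.mock` (swapped in from Jest purely for illustration); `MetricsClient` and `handle_request` are hypothetical stand-ins, not code from the comment.

```python
from unittest import mock


class MetricsClient:
    """Hypothetical class that ships metrics to an external service."""

    def send(self, name: str, value: float) -> None:
        raise RuntimeError("real metrics call during a test!")


def handle_request(payload: dict, metrics: MetricsClient, dry_run: bool = True) -> dict:
    """Hypothetical handler: only reports metrics when dry_run is False."""
    if not dry_run:
        metrics.send("requests", 1)
    return {"ok": True, "echo": payload}


def test_handler_does_not_send_metrics() -> None:
    # create_autospec builds a mock whose methods match MetricsClient's signatures.
    metrics = mock.create_autospec(MetricsClient, instance=True)
    response = handle_request({"id": 7}, metrics)
    assert response == {"ok": True, "echo": {"id": 7}}
    # Fails the test (rather than printing to a console nobody reads) if send() ran.
    metrics.send.assert_not_called()


if __name__ == "__main__":
    test_handler_does_not_send_metrics()
    print("metrics were not sent")
```

Because the check lives in the test file, there is nothing to strip out of the production class before merging.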

-

donuts232408y Lol.... This was sort of what happened on my old team when everyone left and I took over the whole project. The first REST server deploy crashed the whole thing because apparently the devs never checked in their changes for many, many releases... They just built on their local machines and copied the builds to the server...

Eventually I matched up the logs to figure out the immediate issue and reverse-engineered all the diffs. That fixed the immediate release...

Then I learned about WinDbg and ILSpy and used them to decompile what was running on the previous PROD server.

Back then I was a junior/intermediate dev... Didn't get much thanks, though I feel I should've been immediately promoted to God...

donuts232408y @Condor we do it all the time because the IT teams are cost-constrained and the people deciding what's needed are the equivalent of tech monkeys that think like this

Related Rants

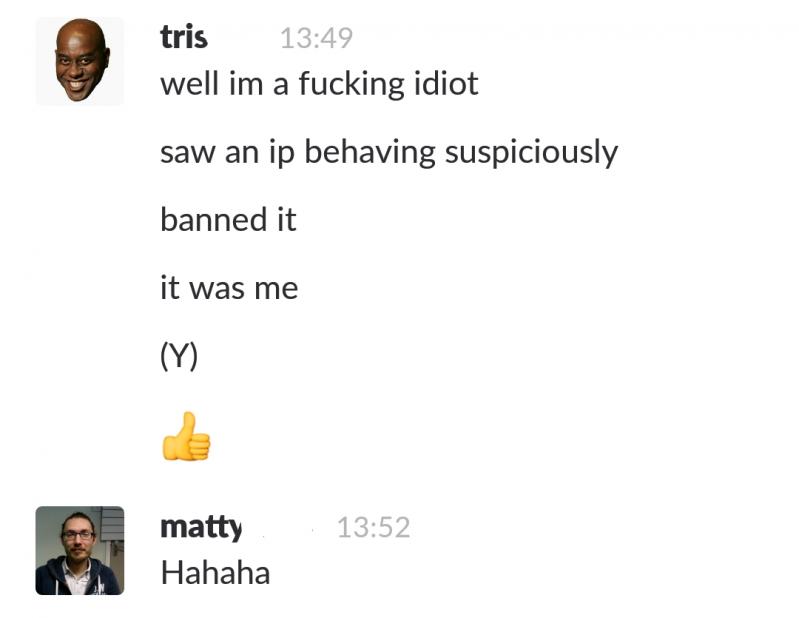

Just got this slack from our server admin.

When there are only 2 pages on Google you know you're in serious shit.

I worked with a good dev at one of my previous jobs, but one of his faults was that he was a bit scattered and would sometimes forget things.

The story goes that one day we had this massive bug in our web app, and a large portion of our dev team was trying to figure it out. We thought we had narrowed the issue down to a very specific part of the code, but something weird happened. No matter how often we looked at the piece of code where we all knew the problem had to be, no one could see anything wrong with it. And there wasn’t anything that came close to explaining the issue we were seeing in production.

We spent hours going through this. It was driving everyone crazy. All of a sudden, my co-worker (the one mentioned above) gasps, “Oh shit.” And we’re all like, what’s up? He proceeds to tell us that he thinks he might have been testing a line of code on one of our prod servers, left it in there by accident, and never committed it to the actual codebase. To explain: we had a great deploy process at this company, but every so often a dev would need to test something quickly on a prod machine, so we’d allow it as long as they removed the change quickly. It was meant only for a select few tasks that required a prod server, and only for a single-line test. Bad practice, but it was fine because everyone had been extremely careful with it.

Until this guy came along. After he said he thought he might have left a changed line in the code on a prod server, we had to manually go into 12 web servers and check. Eventually we found the one that had the change, and the issue finally made sense. We never thought for a second that the committed code in the git repo we were looking at would be inaccurate.

Needless to say, he was never allowed to touch code on a prod server ever again.
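The root problem here is deployed files silently drifting from what was committed. As a minimal sketch (not something the team in the story used), assuming you can read each server's deploy directory and have a clean checkout of the deployed commit, hashing both trees points straight at the odd server out instead of eyeballing all 12. The helper names `hash_tree` and `find_drift` are hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path


def hash_tree(root: Path) -> dict[str, str]:
    """Map each file under root (relative POSIX path) to its SHA-256 hex digest."""
    digests: dict[str, str] = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            rel = path.relative_to(root).as_posix()
            digests[rel] = hashlib.sha256(path.read_bytes()).hexdigest()
    return digests


def find_drift(expected: dict[str, str], deployed: dict[str, str]) -> dict[str, list[str]]:
    """Diff a manifest of the committed code against one taken on a server."""
    shared = expected.keys() & deployed.keys()
    return {
        "modified": sorted(p for p in shared if expected[p] != deployed[p]),
        "missing": sorted(expected.keys() - deployed.keys()),
        "extra": sorted(deployed.keys() - expected.keys()),
    }


if __name__ == "__main__":
    # Demo: two temporary directories stand in for a checkout and one web server.
    with tempfile.TemporaryDirectory() as repo, tempfile.TemporaryDirectory() as server:
        repo_dir, server_dir = Path(repo), Path(server)
        (repo_dir / "app.py").write_text("TIMEOUT = 30\n")
        (server_dir / "app.py").write_text("TIMEOUT = 1  # quick prod test\n")
        print(find_drift(hash_tree(repo_dir), hash_tree(server_dir)))
```

In practice you would build the expected manifest once and compare each server's manifest against it; how the server-side hashes are collected (SSH, an agent, a deploy hook) is left out, and runtime files such as logs or caches would need filtering so they don't all show up as "extra".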

rant

mindfuck

debugging

wk99

whoops