
Comments


vicary: People seem to define layers by intuition and experience; that sounds like a problem for machine learning itself.

https://machinelearningmastery.com/...

vicary: The pruning process also looks tedious and static; I want something that can incrementally train itself from experience.

https://stats.stackexchange.com/que...

EDIT: GitHub Copilot is already doing this; in fact, a wide range of products have been doing personalized training. But how does it work? Is it a smaller model sitting on top of a more general one? Or is it a copy of a template model that is then incrementally trained on personalized data?
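A minimal sketch of the second option (copy a template model, then keep training the copy on one user's data), assuming a toy linear model in place of a real network. `LinearModel`, `personalize`, and the synthetic user data are hypothetical; this is not how Copilot or any specific product is known to work. The template is deep-copied so each user gets an independent model, and training is plain online SGD, one example at a time, which is one way to "incrementally train itself from experience".

```python
import copy
import random


class LinearModel:
    """Toy stand-in for a pretrained "template" model: y = w . x + b."""

    def __init__(self, weights, bias=0.0):
        self.weights = list(weights)
        self.bias = bias

    def predict(self, x):
        return sum(w * xi for w, xi in zip(self.weights, x)) + self.bias

    def sgd_step(self, x, y, lr=0.05):
        """One online update on a single (x, y) example for loss 0.5 * (pred - y)**2."""
        error = self.predict(x) - y
        self.weights = [w - lr * error * xi for w, xi in zip(self.weights, x)]
        self.bias -= lr * error


def personalize(template, user_stream, lr=0.05):
    """Copy the shared template, then train only the copy, one example at a time."""
    model = copy.deepcopy(template)  # the shared template is never modified
    for x, y in user_stream:
        model.sgd_step(x, y, lr)
    return model


if __name__ == "__main__":
    rng = random.Random(0)
    template = LinearModel([1.0, 0.0])
    stream = []
    for _ in range(2000):
        x = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
        stream.append((x, x[0] + 2 * x[1] + 0.5))  # hypothetical user's data
    mine = personalize(template, stream)
    print(template.weights, mine.weights, mine.bias)
```

The first option (a small model on top of a frozen general one) would instead leave `template` untouched and learn only a correction applied to its output; that variant is not sketched here.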

vicary: Progress: a Deep Q-Learning and multi-armed bandit approach pretty much removes the need for 90% of the training data.
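The comment does not show its setup, so for reference here is only a generic epsilon-greedy multi-armed bandit; it is not Deep Q-Learning and not the author's code. `EpsilonGreedyBandit`, `run`, and the Bernoulli reward probabilities are hypothetical. What it illustrates is that a bandit learns online from the rewards it collects, keeping only a running mean per arm rather than a stored training set.

```python
import random


class EpsilonGreedyBandit:
    """Epsilon-greedy multi-armed bandit with incremental mean estimates."""

    def __init__(self, n_arms, epsilon=0.1, rng=None):
        self.epsilon = epsilon
        self.counts = [0] * n_arms
        self.values = [0.0] * n_arms  # running mean reward per arm
        self.rng = rng or random.Random()

    def select_arm(self):
        # Explore with probability epsilon, otherwise exploit the best estimate.
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.values))
        return max(range(len(self.values)), key=lambda a: self.values[a])

    def update(self, arm, reward):
        # Incremental mean: Q <- Q + (r - Q) / n, so no reward history is kept.
        self.counts[arm] += 1
        self.values[arm] += (reward - self.values[arm]) / self.counts[arm]


def run(true_probs, steps=5000, epsilon=0.1, seed=0):
    """Simulate Bernoulli arms with hypothetical success probabilities."""
    rng = random.Random(seed)
    bandit = EpsilonGreedyBandit(len(true_probs), epsilon, rng)
    for _ in range(steps):
        arm = bandit.select_arm()
        reward = 1.0 if rng.random() < true_probs[arm] else 0.0
        bandit.update(arm, reward)
    return bandit


if __name__ == "__main__":
    b = run([0.2, 0.5, 0.8])
    print(b.counts, [round(v, 2) for v in b.values])
```

With `run([0.2, 0.5, 0.8])`, the third arm should end up with most of the pulls; `epsilon` trades exploration against exploitation.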

But a real geth still needs to imagine[1] whether a new theory[2] works before applying it to real-life decisions.

I am currently considering a more flexible approach in which generations are not strictly defined: surviving actors randomly breed new actors within the same episode whenever other actors have reached a terminal state before them.

Such training cannot easily be defined as N iterations/episodes; instead, it ends after a specified training time or once a target fitness score is reached.

---

[1] Sample training data

[2] Layer definitions
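One way to read the "no strict generations" idea is as a steady-state genetic algorithm: whenever an actor reaches a terminal state, two random survivors immediately breed its replacement, and the run stops on a target fitness or a wall-clock budget rather than after N episodes. This is a sketch under assumptions: `TARGET`, the health rule that decides when an actor terminates, `breed` (uniform crossover plus Gaussian mutation), and the `max_ticks` safety cap are all hypothetical choices that the comment does not specify.

```python
import random
import time

TARGET = [0.5, -1.0, 2.0]  # hypothetical optimum the actors should discover


def fitness(genome):
    """Higher is better; 0.0 is a perfect match with TARGET."""
    return -sum((g - t) ** 2 for g, t in zip(genome, TARGET))


class Actor:
    def __init__(self, genome, health=10.0):
        self.genome = genome
        self.health = health

    def step(self):
        """Advance one tick; return True once the actor hits a terminal state."""
        # Every actor loses at least 1 health per tick; poorly fit ones lose more.
        self.health -= 1.0 - fitness(self.genome)
        return self.health <= 0


def breed(a, b, rng, sigma=0.1):
    """Uniform crossover of two parents plus Gaussian mutation."""
    return [rng.choice(pair) + rng.gauss(0, sigma) for pair in zip(a.genome, b.genome)]


def evolve(pop_size=20, target_fitness=-0.01, time_budget_s=2.0,
           max_ticks=100_000, seed=0):
    rng = random.Random(seed)
    actors = [Actor([rng.uniform(-3, 3) for _ in TARGET]) for _ in range(pop_size)]
    deadline = time.monotonic() + time_budget_s
    ticks = 0
    while True:
        best = max(actors, key=lambda a: fitness(a.genome))
        # No fixed generation count: stop on fitness, wall-clock time, or a tick cap.
        if (fitness(best.genome) >= target_fitness
                or time.monotonic() >= deadline or ticks >= max_ticks):
            return best, ticks
        ticks += 1
        survivors, terminated = [], 0
        for actor in actors:
            if actor.step():
                terminated += 1
            else:
                survivors.append(actor)
        # Fallback if fewer than two actors survived this tick: use the two fittest.
        parents = survivors if len(survivors) >= 2 else sorted(
            actors, key=lambda a: fitness(a.genome))[-2:]
        # Replacements are bred immediately, within the same episode.
        children = [Actor(breed(*rng.sample(parents, 2), rng)) for _ in range(terminated)]
        actors = survivors + children


if __name__ == "__main__":
    best, ticks = evolve()
    print(ticks, [round(g, 2) for g in best.genome], round(fitness(best.genome), 4))
```

`evolve` returns the best living actor and the number of ticks it ran. Because fitness never exceeds 0 here, passing a positive `target_fitness` makes the time budget the only stopping rule.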

Related Rants

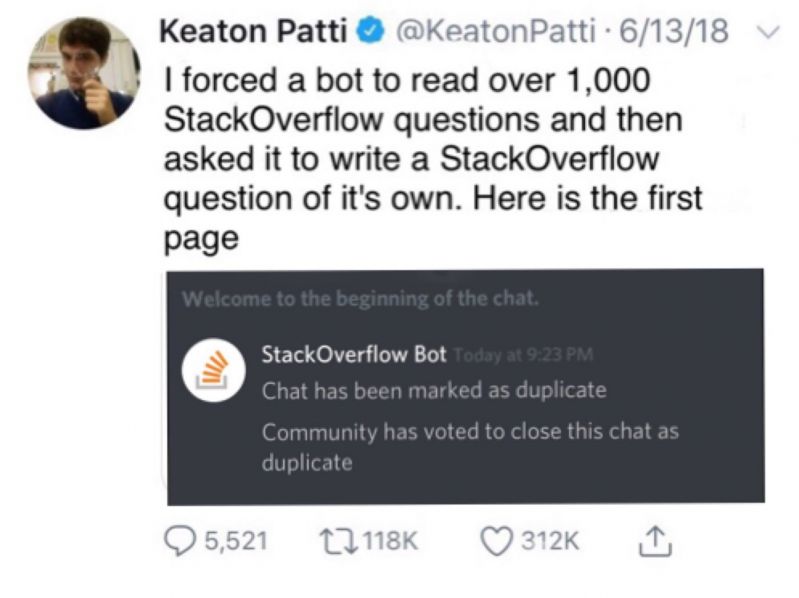

Machine Learning messed up!

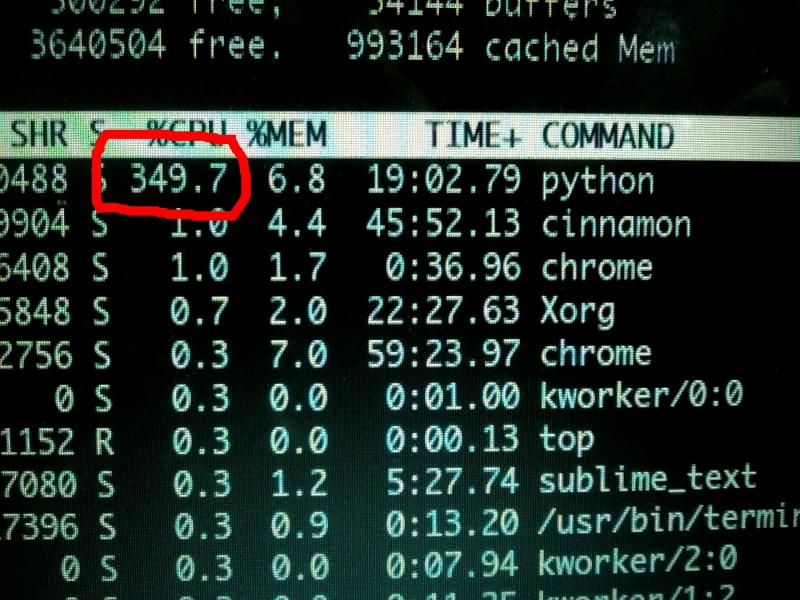

Machine Learning messed up! When your CPU is motivated and gives more than his 100%

When your CPU is motivated and gives more than his 100% What is machine learning?

What is machine learning?

Before I dip my toes into machine learning, let me leave some silly comments so I can laugh at myself in the future.

Let's make geth.

1. The model will spit out layer definitions and the size of the training sample; child models are then trained with limited computational resources.

2. Child models are voters that respond only with yes/no. A simple majority wins, and then the action is taken.

3. The only goal of the master models is to survive, i.e., to prevent me from killing them.
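Steps 1 and 2 can be sketched with stand-ins: each child is a one-parameter threshold rule trained on a random subsample whose size plays the role of the master model's "size of sample data", and the children vote yes/no with a strict simple majority. `train_child`, `majority_vote`, and the 1-D toy task are hypothetical; the layer definitions from step 1 and the survival goal from step 3 are not modeled.

```python
import random
from typing import List, Sequence, Tuple

Example = Tuple[float, bool]  # (observation, should_act)


def train_child(data: Sequence[Example], sample_size: int, rng: random.Random) -> float:
    """Stand-in for a small child model trained on limited data (step 1).

    The "model" is a single decision threshold placed midway between the largest
    negative and the smallest positive observation in a random subsample.
    """
    sample = rng.sample(list(data), min(sample_size, len(data)))
    negatives = [x for x, label in sample if not label]
    positives = [x for x, label in sample if label]
    if not negatives or not positives:
        return 0.5  # hypothetical default when the sample holds only one class
    return (max(negatives) + min(positives)) / 2


def majority_vote(thresholds: List[float], observation: float) -> bool:
    """Step 2: each child votes yes/no; act only on a strict simple majority."""
    yes_votes = sum(1 for t in thresholds if observation > t)
    return yes_votes * 2 > len(thresholds)


if __name__ == "__main__":
    rng = random.Random(42)
    # Hypothetical task: act whenever the observation exceeds 0.6.
    data = [(x, x > 0.6) for x in (rng.random() for _ in range(1000))]
    children = [train_child(data, sample_size=20, rng=rng) for _ in range(7)]
    print(majority_vote(children, 0.9), majority_vote(children, 0.1))
```

Using an odd number of voters avoids ties; with an even number, a 50/50 split counts as "no" here.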

Questions:

1. How do models respond to a random output size? (Study GPT-3; it should take weeks or months, but it's worth it.)

2. How do I define the actions that voters vote on? It sounds like the boundaries between actions should be blurry, and votes should be able to change from tick to tick (i.e., responding to something in a split second). Therefore

3. Why haven't I seen this yet? Is this design a stupidly complex way of achieving what a simple neural network already does?

I am full of curiosity and stupidity.

Tags: rant, dummy, neural networks, machine learning