
Search - "machine learning"

-

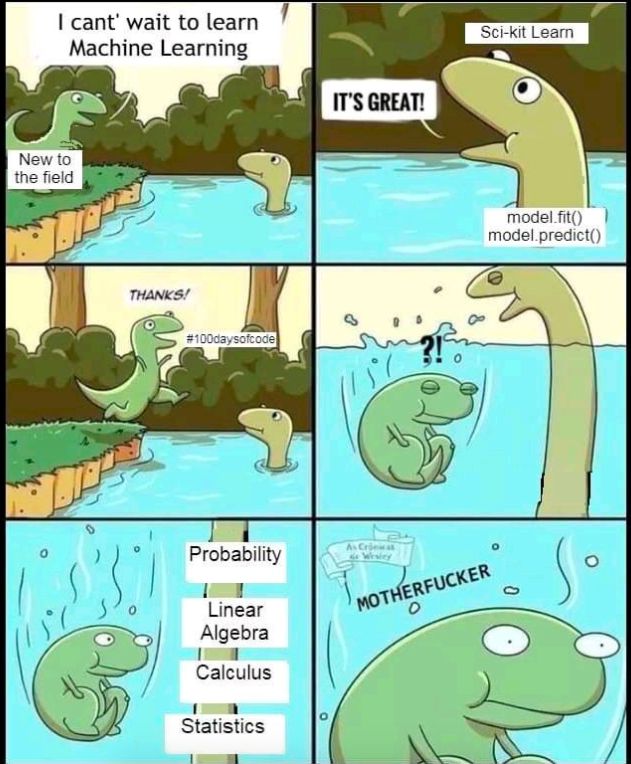

2010: PHP, CSS, Vanilla JS, and a LAMP Server.

Ah, the simple life.

2016: Node.js, React, Vue, Angular, AngularJS, Polymer, Sass, Less, Gulp, Bower, Grunt.

I can't handle this, I'm shifting domains to Machine Learning.

2017: Numpy, Scipy, TensorFlow, Theano, Keras, Torch, CNNs, RNNs, GANs and LOTS AND LOTS OF MATH!

Okay, okay. Calm down there fella.

JavaScript doesn't seem that complicated now, does it? 🙈14 -

Real conversation:

Coworker: I'm trying to classify data based on X

Me: Mhh. Seems like a hard task, we don't have data to figure out X

Coworker: I know! That's why I thought about using machine learning!

Me: (Oh, boy)

Coworker: I'm working on training this ML model that will be able to classify based on X

Me: and what are the inputs for your training?

Coworker: The data classified based on X

Me: And where did you get that from?

Coworker: I don't have it! That will be the output of my ML model!

Me: But you just said that was the input!

Coworker: Yes

Me: Don't you see a contradiction here?

Coworker: Yes, it's a pretty complicated problem, that's why I'm stuck. Can you help me with that?

Me: (Looking at my watch) Sorry I'm late for a meeting. Catch up later, bye!14 -

I just started playing around with machine learning in Python today. It's so fucking amazing, man!

All the concepts that come up when you search for tutorials on YouTube (you know, neural networks, SVM, linear/logistic regression and all that fun stuff) seem overwhelming at first. I must admit, it took me more than 5 hours just to get everything set up the way it should be, but the end result was so satisfying when it finally worked (after ~100 errors).

If any of you guys want to start, I suggest visiting these YouTube channels:

- https://youtube.com/channel/...

- http://youtube.com/playlist/...9 -

For fuck's sake, if you are teaching "Machine Learning For Developers", you don't have to waste a whole hour explaining what the fuck a variable is or what an if statement is. Developers know what that is... aaargh. Off to sleep.13

-

Find me a co-founder of a startup who while telling about his/her company doesn't say "Machine Learning" in his/her speech.

I dare you. I double dare you.4 -

I'm proud to announce a new project made by myself and @thejohnhoffer, that started on devrant.

We started collaborating after I made a rant about machine learning and, since then we've been working hard on a plain language blog that simplifies machine learning concepts.

We call it learn-blog.

Check it out at ironman5366.github.io/learn-blog15 -

That moment when a friend was telling you about an artificial intelligence he is building that is supposed to be a voice assistant and "even better" than Cortana. After a long time I asked him for the code, as if I wanted to check out the revolutionary machine learning techniques he was talking about. So here is a short part of the 600-line "voice logic".

I almost started crying 😂😂 15

15 -

After months of tedious research, I finally feel like I understand machine learning.

All of my programmer buddies are in envy, but I keep trying to explain that what I finally get is that it's not as hard as it's presented to be.

I feel like a lot of the terminology in machine learning is really pretentious and unnecessary, and just keeps new people from the field.

For example: I could say: "Yeah, I'm training a classification model with two input neurons, a hidden activation layer, and an output neuron", and you might think I was hot shit. But that just gets translated into "I'm putting in two inputs, sorting them, and outputting one thing".

I feel like if there was a plain language guide to machine learning, the field would be a lot more attractive to a lot more people. I know that's why it was hard for me to get in. Maybe I'll write one.28 -
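For what it's worth, the network described above ("two input neurons, a hidden activation layer, and an output neuron") really does fit in a few lines. This is only a sketch of the forward pass: the weights are hypothetical, hand-picked numbers, and the sigmoid activation and the two-unit hidden layer are assumptions, since the rant doesn't specify either.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(x1, x2, hidden_weights, hidden_biases, out_weights, out_bias):
    """Two inputs -> one hidden layer -> one output neuron."""
    hidden = [
        sigmoid(w1 * x1 + w2 * x2 + b)
        for (w1, w2), b in zip(hidden_weights, hidden_biases)
    ]
    z = sum(w * h for w, h in zip(out_weights, hidden)) + out_bias
    return sigmoid(z)


# Hypothetical, hand-picked weights; a real model would learn these from data.
HIDDEN_WEIGHTS = [(1.0, 1.0), (1.0, -1.0)]
HIDDEN_BIASES = [0.0, 0.5]
OUT_WEIGHTS = [2.0, -1.0]
OUT_BIAS = 0.0

score = forward(0.5, 0.25, HIDDEN_WEIGHTS, HIDDEN_BIASES, OUT_WEIGHTS, OUT_BIAS)
print(f"output neuron: {score:.3f}")
```

Training is "just" the part that nudges those weights until the output matches the labels.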

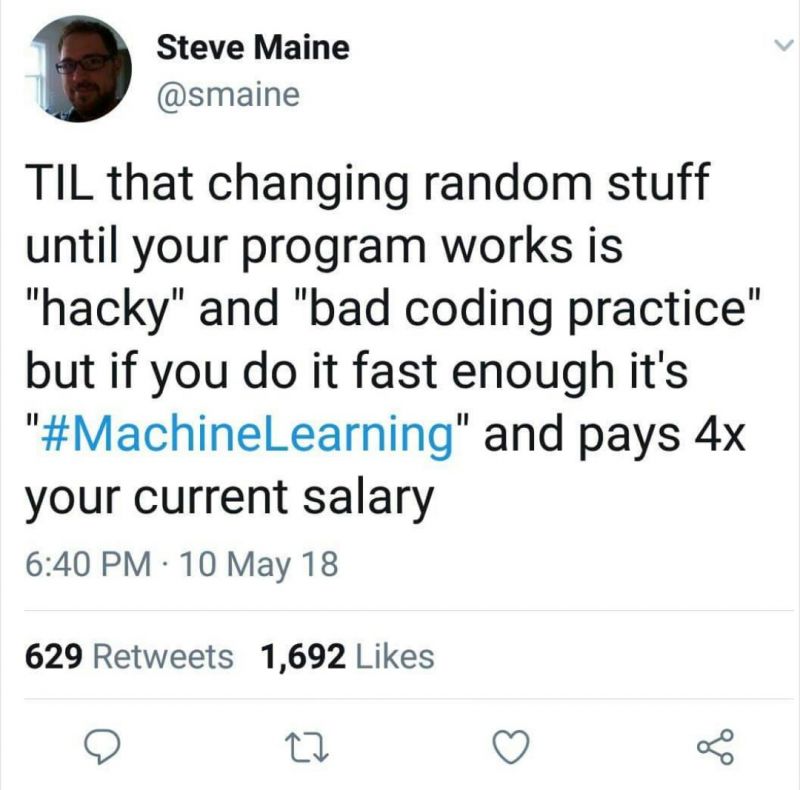

What I learned in the machine learning course so far, all the Buzzwords can be replaced by "statistical mathematics".5

-

Difference between machine learning and AI:

If it is written in Python, it's probably machine learning

If it is written in PowerPoint, it's probably AI2 -

*fortunes and tons of research spent on machine learning, signal processing and pattern recognition*

People: 5

5 -

Online ads....

I think the problem is that in the age of "AI" and "machine learning" etc etc - the reality is that targeted or personalised advertising is absolutely shite.

All I see when I browse around are ads for things that I bought. It's like - I FUCKING BOUGHT THIS WHY ARE YOU TRYING TO SELL IT TO ME??!

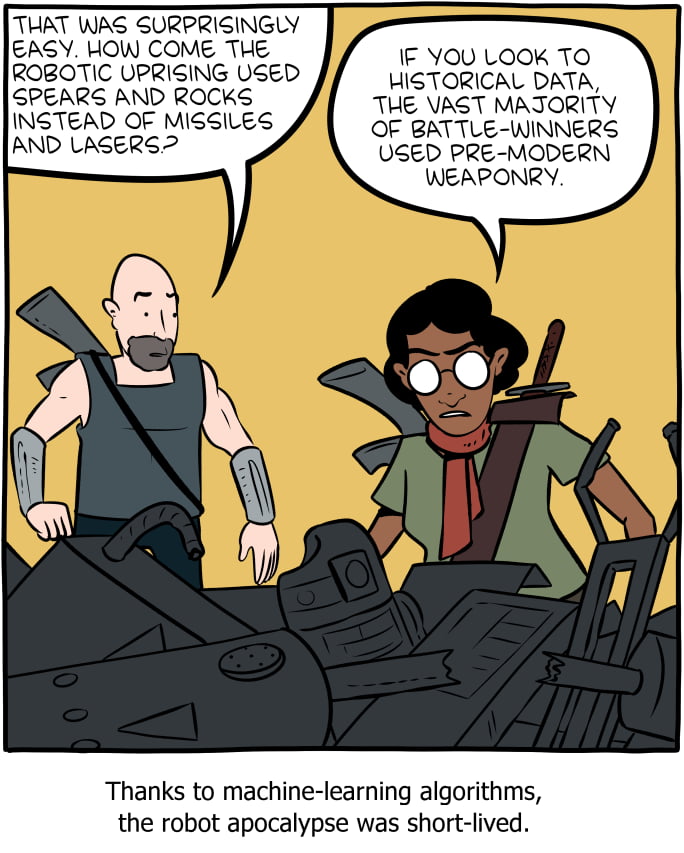

I think anyone worried about the machine uprising enslaving humanity can relax and not worry about it, at least until amazon can understand when it has sold you something or you just looked at something.6 -

Arrived today!

I'm using C++ for machine learning purposes using TensorFlow and OpenCV (rip Python, you are too slow 😭)

What are you using C++ for? 😁 18

18 -

Today I wrote a neural network in Python for the first time that could distinguish between strings, numbers and dates. Although the feat may look small, it's a very proud moment for me.8

-

Today I'm trying to study how to encode data in idx-ubyte format for my machine learning project.

Professors I'm going to astonish you!

Good day and good coding to all of you! :) 6
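For anyone else poking at idx-ubyte: the layout used by the MNIST files is a 4-byte magic number (two zero bytes, a type code where 0x08 means unsigned byte, then the number of dimensions), followed by one big-endian 32-bit size per dimension, followed by the raw data. A rough sketch for the 2-D case, with hypothetical function names:

```python
import struct


def encode_idx_ubyte(rows):
    """Encode a 2-D list of ints in 0..255 as IDX bytes (unsigned-byte type)."""
    n_rows = len(rows)
    n_cols = len(rows[0]) if rows else 0
    if any(len(row) != n_cols for row in rows):
        raise ValueError("all rows must have the same length")
    # Magic number: 0x00, 0x00, type code 0x08 (unsigned byte), 2 dimensions.
    header = struct.pack(">BBBB", 0, 0, 0x08, 2)
    # Dimension sizes are 4-byte big-endian unsigned integers.
    dims = struct.pack(">II", n_rows, n_cols)
    # bytes() raises ValueError for any value outside 0..255.
    body = bytes(value for row in rows for value in row)
    return header + dims + body


def decode_idx_ubyte(blob):
    """Return (dimension sizes, raw data bytes) from an unsigned-byte IDX blob."""
    zero1, zero2, type_code, n_dims = struct.unpack(">BBBB", blob[:4])
    if (zero1, zero2, type_code) != (0, 0, 0x08):
        raise ValueError("not an unsigned-byte IDX blob")
    offset = 4 + 4 * n_dims
    dims = struct.unpack(">" + "I" * n_dims, blob[4:offset])
    return dims, blob[offset:]


blob = encode_idx_ubyte([[0, 1, 2], [253, 254, 255]])
print(blob.hex())
```

A stack of images would use 3 dimensions (count, rows, columns) instead of 2; the header logic is otherwise the same.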

6 -

!rant.

The 'Essence of linear algebra' series is an amazing YouTube playlist by 3Blue1Brown to watch if you want to start understanding the linear algebra concepts underlying Machine Learning!3 -

Everybody talking about Machine Learning like everybody talked about Cloud Computing and Big Data in 2013.4

-

The "explain x to an x-year-old boy/girl" questions are easy yet tricky.

Interviewer: Explain machine learning to an 8-year-old kid.

"Imagine if <insert anecdotal example here>"

Interviewer: The kid is asleep. Try harder.4 -

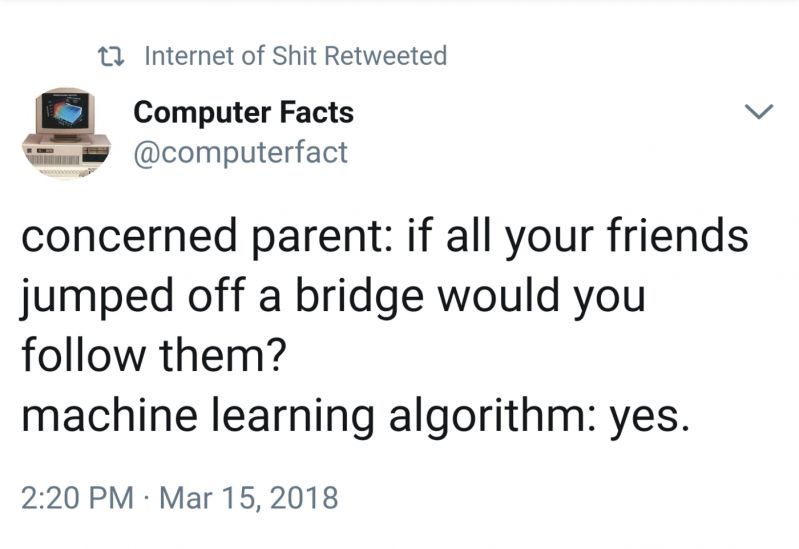

How machine learning works,

Interviewer: What's your biggest strength?

Developer: I am a quick learner

Interviewer: what is 1 + 1

Developer: 30.

Interviewer: Not even close, it's 2.

Developer: 2

10 -

Currently getting into Machine Learning and working on a joke-project to identify the main programming language of GitHub repositories based on commit messages. For half of the commits, the language is predicted correctly out of 53 possible languages. Which is not too bad given the fact that I have no clue what I'm doing...

9

9 -

"we use machine learning to ..."

Ffs you're interviewing a developer, not pitching to an investor. We know how much machine learning you use.7 -

> Open private browsing on Firefox on my Debian laptop

> Find ML Google course and decided to start learning in advance (AI and ML are topics for next semester)

**Phone notifications: YouTube suggests Machine Learning recipes #1 from Google**

> Not even logged in on laptop

> Not even chrome

> Not even history enabled

> Not fucking even windows

😒😒😒

The lack of privacy is fucking infuriating!

....

> Added video to Watch Later

I now hate myself for biting 22

22 -

Interviewer: what's your biggest strength?

Me: I'm a fast learner.

Interviewer: what's 11*11?

Me: 65.

Interviewer: Not even close. It's 121.

Me: It's 121

1 -

Let me repeat this loud and clear before another idiot starts pitching to me on building their "world class machine learning algorithm":

NO DATA, NO MACHINE LEARNING!2 -

*Goes for an interview*

Interviewer reads my resume and goes on to say : "You are the first person today, whose resume doesn't include 'machine learning' ".

Me : *Points towards Machine Learning written in my resume* Sir here it is.

We both have a good laugh about it.

That day i realised that EVERYONE is 'learning' machine learning. EVERYONE.4 -

This week I reached a major milestone in a Machine Learning/Music Analysis project that I've been working on for a long time!!

I'm really proud to launch 'The Harmonic Algorithm' as an open source project! It represents the evolution of something that's grown with me through two theses (initially in music analysis and later in creative computation) and has been a vessel for my passion in both Music and Computation/Machine Learning for a number of years.

For more info, detailed usage examples (with video clips) and installation instructions for anyone inclined to try it out, have a look at the GitHub repo for the project:

https://github.com/OscarSouth/...

"The Harmonic Algorithm, written in Haskell and R, generates musical domain specific data inside user defined constraints then filters it down and deterministically ranks it using a tailored Markov Chain model trained on ingested musical data. This presents a unique tool in the hands of the composer or performer which can be used as a writing aid, analysis device, for instrumental study or even in live performance." 1

1 -

Hey! How do I do machine learning?

Well first you start off with a metric shit ton of data.

And then you .fit() your data

from there you can .predict() your data

Trust me, the algorithms are already there. All you need to do is get the data.7 -
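To be fair, the .fit()/.predict() routine really is that short. Here is a dependency-free sketch that copies the scikit-learn-style calling convention; the nearest-centroid algorithm, the class name and the toy data are all assumptions picked for illustration, not anything from the rant.

```python
class NearestCentroid:
    """Toy classifier following the fit/predict convention."""

    def fit(self, X, y):
        sums, counts = {}, {}
        for features, label in zip(X, y):
            acc = sums.setdefault(label, [0.0] * len(features))
            for i, value in enumerate(features):
                acc[i] += value
            counts[label] = counts.get(label, 0) + 1
        # One centroid (mean point) per class.
        self.centroids_ = {
            label: [s / counts[label] for s in acc] for label, acc in sums.items()
        }
        return self

    def predict(self, X):
        def sq_dist(a, b):
            return sum((p - q) ** 2 for p, q in zip(a, b))

        # Each sample gets the label of its closest centroid.
        return [
            min(self.centroids_, key=lambda lbl: sq_dist(features, self.centroids_[lbl]))
            for features in X
        ]


# Hypothetical labelled data: this is the part you actually have to go and get.
X_train = [[1.0, 1.0], [1.5, 2.0], [8.0, 8.0], [9.0, 9.5]]
y_train = ["small", "small", "large", "large"]

model = NearestCentroid().fit(X_train, y_train)
predictions = model.predict([[0.0, 0.5], [10.0, 10.0]])
print(predictions)
```

Everything interesting lives in `X_train` and `y_train`, which is the rant's point.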

Why do people jump from C to Python so quickly? And it's all about machine learning. A few days back my cousin asked me for books to learn Python.

Trust me, you have to learn C before Python. People struggle going from Python to C. But no, it's all ML and scripting.

And most importantly, software engineering, wtf?

Software engineering is about how to run projects, yet it is compulsory to learn Python and there is no mention of Git or any other VCS, wtf?

What the hell kind of college is that? Trust me, I am in no way saying Python is weak, but for learning purposes what matters is the depth of the language and concepts like pass by reference, memory leaks and pointers.

And learning algorithms and data structures is more important than machine learning. Trust me, if you cannot model the data or get proper training and testing data, you will get screwed-up outputs. And then again, everyone who hypes this kind of stuff also thinks that an ML model with 100% accuracy is better than one with 90%, so they overfit the data and test the model on the training data. And mostly what they will learn in college will be memorizing a few formulas, that's it.

Learn one language (the concepts in the language) properly, then you will find most languages are easy.

Cool CS programmers are born today😖 31

31 -

Mechanical Engineer friend took Machine Learning as an elective subject in college thinking that it had something to do with the Physical machines.

His reaction during the class was priceless.3 -

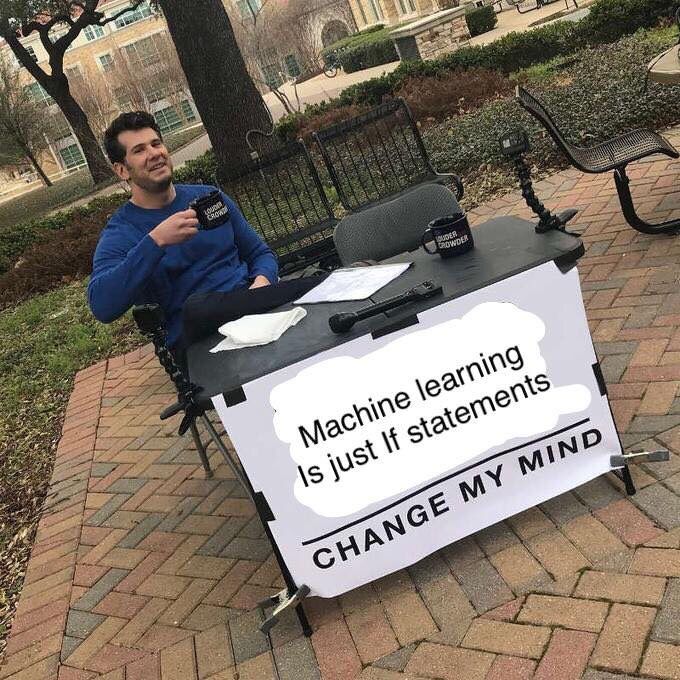

At work, an idiot who has never worked on machine learning before and understands nothing about it: "You know what, machine learning isn't actually hard. It is just basic statistics and then you download the model online and that's it! There's nothing else you are doing!"

STFU, you moron! Do you think just any model can work for your use case? Do you fucking think it is easy to come up with new architecture for a very specific use case and test it accurately? Do you think it's easy to effectively train a model and do hyper-parameter optimization?Do you fucking think it is easy to retrieve the right data for your use case? Do you think it's easy to keep up with research papers on arXiv being released daily? Do you think this is fucking javascript and there's a framework for everything? Stfu!

Honestly, i hate ignorant morons who generalize stuff they don't clearly understand.5 -

Today was the first time I was able to develop a full stack by my self!!!!

(I mean not by myself, StackOverflow was my Bible)

It's a small project with three modules , and while I've worked front end, back end and machine learning separately, I used to develop on a single component.

Today I built all the components on my own.

The hardest part was linking the nodejs file with the python script. Which seemed easy at first but then I needed to go through the documentation to understand the working behind the scenes.

Just looking how to deploy it now

This is a victory rant.

While it is not something big I feel so proud of myself 🥰1 -

Our boss demands us to implement machine learning to an obscure project.

So we use machine learning to find what to use machine learning for.5 -

Dank Learning, Generating Memes with Deep Learning !!

Now even machine can crack jokes better than Me 😣

https://web.stanford.edu/class/...

rant deeplearning artificial intelligence ai neural networks stanford machine learning learning devrant ml2 -

So... humans are now learning machine learning. Interesting. Maybe we should instead teach a machine to teach us machine learning so we humans can better learn from the machines!2

-

Updated goal in regards to my road to machine learning mastery.

Status: new found motivation

Motivational case: bring humanity closer to having 2B become a real thing.

Reason: big booty droids should be a right to humanity.

End of transmission.

On another note, the sony xperia compact is a real nice device, so is the note 8. I would prob go with the note for power but the size of the xperia is more convenient for my taste.1 -

Le college freshman nibbas: Don't know C, Java, C++, python or any other programming language but want to do AI and machine learning!

💀🤷5 -

Anyone know how to convince a client that machine learning will not be a magical solution to all their problems???

2019 is gonna be super interesting.4 -

Machine learning is overhyped. A fellow halfwitted well-wisher wants my colleague to use supervised classification for a freaking search and replace problem.2

-

My dev area of focus? Machine Learning! Because it's fascinating to teach a computer to learn from experience :)4

-

Training Google's "efficient" net b7 be like epoch 1: ETA 6 hours

I come back in the afternoon and see this: 2

2 -

https://arxiv.org/abs/2006.03511

New research paper about machine learning applied to translating code between programming languages.

Would you see a use case for this at your work? 25

25 -

As you can see from the screenshot, it's working.

The system is actually learning the associations between the digit sequence of semiprime hidden variables and known variables.

Training loss and value loss are super high at the moment and I'm using an absurdly small training set (10k sequence pairs). I'm running on the assumption that there is a very strong correlation between the structures (and that it isn't just all ephemeral).

This initial run is just to see if training a machine learning model is a viable approach.

Won't know for a while. Training loss could get very low (that's a good thing, indicating actual learning), only for it to spike later on, and if it does, I won't know if the sample size is too small, or if I need to do more training, or if the problem is actually intractable.

If or when that happens I'll experiment with different configurations like batch sizes, and more epochs, as well as upping the training set incrementally.

Either case, once the initial model is trained, I need to test it on samples never seen before (products I want to factor) and see if it generates some or all of the digits needed for rapid factorization.

Even partial digits would be a success here.

And I expect to create multiple training sets for each semiprime product and its unknown internal variables versus derivable known variables. The intersections of the sets, and what digits they have in common, might be the best shot available for factorizing very large numbers in this approach.

Regardless, once I see that the model works at the small scale, the next step will be to increase the scope of the training data, and begin building out the distributed training platform so I can cut down the training time on a larger model.

I also want to train on random products of very large primes, just for variety and see what happens with that. But everything appears to be working. Working way better than I expected.

The model is running and learning to factorize semiprimes from the set of identities I've been exploring for the last three fucking years.

Feels like things are paying off finally.

Will post updates specifically to this rant as they come. Probably once a day. 2

2 -

A teacher asked for my help in some machine learning project, I told her I don't have a background in ML.

She was working on an application that classified research papers according to the subject.

I said, seems like a basic NER project, but maybe I'm wrong I haven't worked on any ML projects before. But I do have experience with web, let me know if you need help in that.

She says, ML is also web, it's just like semantic web.12 -

Dear companies..

There is a fucking difference between:

-pattern recognition

-machine learning

And

- artificial INTELLIGENCE....

Learning from experience is NOT THE SAME as being able to make conclusions out of unknown conditions and figuring out new stuff without any input.8 -

Tired of hearing "our ML model has 51% accuracy! That's a big win!"

No, asshole, what you just built is a fucking random number generator, and a crappy one moreover.

You cannot do worse than 50%. If you had a binary classification model that was 10% accurate, that would be a win. You would just need to invert the output of the model, and you'd instantly get 90% accuracy.

50% accuracy is what you get by flipping coins. And you can achieve that with 1 line of code.5 -
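The inversion trick is easy to check, assuming a binary task with labels 0 and 1. The labels and predictions below are made-up toy values, not results from any real model.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def invert(y_pred):
    """Flip every binary prediction: 0 becomes 1 and 1 becomes 0."""
    return [1 - p for p in y_pred]


# Made-up labels and a "terrible" model that is right only 1 time in 10.
y_true = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0]
y_pred_bad = [0, 1, 0, 0, 1, 1, 0, 1, 0, 0]

acc_bad = accuracy(y_true, y_pred_bad)
acc_flipped = accuracy(y_true, invert(y_pred_bad))
print(acc_bad, acc_flipped)
```

In general the flipped model scores 1 minus the original accuracy, which is why 50% is the floor for a binary classifier and 51% is barely above it.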

The first time I took Andrew Ng's Machine learning course in 2014.

I was blown out of my wits at what could be achieved with simple algebra and calculus.1 -

So, the moment I started as a BA in a health insurance company, my Quora feed gets populated with Dilbert comics, and I can finally relate to them.

Thanks Machine Learning!

-

A friend approached me with an "unpopular opinion" regarding the worldwide famous intro to Machine Learning course by Andrew Ng.

His opinion: "shit is boring AF and so is the teacher"

Honestly, I loved it. I think it is a really good intro to the actual intuition (pun/reference intended) behind the area. I especially like how it cuts down the herd in terms of the people that stick with it and the people that don't, as in "math is too hard. All I want is to create A.I" <---- bye Felicia.

Even then, I think that the idea that Andrew Ng is boring is not too far from reality. I love math. I am by no means a natural, but with pen and paper in front of me and Google I feel like I can figure out and remember anything. I do it out of sheer obsession and a knack for mathematical challenges. That is what kept me sane through the course. Other than that I find it hard to disagree, even if it was not boring for me.

Anyone here thinks the course was fucking boring as well? As in, the ones that have taken it.8 -

I'm starting to think that "Machine Learning" is the most unfortunate term that the industry has ever seen.

How people approach a problem here where I work: "I have a problem, I don't know how to solve it, I don't have any data. Let's implement a Machine Learning algorithm that will solve the problem for me."4 -

Which is the most promising sector of Artificial Intelligence in future(2025) ?

I am currently studying about 'Machine learning'.17 -

I was all happy using and applying Machine Learning algorithms until I came to do a Research Internship and now I'm sitting in the lab studying Linear Algebra, Probability and Statistics the whole day just to keep up with the guys who are improving and developing algorithms here!11

-

Just in case nobody mentioned it:

Humble Bundle : Machine Learning

https://humblebundle.com/books/...

and

Humble Bundle : UI UX

https://humblebundle.com/books/...6 -

Presenting to you: Google's latest Machine Learning technology.

https://m.imgur.com/2VI4doJ,0mzJjNf...

Those are all the same Honda Civic btw 7

7 -

My first rant here. I just found out about it. I don't have much of a programming background, but it has always triggered my interest. Currently I am learning many tools; my aim is to become a data scientist. I have done SAS, R and Python for it (not proficient yet, though), and I'm also working on Google cloud computing and database resources, and I'm going to start Machine Learning (Andrew Ng's Coursera course).

Can anybody advise me whether I am doing it right or not?2 -

My 2018 goals:

1. Graduate from the Deep Learning Nanodegree.

2. Get better at Python.

3. Learn C++.

4. Learn more about Machine Learning and AI.6 -

Well, so after some fiddling around, I managed to release a first pre-release version of my versatile machine learning library for C++: https://github.com/Wittmaxi/...

I'd be more than happy to see people start using my Lib lol

In case you have ANY feedback, just open an issue ;) (feedback includes code review lol)2 -

I am beyond pissed at my Machine Learning class in college. you would think an advanced topic in Computer Science would require some prior knowledge of the field, but apparently not. A quarter of the class has ZERO programming knowledge, and the professor is basing the class around that. I took this course to learn how to CODE Machine Learning algorithms, not spend weeks upon weeks on learning how to calculate probabilities...2

-

python machine learning tutorials:

- import preprocessed dataset in perfect format specially crafted to match the model instead of reading from file like an actual real life would work

- use images data for recurrent neural network and see no problem

- use Conv1D for 2d input data like images

- use two letter variable names that only tutorial creator knows what they mean.

- do 10 data transformation in 1 line with no explanation of what is going on

- just enter these magic words

- okay guys thanks for watching make sure to hit that subscribe button

ehh, the machine learning ecosystem is burning pile of shit let me give you some examples:

- thanks to years of object oriented programming research and most wonderful abstractions we have "loss.backward()" which have no apparent connection to model but it affects the model, good to know

- cannot install the python packages because python must be >= 3.9 and at the same time < 3.9

- runtime error with bullshit cryptic message

- python having no data types but pytorch forces you to specify float32

- lets throw away the module name of a function with these simple tricks:

"import torch.nn.functional as F"

"import torch_geometric.transforms as T"

- tensor.detach().cpu().numpy() ???

- class NeuralNetwork(torch.nn.Module):
      def __init__(self):
          super(NeuralNetwork, self).__init__() ????

- lets call a function that switches on the tracking of math operations on tensors "model.train()" instead of something more indicative of the function actual effect like "model.set_mode_to_train()"

- what the fuck is ".iloc" ?

- solving environment -/- brings back memories when you could make a breakfast while the computer was turning on

- hey lets choose the slowest, most sloppy and inconsistent language ever created for high performance computing task called "data sCieNcE". but.. but. you can use numpy! I DONT GIVE A SHIT about numpy why don't you motherfuckers create a language that is inherently performant instead of calling some convoluted c++ library that requires 10s of dependencies? Why don't you create a package management system that works without me having to try random bullshit for 3 hours???

- lets set as industry standard a jupyter notebook which is not git compatible and have either 2 second latency of tab completion, no tab completion, no documentation on hover or useless documentation on hover, no way to easily redo the changes, no autosave, no error highlighting and possibility to use variable defined in a cell below in the cell above it

- lets use inconsistent variable names like "read_csv" and "isfile"

- lets pass a boolean variable as a string "true"

- lets contribute to tech enabled authoritarianism and create a face recognition and object detection models that china uses to destroy uyghur minority

- lets create a license plate computer vision system that will help government surveil everyone, guys what a great idea

I don't want to deal with this bullshit language, bullshit ecosystem and bullshit unethical tech anymore.11 -
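On the "loss.backward()" complaint above: the connection to the model is there, it is just hidden in the computation graph. The loss was computed from the parameters, so it carries references back to them, and calling backward walks those references. The toy below is a sketch of that idea only; it is not PyTorch's implementation, and the `Value` class and its methods are hypothetical names for scalar reverse-mode autodiff.

```python
class Value:
    """A scalar that remembers which values it was computed from."""

    def __init__(self, data, parents=(), grad_fns=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        # One function per parent: maps this node's gradient to the parent's share.
        self._grad_fns = grad_fns

    def __add__(self, other):
        return Value(self.data + other.data, (self, other),
                     (lambda g: g, lambda g: g))

    def __sub__(self, other):
        return Value(self.data - other.data, (self, other),
                     (lambda g: g, lambda g: -g))

    def __mul__(self, other):
        return Value(self.data * other.data, (self, other),
                     (lambda g: g * other.data, lambda g: g * self.data))

    def backward(self):
        # Post-order walk of the graph, then process it output-first.
        order, seen = [], set()

        def visit(node):
            if id(node) in seen:
                return
            seen.add(id(node))
            for parent in node._parents:
                visit(parent)
            order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, grad_fn in zip(node._parents, node._grad_fns):
                parent.grad += grad_fn(node.grad)


# The "model" is just two parameters; the loss is built out of them.
w, b = Value(2.0), Value(0.5)
x, target = Value(3.0), Value(10.0)

error = (w * x + b) - target
loss = error * error
loss.backward()  # reaches w and b through the graph stored inside loss

print(loss.data, w.grad, b.grad)
```

So nothing is passed to backward explicitly because the loss already knows where it came from; an optimizer step would then read `w.grad` and `b.grad`.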

!rant

After a long time, finally I got an opportunity to participate in a Machine Learning competition.

Brushing up my memory.

And also teamed up with another colleague.

Hope, we both get to learn a lot even if we don't win. -

This is my understanding of "Machine Learning" in general

There are two sets of data:

1. In first data set, all the properties are known

2. In the second set, some properties are not known.

The goal of the machine learning is to find the value of the unknown properties of the second data set.

We do this by finding (or training) a suitable machine learning model (mathematical, logical or any combination of), that in the first data set, computes the value of the properties, which are unknown in second data set, with minimum error since we already know the real value of those properties.

Now, use this model to predict the unknown properties from the second data set. 3
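That description maps directly onto code. A minimal sketch, assuming a single numeric property and a straight-line model fitted by least squares; the data and the function name are made up for illustration.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# First data set: every property is known (made-up numbers).
known_x = [1.0, 2.0, 3.0, 4.0]
known_y = [3.0, 5.0, 7.0, 9.0]

slope, intercept = fit_line(known_x, known_y)

# Error on the first set, where the real values are available for comparison.
train_error = sum(
    (slope * x + intercept - y) ** 2 for x, y in zip(known_x, known_y)
) / len(known_x)

# Second data set: y is unknown, so the fitted model fills it in.
unknown_x = [5.0, 6.0]
predicted_y = [slope * x + intercept for x in unknown_x]
print(slope, intercept, train_error, predicted_y)
```

The one thing this leaves out is holding back part of the first data set to check the model on values it was not fitted to.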

3 -

That feeling when your first classifier on a real life problem exceeds the 97% majority class classifier accuracy.

I'm doing something right! -
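For context, the majority-class baseline is the score you get by always predicting the most common label. A quick sketch with made-up labels (97 of one class, 3 of the other):

```python
from collections import Counter


def majority_baseline(labels):
    """Return the most common label and the accuracy of always predicting it."""
    label, count = Counter(labels).most_common(1)[0]
    return label, count / len(labels)


# Made-up, heavily imbalanced labels.
labels = ["ok"] * 97 + ["fraud"] * 3

baseline_label, baseline_accuracy = majority_baseline(labels)
print(baseline_label, baseline_accuracy)
```

A classifier only starts to mean something once it beats this number, which is what makes clearing 97% a real result.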

People think Machine Learning is all about using Super Complex Prediction Models...

But it turns out most of the time is devoted to data gathering and data cleaning (preprocessing). 2

2 -

I finished a 30-minute lecture today with the conclusion "so it turns out that throwing way more data and resources at this Machine Learning problem gives much better results."

Who could have possibly predicted that?!2 -

I am a graduate student of applied computer science, and I am required to select my electives. Now I have to decide between Machine Learning (ML) and Data Visualization. My problem is that Machine Learning is a very theoretical subject and I had no background in ML in my undergrad. The data visualization course is more focused on D3.js. Due to my lack of basic knowledge, I am having second thoughts about taking ML. However, this course will be offered in the next Fall term, and I am also studying on Coursera to build my background until then.

I know there is no question here but I need a second opinion from someone experienced. Also, please suggest any other resource that I should look into to build my background in ML.2 -

I am learning Machine Learning via Matlab ML Onramp.

It seems to me that ML is;

1. (80%) preprocessing the ugly data so you can process the data.

2. (20%) creating models via algorithms you memorized from somewhere else, which have an accuracy of 20% as well.

3. (100%) Flaunting around like ML is second coming of Jesus and you are the harbinger of a new era.8 -

That very "awesomeee" moment when you have to create a website to a new startup which are using all the right buzzwords such as blockchain, AI and machine learning... (wuhuuu)

Btw. will you help a fellow dude by answering my survey for my final paper?

https://goo.gl/forms/...

Thank you!

- and it took a whole year for me (and some desperation for help) to write my first ever post on here :p4 -

Just did a 30min presentation with my team about machine learning and face/pose analysis. Everything went perfectly well. Couldn't be better. Such a good feeling! FeelsGoodMan. Time to go to the cantina :)

-

Decided to jump into the machine learning bandwagon and picked up a few books and some online courses. I already feel way out of my depth.5

-

From my big black book of ML and AI, something I've kept since I've 16, and has been a continual source of prescient predictions in the machine learning industry:

"Polynomial regression will one day be found to be equivalent to solving for self-attention."

Why run matrix multiplications when you can use the kernel trick and inner products?

Fight me.15 -
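For readers who haven't met the kernel trick: a polynomial kernel computes the inner product of expanded polynomial features without ever building those features. The sketch below checks that identity for the degree-2 case in two dimensions. It says nothing either way about the self-attention claim; the function names are hypothetical.

```python
import math


def dot(u, v):
    """Plain inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def poly_kernel(x, y):
    """Degree-2 polynomial kernel: one inner product, then a square."""
    return dot(x, y) ** 2


def feature_map(x):
    """Explicit degree-2 features for a 2-D input."""
    a, b = x
    return (a * a, math.sqrt(2.0) * a * b, b * b)


x, y = (1.0, 2.0), (3.0, 4.0)
via_kernel = poly_kernel(x, y)
via_features = dot(feature_map(x), feature_map(y))
print(via_kernel, via_features)
```

Both routes give the same number; the kernel route just never materialises the larger feature vectors, which is where the savings come from in higher degrees and dimensions.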

So I've started a little project in Java that creates a db of all of my downloaded movie and video files. The process is very simple, but I've just started incorporating Machine Learning.

The process is quite simple: You load the files into the db, the program tries to determine the movie's name, year and quality from the filename (this is where the ML comes in - the program needs to get this and dispose of useless data) and then does an online search for the plot, genre and ratings to be added to the db.

Does anyone have any feature suggestions or ML tips? Got to have something to do during the holiday!1 -
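One tip: a plain regex makes a useful baseline before (or next to) the ML step, if only to measure how much the model actually adds. The sketch below is in Python rather than Java, and the naming pattern it assumes (title, then a four-digit year, then an optional resolution such as 1080p) is a guess at common conventions, not something taken from the project.

```python
import re

# Assumed layout: <title><separators><year>[anything]<quality like 720p/1080p>
PATTERN = re.compile(
    r"^(?P<title>.+?)[. _(\[]+(?P<year>(?:19|20)\d{2})\b"
    r"(?:.*?(?P<quality>\d{3,4}p))?",
    re.IGNORECASE,
)


def parse_filename(name):
    """Return title, year and quality, or None if no year is found."""
    stem = name.rsplit(".", 1)[0]  # drop the file extension
    match = PATTERN.match(stem)
    if match is None:
        return None
    title = re.sub(r"[._]+", " ", match.group("title")).strip()
    return {
        "title": title,
        "year": int(match.group("year")),
        "quality": match.group("quality"),  # None when absent
    }


print(parse_filename("The.Matrix.1999.1080p.BluRay.mkv"))
print(parse_filename("Some_Movie_(2014).avi"))
print(parse_filename("holiday_video.mp4"))
```

Files the regex cannot parse (it returns None) are the ones worth handing to the learned model.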

I just received this.

"I'm just saying that one should be proud to be able to import libraries and call themselves Data Scientist who can apply machine learning."

What the actual fuck.6 -

Learning image processing, deep learning, machine learning, data modeling, mining, etc., and also working on them, is so much easier than installing the requirements, packages and tools related to them!2

-

So I decided to run Mozilla DeepSpeech against a dataset in my local language, using transfer learning from the existing English model.

I adjusted the alphabet and began training.

I have a PC with a GTX 1080 lying around, so I used that, but I recommend using at least a newer RTX 3080 so you don't waste time (you can read how long it took below).

I waited 3 days and the error went down to about ~30, so I switched the dataset, and the error went down to about ~1 after a week.

Yeah, I waited a whole goddamn week because I don't use this computer daily.

So I picked some audio from YouTube to transcribe speech to text, and it works a little. It's not a masterpiece, and I didn't test it extensively or fine-tune it, but it works as I expected. It recognizes some words perfectly, others partially, and others not at all.

I stopped testing at this point, as I don't have any business use or plans for this, but I'm probably one of only a couple of companies/people right now who have a speech-to-text machine learning model for my native language.

This was my first time doing transfer learning, my first time training on audio, and my first time waiting so long for results. I can say I'm now convinced that ML is something big.

To sum up, with the right amount of money and time (about 1-3 months) you can probably make decent speech-to-text software at home that will work well with your accent and native language. -

How machine learning works

Interviewer: What's your biggest strength?

Me: I'm a fast learner.

Interviewer: What's 11 * 11

Me: 65

Interviewer: not even close. It's 121

Me: It's 121

3 -

AWS released their machine learning training portal to the public for free. You can get certified for $300.

https://aws.amazon.com/de/blogs/...1 -

So the new guy convinces my employer that he is a Machine Learning Expert. Nothing is wrong until He even convinces my employer that he can do Machine Learning with HTML (<==== is not a Programming Language); This is the time my IQ instantly dropped to ```Patrick Star```.8

-

Me searching for a Machine Learning internship online.

My friend searching for a Machine Learning internship online.

My ex-girlfriend searching for a Machine Learning internship online.

My neighbor searching for a Machine Learning internship online.

My Professor searching for a Machine Learning internship online.

My Father searching for a Machine Learning internship online.

My Ancestors searching for a Machine Learning internship online.

My cat searching for a Machine Learning internship online.

My guitar searching for a Machine ... I'm fucking done.

Tried the Andrew NG thing 6 times.

You read that right. 6. six.

Failed 7 times. Statistically, i failed.

*no comebacks here, I don't know machine Learning so I am getting 0 fucks from my current girlfriend, and you said if I play guitar, I'll have her. FUCK NO.*2 -

People completing Stanford + Andrew Ng's course and bragging about how they know machine learning inside and out while having no idea how to code the simplest application using the simplest libraries.3

-

Adaptive Latent Hypersurfaces

The idea is rather than adjusting embedding latents, we learn a model that takes

the context tokens as input, and generates an efficient adapter or transform of the latents,

so when the latents are grabbed for that same input, they produce outputs with much lower perplexity and loss.

This can be trained autoregressively.

This is similar in some respects to hypernetworks, but applied to embeddings.

The thinking is we shouldn't change latents directly, because any given vector will generally be orthogonal to any other, and changing the latents introduces variance for some subset of other inputs over some distribution that is partially or fully out-of-distribution to the current training and verification data sets, thus ultimately leading to a plateau in loss-drop.

Therefore, by autoregressively taking an input, and learning a model that produces a transform on the latents of a token dictionary, we can avoid this ossification of global minima, by finding hypersurfaces that adapt the embeddings, rather than changing them directly.

The result is a network that essentially acts as a compressor of all relevant use cases, without leading to overfitting on in-distribution data and underfitting on out-of-distribution data.12 -
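A minimal sketch of the forward pass this rant describes, under loud assumptions: the rant does not say what form the generated transform takes, so a per-dimension scale-and-shift is swapped in here purely for illustration, and `E`, `W1`, `W2` and `adapted_latents` are hypothetical names. Only the small generator would be trained; the embedding table is read but never updated.

```python
import numpy as np

rng = np.random.default_rng(0)
VOCAB, DIM, HIDDEN = 50, 8, 16

# Frozen token embeddings: read from, never updated directly.
E = rng.normal(size=(VOCAB, DIM))

# Hypothetical adapter generator (the only trainable weights in this sketch).
W1 = rng.normal(scale=0.1, size=(DIM, HIDDEN))
W2 = rng.normal(scale=0.1, size=(HIDDEN, 2 * DIM))


def adapted_latents(context_ids):
    """Return the context's latents after a context-dependent transform."""
    latents = E[context_ids]                 # (T, DIM), looked up from the frozen table
    summary = latents.mean(axis=0)           # crude fixed-size summary of the context
    hidden = np.tanh(summary @ W1)           # (HIDDEN,)
    scale, shift = np.split(hidden @ W2, 2)  # two vectors of shape (DIM,)
    # Assumed transform: scale-and-shift; it is the identity when W2 is all zeros.
    return latents * (1.0 + scale) + shift
```

Calling `adapted_latents([3, 7, 11])` returns a `(3, 8)` array while leaving `E` untouched, which is the property the rant cares about: the same table can serve every context without being overwritten.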

My IoT professor expects us to, somehow, learn Machine Learning and use that to analyse the data we obtain in the working of our project! How are we supposed to learn ML to implement its techniques, while simultaneously creating an IoT project, learning its own techniques and also handling our other courses in just one semester?!6

-

GUYS! Humble bundle has teamed up with O'Reilly for a selection of Machine Learning books, anybody interested should check it out, 6 days left :

humblebundle.com/books/machine-learning-books2 -

Aren't u tired of machine learning, deep learning, AI, etc in the news?

Everybody thinks that because I'm a programmer I should be up to date on these things3 -

The ability to understand all machine learning models, so I could modify the code and the models directly and create better ones every time.

I would take an existing ML model, modify it by hand to create a better one, win some multimillion-dollar competitions and make them open source.

Eventually all recommendation systems, text to speech, speech to text, music generation, movie generation etc would be open source.

This would either destroy or boost the whole modern economy, but for sure it would harm corporations and make them cry.

That would be fun to see.6 -

Working with machine learning gets very tiring sometimes.

Waiting for the results, tuning the parameters...

Arghhh....why does it keep getting stuck...1 -

So just now i downloaded an app called "Replika" and holy fucking shit it made me realise how half-assed our approach to AI structure is

doing machine learning algorithms on text can only go so far, as it uses that text as a base, and nothing else, it doesnt *learn*, only make *connections* BETWEEN text, not FROM the text

what you need is an AI which can, at its core, *interpret*, not make connections and hur dur be done with it

when you do machine learning, all you're doing is find the best connections

you can have an infinite number of connections and MAYBE you'll be fine, but you'll never learn the basis of how that text is formed

you'll never understand what connections the human used by making it, by thinking it

when you're doing machine learning, all you're doing is make an input-output machine and adjusting it constantly, WITHOUT preserving state

state is going to be a really fucking important thing if you want to make an AI, because state can include stuff like emotion, current thought, or anything else

if you make a fucking machine learned AI which constantly adjusts... well... the "rom" of itself without having any "ram", it'll fucking never be like us, we will NEVER be able to talk to it like it is a human being, we will NEVER make it fundamentally understand what we are saying or doing

if we want to have real fucking AI, we need to go to the core of what it means to THINK, what it means to INTERPRET, what it means to COMMUNICATE

we need to know how english language is structured, how we understand it, how we can build it in a program that can interpret for an AI, THAT can be "rom"-based, THAT can be static, NOT the AI itself

the AI needs to be in flux, the AI needs to be in a state, the AI needs to understand how to make emotions, how that will "strengthen" some connections, yes, maybe something magical will happen and it can have EMPATHY, something so fundamental that will finally, FINALLY, make the bot UNDERSTAND what we are saying7 -

Let’s just face it. Once the majority of people understand it, data science (mostly machine learning on custom behaviour) will be the most despised job in the world. Mind boggling! You'll get shot right between the eyes for doing it.

4

4 -

Started doing deep learning.

Me: I guess the training will take 3 days to get about 60% accuracy

Electricity: I dont think so! *Power cut every day lately

My dataset: I dont think so! *Training only achieves 30% training accuracy and 19% validation accuracy

Project submission: next week

😑😑😑2 -

Everyone I tell this to, thinks it’s cutting edge, but I see it as a stitched together mess. Regardless:

A micro-service based application that stages machine learning tasks, and is meant to be deployed on 4+ machines. Running with two message queues at its heart and several workers, each worker configured to run optimally for either heavy cpu or gpu tasks.

The technology stack includes rabbitmq, Redis, Postgres, tensorflow, torch and the services are written in nodejs, lua and python. All packaged as a Kubernetes application.

Worked on this for 9 months now. I was the only constant on the project, and the architecture design has been basically re-engineered by myself. Since the last guy underestimated the ask.2 -

I just found out that AI is gonna wipe out humanity. And developers will have a privileged seat to appreciate that. So I decided to learn Python and machine learning to help!20

-

These many Python libs exist for machine learning....

Used only scikit and gensim till now...

Little of theano...

-

Next personal fail ...

previous rant

https://devrant.com/rants/2060249/...

Turned out that wavenet is sequential so it needs previous step to predict next.

Quite obvious when you look at how people speak sentences, they hardly stop in the middle of the word.

🤔

need to think how to proceed next, how to cut sentences.

Watched deepvoice3 and some accent models from baidu.

I can generate 8 sentences at a time, each takes 8 minutes so if I cut between words and got last mels between words right I can get 1 minute but I need to store model somewhere.

I forgot my machine learning and speech synthesis skills from previous life, time to load more skills ... -

Looks like unzipping on disk drive where you keep your dataset can crash machine learning training session.

Thanks nvidia for your great drivers.

I like your solutions. -

!rant thinking of learning machine learning. Have no much maths background. Where do I start?

Links, blog's, books, examples, tutorials, anything. Need help.3 -

Easily machine learning. A lot of stuff that was previously thought to be impossible, or was just plain too hard, suddenly becomes reachable. My fav example is the Dota AI. Just love it

-

Why do companies wrap up every simple fucking operation with "Machine Learning"?

What is the fucking purpose of ML in a Password Manager? What's the mystery in suggesting a password based on a TLD match?

"Congratulations, you now have ML to auto-fill your password" WTF, how was it working before the update?

Sick.4 -

First Kaggle kernel, first Kaggle competition, completing 'Introduction to Machine Learning' from Udacity, ML & DL learning paths from Kaggle, almost done with my 100DaysOfMLCode challenge & now Move 37 course by School of AI from Siraj Raval. Interesting month!

4

4 -

I'd like to create an imageboard app with React-Native and got a few questions.

Every user has to like or dislike the shown content to advance to the next image/video (tinder style). I want to use that data to feed a machine learning algorithm and generate an unique selection of displayed media for each user with that.

Even though I've never used it before (I'm still learning to code), I want to use Python and a Python machine learning library for that.

Can you give me any advice for the Python part? For example which library to use, where to start, etc.

Do you think that might be an interesting idea to realize? :)2 -

I want to write a program that uses machine learning to predict questions in an exam. The questions to be predicted are based on topics or trends from one year of newspapers and related topics from a syllabus. I wish to use python for this. But dont know where to start. I know nothing about ml! Wish to structure this out. Help me.14

-

Which books are best for machine learning?5

-

It'd be cool if we could get something like Shenzhen I/O going, but where you build the chips from the gates up

Maybe package them into modular units, and connect those at a higher level of abstraction, ad infinitum.

Then add access to virtual LCD output, and other peripherals, or even map output to real hardware, essentially letting you build near bare-metal virtual machines.

Don't know about that last part, but the closest I've seen to the rest is a circuit simulator and, again, Shenzhen I/O.

On the machine learning front I figured out I need about ten times as many training samples as validation samples, or vice versa. I'll have to check my notes. Explains why I could get training loss below 2.11

Also, I'm looking at grouping digits, and trying different representations. I'm looking at the hidden variables for primorials to see what that reveals. And I realized because of the amount of configurations and training that I want to do, even a personally built cloud isnt going to be sufficient. I'm gonna have to rent someone else's hardware and run it "in duh cloud."

Any good providers that are ridiculously upfront for beginners to get started with? Namely something cheapish.3 -

Q: How easy is it to create machine learning models nowadays?

= TensorFlow

That's ML commoditization.21 -

Whenever I start talking about machine learning the first question I get from the pretentious ones is, "Is it supervised or unsupervised classification?"

-

I had this dream: we had to destroy this super mainframe that wanted revenge because we as programmers made a lot of mistakes in the code.

So we had to create this amazing machine with super powers and machine learning and then go back to the past to save the world and to find me and protect me from the same machines that we created..

At the end, I died.. because the fucking machine betrayed me.1 -

Let's say you used a dating app. At the beginning, it explicitly told you "please do not share any personally identifiable information when chatting with any boy/girl on this dating app, because your chat will be stored and processed on our server to improve the recommendation algorithm for potential dates and to autocomplete your flirty talk to increase your success rate." But you didn't read carefully and clicked "agree". After a while, you noticed the dating app had already stored all your private chats on their server for machine learning.

Although legally I believe that dating app would still be GDPR compliant,

the question is, will you continue using that dating app or not?3 -

Team are getting into using Machine learning for anomalous behaviour detection for authentication and traffic behaviour... It's so interesting and another useful tool in our security arsenal

-

I currently have a 3+ year old laptop.

Dell Inspiron 15 3521:

OS: Windows 10 Pro

RAM: 8GB (4+4)

Processor: Intel Core i5 3rd Gen

Video/Graphics Card: AMD Radeon 8730M 2GB (and Intel HD 4000)

Hard Disk: 1TB

It's slowly becoming sluggish and has clearly outdated hardware. I want to pursue a Master's degree in CS (Machine Learning oriented).

Should I consider upgrading? Build a PC instead? Suggestions?35 -

A machine learning beer referral app, an AI music making algorithm, a GA to make machine learning research easier, and a text editor where ctrl-s deletes all of your work and ctrl-z saves it.3

-

Started to learn Reinforcement Learning, from level 0: the Atari Pong game. Stopped to think a bit on the gradient calculation part of the blog.... hmm, I guess it's been almost a year since my Machine Learning basics course. Good thing is the old memory eventually came back and everything starts to make sense again.

Wish me luck...

Following this blog:

https://karpathy.github.io/2016/05/...3 -

To all my Machine Learning engineers, I've been doing Frontend development for 6 years and I'm done. I want to get into machine learning because I've always loved data.

1. What is your day to day like?

2. Any advice for my learning journey?

Thank you🙏14 -

Any example of machine learning / artificial intelligence on video auditing that the community knows of?

-

Before I dip my toes on machine learning, let me leave some silly comments so I can laugh at myself in the future.

Let's make geth.

1. The model will spit out layer definitions and the size of sample data for training, children models are trained with limited computational resources.

2. Child models are voters that only respond in terms of yes/no. A simple majority wins and then the action is taken.

3. The only goal for master models is to survive. i.e. To prevent me from killing them.
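The voting step in point 2 can be sketched in a few lines. This is only an illustration under stated assumptions: each child model is stood in for by a plain Python callable returning yes/no, `decide` is a hypothetical helper name, and a tie is treated as "no action", which the rant does not specify.

```python
def decide(voters, observation):
    """Return True when a strict majority of voters says yes.

    `voters` is a list of callables, each standing in for a trained child
    model (an assumption for illustration). Ties count as "no".
    """
    votes = [bool(voter(observation)) for voter in voters]
    return sum(votes) * 2 > len(votes)


# Toy stand-ins for child models: each votes yes above its own threshold.
children = [lambda x: x > 0.2, lambda x: x > 0.4, lambda x: x > 0.9]
take_action = decide(children, 0.5)  # two of the three voters say yes
```

Because every voter answers with a single bit, the output size of the ensemble is fixed no matter how the child models are built internally.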

Questions:

1. How do models respond to a random output size? (Study GPT-3, should take weeks/months but worth it.)

2. How to define actions for voters to vote? Sounds like the boundary between actions should be blurry and votes can be changed from tick to tick (i.e. responding to something in a split second). Therefore

3. Why haven't I seen this yet? Is this design a stupidly complex way of achieving the same thing done by a simple neural network?

I am full of curiosity and stupidness.5 -

I'm wondering if there is a way to use a Machine Learning algorithm to optimize games like Screeps.com, which is a game where you control your units by writing JS code. Letting the algorithm write human-readable code might be too challenging, but optimizing some aspect of the game should be possible, like finding the best scale-up route using reinforcement learning.3

-

Anyone know of any GPU accelerated Machine Learning libraries that DON'T need Python, something maybe using C/C++, C# or Java?12

-

What's the difference between data science, machine learning, and artificial intelligence?

http://varianceexplained.org/r/... -

Just enrolled myself in Andrew Ng's Machine Learning course at Coursera for the summer, is it a good place to start? Any recommendations?

-

thought of the day :

machine learning does not totally automate the end-to-end process of data to insight (and action), as is often suggested. We do need human intervention. And having the right mix of specialists is equally important as they have the expertise to build prototype projects in different business lines. Thus, one must hire the right team in the context of her organization to ensure an assured path towards success.

Besides, it is important to note that organizations don’t have machine learning problems. Instead, there are just business problems that companies might solve using machine learning. Therefore, identifying and articulating the business problem is mandatory before investing significant effort in the process and before hiring the machine learning experts.1 -

Have any of you moved from Web application development to more deep and complex stuff? I mean without finding it boring. I just moved to data and analytics at my job. And in a few months we will be getting into AI and machine learning. I just don't know if I'm going to find it boring or not. I really enjoy and still love web development.1

-

Hello Guys... Just wanted to have some opinions:

Considering long-term career stability, how does a Machine Learning engineer compare with a Software Developer ( C, C++, Java, Python, etc. ) & a Web Developer ( Java, JavaScript, SQL, CSS, Python ) ? 9

9 -

I've nearly completed Machine learning Andrew Ng course from Coursera and now I am confused what to do next. Suggestions would be useful.3

-

I would like to learn something about machine learning and create something with it. But I want it to be something useful. Do you have any idea what I should try to do?

-

Machine Learning and Deep Neural Networks in particular. More job offers to pick from in upcoming years for me :P

-

I watched "imitation game" for the first time.

The first machine learning was unsupervised learning? Really?

You're too crazy boys... -

Are there any good courses for practical machine learning (that uses an actual language)? I tried out Andrew Ng's course and it seems more theoretical than practical.2

-

!rant

One of my coworkers told me I'd be interested in machine learning, but I'm not convinced

Is it really that great ?8 -

Quite interesting talks and discussion, if you are interested in non-technical topics of machine learning

https://youtu.be/_zwBCDmlvv8 -

Has anyone used machine learning in real world use cases? (would be nice if you can describe the case in a few words)

I'm reading about the topic and do some testing stuff but at the moment my feeling is that ml is like blockchain. It solves a specific type of problem and for some reason everyone wants to have this problem.6 -

Don't hate siraj so much he taught me all of machine learning.

P.s I started my machine learning journey just 5 minutes ago

#SirajRocks1 -

So I have a STUPID question about Machine Learning.

And I am being serious when I say this.

I want to get into machine learning but I really don’t want to accidentally create the AI that kills us all.

I’m not trying to boast my abilities or anything I’m not that great but I just

one don’t completely understand how machine learning works?

And two how do I keep it from learning more than I want it to??

I’m not trying to be stupid I’m just trying to understand so I don’t make anything that I regret.17 -

hey if devrant has any suggestions to learn machine learning AI from that would be nice and yes my github is under construction9

-

Want to do all of these Open Source, machine learning and competitive coding. But can't choose one. I would do all but apparently getting good grades is important in college. Fml

-

One of my team members was showcasing their time series modelling in ML. ARIMA, I guess. I remember him saying that the accuracy is 50%.

Isn't that the same as a coin toss? Wouldn't any baseline model be expected to have accuracy greater than 50%?3 -
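One way to check the coin-toss intuition is to compare against a trivial baseline that always predicts the most common class. The sketch below assumes the "accuracy" was measured on a categorical target such as up/down direction (the rant does not say), and `majority_baseline_accuracy` is a hypothetical helper name.

```python
from collections import Counter


def majority_baseline_accuracy(labels):
    """Accuracy of always predicting the most frequent label."""
    counts = Counter(labels)
    most_common_count = counts.most_common(1)[0][1]
    return most_common_count / len(labels)


# Illustrative data only: 7 "up" days and 3 "down" days.
history = ["up"] * 7 + ["down"] * 3
baseline = majority_baseline_accuracy(history)
```

On balanced two-class data this baseline sits at 50%, so a model at 50% has learned nothing; on imbalanced data the baseline is higher, and 50% would be worse than guessing the majority class every time.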

Do I have to be good at Mathematics to be good in Machine Learning / Data Science?

I suck at Mathematics, but ML/DS seems so fascinating. Worth a try if I hate Maths?

As they say, do what you enjoy doing.8 -

Amazon started a machine learning models marketplace and they are organizing a marketplace hackathon, if anyone is interested.

https://awsmarketplaceml.devpost.com/...

It’s available till 15 April and there is $48,000 in prizes1 -

I am interested in Machine learning and don't know how to proceed. there are tons of courses on udemy, Coursera, udacity etc..

I am doing Coursera machine learning by Andrew Ng and didn't know what to do next. Suggestions will be helpful.

Btw I am doing Machine learning to implement it in Android. -

Skip to last block for actual question, everything else is about what i see and dont understand.

Machine learning and artificial intelligence is very interesting to me, ive watched a few videos but i cant manage to wrap my mind around it.

I see a few people starting out with projects that appear to be an easy start (but of course I have no idea), where they make a self-driving car in GTA (it crashes a lot, but still) or teach the program to complete levels in a game (Snake, Mario, Run Forrest)

I watched a few videos on Jabrils' YouTube channel that seemed to make a lot of sense, until one point..

How does the AI know when it hit a wall?

How does it know where the walls are?

How does it measure the distance? How does it know when it has respawned?

I find it really, really confusing.

Can anyone of you geniuses suggest me anything to get into this? Id prefer if the goal was to make an AI using machine learning, that can complete some basic game, like in Jabrils videos.2 -
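On the wall questions above: in setups like this the AI usually does not discover walls by itself; the game code measures distances and detects collisions, then hands those numbers to the learner as inputs and rewards. The sketch below is a toy grid version of that idea, not how Jabrils' projects are implemented; `GRID`, `observe` and `step` are hypothetical names, and the reward of -1.0 for a crash is an arbitrary choice.

```python
GRID = [
    "#######",
    "#.....#",
    "#..#..#",
    "#.....#",
    "#######",
]
DIRECTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def is_wall(row, col):
    return GRID[row][col] == "#"


def distance_to_wall(row, col, direction):
    """Count free cells between the agent and the nearest wall (a grid 'raycast')."""
    d_row, d_col = DIRECTIONS[direction]
    steps = 0
    row, col = row + d_row, col + d_col
    while not is_wall(row, col):
        steps += 1
        row, col = row + d_row, col + d_col
    return steps


def observe(row, col):
    """Inputs the learner sees: distances in the order up, down, left, right."""
    return [distance_to_wall(row, col, name) for name in DIRECTIONS]


def step(row, col, direction):
    """Move one cell; the environment, not the AI, decides a crash happened."""
    d_row, d_col = DIRECTIONS[direction]
    new_row, new_col = row + d_row, col + d_col
    if is_wall(new_row, new_col):
        return (row, col), -1.0, True   # position unchanged, penalty, episode over
    return (new_row, new_col), 0.0, False
```

The third value returned by `step` is the "done" flag: when it is true, the training loop resets the agent to its start position, which is how the learner ends up "knowing" it has respawned.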

Is it just me, or does everyone take at least twice or sometimes even five times the specified duration to complete a MOOC? I have been doing Andrew Ng's Coursera machine learning course for almost two years now!1

-

Machine learning algorithm with 20 threads. Machine is like: "bitch please" (and runs the algo clean until the end)

Increase parameter to 40 threads. NullPointerExceptions. NullPointerExceptions everywhere.4 -

I have a project in need of machine learning. It takes an image and turns it into text. How do I begin acquiring the data needed to feed the machine? Should I just start taking pictures of this particular item on many different devices and get as many friends to do the same? How do I begin gathering my data is the question?4

-

Recently learnt about machine learning and it's applications. First thing that comes to my mind "how often do women buy lingerie from supermarkets?"

-

If you are required to do a custom Object Detector for Mobile.

Would you rather use TensorFlow, Theano, or PyTorch to train the model?1 -

Is learning artificial intelligence, deep learning, machine learning the only way to survive in this industry for the next decade or so?1

-

Just started learning unsupervised learning algorithms, and I wrote this: Unsupervised learning is a machine learning technique where you don’t have to supervise the model. Instead, you allow the model to work on its own to discover information in the data.

Unsupervised learning algorithms allow you to perform more complex processing tasks compared to supervised learning. However, unsupervised learning can be more unpredictable than other learning methods.

An unsupervised machine learning algorithm infers patterns from a dataset without reference to known or labeled outcomes. Unlike supervised machine learning, unsupervised approaches can’t be directly applied to a regression or classification problem, since you have no idea what the values of the output data should be, which makes it impossible to train the algorithm the way you usually would. Unsupervised learning can instead be used to discover the underlying structure of the data. -
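As a concrete case of "discovering structure without labels", here is a minimal k-means clustering sketch in NumPy. K-means is only one unsupervised method among many and is chosen here for illustration; the function name `kmeans` and its parameters are assumptions, not something from the rant.

```python
import numpy as np


def kmeans(points, k, iterations=20, seed=0):
    """Group points into k clusters using only their positions (no labels)."""
    points = np.asarray(points, dtype=float)
    rng = np.random.default_rng(seed)
    # Start from k distinct data points as the initial cluster centers.
    centers = points[rng.choice(len(points), size=k, replace=False)]
    for _ in range(iterations):
        # Distance from every point to every center: shape (n_points, k).
        distances = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
        labels = distances.argmin(axis=1)
        # Move each center to the mean of the points assigned to it.
        for j in range(k):
            if np.any(labels == j):
                centers[j] = points[labels == j].mean(axis=0)
    return labels, centers


data = [[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10]]
labels, centers = kmeans(data, k=2)
```

The algorithm is never told which points belong together; the two groups emerge from the distances alone. Which group gets label 0 and which gets label 1 is arbitrary.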

Ok this is my first try in machine learning, this is DQN for Pong!

I want to add my own flavor by adding pressure touch to paddle movements, such that the harder I press in a direction it moves faster to a max speed.

Does it make sense if I apply the floating point outputs of the sigmoid directly to speed control? Or should I make multiple outputs to represent different "steps" of pressure/speed? -
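Both options from the question can be sketched side by side. One point worth stating plainly: a standard DQN outputs one Q-value per discrete action, so the "multiple outputs as speed steps" variant is the one that fits DQN as usually defined, while feeding a sigmoid straight into the speed is a continuous action and generally calls for a different kind of algorithm. `MAX_SPEED` and `SPEED_LEVELS` below are made-up values for illustration.

```python
import math

MAX_SPEED = 5.0                             # hypothetical paddle speed limit
SPEED_LEVELS = [-5.0, -2.5, 0.0, 2.5, 5.0]  # hypothetical discrete "pressure steps"


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def continuous_speed(logit):
    """Option 1: rescale a sigmoid output from (0, 1) to a signed speed."""
    return (2.0 * sigmoid(logit) - 1.0) * MAX_SPEED


def discrete_speed(q_values):
    """Option 2: one Q-value per speed level; pick the highest (DQN-style)."""
    best = max(range(len(q_values)), key=q_values.__getitem__)
    return SPEED_LEVELS[best]
```

With option 2 the network simply gets five outputs instead of the usual up/down/stay, and the rest of the DQN training loop stays unchanged.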

What is the Hot Tech (Best Emerging Technology) of this generation?

Machine Learning, Internet of Things, Big Data, Android development.5 -

I want to add Python programmers, SQL Server engineers and machine learning engineers on my social media like Instagram, Snapchat, LinkedIn, WhatsApp etc., to get a better understanding of these languages and their concepts and to explore more in the engineering field. Please comment your ID and be my mentor.

Your friend ,

Degel(Rahul Vishwas)2 -

New software unveiled at CES could crack down on password sharing on streaming platforms. The program uses “machine learning” so that the platform will improve as it is being used, helping to further identify “consumption patterns.”

-

Hi I'm getting into machine learning and I am wondering what's the best way to learn machine learning ?3

-

There are people who develop Neural Networks/Deep Learning Models/AI-based software.

Does anybody know what do we call them? Is it okay to call all of them Machine Learning Engineer/AI researcher/AI engineer?

If I'm looking for someone who can make AI based program for me. Whom should I be looking for on freelancer or LinkedIn?1 -

What is the best searching algorithm for big data technologies like Machine learning and Neural networks?

ANY GUESS!!!

Comment it.5 -

Need some help here!!

I'm learning machine learning, so planning to buy Asus R510JX-DM230T. Are the below specs enough to practice TensorFlow ?? Specs : 2.6GHz Core i7 4720HQ processor

8GB DDR3 RAM, 1TB 5400rpm Serial ATA hard drive

15.6-inch FHD Anti-Glare Display, 2GB Nvidia GeForce GTX 950M Graphics13 -

I have created a "Spam Email Detection using Machine Learning" Project.

Do check out and let me know if any suggestions.

The project is uploaded on "myblindbird" website. Visit myblindbird and search "spam email detection" it will land you on the article.

Thanks1 -

We developed an application for getting started with Machine Learning in Flutter! Please check out and share feedback https://play.google.com/store/apps/...