Comments

Kodnot (458, 8y): @Alice Well, it was just two pictures, so he wasn't seriously storing them. He gave some excuses about how someone sent him the pictures and he was surprised and saved them as evidence, but the court didn't buy it. Still, the stupidity of the people who get caught like this astounds me. Those who store their illegal content properly simply do not get caught, so there are no stories about them 😂

moagggi (770, 8y): Indeed (and AFAIK!), Microsoft actually employs some people to look through all pictures/videos on OneDrive. There was some news about a pair of them filing a lawsuit against MS over the depression and post-traumatic stress they now have, because they had seen murders and several other assaults being filmed and stored.

Here is one of the links, for example:

https://mspoweruser.com/microsoft-e...

monr0e (1227, 8y): According to a whitepaper a while back, this sort of thing is achieved first by matching signatures of known criminal files. These can be anything from documents detailing how to make offensive weapons or explosives to known distributions of child pornography. Machine learning also plays a part, which limits the amount of data that needs to be trawled through to find incriminating evidence. OneDrive checks for signatures on file uploads, so it was simply a matter of time before human verification took place and the offender was caught.

skprog (1874, 8y): Until you find out that the foreign power put the pictures in his OneDrive. Because he doesn't even have OneDrive enabled, because he hates it.

Possibly something to do with, for example, PRISM or XKeyscore? Those are two NSA programs that pretty much suck up everything on the internet.

Kodnot (458, 8y): @Lahsen2016 Well, the link that @moagggi provided clearly says that Microsoft uses technology (probably some sort of NN AI, like you said) to scan for illegal content, but then still uses humans to verify the findings.

Kodnot (458, 8y): @Lahsen2016 But that would be for CP generation, not recognition. God, can you imagine what it would be like to code such a program? Every time you debug and run the code, CP is produced, and your goal is to make the CP as good as possible... 😓

Anyway, there shouldn't be a need for that. Since such a program would likely be created by a law enforcement agency, the programmer would likely have access to evidence databases, including plenty of CP for training data.

monr0e (1227, 8y): Deep learning networks are definitely used for analysis purposes. The process of analysing image content, though, likely comes down to facial analysis, which we've had for many years. It's not all that difficult to determine if an image is of a child, and it's not all that difficult to determine if a subject within an image is nude. Those two principles married up likely form a better solution cost-wise; otherwise you also have to shell out for psychological services for developers, rather than just analysts. That point is even more strongly reinforced when you consider that you can only employ someone for a limited amount of time before the employee is either overwhelmed by the emotional impact of the job role or desensitised to it. The latter is legally concerning, the former is cost-heavy.

So no, I doubt neural learning algorithms are trained on such material specifically.

Privacy is a legend

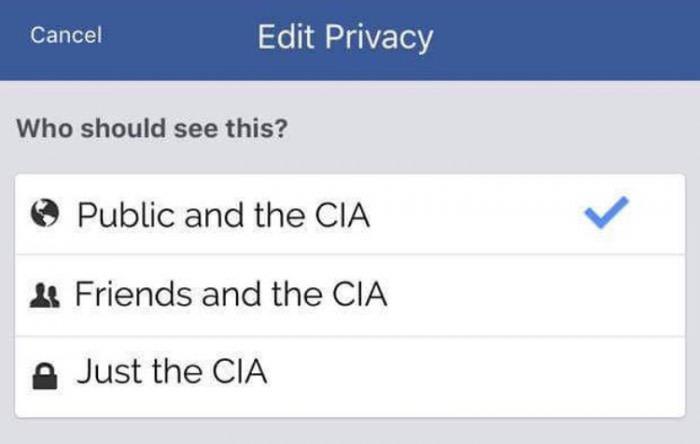

So yesterday there was an interesting news story in my country. A man was fined for possession of two pictures containing pornographic depictions of children.

Now that's all great. The interesting part, however, is how the man was caught.

A tip was given from foreign agencies to the law enforcement of my country that the man was storing the pictures on his OneDrive. Not sharing them or anything, simply storing them there.

How the FUCK did they know? Do they monitor everything you put in a fucking private cloud repository? I've never used OneDrive, and now I'll make sure to never use it in the future. Fucking spyware.

rant

fucktards

privacy

spyware