Related Rants

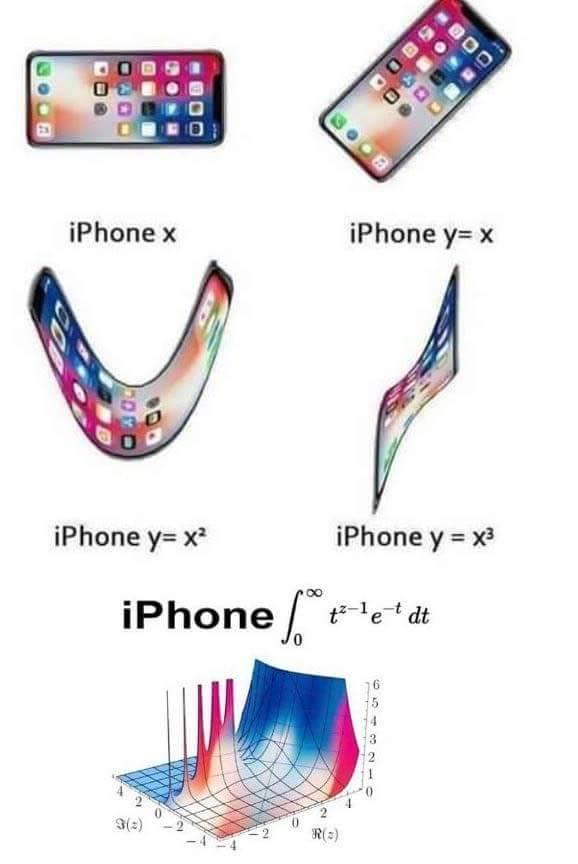

how about stepping up the iphone meme

how about stepping up the iphone meme Response to iPhone X

Response to iPhone X

My first prompt with grok, for giggles:

"how to disable grok"

This is a certified black mirror classic.

rant

x