Comments

-

Voxera108837y Have not used embedded C, but I have tried TDD.

My experience is that it's best used from the start, with the ambition not to implement anything unless you first have a failing test to satisfy.

That way you will get code coverage.

Also consider not just expected input but plain wrong or broken input, and create tests that fail if the methods do not fail in the intended way.

Should they throw an exception, create a test that expects an exception. Should they return an error code, test that.

If you know the input will only ever be between 1 and 9 and intend to use that to make the method faster, make a test that inputs 12, 2346643788 and -467.74, depending on what the language and variable types let you pass in.

This builds robust code that, when it fails, tells you a lot about why it failed.
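
For embedded C, where error codes usually stand in for exceptions, a test for out-of-range input could look roughly like the sketch below. Everything here is hypothetical and only for illustration: the function `digit_square`, the `DIGIT_*` status codes, and the choice of `int32_t` as the parameter type. Since 2346643788 does not fit in an `int32_t` and -467.74 is not an integer, `INT32_MAX` and -467 are used as the closest values the type can carry.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical status codes for this sketch. */
enum { DIGIT_OK = 0, DIGIT_ERR_RANGE = -1, DIGIT_ERR_NULL = -2 };

/* Hypothetical unit under test: squares a digit in the range 1..9.
 * Anything outside that range is rejected with an error code. */
int digit_square(int32_t digit, int32_t *out)
{
    if (out == NULL) {
        return DIGIT_ERR_NULL;
    }
    if (digit < 1 || digit > 9) {
        return DIGIT_ERR_RANGE;
    }
    *out = digit * digit;
    return DIGIT_OK;
}

/* Expected input: the happy path. */
void test_valid_digit_is_squared(void)
{
    int32_t result = 0;
    assert(digit_square(3, &result) == DIGIT_OK);
    assert(result == 9);
}

/* Plain wrong input: the test fails unless the function fails as intended. */
void test_out_of_range_input_is_rejected(void)
{
    int32_t result = -1; /* sentinel, must stay untouched on error */
    assert(digit_square(12, &result) == DIGIT_ERR_RANGE);
    assert(digit_square(-467, &result) == DIGIT_ERR_RANGE);
    assert(digit_square(INT32_MAX, &result) == DIGIT_ERR_RANGE);
    assert(digit_square(0, &result) == DIGIT_ERR_RANGE);
    assert(result == -1);
}
```

Calling the two test functions from a `main` on the host, or from whatever test runner you end up using, is enough to run them.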

Did you mix up feet and meters, or convert that , to . the wrong way? ;)

-

Haven't done embedded. Have done TDD. Like @Voxera said, do it from the beginning.

At first it might feel like a waste of time, but later you'll realize it makes you go much faster than w/o TDD. Write tests one by one: test -> make it pass -> refactor the code if needed -> test -> ... and so on. That way you'll always have working code. And your test method names will serve as documentation, explaining what your sw does and what it doesn't!
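
To make the "test names as documentation" point concrete, here is a small sketch in C. The `byte_queue` type and its functions are made up for this example; the idea is that each test was written first, failed, and then got just enough code to pass, so the list of test names reads like a spec of the queue.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define QUEUE_CAPACITY 4u

/* Hypothetical fixed-size FIFO, the kind of unit you might grow test by test. */
typedef struct {
    uint8_t data[QUEUE_CAPACITY];
    size_t head;  /* index of the oldest element */
    size_t count; /* number of stored elements */
} byte_queue;

void queue_init(byte_queue *q)
{
    q->head = 0;
    q->count = 0;
}

bool queue_push(byte_queue *q, uint8_t value)
{
    if (q->count == QUEUE_CAPACITY) {
        return false; /* full: reject instead of overwriting */
    }
    q->data[(q->head + q->count) % QUEUE_CAPACITY] = value;
    q->count++;
    return true;
}

bool queue_pop(byte_queue *q, uint8_t *value)
{
    if (q->count == 0) {
        return false; /* empty: nothing to return */
    }
    *value = q->data[q->head];
    q->head = (q->head + 1) % QUEUE_CAPACITY;
    q->count--;
    return true;
}

/* Each test name states one behaviour the queue does or does not have. */
void test_new_queue_is_empty(void)
{
    byte_queue q;
    queue_init(&q);
    assert(q.count == 0);
}

void test_pop_returns_values_in_push_order(void)
{
    byte_queue q;
    uint8_t v = 0;
    queue_init(&q);
    assert(queue_push(&q, 10));
    assert(queue_push(&q, 20));
    assert(queue_pop(&q, &v) && v == 10);
    assert(queue_pop(&q, &v) && v == 20);
}

void test_push_fails_when_queue_is_full(void)
{
    byte_queue q;
    queue_init(&q);
    for (size_t i = 0; i < QUEUE_CAPACITY; i++) {
        assert(queue_push(&q, (uint8_t)i));
    }
    assert(!queue_push(&q, 99));
}

void test_pop_fails_when_queue_is_empty(void)
{
    byte_queue q;
    uint8_t v = 0xAA; /* sentinel, must stay untouched */
    queue_init(&q);
    assert(!queue_pop(&q, &v));
    assert(v == 0xAA);
}
```

Plain `assert` is used only to keep the sketch dependency-free; a real test framework would report failures more helpfully.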

Try to maintain coverage >90%.

-

Voxera108837y @irene it's one way to get good coverage.

It's also a way to make sure working things keep working.

Every time you add something or change something there is always the risk that you break something.

If you write tests before code, nothing you already had working can break without the tests warning you.

Like every technique, it requires some discipline. In cases where I really have no clue what I need, and do a lot of experimentation and major changes to see what works, I mostly skip it.

But once I have some idea of what the interface for the code should look like, I try to use TDD.

The tests not only verify the code, they are a good test of the interface.

If the tests require a lot of code and get complex, it's a warning that the interface might be too complex and hard to work with.

And if you still cannot find a way to make it simpler, the tests can, as mentioned, act as documentation and examples of how to call the interface.

A new dev can just find the test verifying their use case, copy it, replace the test parameters with real ones, and be up and running.

Too often I have struggled to get something working by looking at other code, only to find out there was a hidden, non-obvious setup step somewhere that I had missed.

A good test will do all initialization per test and test a single thing in each test.

That does not mean only a single assert, but a single call or concept.

If the method returns an object, I might have a dozen asserts checking the different properties instead of duplicating the test a dozen times with a different assert at the end.
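
A rough C sketch of that style: each test sets up everything it needs and exercises one call, but checks several fields of the result. The `reading` struct, the `parse_reading` function, and the four-byte frame layout are all assumptions invented for this example.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical result type. */
typedef struct {
    uint8_t sensor_id;
    int16_t temperature_centi; /* hundredths of a degree */
    bool alarm;
} reading;

/* Hypothetical parser. Assumed frame layout: [id][temp hi][temp lo][flags]. */
bool parse_reading(const uint8_t *frame, size_t len, reading *out)
{
    if (frame == NULL || out == NULL || len != 4) {
        return false;
    }
    out->sensor_id = frame[0];
    out->temperature_centi = (int16_t)(((uint16_t)frame[1] << 8) | frame[2]);
    out->alarm = (frame[3] & 0x01u) != 0;
    return true;
}

/* One call, one concept, several asserts on the returned object. */
void test_valid_frame_is_parsed(void)
{
    /* All initialization happens inside the test: no hidden setup step. */
    const uint8_t frame[4] = { 0x07, 0x09, 0xC4, 0x01 };
    reading r = { 0 };

    assert(parse_reading(frame, sizeof frame, &r));
    assert(r.sensor_id == 7);
    assert(r.temperature_centi == 2500); /* 0x09C4 */
    assert(r.alarm == true);
}

/* A separate concept gets its own test. */
void test_short_frame_is_rejected(void)
{
    const uint8_t frame[3] = { 0x07, 0x09, 0xC4 };
    reading r = { 0 };

    assert(!parse_reading(frame, sizeof frame, &r));
}
```

Because the setup lives in the test body, the first test doubles as a copy-and-paste example of how to call the parser.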

Also, if there are three required steps that cannot be used separately, I sometimes have asserts in between the steps, since making multiple tests would give little extra info, but I know that there are opinions on that ;)

-

740026937y With real hardware test fixtures or with emulation/simulation of the processor? I would be interested in the setup you end up with.

-

Voxera108837y @irene that depends on how you do it.

Unit tests should start by testing units in as small steps as possible to pinpoint a problem.

But I often have tests that check a mesh of objects together as a catch all solution.

But if unit tests are done completely TDD there should never be a problem because every unit will behave as tested and tests should test both ends of a unit.

Don't only test what you put in and get back; also test, through mocked dependencies, that it makes all the expected calls to the next layer and that, given wrong answers from the mocked dependency, it fails according to plan.

That way you have tests on both sides of the class and the tests on the inner side should mimic the tests on the corresponding outside of the dependency class.

So if class A is dependent on B, you would have tests with a mock B verifying that calls to A result in the right calls to B.

And then have tests doing the same calls to B to verify that B returns the right answers.

When you then combine A and B, it should not be possible to end up in a situation not already covered and verified by tests.
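
C has no classes, but the same idea can be sketched by passing the dependency in as a function pointer and substituting a hand-written mock in the tests. All names below are hypothetical (`temp_read_celsius` plays the role of A, `bus_read_fn` the role of B), as are the register number and the raw-to-Celsius scaling. On an embedded target, B would typically be the driver that touches real hardware, which is what lets A be tested on the host.

```c
#include <assert.h>
#include <stdint.h>

/* Dependency "B": reads a raw 16-bit value from a register, 0 on success. */
typedef int (*bus_read_fn)(uint8_t reg, uint16_t *value);

enum { TEMP_REG = 0x10 };                    /* assumed register number */
enum { TEMP_OK = 0, TEMP_ERR_BUS = -1 };

/* Unit "A": asks B for the raw temperature and converts it.
 * The divide-by-16 scaling is an assumption for this sketch. */
int temp_read_celsius(bus_read_fn read, int16_t *celsius)
{
    uint16_t raw = 0;
    if (read(TEMP_REG, &raw) != 0) {
        return TEMP_ERR_BUS;
    }
    *celsius = (int16_t)(raw / 16);
    return TEMP_OK;
}

/* Hand-written mock of B: records how it was called, returns canned data. */
static int mock_calls;
static uint8_t mock_last_reg;
static uint16_t mock_value;
static int mock_status;

static void mock_reset(uint16_t value, int status)
{
    mock_calls = 0;
    mock_last_reg = 0;
    mock_value = value;
    mock_status = status;
}

static int mock_bus_read(uint8_t reg, uint16_t *value)
{
    mock_calls++;
    mock_last_reg = reg;
    *value = mock_value;
    return mock_status;
}

/* Inner side of A: a call to A results in the right call to B. */
void test_read_requests_temperature_register(void)
{
    int16_t celsius = 0;
    mock_reset(400, 0);
    assert(temp_read_celsius(mock_bus_read, &celsius) == TEMP_OK);
    assert(mock_calls == 1);
    assert(mock_last_reg == TEMP_REG);
    assert(celsius == 25); /* 400 / 16 */
}

/* A wrong answer from B makes A fail in the planned way. */
void test_bus_failure_is_reported(void)
{
    int16_t celsius = -1; /* sentinel, must stay untouched */
    mock_reset(400, -5);
    assert(temp_read_celsius(mock_bus_read, &celsius) == TEMP_ERR_BUS);
    assert(celsius == -1);
}
```

The real bus driver would then get its own tests using the same calls (here, reading `TEMP_REG`), so both sides of the boundary are covered.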

It will require lots of tests, yes.

-

Voxera108837y @irene of course, there might be situations where it can be hard, such as race conditions in parallel code, but good tests let you focus on the overall complexity.

-

Voxera108837y @irene well, if you connect units the wrong way without a compilation error, yes, that will be a problem.

But in my experience those are rarely the hard problems, unless you have many similar classes, in which case you might reconsider the structure to try to make it clearer.

And you are right that TDD does not solve all problems, but it solves many, and the most important aspect is the protection against regressions.

Good tests make refactoring much easier and safer.

Related Rants

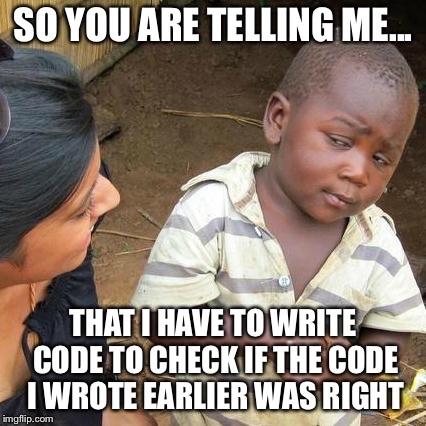

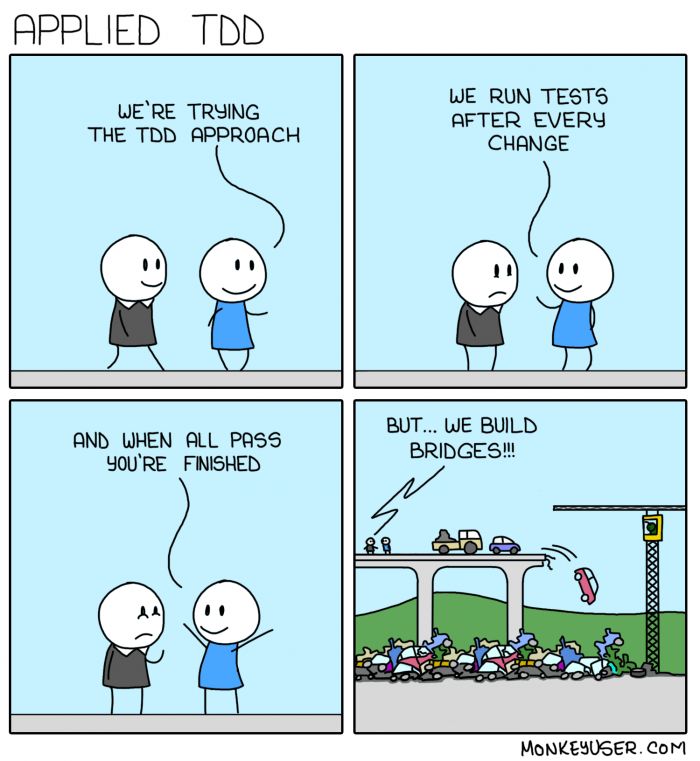

Do you follow test driven development?

Do you follow test driven development? TDD in construction 🚧

TDD in construction 🚧

I am about to try TDD for embedded C. Has anyone around here tried that? What are your experiences with it? Thanks!

question

test driven development

tdd

embedded