
Comments

-

Voxera 10880 6y

As long as you can link directly to the pages, it should work with Google.

But if the only way to view a page is through actively clicking, yes that will be a problem.

Links need to be links for Google to index them.

-

@Voxera with SPA frameworks (unless you're using server-side rendering) the client only gets an empty HTML template without any content, which the framework then fills in with JS. No content means no links either, and crawlers don't execute JS. You can add a sitemap, but all of those links will only return that empty template. SPAs are useless for bots unless they use SSR.

-

@24th-Dragon I was looking into that, and also GatsbyJS. Next.js might take some time until I get familiar with the documentation.

I'll get back to Flask with AngularJS. Fuck me.

-

@halfflat It has its advantages. SPAs feel more responsive, load once and then only fetch data, they can offer richer, more user-friendly UI. I believe with SSR one could solve the problem of heavy reliance on JS completely, I'm not sure if such implementations exist though.

-

@gronostaj Google executes JavaScript for its index, because of the many SPAs. However, having a site that effectively only works with JS is probably still not the best option.

-

inaba 4485 6y

@gronostaj You are factually wrong, though.

https://developers.google.com/searc...

> Googlebot queues all pages for rendering, unless a robots meta tag or header tells Googlebot not to index the page. The page may stay on this queue for a few seconds, but it can take longer than that. Once Googlebot's resources allow, a headless Chromium renders the page and executes the JavaScript. Googlebot parses the rendered HTML for links again and queues the URLs it finds for crawling. Googlebot also uses the rendered HTML to index the page.

Google's bot will open a page, find all the links on it, open those pages, and repeat until it has found every page it can reach. It then renders the found pages with JavaScript, finds all the links on the rendered pages, and repeats the first process.
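To make the two-queue idea concrete, here is a toy sketch of that loop. It is not how Googlebot is actually implemented; the `SITE` dictionary, the `crawl` function and the `execute_js` flag are all made up for illustration. Each fake page lists the links visible in the raw HTML and the extra links that only show up after the JavaScript has run.

```python
from collections import deque

# Hypothetical in-memory "site" (an assumption for this sketch): each URL maps
# to the links present in the raw HTML ("static") and the extra links that
# only appear once JavaScript has run ("rendered").
SITE = {
    "/": {"static": ["/about"], "rendered": ["/products"]},
    "/about": {"static": [], "rendered": []},
    "/products": {"static": [], "rendered": ["/products/1"]},
    "/products/1": {"static": ["/"], "rendered": []},
}


def crawl(start, execute_js=True):
    """Return the URLs indexed by a toy crawler, in indexing order."""
    crawl_queue = deque([start])   # pages waiting for a plain HTML fetch
    render_queue = deque()         # pages waiting to be rendered
    seen = {start}
    indexed = []

    def enqueue(links):
        # Queue every known, not-yet-seen URL for crawling.
        for url in links:
            if url in SITE and url not in seen:
                seen.add(url)
                crawl_queue.append(url)

    while crawl_queue or render_queue:
        if crawl_queue:
            # First pass: read links from the raw HTML, then queue for rendering.
            url = crawl_queue.popleft()
            enqueue(SITE[url]["static"])
            render_queue.append(url)
        else:
            # Second pass: "render" the page and pick up JS-generated links.
            url = render_queue.popleft()
            if execute_js:
                enqueue(SITE[url]["rendered"])
            indexed.append(url)
    return indexed
```

With `execute_js=True` the toy crawler reaches all four pages; with `execute_js=False` it only ever sees `/` and `/about`, which is the situation described earlier in the thread for crawlers that do not run JS.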

Voxera 10880 6y

@gronostaj Google today actually runs JS on the pages it crawls.

So if you use React Router and have the backend return the page for all relevant paths, Google should still be able to crawl it.
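A minimal sketch of what "have the backend return the page for all relevant paths" could look like, using only the standard WSGI interface so it is not tied to Flask or any other framework. The `INDEX_HTML` shell, the `NON_SPA_PREFIXES` tuple and the `app` name are assumptions for illustration, not anything from the actual store.

```python
# Hypothetical SPA shell (an assumption): the same HTML goes out for every
# client-side route, and the router in the JS bundle picks the view.
INDEX_HTML = b"""<!doctype html>
<html>
  <head><title>Store</title></head>
  <body>
    <div id="root"></div>
    <script src="/static/bundle.js"></script>
  </body>
</html>"""

# Hypothetical prefixes that are served elsewhere and must not get the shell.
NON_SPA_PREFIXES = ("/static/", "/api/")


def app(environ, start_response):
    """WSGI app that answers every page-like path with the SPA shell."""
    path = environ.get("PATH_INFO", "/")
    if path.startswith(NON_SPA_PREFIXES):
        # Assets and API calls that reach this app were not found elsewhere.
        body = b"Not found"
        start_response("404 Not Found", [
            ("Content-Type", "text/plain"),
            ("Content-Length", str(len(body))),
        ])
        return [body]
    # Deep links such as /products/42 get a 200 and the shell, so a direct
    # request to that URL works instead of returning a 404.
    start_response("200 OK", [
        ("Content-Type", "text/html; charset=utf-8"),
        ("Content-Length", str(len(INDEX_HTML))),
    ])
    return [INDEX_HTML]
```

For a local try-out, `app` can be passed to `wsgiref.simple_server.make_server("127.0.0.1", 8000, app)` from the standard library. The point of the catch-all is only that every URL in the sitemap answers with a real page when requested directly; whether the content then gets indexed still depends on the crawler rendering the JS.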

At least that is what I read in a paper they released a couple of years ago.

So you should not need server side rendering.

https://google.se/amp/s/...

eddgr 0 6y

Have you looked into nfl-helmet? It creates the necessary title and meta description tags for pages to be crawled in React.

Related Rants

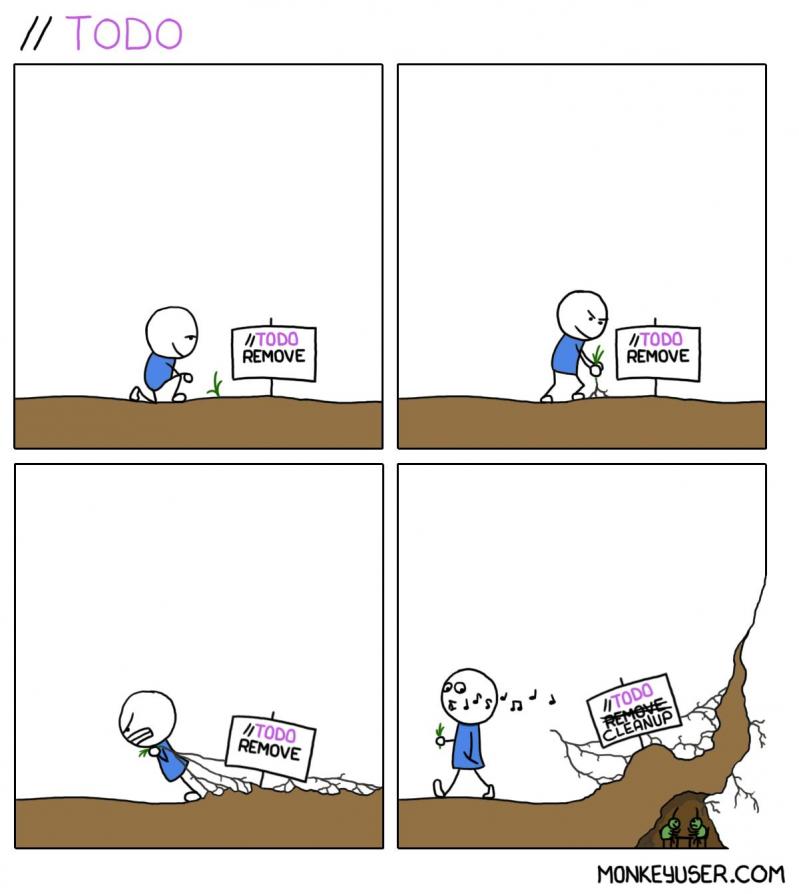

//TODO: Laugh

//TODO: Laugh Nike's robots.txt says "just crawl it"

Nike's robots.txt says "just crawl it" Fucking react programmers

Fucking react programmers

Me: Hey SEO guy. I am migrating our online store from Flask/jQuery to ReactJS.

SEO guy: That is amazing. Google LOVES ReactJS and it will crawl the site very fast.

*fast forward*

SEO guy: Hey, did you change anything on the site? It is not ranking on Google anymore. The URLs are dynamically generated in the front end. Google does not like that.

Me: But you said that Google loves React. It took me nearly 1 month to migrate the code to React.

Fucking hell.

rant

reactjs

seo