
Comments

Only for "You need JavaScript to use this site" or "Click here for the non-JS version" sorta things.

Root: Yes. Everything should degrade gracefully, and that's not limited to just websites.

Also: I have JavaScript disabled by default because I seriously distrust the JavaScript ecosystem. It's far too easy to accidentally include malicious code in your JS project and not even realize it. There's also the whole spying and tracking norm I'd rather avoid.

Yes, it is, because people use the NoScript browser extension to intentionally disable JS.

If your JS is doing something non-essential, <noscript> is not needed, just live without the script. Otherwise, place some nicely styled <noscript> message right where a functionality cannot work. That lets the page work as far as possible without JS.

Oh, and don't even think of relying on JS for your main navigation!

RobbieGM: @TheCommoner282 I can agree with not bothering to support users of an extension that is designed to break websites, but accessibility is pretty important. The number of people with various disabilities is not that low.

@TheCommoner282 That's what the devs at Domino's thought, too. And then Domino's was dragged into court over their inaccessible piece of trash website, and lost. That's why.

Also, NoScript doesn't break websites. Websites are accessible by default. YOU break them, you as dev.

RobbieGM: @Fast-Nop I agree that websites are accessible by default, but NoScript does break websites. It's no secret that JavaScript is required for most websites to work. But if you want to go back to the stone age of doing everything through forms (rather awful UX, due to constant page reloading and no client-side rendering), you go right ahead.

@RobbieGM Most websites require JS for no good reason at all except the usual gross incompetence of frontend devs, and that's a reason, but not a good one.

Of course, interactive widgets won't work without JS, and that's OK, but what I mean is "nothing works at all", which is plain bad.

I've seen shit like an onclick handler in JS that changes the document URL instead of using an anchor tag.

RobbieGM: Interesting that you think writing web apps as single-page, client-rendered applications has no good use case and is only a result of "gross incompetence."

@RobbieGM A web app counts as a big interactive widget; I added that later to clarify.

But you even see news articles where the whole page stays blank without JS, and that's gross incompetence.

@TheCommoner282 Apps are widgets by definition because they're supposed to be dynamic, and that's the use case for JS. Something like devRant itself would be an example.

But! Just abusing JS for displaying static content like news articles is moronic. A news article isn't an app.

Also, if you're adding accessibility as an afterthought, it becomes expensive because that's the wrong way of designing it. Legal requirements are there to get companies to do it the right way. Not complying will get the company into court AGAIN, and it becomes more expensive - until the company gets it.

A frontend dev these days has to know about WCAG 2.1 and design his stuff accordingly. That's also basics of the craft.

But given, e.g., the shitty design trend of light grey fonts on white backgrounds, even sighted users are struggling.
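The light-grey-on-white complaint can be checked numerically. WCAG 2.1 defines a contrast ratio from the relative luminance of two colours and, at level AA, asks for at least 4.5:1 for normal-size text. Below is a minimal JavaScript sketch of that formula, assuming plain `#rrggbb` input; the function names are made up for illustration and are not from any library.

```javascript
// WCAG 2.1 contrast ratio between two #rrggbb colours (sketch; other formats not handled).
function channelToLinear(value) {
  const c = value / 255;
  // sRGB transfer curve as written in WCAG 2.1 (threshold 0.03928).
  return c <= 0.03928 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
}

function relativeLuminance(hex) {
  const match = /^#([0-9a-f]{2})([0-9a-f]{2})([0-9a-f]{2})$/i.exec(hex);
  if (!match) throw new Error(`Expected #rrggbb, got ${hex}`);
  const [r, g, b] = match.slice(1).map((h) => channelToLinear(Number.parseInt(h, 16)));
  return 0.2126 * r + 0.7152 * g + 0.0722 * b;
}

function contrastRatio(foreground, background) {
  const a = relativeLuminance(foreground);
  const b = relativeLuminance(background);
  const [lighter, darker] = a >= b ? [a, b] : [b, a];
  return (lighter + 0.05) / (darker + 0.05);
}

// Level AA for normal-size text requires at least 4.5:1.
const passesAA = (foreground, background) => contrastRatio(foreground, background) >= 4.5;

console.log(contrastRatio("#999999", "#ffffff").toFixed(2)); // 2.85
console.log(passesAA("#999999", "#ffffff")); // false
```

For reference, `#767676` is roughly the lightest neutral grey that still clears 4.5:1 on pure white, so anything paler fails AA for body text.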

RobbieGM: Well, you could use a framework like Next.js to handle getting the same content rendered client side and server side, but I know not everyone does that. Also, in the US you can get sued under the ADA if you have 15 or more employees, just FYI.

@TheCommoner282 Legal requirements are black and white. If you don't get this, talk to the legal department of your company. With your misguided stance, you are increasingly becoming a liability for your company.

"Going with the time" means introducing tons of useless JS for you. And then wondering why that stupid shit loads and parses slow as hell. Yeah, because you are misusing JS to replicate HTML, and probably also CSS, that's why.

@TheCommoner282 You're the one full of shit, namely the typical grossly incompetent frontend dev. You risk legal problems for your company, you bloat websites needlessly with JS.

From what you said here, you are an example for frontend shitheads. The only reason why you don't understand this is because you're on the low end of the Dunning-Kruger effect.

And yeah, it won't astonish anyone that your shitty creations don't display anything without JS.

Sumafu: @TheCommoner282 so you are not a front-end developer, but you presume to talk about good and bad behavior in front-end development?

@TheCommoner282 The bigger picture are ever more bloated websites which bleed money because users just bounce away, companies dragged into court and losing over their trash sites, and you being too stupid to even understand the concept behind Dunning-Kruger.

@TheCommoner282 It should - because I have solid arguments while you have just nonsense handwaving. Come on, entertain me a little more. I'm curious how you will secure your "idiot of the month" title.

How about "arguing" that functional stuff on the web is hopelessly outdated and that the modern web is meant to be unusable shit?

@TheCommoner282 The DOM argument is true - for complex applications like Facebook. Otherwise, the reason for spaghetti is that many controls impact many other controls, which makes a confusing UI anyway.

- More bandwidth. You argued for even ajaxing in static content, which renders the browser cache useless.

- Slower load time because more pieces are loaded and pieced together.

- More battery drain because of useless JS being downloaded and run.

- PWAs suck for anything even slightly complex. Just compare an Electron editor with Notepad++.

- Single-page design breaks bookmarking. Ever tried to scroll far down a subreddit? It takes an eternity, AND you can't even bookmark it like with pagination.

- Often used to "lazy load", which means that instead of eliminating the bloat, they try to lessen its effects. And when you scroll down, you still have the shit to load, only now with more delay.

RobbieGM:

- Ajaxing in static content does not render the browser cache useless. Properly built RESTful APIs will allow the browser to use its built-in cache, and GraphQL API results can be cached by the client using something like Apollo (which is not so lightweight) or URQL (which is, but has inferior caching).

- Client-side rendering != larger page load time. Bloated scripting is not caused by how you render your pages. There are many lightweight and well-built PWAs out there (like dev.to) as well as crappy static sites (dailymail.co.uk). Not everything is "pieced together" in a waterfall fashion.

- Bloat != CSR. Yes we should try to avoid bloating pages with unnecessary JS, but that doesn't mean that all JS is bad or all frontend frameworks are bad and we need to go back to the days of CGI.

- What? I can name one Electron editor that is quite fast and far more fully featured than Notepad++. Yes, it's VSCode.
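The first point relies on ordinary HTTP caching working for API responses too. One common mechanism is an `ETag` validator: the browser sends it back in `If-None-Match`, and the server answers `304 Not Modified` with an empty body when nothing changed. A minimal, framework-free sketch follows; the `respond` helper, the FNV-1a hash used as the validator, and the assumption that header names arrive lower-cased (as in Node's `req.headers`) are illustrative choices, and weak validators (`W/"..."`) and `If-None-Match: *` are not handled.

```javascript
// Sketch of ETag-based revalidation for a JSON API response. `respond` is a
// hypothetical handler; header names in `requestHeaders` are assumed lower-case.

// FNV-1a (32-bit) over UTF-16 code units: a cheap, non-cryptographic validator.
function fnv1a(str) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < str.length; i++) {
    hash ^= str.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash.toString(16);
}

function respond(resource, requestHeaders) {
  const body = JSON.stringify(resource);
  const etag = `"${fnv1a(body)}"`;
  const headers = {
    "Content-Type": "application/json",
    // Let the browser store the body, but revalidate with the server before each reuse.
    "Cache-Control": "no-cache",
    ETag: etag,
  };
  const ifNoneMatch = requestHeaders["if-none-match"];
  const tags = ifNoneMatch ? ifNoneMatch.split(",").map((t) => t.trim()) : [];
  if (tags.includes(etag)) {
    // Nothing changed: send no body, the browser reuses its cached copy.
    return { status: 304, headers, body: "" };
  }
  return { status: 200, headers, body };
}

const article = { id: 42, title: "Is <noscript> still necessary?" };
const first = respond(article, {});
const second = respond(article, { "if-none-match": first.headers.ETag });
console.log(first.status, second.status); // 200 304
```

A 304 still costs a round trip, but no payload is transferred; with a `max-age` instead of `no-cache`, the browser could skip even that round trip until the entry expires.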

RobbieGM: (continued)

- If you do it improperly, yeah. But good SPAs will store the right data in the URL and use it to load the right content when loading the page. Just look at vue-router, react-router, reach-router, etc.

- Would you rather lazy load and get the most important stuff in 2 seconds, with the other stuff taking an additional 2 seconds so that a couple of widgets may not be loaded yet, or take the full 4 seconds to load the page? This one sounds like a no-brainer to me.
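The first point, storing view state in the URL so that bookmarks and reloads restore the same content, can be sketched with the standard `URLSearchParams` and `history.pushState` APIs. The state fields (`page`, `sort`, `q`) and the helper names are hypothetical; a real router such as the ones named above would also listen for `popstate` to handle the back button.

```javascript
// Sketch: keep list-view state in the query string so a bookmarked or reloaded URL
// restores the same view. The field names and defaults are hypothetical.
const DEFAULTS = { page: 1, sort: "new", q: "" };

// Serialize state to "?key=value&...", omitting defaults to keep URLs short.
function stateToSearch(state) {
  const params = new URLSearchParams();
  for (const [key, value] of Object.entries(state)) {
    if (value !== DEFAULTS[key]) params.set(key, String(value));
  }
  const query = params.toString();
  return query ? `?${query}` : "";
}

// Parse a query string back into state, falling back to defaults for bad input.
function searchToState(search) {
  const params = new URLSearchParams(search);
  const page = Number.parseInt(params.get("page") ?? "", 10);
  return {
    page: Number.isInteger(page) && page > 0 ? page : DEFAULTS.page,
    sort: params.get("sort") ?? DEFAULTS.sort,
    q: params.get("q") ?? DEFAULTS.q,
  };
}

// Browser only: update the address bar without a reload. On initial page load,
// the app would call searchToState(window.location.search) to restore the view.
function navigateTo(state) {
  if (typeof window === "undefined") return; // e.g. when running under Node
  const url = window.location.pathname + stateToSearch(state);
  window.history.pushState(state, "", url);
}

const search = stateToSearch({ page: 3, sort: "top", q: "noscript" });
console.log(search); // ?page=3&sort=top&q=noscript
```

The serialization is the part that has to be done deliberately; `pushState` itself is a single call.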

@RobbieGM

- Basically, even more bloat just to replicate what the browser could do without effort: inner platform effect looming.

- Using JS to fetch the pieces and render does take longer than using static markup for static content. Not least because the DOM has to be modified, and that's never fast.

- VSCode is dog slow compared to Notepad++. No wonder, because Notepad++ is written in C++.

- That would require devs to manually emulate the native URL behaviour, which most don't, and it's again additional effort just to replicate native browser behaviour.

- I would rather eliminate the bloat. 3MB for 500 bytes of text is downright crazy.

- Don't even get me started on the rampant abuse of JS to do not only HTML's job, but even CSS's.

Root: @TheCommoner282

Someone removes leftpad.

A dependency of a dependency of a dependency of Vue injects a keylogger that flows unnoticed into downstream projects.

You introduce a subtle flaw into your navigation js, rendering the site unusable for 5% of your users (like my router!). It takes a month of debugging to find and fix. If you do not fix it, you increase your legal liability surface, but if you do it's expensive and it doesn't justify 5% more sales.

Also why the hell are you only serving json from your backend? Converting is trivial; storing both even moreso.
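Serving both formats from one backend is mostly a matter of content negotiation on the `Accept` header: a plain browser navigation gets HTML that works without JS, while a script can ask for JSON. The sketch below assumes a record with `title` and `text` fields (hypothetical), honours `q` values in a simplified way, and ignores other media-type parameters; it is not a complete RFC 9110 implementation.

```javascript
// Sketch: one handler, two representations. Browsers navigating normally get HTML
// that works without JS; scripts asking for JSON get JSON. Simplified Accept parsing.

// Return the best of `offered` for an Accept header, or null (=> 406 Not Acceptable).
function preferredType(accept, offered) {
  if (!accept) return offered[0]; // no Accept header: any type is acceptable
  const ranges = accept.split(",").map((part) => {
    const [type, ...params] = part.split(";").map((s) => s.trim());
    const qParam = params.find((p) => p.startsWith("q="));
    const q = qParam ? Number.parseFloat(qParam.slice(2)) : 1;
    return { type: type.toLowerCase(), q: Number.isFinite(q) ? q : 0 };
  });
  let best = null;
  let bestQ = 0;
  for (const type of offered) {
    const main = type.split("/")[0];
    // The most specific matching range decides: "a/b" over "a/*" over "*/*".
    const range =
      ranges.find((r) => r.type === type) ||
      ranges.find((r) => r.type === `${main}/*`) ||
      ranges.find((r) => r.type === "*/*");
    if (range && range.q > bestQ) {
      best = type;
      bestQ = range.q;
    }
  }
  return best;
}

const ESCAPES = { "&": "&amp;", "<": "&lt;", ">": "&gt;", '"': "&quot;", "'": "&#39;" };
const escapeHtml = (s) => String(s).replace(/[&<>"']/g, (c) => ESCAPES[c]);

// `record` is assumed to have `title` and `text` fields.
function render(record, accept) {
  const type = preferredType(accept, ["text/html", "application/json"]);
  if (type === "application/json") return { status: 200, type, body: JSON.stringify(record) };
  if (type === "text/html") {
    const body = `<article><h1>${escapeHtml(record.title)}</h1><p>${escapeHtml(record.text)}</p></article>`;
    return { status: 200, type, body };
  }
  return { status: 406, type: null, body: "" };
}

const post = { title: "JS & <noscript>", text: "Degrade gracefully." };
console.log(render(post, "text/html,application/xhtml+xml,*/*;q=0.8").type); // text/html
console.log(render(post, "application/json").type); // application/json
```

Escaping on the HTML path matters as much as the negotiation itself; the JSON path can hand the same record to client-side code unchanged.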

To be clear, I'm not against JS in general, but I am absolutely against using it for everything. It's a magic hammer that makes everything look like a nail. Also, integrating the graceful degradation approach is exceedingly difficult to do in an established (large) project; it's something you should have in mind when you start. It's a design pattern. I also don't expect any of this to change your mind; you seem pretty set in your ways.

"Less bandwidth": your 2 MB JS bundle would take a lot of markup savings to realize this point. Also, it's better to focus on responsively serving smaller (compressed) images for larger bandwidth (and hosting cost) savings.

"A modern environment allows for a more functional immutable approach." That's great! HTML is also completely immutable 😉. But seriously, this is circular logic. You don't need immutability and trivially recreating Vue objects if you don't use Vue.

There's also the argument of wanting to know what your computer is doing, and with the web, that's not really possible anymore. We live in times where not even the developer knows what their code is doing anymore, let alone what it will be doing next month.

Also, even Gmail has an HTML-only version. Apparently the profit-minded company decided it was worthwhile to maintain, and I'm glad, because Google decided to permanently break the JS version on my computer, even when I allow all JS.

RobbieGM: @Fast-Nop a lot of what you're saying is true for small applications or simple static sites. But when it comes to larger ones, the benefits of this extra JS start to become clear:

- GraphQL caching can be more effective than HTTP caching since it is more granular. For example, you could fetch relations on an object in one query and then run a completely different query fetching the same relations and the relations could be cached from the first query while other stuff is loaded from the server.

- If you have one app shell and load the content inside separately, you can cache the app shell to make subsequent page loads really fast. And AFAIK DOM modification is rarely slow, but feel free to find me an example.

- Dog slow? Never experienced that. After startup, which only takes a couple of seconds, everything is pretty much instant.
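The granularity argument in the first point comes from normalization: the client cache stores each object once under a key such as `Typename:id`, so a second, different query that touches the same objects can be answered partly from cache. The toy class below only illustrates that idea; it is not the API of Apollo Client or urql, and it skips query parsing, arguments, and garbage collection.

```javascript
// Toy normalized cache: every object with __typename and id is stored once under
// "Typename:id"; parents hold { __ref } pointers. Illustrative only, not a real client API.
class NormalizedCache {
  constructor() {
    this.entities = new Map();
  }

  // Store a query result, returning the normalized value (refs instead of objects).
  write(value) {
    if (Array.isArray(value)) return value.map((item) => this.write(item));
    if (value === null || typeof value !== "object") return value;
    const fields = {};
    for (const [name, child] of Object.entries(value)) fields[name] = this.write(child);
    if (value.__typename === undefined || value.id === undefined) return fields;
    const key = `${value.__typename}:${value.id}`;
    // Merge, so fields fetched by different queries accumulate on one entity.
    this.entities.set(key, { ...this.entities.get(key), ...fields });
    return { __ref: key };
  }

  // Return the requested fields of one entity, or undefined on any cache miss.
  read(typename, id, fieldNames) {
    const entity = this.entities.get(`${typename}:${id}`);
    if (!entity) return undefined;
    const result = {};
    for (const name of fieldNames) {
      if (!(name in entity)) return undefined; // must ask the server
      result[name] = entity[name];
    }
    return result;
  }
}

const cache = new NormalizedCache();
// Result of a first (hypothetical) query: a user together with their posts.
cache.write({
  __typename: "User",
  id: 1,
  name: "RobbieGM",
  posts: [{ __typename: "Post", id: 7, title: "SPAs are fine" }],
});
// A different query for post 7's title is now answered locally...
console.log(cache.read("Post", 7, ["title"]).title); // SPAs are fine
// ...while a field no earlier query fetched is a miss and goes to the server.
console.log(cache.read("Post", 7, ["body"])); // undefined
```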

RobbieGM: (continued)

- Client-side routing is not hard. You're just a history.pushState() away from updating the URL instantly. This is not "replicating native browser behavior"--this is native browser behavior.

- The discussion was about lazy loading or not lazy loading, and your answer is "I would rather just eliminate the bloat." Of course I would like to do that too, but what if you want to add some widgets to your site without slowing down the rendering of the main content? Like a share widget? Or the part of your navigation bar that doesn't appear until the user clicks on it? Or a notification reader component? If those features are necessary, wouldn't you rather have them lazy loaded?
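Lazy loading a widget usually means deferring the module download until first use and making sure repeated or concurrent triggers do not start duplicate downloads. Below is a minimal sketch of such a memoized loader; in a browser the loader would typically wrap a dynamic `import()`, and the module path in the comment is hypothetical.

```javascript
// Memoized lazy loader: the first call starts loading, later (even concurrent) calls
// share the same promise. A failed load is forgotten so the next call can retry.
function lazy(loader) {
  let pending = null;
  return function load() {
    if (!pending) {
      pending = Promise.resolve()
        .then(loader) // also turns a synchronous throw in `loader` into a rejection
        .catch((err) => {
          pending = null;
          throw err;
        });
    }
    return pending;
  };
}

// In a browser this might be lazy(() => import("./share-widget.js")), called from a
// click handler; the module path is hypothetical. Here a fake loader counts calls.
let loads = 0;
const loadShareWidget = lazy(async () => {
  loads += 1;
  return { render: (url) => `Share ${url}` };
});

Promise.all([loadShareWidget(), loadShareWidget()]).then(([a, b]) => {
  console.log(a === b, loads); // true 1
});
```

Resetting on failure is a design choice: without it, one flaky network request would leave the widget permanently broken until a reload.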

@RobbieGM Last time I needed an interactive, JS driven widget with animation and SVG, the whole pageload was 18 kB. It's not like you have to pull in megs of JS to do things.

Also, the browser cache is uncompressed data (or has that changed?), and mobiles are pretty aggressive in evicting items. Unfortunately, mobile connections are the worst.

While bandwidth improves, latency does not, especially on mobile. That's another reason why unnecessarily piecing together many small items isn't the best idea.
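The latency argument can be made concrete with a back-of-the-envelope model: every dependent round trip costs one RTT, and the payload costs size divided by bandwidth. The inputs below (150 ms RTT, 10 Mbit/s, 30 KB for a static page versus 215 KB spread over three dependent requests for a client-rendered one, with KB meaning 1000 bytes) are assumptions chosen for illustration, not measurements, and the model ignores DNS, TCP/TLS setup, congestion control, and server time.

```javascript
// Back-of-the-envelope load time: dependent round trips cost one RTT each, and the
// payload costs size / bandwidth. Ignores DNS, TCP/TLS setup, slow start and server time.
function loadTimeMs({ rttMs, bandwidthMbps, roundTrips, totalKB }) {
  // 1 Mbit/s = 1 kbit/ms, so kilobits divided by Mbit/s gives milliseconds.
  const transferMs = (totalKB * 8) / bandwidthMbps;
  return roundTrips * rttMs + transferMs;
}

const mobile = { rttMs: 150, bandwidthMbps: 10 }; // assumed connection
// Static page: one request carrying the content (assumed 30 KB).
const staticPage = loadTimeMs({ ...mobile, roundTrips: 1, totalKB: 30 });
// Client-rendered: HTML shell, then JS bundle, then JSON (assumed 215 KB in total).
const clientRendered = loadTimeMs({ ...mobile, roundTrips: 3, totalKB: 215 });
// Same page with twice the bandwidth: only the transfer term shrinks.
const clientRenderedFaster = loadTimeMs({ ...mobile, bandwidthMbps: 20, roundTrips: 3, totalKB: 215 });

console.log(staticPage, clientRendered, clientRenderedFaster); // 174 622 536
```

Doubling the bandwidth only cuts the transfer term from 172 ms to 86 ms; the 450 ms spent on three round trips stays, which is the point about latency not improving.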

I mean, e.g. implementing a blog by ajaxing stuff together has to be one of the most absurd ideas ever. I'd go with an SSG (static site generator) instead, eliminating the JS bloat and the backend bloat at once. Fast, much less attack surface, easy win.

RobbieGM: @Fast-Nop Good. I never said that more JS = better website. I'm just saying that when you need to have more JS, lazy loading is useful.

According to https://stackoverflow.com/questions... it seems that most browsers store the data in compressed format. I would be surprised if they didn't. iOS Safari allows 50MB of cache per website before starting to clear stuff out. 50MB should be plenty.

Why do you think that piecing together small scripts into one (like with webpack) means they are all loaded in a waterfall, one after the other? They aren't. They can all be bundled together, or they can be loaded separately but concurrently.
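The difference between a waterfall and concurrent loading can be simulated with timers: awaiting each request in turn keeps at most one in flight (about three times the latency for three chunks), while `Promise.all` starts them all at once (about one latency, bandwidth permitting). In the sketch below, `makeFakeFetch` and the chunk names are hypothetical stand-ins for real network requests.

```javascript
// Simulated network: each request resolves after `ms` and updates shared stats so we
// can see how many requests overlapped. makeFakeFetch and chunk names are hypothetical.
function makeFakeFetch(stats, ms = 50) {
  return (name) => {
    stats.inFlight += 1;
    stats.peak = Math.max(stats.peak, stats.inFlight);
    return new Promise((resolve) =>
      setTimeout(() => {
        stats.inFlight -= 1;
        resolve(name);
      }, ms)
    );
  };
}

const chunks = ["vendor.js", "app.js", "widgets.js"];

// Waterfall: each request starts only after the previous one finished.
async function loadSequentially(fetchChunk, names) {
  const results = [];
  for (const name of names) results.push(await fetchChunk(name));
  return results;
}

// Concurrent: all requests start immediately; results keep the input order.
function loadConcurrently(fetchChunk, names) {
  return Promise.all(names.map((name) => fetchChunk(name)));
}

// Run one strategy against a fresh simulated network and report peak overlap.
async function peakConcurrency(strategy) {
  const stats = { inFlight: 0, peak: 0 };
  await strategy(makeFakeFetch(stats), chunks);
  return stats.peak;
}

Promise.all([peakConcurrency(loadSequentially), peakConcurrency(loadConcurrently)]).then(
  ([sequential, concurrent]) => console.log(sequential, concurrent) // 1 3
);
```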

Why not have the benefits of both? Load statically, but with scripts that make subsequent loads faster. This is what Next.js does, for example.

@RobbieGM Interesting with the browser cache, though still half a meg of compressed useless JS is a lot for 50 MB total cache.

And no matter how you do it, you have to request the pieces, which means requests to your backend, which means a lot more requests, and a lot more fudging around with the DOM. If you pull this in as JSON, converting it into markup on the client is also not in zero time.

There's no way that this would be faster than not using JS, not having dozens of superfluous DB requests, and not fudging around with the DOM.

KISS. Code you don't run will always be faster than code that you do run. Also, code that you don't have has no bugs, no security problems, no dependency risks.

Related Rants

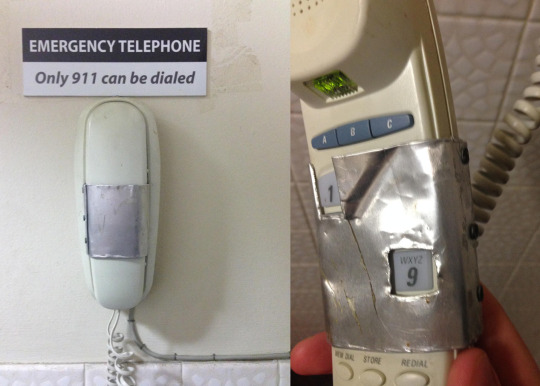

What only relying on JavaScript for HTML form input validation looks like

What only relying on JavaScript for HTML form input validation looks like Yeah no

Yeah no Hey, have you ever wanted to punch a developer 8611 times before?

Hey, have you ever wanted to punch a developer 8611 times before?

A question to all web devs here: Do you think that the <noscript> tag is necessary nowadays?

Tags: question, noscript, modern world, html