Comments

dfox: It's not the fault of the person who accidentally deleted the data in the first place, but their company as a whole failed epically, and there's no excuse for it, IMO.

Qchmqs: @dfox Their production pipeline is basically: "deploy".

This strategy is more than inept; it's idiotic.

No redundant servers, no offline backups, no disaster-recovery testing, no actual backup mechanism, and on top of that, making modifications directly on a live server.

dfox: @Qchmqs I completely agree.

I was watching their live stream and frankly found the whole thing ridiculous. A few times they tried to just play it off as something that "happens." Then someone asked a question along the lines of "Are you going to fix your backup process?" and they got pretty defensive, saying something like "We just need to monitor it better; we had a good process, but lots of stuff just failed."

I think they failed to grasp the severity of what happened. They should take whatever "process" they had, delete the entire thing, and start over from scratch.

@cheke Nope, there is a gap between "Shit happens" and "Holy shit, we could have lost everything because none of our backups were working."

Your backup solution and infrastructure should be treated as seriously as your code. It's not a matter of if the prod database gets deleted, but when. And just like your code, if it's not tested, it's broken. GitLab obviously had not tested their disaster recovery and backup solution in a very long time, if ever.
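To make "if it's not tested, it's broken" concrete for backups, here is a minimal Python sketch, assuming a SQLite database purely for illustration (this is not how GitLab's setup worked). The hypothetical `backup_and_verify` function copies the database with the standard library's `sqlite3.Connection.backup`, then only counts the backup as good if the copy passes `PRAGMA integrity_check` and a row count for one table matches the source.

```python
import sqlite3


def backup_and_verify(src: sqlite3.Connection, backup_path: str, table: str) -> bool:
    """Hypothetical helper: back up `src` to `backup_path`, then check the copy is usable.

    Assumes `table` is a trusted name (it is interpolated into SQL, not bound as a parameter).
    """
    dst = sqlite3.connect(backup_path)
    try:
        # Online, page-by-page copy of the whole source database.
        src.backup(dst)

        # Check 1: the backup must pass SQLite's own consistency check.
        (status,) = dst.execute("PRAGMA integrity_check").fetchone()
        if status != "ok":
            return False

        # Check 2: the data in the backup must match the source (here: just a row count).
        query = f"SELECT COUNT(*) FROM {table}"
        return src.execute(query).fetchone() == dst.execute(query).fetchone()
    finally:
        dst.close()
```

A real restore test would go further (restore onto a separate machine, run the application against it, and alert when the check fails), but even a check this small would flag a backup that silently produced nothing.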

Related Rants

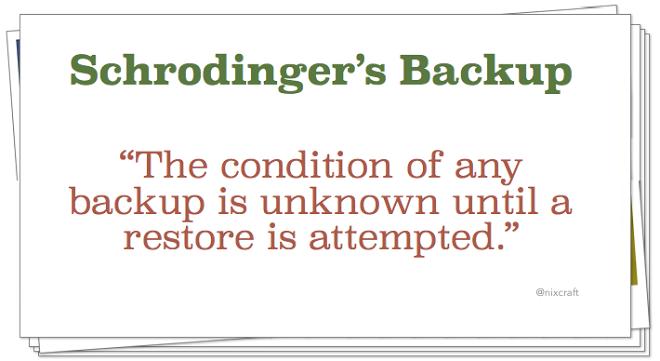

schrodinger's backup

schrodinger's backup Source:- Reddit

Source:- Reddit

Everyone is posting jokes about GitLab's recent incident and how the people responsible for it must be feeling right now.

Shit happens. Sometimes it's you accidentally deleting a branch in your repo and turning that into a major crisis; sometimes it's a huge mistake that impacts not only the whole company's business, but also its clients' work.

This situation reminds me of a famous quote from Thomas J. Watson (former IBM CEO):

"Recently, I was asked if I was going to fire an employee who made a mistake that cost the company $600,000. No, I replied, I just spent $600,000 training him. Why would I want somebody else to hire his experience?"

The people at GitLab have probably learned one of the most expensive lessons in the IT world, and I really hope they come up with a solution that not only fixes this case but also helps them prevent future occurrences.

not a joke

!rant

gitlab