Related Rants

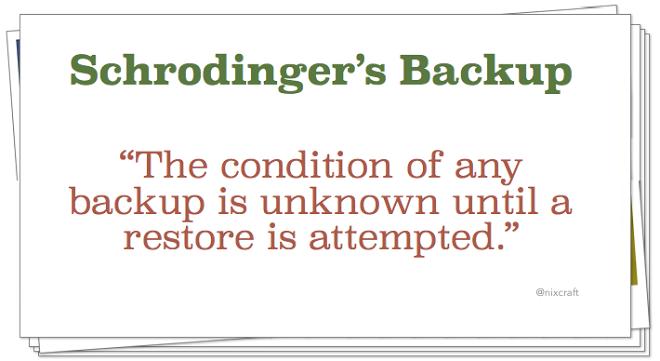

schrodinger's backup

schrodinger's backup Source:- Reddit

Source:- Reddit

Business Continuity / DR 101...

How could GitLab go down? A deleted directory? What!

A tired sysadmin should not be able to cause this much damage.

Did they have a TESTED DR plan? An untested plan is no plan. An untested plan does not count. An untested plan is an invitation to exactly what occurred.

Saying that the backups did not work does not cut it. Sorry, GitLab. Backups have to be tested thoroughly before a disruptive event, not discovered to be broken during one.

Did they do a thorough risk assessment?

We call this a 'lesson learned' in my BC/DR profession. Everyone, please learn from it.

I hope GitLab is ok.

dr

lesson learned

gitlab