Ranter

Join devRant

Do all the things like

++ or -- rants, post your own rants, comment on others' rants and build your customized dev avatar

Sign Up

Pipeless API

From the creators of devRant, Pipeless lets you power real-time personalized recommendations and activity feeds using a simple API

Learn More

Comments

-

Update:

I implemented back tracking, where if a set of mutated parameters leads to lower fitness (less results that are easily favorable after being running through the algorithm) then it discards the mutation and reloads the last set of parameters that worked.

The goal is to find a set of parameters that cover as many semiprimes as possible.

I also modularized the core of the algorithm, so I can just pass in functions as parameters, allowing me to switch up the exact formula when testing.

Also I increased the bit length of the products being factored by 50%, and put a limit on the bruteforce inner loop of a/8, where (a) is the factor we are looking for. Theres no purpose in searching for parameters that make the formula run fast, if the value of (i) that the formula relies on is itself >= a/2.

Lastly I checked before going to work, I'd achieved exactly 20% fitness on a couple parameter space variations. -

Also, I thought fitness (or the number of primes the algorithm would apply to) would drop a lot more with the restriction of a/8 instead of a/4 for bruteforcing, as well as the larger bit length for the products factories, but apparently not. In practice a/8 is the limit in this given instance, but the speed at which the algorithm returns a correct answer (a/n) is actually a lot faster. I'm talking regular gains of x16 times faster on average.

I'm thinking at super larger products (high values of i), there are "supercorrelated" modular spaces, meaning there should be m values, given the correct parameters, that like an island of stability, actually make or *faster* to factor numbers the larger the exponent.

The fitness function for some parameters could be higher now, could come across better solutions for the algorithm right now, I just dont know at the moment because I'm at my day job. -

Next steps are to distill those solutions that have a niche lower quotient (higher potential fitness) *in situ* during the generation/scoring process and see what makes them different.

I've confirmed the results are not a mirage or a bug, and they're pretty consistent so far too. I'm a little afraid of the boy-who-cried-wolf setting in but encouraged by the results I'm seeing.

If results continue to improve, I'll push the bitlength up, push the ratio needed for searching for bruteforcing down to a/32, and maybe setup a patreon if anyone is interested, organizing my results better, with toy examples, documentation, experimental setups, results posted weekly. Itd help me pay for the server compute to do bigger tests and experiments as well.

I have a lot of ideas for how to expand this and explore the concept space better, including dashboard visualizations.

Related Rants

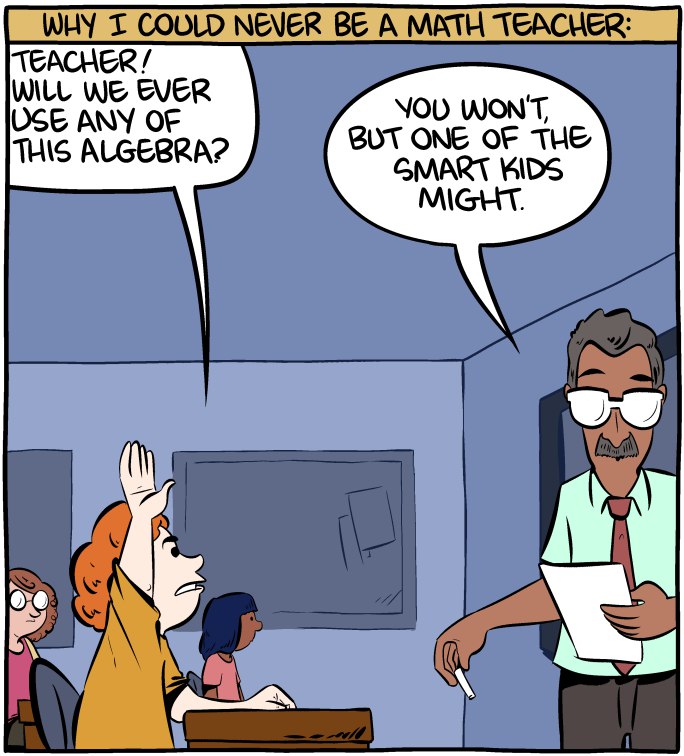

Math is hard.

Math is hard. When you wanted to know deep learning immediately

When you wanted to know deep learning immediately :)) finally a good answer

:)) finally a good answer

After learning a bit about alife I was able to write

another one. It took some false starts

to understand the problem, but afterward I was able to refactor the problem into a sort of alife that measured and carefully tweaked various variables in the simulator, as the algorithm

explored the paramater space. After a few hours of letting the thing run, it successfully returned a remainder of zero on 41.4% of semiprimes tested.

This is the bad boy right here:

tracks[14]

[15, 2731, 52, 144, 41.4]

As they say, "he ain't there yet, but he got the spirit."

A 'track' here is just a collection of critical values and a fitness score that was found given a few million runs. These variables are used as input to a factoring algorithm, attempting to factor

any number you give it. These parameters tune or configure the algorithm to try slightly different things. After some trial runs, the results are stored in the last entry in the list, and the whole process is repeated with slightly different numbers, ones that have been modified

and mutated so we can explore the space of possible parameters.

Naturally this is a bit of a hodgepodge, but the critical thing is that for each configuration of numbers representing a track (and its results), I chose the lowest fitness of three runs.

Meaning hypothetically theres room for improvement with a tweak of the core algorithm, or even modifications or mutations to the

track variables. I have no clue if this scales up to very large semiprime products, so that would be one of the next steps to test.

Fitness also doesn't account for return speed. Some of these may have a lower overall fitness, but might in fact have a lower basis

(the value of 'i' that needs to be found in order for the algorithm to return rem%a == 0) for correctly factoring a semiprime.

The key thing here is that because all the entries generated here are dependent on in an outer loop that specifies [i] must never be greater than a/4 (for whatever the lowest factor generated in this run is), we can potentially push down the value of i further with some modification.

The entire exercise took 2.1735 billion iterations (3-4 hours, wasn't paying attention) to find this particular configuration of variables for the current algorithm, but as before, I suspect I can probably push the fitness value (percentage of semiprimes covered) higher, either with a few

additional parameters, or a modification of the algorithm itself (with a necessary rerun to find another track of equivalent or greater fitness).

I'm starting to bump up to the limit of my resources, I keep hitting the ceiling in my RAD-style write->test->repeat development loop.

I'm primarily using the limited number of identities I know, my gut intuition, combine with looking at the numbers themselves, to deduce relationships as I improve these and other algorithms, instead of relying strictly on memorizing identities like most mathematicians do.

I'm thinking if I want to keep that rapid write->eval loop I'm gonna have to upgrade, or go to a server environment to keep things snappy.

I did find that "jiggling" the parameters after each trial helped to explore the parameter

space better, so I wrote some methods to do just that. But what I wouldn't mind doing

is taking this a bit of a step further, and writing some code to optimize the variables

of the jiggle method itself, by automating the observation of real-time track fitness,

and discarding those changes that lead to the system tending to find tracks with lower fitness.

I'd also like to break up the entire regime into a training vs test set, but for now

the results are pretty promising.

I knew if I kept researching I'd likely find extensions like this. Of course tested on

billions of semiprimes, instead of simply millions, or tested on very large semiprimes, the

effect might disappear, though the more i've tested, and the larger the numbers I've given it,

the more the effect has become prevalent.

Hitko suggested in the earlier thread, based on a simplification, that the original algorithm

was a tautology, but something told me for a change that I got one correct. Without that initial challenge I might have chalked this up to another false start instead of pushing through and making further breakthroughs.

I'd also like to thank all those who followed along, helped, or cheered on the madness:

In no particular order ,demolishun, scor, root, iiii, karlisk, netikras, fast-nop, hazarth, chonky-quiche, Midnight-shcode, nanobot, c0d4, jilano, kescherrant, electrineer, nomad,

vintprox, sariel, lensflare, jeeper.

The original write up for the ideas behind the concept can be found at:

https://devrant.com/rants/7650612/...

If I left your name out, you better speak up, theres only so many invitations to the orgy.

Firecode already says we're past max capacity!

random

math