Join devRant

Do all the things like

++ or -- rants, post your own rants, comment on others' rants and build your customized dev avatar

Sign Up

Pipeless API

From the creators of devRant, Pipeless lets you power real-time personalized recommendations and activity feeds using a simple API

Learn More

Related Rants

Machine Learning messed up!

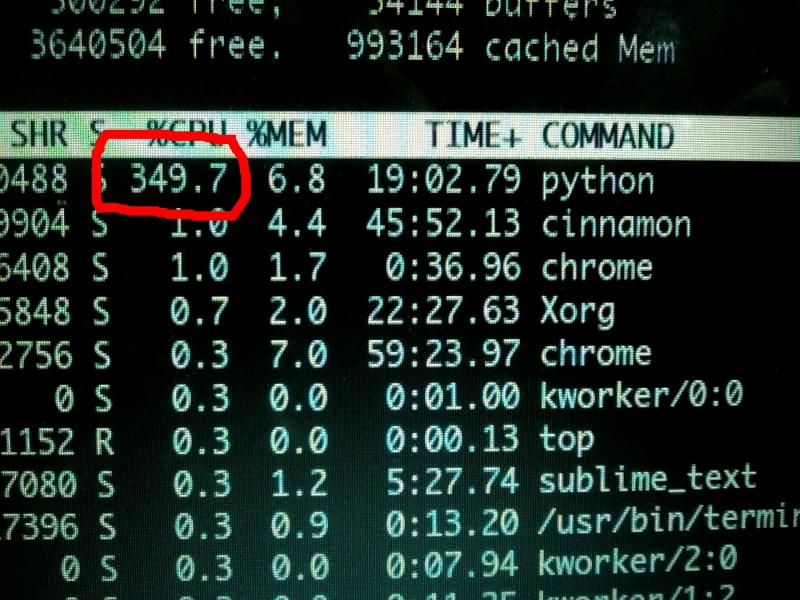

Machine Learning messed up! When your CPU is motivated and gives more than his 100%

When your CPU is motivated and gives more than his 100% What is machine learning?

What is machine learning?

Holy duck, I lost two days on a convolutional autoencoder splitted in two separate neural networks to encode and decode separately, it reconstruction had some strange behaviours. I was giving as input an image and then saving the encoded compressed representation in a new image, in this way I could decode it with the decoder whenever I want saving space.

How much retarded am I?

The internal layer's weights hadn't constraints so in learning phase the convolutional filters can contain any number, positive > 255 or even negative and I cannot save it in a new image as they are so they were clipped automatically between 0 and 255 with an huge information loss.

It's so frustrating when you rewrite the code in any possible way, you obtain the same wrong result and then you realize that was a borderline behaviour of a third part library.

undefined

convolution

dimensionality reduction

rbg

autoencoder

machine learning

255

neural networks

image processing