Join devRant

Do all the things like

++ or -- rants, post your own rants, comment on others' rants and build your customized dev avatar

Sign Up

Pipeless API

From the creators of devRant, Pipeless lets you power real-time personalized recommendations and activity feeds using a simple API

Learn More

Related Rants

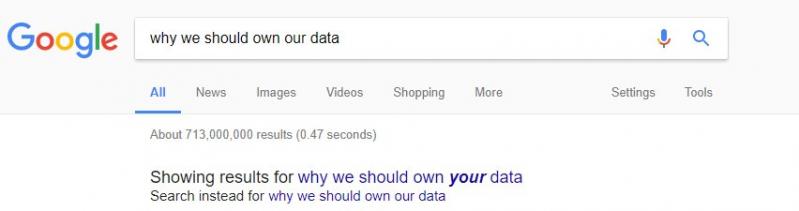

Creepy AF!

Creepy AF!

One thing I've learned from working with a ton of data:

If there is a slight, infinitesimal probability that something will be wrong, then it will 100% be wrong.

Never assume data is consistent when you're dealing with tens of gigabytes of it, unless it has already been sanitized somewhere upstream.

I've already seen it all:

* Duplicates where I've been assured "these are unique"

* Text fields that supposedly contain exclusively numeric values will always have some non-numeric values as well

* There will be negative numbers in "number sequences starting with 1"

* There will be dates in the future, and in the far, far future, like the year 20115.

* Even if you have 200k customers, there will be a customer ID that will cause an integer overflow.

Don't trust anything. Always check and question everything.
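
For what it's worth, here is a minimal sketch of what "check everything" can look like in practice. It is only an illustration, not a full validation framework: the field names (`customer_id`, `seq`, `created`), the `audit_rows` helper, and the assumption that IDs must fit in a signed 32-bit integer are all made up for the example.

```python
from collections import Counter
from datetime import date

INT32_MAX = 2**31 - 1  # assumption: IDs end up in a signed 32-bit column


def audit_rows(rows, today):
    """Count how often each 'guaranteed' property is violated.

    rows: iterable of dicts with hypothetical keys
          customer_id (text), seq (int), created (text, YYYY-MM-DD).
    """
    problems = Counter()
    seen_ids = set()
    for row in rows:
        cid = row["customer_id"]

        # "These are unique"
        if cid in seen_ids:
            problems["duplicate_id"] += 1
        seen_ids.add(cid)

        # "This text field only contains numbers"
        if not (cid.isascii() and cid.isdigit()):
            problems["non_numeric_id"] += 1
        # "We only have 200k customers, the IDs will fit"
        elif int(cid) > INT32_MAX:
            problems["id_overflows_int32"] += 1

        # "The sequence starts with 1"
        if row["seq"] < 1:
            problems["seq_below_1"] += 1

        # "Dates are always in the past"
        try:
            created = date.fromisoformat(row["created"])
        except ValueError:
            # Covers garbage as well as years beyond 9999
            problems["unparseable_date"] += 1
        else:
            if created > today:
                problems["future_date"] += 1
    return problems
```

Run something like this over the whole dump before doing any real work with it, and look at the counts. Counting instead of raising on the first bad row is a deliberate choice here: with this much data you usually want to know how bad it is, not just that it is bad.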
