Search - "data"

-

The highest data transfer rate today - 256 gigabytes per second - was achieved when the cleaner's vacuum cleaner accidentally sucked the flash drive in from the floor.

-

I’ve been thinking lately, what is it that devRant devs do?

So after a couple of days of pulling data from devRant's APIs and filtering through the inconsistent skill set data of about 500 users (seriously guys, the comma is your friend), I’ve found an interesting set of languages being used by everyone.

I've limited this to just languages, as delving into frameworks, libraries and everything else just grows exponentially. Also, I've only included languages with at least 5 users out of the pool.

Sorry, you brainfuck guys.

-

Professor essentially doing a dance to show data visualisation:

“Hey pay attention! I’m trading my dignity for your education”

-

Was recently phone interviewed by a recruiter who asked "So do you know data structures and algorithms?" I replied "That's like asking someone if they know Mathematics - can you be a bit more specific?"

-

PM used his wife's data in testing against legacy data.

Discovered she had different social number before they met.

Apparently she was also a he.....

Might be an interesting evening for him.

-

When you have to figure out whether it's better to transfer 85GB of data via internet or to load it onto a hard drive and drive it 600 km / 370 miles in your car.
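For a rough feel of the trade-off, here is a back-of-the-envelope sketch in Python. The 85 GB and 600 km come from the rant; the average driving speed, the use of decimal gigabytes, and ignoring the time needed to copy data onto the drive are all assumptions made for illustration.

```python
# Sneakernet vs. upload: which moves 85 GB faster?
# From the rant: 85 GB, 600 km. Assumed: 100 km/h average speed,
# 1 GB = 1000 MB, and no time spent copying data onto the drive.

DATA_GB = 85
DISTANCE_KM = 600
AVG_SPEED_KMH = 100  # hypothetical; adjust for your route


def drive_seconds(distance_km: float, speed_kmh: float) -> float:
    """Travel time in seconds at a constant average speed."""
    return distance_km / speed_kmh * 3600


def effective_mbit_per_s(data_gb: float, seconds: float) -> float:
    """Throughput in megabits per second for moving data_gb in `seconds`."""
    return data_gb * 1000 * 8 / seconds


trip_s = drive_seconds(DISTANCE_KM, AVG_SPEED_KMH)
break_even = effective_mbit_per_s(DATA_GB, trip_s)

print(f"Drive time: {trip_s / 3600:.1f} h")
print(f"The car is equivalent to a {break_even:.1f} Mbit/s link")
```

Under these assumptions the car only loses if the connection can sustain more than the printed rate for the whole transfer.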

-

!rant

Yesterday I updated my LinkedIn title to Data scientist.

This morning I got 3 phone calls for job offers.

-

At the data restaurant:

Chef: Our freezer is broken and our pots and pans are rusty. We need to refactor our kitchen.

Manager: Bring me a detailed plan on why we need each piece of equipment, what we can do with each, three price estimates for each item from different vendors, a business case for the technical activities required and an extremely detailed timeline. Oh, and do not stop doing your job while doing all this paperwork.

Chef: ...

Boss: ...

Some time later a customer gets to the restaurant.

Waiter: This VIP wants a burger.

Boss: Go make the burger!

Chef: Our frying pan is rusty and we do not have most of the ingredients. I told you we need to refactor our kitchen. And that I cannot work while doing that mountain of paperwork you wanted!

Boss: Let's do it like this, fix the tech mumbo jumbo just enough to make this VIP's burger. Then we can talk about the rest.

The chef then runs to the grocery store and back and prepares to make a hurried, health-hazard burger with a rusty pan.

Waiter: We got six more clients waiting.

Boss: They are hungry! Stop whatever useless nonsense you were doing and cook their requests!

Cook: Stop cooking the order of the client who got here first?

Boss: The others are urgent!

Cook: This one had said so as well, but fine. What do they want?

Waiter: Two more burgers, a new kind of modern gaseous dessert, two whole chickens and an eleven seat sofa.

Chef: Why would they even ask for a sofa?!? We are a restaurant!

Boss: They don't care about your Linux techno bullshit! They just want their orders!

Cook: Their orders make no sense!

Boss: You know nothing about the client's needs!

Cook: ...

Boss: ...

That is how I feel every time I have to deal with a boss who can't tell a PostgreSQL database from a robots.txt file.

Or every time someone assumes we have a pristine SQL table with every single column imaginable.

Or that a couple hundred terabytes of cold storage data must be scanned entirely in a fraction of a second on a shoestring budget.

Or that years of never stored historical data can be retrieved from the limbo.

Or when I'm told that refactoring has no ROI.

Fuck data stack cluelessness.

Fuck clients that lack basic logical skills.

-

Did you know?

This rant is a part of the 3 quintillion bytes of data that the world generated today.

-

I've dreamed of learning business intelligence and handling big data.

So I went to a university info event today for "MAS Data Science".

Everything sounded great. Finding insights out of complex datasets, check! Great possibilities and salary.

Yay! 😀

Only after an hour did they explain that the main focus of this course is on leading a library, museum or an archive. 😟 huh why? WTF?

Turns out, they've relabeled their librarian education course to Data science to get people's attention.

Hey you cocksuckers! I want my 2 hours of wasted lifetime back!

Fuck this "english title whitewashing"

-

"Big data" and "machine learning" are such big buzz words. Employers be like "we want this! Can you use this?" but they give you shitty, ancient PC's and messy MESSY data. Oh? You want to know why it's taken me five weeks to clean data and run ML algorithms? Have you seen how bad your data is? Are you aware of the lack of standardisation? DO YOU KNOW HOW MANY PEOPLE HAVE MISSPELLED "information"?!!! I DIDN'T EVEN KNOW THERE WERE MORE THAN 15 WAYS OF MISSPELLING IT!!! I HAD TO MAKE MY OWN GODDAMN DICTIONARY!!! YOU EVER FELT THE PAIN OF TRAINING A CLASSIFIER FOR 4 DAYS STRAIGHT THEN YOUR GODDAMN DEVICE CRASHES LOSING ALL YOUR TRAINED MODELS?!!

*cries*

-

$50,000+ Computer Science fucking diploma, yet here I am on a Sunday evening doing data entry coz there is no one else to do it!

-

The public seems to be worried a lot about the Facebook "data breach" yet doesn't bat an eye at a bigger website that has already been selling private data for more than a decade.

Google

-

Hey guys

So, why so many rants about Facebook now?

I've known for a few years that Facebook sells our data.... Didn't anyone else know before that stupid #deletefacebook campaign?

-

Our Service Oriented Architecture team is writing very next-level things, such as JSON services that pass data like this:

<JSON>

<Data>

...

</Data>

</JSON>

-

Couple days ago found the DisneyResearch channel again and it's really addictive, impressive and scary to watch, here's an example: https://youtu.be/E4tYpXVTjxA

Wonder how much data could be extracted from Disney World/Land visitors with that in the food service areas.

-

I just deleted 3.5TB of junk data from S3, effectively saving my company about 88 dollars.

I feel so fucking good.

Think I'm going to ask for a raise😂

-

My TEN YEAR OLD twin girls came to me with a TIMESHEET and PIE CHARTS to explain to me why "Our household would benefiter (sic) a Nintendo Switch".

They... actually did what for an intern would be a passable data storytelling job (orthographic errors aside).

They explained how they would share the videogame between themselves (because it is not allowed at their school, not that we would let them bring it there anyway) in a colorful timesheet spanning four days a week.

They even put a pie chart showing how most of the time nobody will be using it.

I feel at the same time immensely proud, scared, and a wee bit freaked out that they came with all that to me but with their mother they just talked. Do I seem so distant that they feel they can't convince me without data? I gotta watch out for using work jargon at home.

Anyway, first "interns" that I have ever seen using a pie chart with the appropriate number of classes (even if highly biased).

-

Let me repeat this out loud so that we are clear before another idiot starts pitching to me on building their "world class machine learning algorithm":

NO DATA, NO MACHINE LEARNING!

-

WHAT THE FUCK IS WRONG WITH YOU APPLE?! THAT'S A SIXTH OF MY MOBILE DATA! WHERE IS THIS MUCH USAGE COMING FROM?????

-

Data Engineering cycle of hell:

1) Receive a "beyond urgent" request for a "quick and easy" "one time only" data need.

2) Do it fast using spaghetti code and manual platforms and methods.

3) Go do something else for a time period, until receiving the same request again accompanied by some excuse about "why we need it again just this once"

4) Repeat step 3 until this "only once" process is required to prevent the sun from collapsing into a black hole

5) Repeat steps 1 to 4 until it is impossible to maintain the clusterfuck of hundreds of "quick and simple" processes

6) Require time for refactoring just as a formality, managers will NEVER try to be more efficient if it means that they cannot respond to the latest request (it is called "Panic-Driven Development" or "Crappy Diem" principle)

7) GTFO and let the company collapse onto the next Data Engineering Atlas who happens to wander under the clusterfuck. May his pain end quickly.

-

"We use WSDL and SOAP to provide data APIs"

- Old-fashioned but ok, gimme the service def file

(The WSDL services definition file describes like 20 services)

- Cool, I see several services. I need those X data entities.

"Those will all be available through the Data service endpoint"

- What do you mean "all entities in the same endpoint"? It is a WSDL, the whole point is having self-documented APIs for each entity format!

"No, you have a parameter to set the name of the data entity you want, and each entity will have its own format when the service returns it"

- WTF do you need the WSDL for if you will have a single service for everything?!?

"It is the way we have always done things"

Certain companies are some outdated-ass backwater tech wannabes.

Usually those that have dominated the market of an entire country since the fucking Perestroika.

The moment I turn on the data pipeline, those fuckers are gonna be overloaded into oblivion. I brought popcorn.

-

OK, I live in Lithuania, a small country. My grandparents live in Silute, a super small city, and the internet is shit here, so I need to use my mobile data to program. Next day I wake up to this graph explaining to me how I lost all of my fucking data😤

-

Coworker: so once the algorithm is done I will append new columns in the SQL database and insert the output there

Me: I don't like that, can we put the output in a separate table and link it using a foreign key? Just to avoid touching the original data, you know, to avoid potential corruption.

C: Yes sure.
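The layout agreed on here could look roughly like this, sketched with Python's built-in sqlite3 module. The table and column names are hypothetical, and the rant does not say which database engine is actually involved.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves FK checks off by default

# Hypothetical schema: the client's table stays exactly as delivered,
# and the algorithm's output lives in its own table keyed by a foreign key.
conn.executescript("""
    CREATE TABLE client_data (
        id      INTEGER PRIMARY KEY,
        payload TEXT NOT NULL
    );
    CREATE TABLE algo_output (
        client_data_id INTEGER PRIMARY KEY REFERENCES client_data(id),
        score          REAL NOT NULL
    );
""")

conn.executemany(
    "INSERT INTO client_data (id, payload) VALUES (?, ?)",
    [(1, "row one"), (2, "row two")],
)
conn.execute(
    "INSERT INTO algo_output (client_data_id, score) VALUES (?, ?)", (1, 0.9)
)

# Read original rows together with any computed output, without ever
# altering client_data's schema.
rows = conn.execute("""
    SELECT c.id, c.payload, o.score
    FROM client_data AS c
    LEFT JOIN algo_output AS o ON o.client_data_id = c.id
    ORDER BY c.id
""").fetchall()
print(rows)
```

Rows without a computed result simply come back with a NULL score, and dropping the output table later leaves the client's data exactly as it was.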

< Two days later - over text >

C: I finished the algo, I decided to append it to the original data in order to avoid redundancy and save on space. I think this makes more sense.

Me: ahdhxjdjsisudhdhdbdbkekdh

No. Learn this principle:

" The original data generated by the client, should be treated like the god damn Bible! DO NOT EVER CHANGE ITS SCHEMA FOR A 3RD PARTY CALCULATION! "

Put simply: D.F.T.T.O

Don't. Fucking. Touch. The. Origin!

-

Connect a pen drive, format it successfully. Connect it to a new machine to copy data and see the data still exists.

Crap! Which drive did I format? :(

-

User: I need you to extract all the invoice data for us.

Me: What invoice data in particular? What filters do you require? This is a massive database with millions of transactions.

User: JUST EXTRACT THE TABLE!

Me: Right.....(this is a database with 3000+ tables and hundreds of joins)

-

Windows: Would you like me to give all your data to MS or somewhat less data?

Me: Well .. I guess .. I'll choose the Basic option..

Windows: Here is a fun fact .. I'll send all the data regardless of your choice :)

This is what's happening in the background, I think. I mean there isn't even a phuqing option to turn this thing off.

-

Oh shit, I watched YouTube on mobile data and used 459 MB... 31 MB left to last me 12 days... Shit

-

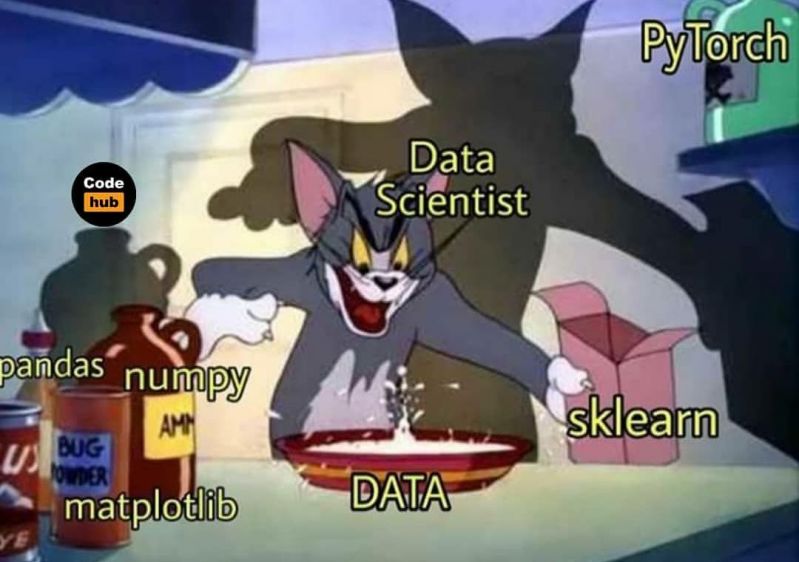

data science is just a sexy word for statistics.

1% programming skills

99% practically all science that's not computer science

-

That feeling when the business wants you to allow massive chunks of data to simply be missing or not required for "grandfathered" accounts, but required for all new accounts.

Our company handles tens of thousands of accounts and at some point in the past during a major upgrade, it was decided that everyone prior to the upgrade just didn't need to fill in the new data.

Now we are doing another major upgrade that is somewhat near completion and we are only just now being told that we have to magically allow a large set of our accounts to NOT require all of this new required data. The circumstances are clear as mud. If the user changes something in their grandfathered account or adds something new, from that point on that piece of data is now required.

But everything else that isn't changed or added can still be blank...

But every new account has to have all the data required...

WHY?!

-

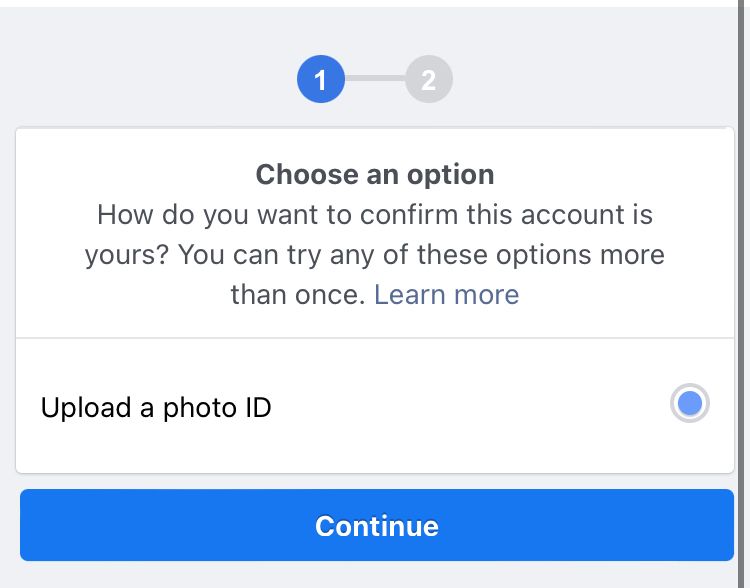

Facebook, over the years you’ve proven that you can’t be trusted and you still have the nerve to ask for this type of personal data?

-

!rant

Did you ever have that feeling of "what the fuck - how did I do THAT"? I just had one; I was fine with the algorithm taking like 15/25 minutes to fetch, process and save all data, and now it shreds through 10 times the previous amount in like 7 minutes.

-

Talk about data protection, I am fucking furious!! A hotel I stayed in recently has sent an email with a scan of my passport and credit card. Do I have any legal rights to fuck them up the arse? The hotel is in France.

-

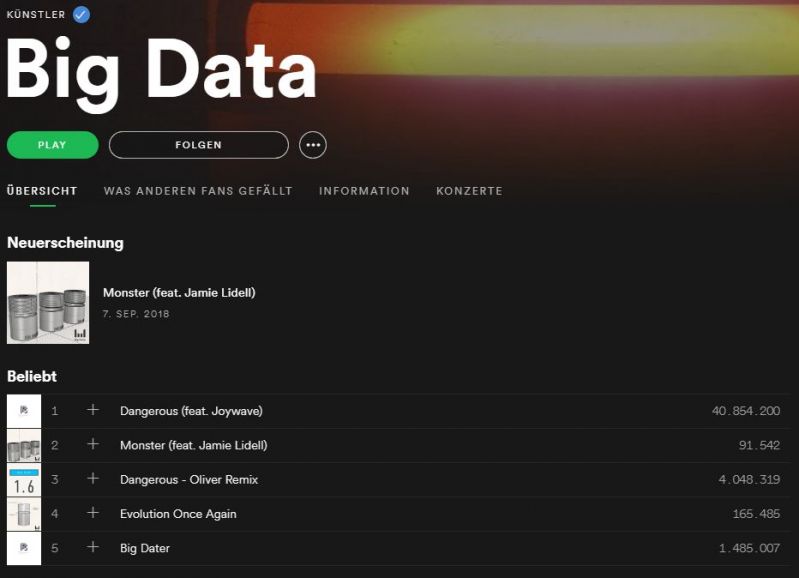

Recently found an artist called "Big Data". His song titles describe what Big Data actually is really well:

-

I don't get all the amazement people show when they find out that I don't use services like WhatsApp, Instagram, Gmail, Outlook, Google etc. Why would it be 'weird' to want control over who has/owns your data? That's not being fucking paranoid, that's being conscious about who you give your data to.

-

Because sharing is caring.

For anyone who cares, I've extracted the CoronaVirus data for total infected / deaths from the World Health Organisation and shoved it into applicable CSV files per day.

You can find the complete data set here:

https://github.com/C0D4-101/...

-

Just came across a video explaining how Cloudflare gets truly random data for their generators: lava lamps, radioactive properties and a chaotic pendulum

https://youtube.com/watch/...

-

Google collects more data than I could imagine.

So I read an article a few days ago and it absolutely blew me away. It mentioned how Google collects your personal data and makes it available to you as well (just to rub it in your face I guess). E.g.

1. Visit https://google.com/maps/timeline/ : Collects exactly what it says.

2. Visit https://myactivity.google.com/ :

These people collect everything on your device (at least Android)!!!!! Even the time spent on home screen! WTF!!!

3. Visit https://takeout.google.com/ : To download your data archive. Ranges from Google Photos to Hangouts and everything in between.

-> All the above require signing in with your Gmail account.

So basically, if someone manages to get a hold of your Gmail password, they have the power to know everything about you.

Aaaahhhhhhh. Ridiculous.

-

Apply for a data engineer role.

Get invited for a data science interview.

HR says they're building AI and I would be supervising another person writing its algorithm.

It's a media company.

*Risitas intensifies*

-

OH MY FUCKING GOD!!!!! FUCK YOU SPRING-DATA-NEO4J YOU ARE DRIVING ME CRAZY YOU SHIT FUCK !!! FUCK YOU AND YOUR DELETE ON SAVE BULLSHIT!!!! OMFG!!!!!!! EVERYTIME IS SOME FUCKING SHIT THAT DELETES OTHER SHIT THAT SHOULD NOT HAVE BEEN DELETED!!! JUST FUCK YOU ALREADY IM GONNA REWRITE ALL THIS SHIT!!!!!!!1

-

Pushing sensitive data to a GitHub repo...not realising for days...and then going through the painful process of removing it again 😑

-

Ok this is freaking creepy. I searched for information about login systems on other websites for some time. Not once did I touch Facebook. Then Facebook showed me targeted ads when I hopped on 30 minutes later. How the heck does Facebook get that data in the first place? I'm starting to get pissed that my data is just handed out to everyone, even by search engines.

-

"The client is complaining that the data takes a long time to load."

Me, after investigating: "Does the client really need [not an exaggeration, actual figures] 130,000 rows of data?"

"I will show them these stats but they said they need it"

-

#10 year challenge is basically data set fodder for a new AI that will predict how X looks after 10 years

Data mining at its best

-

devRant's mobile data usage is so low. Really love not needing a good connection and not using too much of my mobile data when using it :)

Also: Grammarly, STOP auto-correcting devrant to servant ALL THE TIME...

-

Forgot to turn off mobile hotspot when I connected my phone with a Windows PC. Fucking Windows used all my data to download 'security' updates. Bhenchod.

-

I accidentally lost a whole table's data today. Lucky me, I had also created an XML file on the server, which was for printing.

I parsed the files back into a simplexmlobject and recovered most of the lost data. 💪

-

A lot of people give Google, Facebook, Microsoft, etc. shit for "selling" user data although in my opinion acting as a matchmaker between advertisers and users does not really constitute selling data.

In contrast there seem to be a lot of companies that actually do sell user data that I never hear anyone here talking about.

-

Got myself into Facebook's Graph-API...

...everything is so easy and well optimized.

NOT! Now I have to optimize the request to reduce load time. Why is their data so fractured?

-

Has anyone watched Black Mirror and thought what shit it is, that technology can never go that far, but then wondered: what if it does, and we are all fucked?

Or we are already there, just in a mini version of it. I see people glued to their phones and screens, and even I am guilty of that. But given the amount of data we create every day, which AI and data mining enthusiasts can treat as a huge asset, I don't doubt we will reach a breaking point, and it is coming sooner than we think.

-

Data Disinformation: the Next Big Problem

Automatic code generation LLMs like ChatGPT are capable of producing SQL snippets. Regardless of quality, those are capable of retrieving data (from prepared datasets) based on user prompts.

That data may, however, be garbage. This will lead to garbage decisions by lowly literate stakeholders.

Like with network neutrality and pii/psi ownership, we must act now to avoid yet another calamity.

Imagine a scenario where a middle-manager level illiterate barks some prompts to the corporate AI and it writes and runs an SQL query in company databases.

The AI outputs some interactive charts that show that the average worker spends 92.4 minutes on lunch daily.

The middle manager gets furious and enacts an Orwellian policy of facial recognition punch clock in the office.

Two months and millions of dollars in contractors later, and the middle manager checks the same prompt again... and the average lunch time is now 107.2 minutes!

Finally the middle manager gets a literate person to check the data... and the piece of shit SQL behind the number is sourcing from the "off-site scheduled meetings" database.

Why? because the dataset that does have the data for lunch breaks is labeled "labour board compliance 3", and the LLM thought that the metadata for the wrong dataset better matched the user's prompt.

Given the very real-world scenario of mislabeled data, LLMs' inability to understand what they are saying or accessing, and the average manager's complete data illiteracy, we might have to wrangle some actions to prepare for this type of tomfoolery.

I don't think that access restriction will save our souls here, decision-flumberers usually have the authority to overrule RACI/ACL restrictions anyway.

Making "data analysis" an AI-GMO-Free zone is laughable, that is simply not how the tech market works. Auto tools are coming to make our jobs harder and less productive, tech people!

I thought about detecting new automation-enhanced data access and visualization, and enacting awareness policies. But it would be of little help: once a shithead middle manager gets hooked on a surreal indicator value, it is nigh impossible to yank them out of it.

Gotta get this snowball rolling, we must have some idea of future AI housetraining best practices if we are to avoid a complete social-media style meltdown of data-driven processes.

Anyone care to pitch in?

-

When you hear the data engineers talk about Ambari, Storm, Sqoop, Kafka & Phoenix and you are not sure if they are talking about frameworks or Pokémon..

-

Saw a reddit thread earlier asking about the most unsettling shit that people have found out Google has on them by downloading their data. I saw a bunch of comments about people finding voice recordings that Google had taken. After reading these, I was wondering what I could find from downloading my data. Decided to download my data, and on the page for it I saw that apparently I had disabled location history, audio activity, and device information.

Knowing companies like Google, I wouldn't be surprised if they didn't stop recording that stuff, just that they're not providing it to me. There were zero voice recordings, but there was location history up until about the beginning of 2017.

Another thing they have is all the pictures from all of my Hangouts chats. Apparently there were a good number of older pictures of myself in there, going back to probably 2-3 years ago, when I had my emo hair. Just a bit of a throwback. One picture I saw was from last January, when my hair was reaching my chest. Made me really miss my hair.

Other than that, nothing that interesting. Just something I thought I'd share.

-

Girl (trying to turn things on): people from my country are great in bed.

Guy: what data points do you have?

*Girl walks out of the room*

-

My God is map development insane. I had no idea.

For starters did you know there are a hundred different satellite map providers?

Just kidding, it's more than that.

Second, there appear to be tens of thousands of people whose *entire* job is either analyzing map data, or making maps.

Hell this must be some people's whole *existence*. I am humbled.

I just got done grabbing basic land cover data for a neoscav style game spanning the U.S., when I came across the MRLC land cover data set.

One file was 17GB in size.

Worked out to 1px = 30 meters in their data set. I just need it at a one mile resolution, so I need it in 54px chunks, which I'll have to average, or find medians on, or do some sort of reduction.
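One possible reduction, sketched in plain Python. Land cover rasters typically store categorical class codes, so averaging the codes would not mean much; this sketch takes the most common class in each block instead, which is an assumption here since the rant leaves the reduction method open. The function name and the tiny sample grid are made up, and 54 is simply 1609.344 m / 30 m rounded.

```python
from collections import Counter

METERS_PER_MILE = 1609.344
PIXEL_METERS = 30
BLOCK = round(METERS_PER_MILE / PIXEL_METERS)  # 54 pixels per mile


def reduce_blocks(grid, block):
    """Collapse each block x block window into its most common class code.

    `grid` is a list of equal-length rows of class codes. Windows at the
    right and bottom edges may be smaller than `block`.
    """
    rows, cols = len(grid), len(grid[0])
    reduced = []
    for r in range(0, rows, block):
        out_row = []
        for c in range(0, cols, block):
            window = [
                grid[y][x]
                for y in range(r, min(r + block, rows))
                for x in range(c, min(c + block, cols))
            ]
            out_row.append(Counter(window).most_common(1)[0][0])
        reduced.append(out_row)
    return reduced


# Tiny hypothetical raster, reduced in 2x2 blocks to keep the demo readable.
sample = [
    [1, 1, 2, 2],
    [1, 3, 2, 2],
    [4, 4, 5, 5],
    [4, 4, 5, 6],
]
tiles = reduce_blocks(sample, 2)
print(tiles)
```

For the real data set the same call would use `BLOCK` instead of 2; a 17GB raster would also need to be read in strips rather than loaded as one nested list.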

Ecoregions.appspot.com actually has a pretty good data set but that's still manual. I ran it through gale and there are actually imperceptibly thin line borders that share a separate *shade* of their region colors with the region itself, so I ran it through a mosaic effect to remove the vast bulk of extraneous border colors, but I'll still have to hand remove the oceans if I go with image sources.

It's not that I haven't done involved things like that before, naturally I'm insane. It's just involved.

The reason for editing out the oceans is because the oceans contain a metric boatload of shades of blue.

If I'm converting pixels to tiles, I have to break it down to one color per tile.

With the oceans, the boundary between the ocean and shore (not to mention depth information on the continental shelf) ends up sharing colors when I do a palette reduction, so that's a no-go. Of course I could build the palette by hand, from sampling the map, and then just measure the distance of each sampled RGB color to every color in the palette, to see which color it primarily belongs to, but as it stands the Ecoregions coloring has some of the regions *really close* in RGB value as it is.
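The nearest-palette-color idea can be sketched as follows, assuming plain squared Euclidean distance in RGB space. The palette names and values are invented for illustration and are not taken from the Ecoregions map.

```python
# Hypothetical hand-built palette: region name -> representative RGB color.
PALETTE = {
    "forest": (34, 139, 34),
    "desert": (210, 180, 140),
    "water": (30, 60, 200),
}


def squared_distance(a, b):
    """Squared Euclidean distance between two RGB triples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest_region(rgb, palette=PALETTE):
    """Return the palette entry whose color is closest to `rgb`."""
    return min(palette, key=lambda name: squared_distance(rgb, palette[name]))


print(nearest_region((40, 130, 40)))
print(nearest_region((25, 70, 190)))
```

When two palette colors sit very close together, as the rant notes for some regions, the distance to the best and second-best match will be nearly equal, so it may be worth flagging those pixels instead of trusting the winner.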

Now what I also could do is write a script to parse the shape files, construct polygons in sdl or love2d, and save it to a surface with simplified colors, and output that to bmp.

It's perfectly doable, but technically I'm on savings and supposed to be calling companies right now to see if I can get hired instead of being a bum :P

-

Wow! Just updated my devRant Android app and it has search! Opens the floodgates to data mining the rapidly growing content. Thanks!!!

-

Oh my fucking god. Austria wants to sell the data of its citizens to schools, universities, museums, and: companies with enough money. What the fuck?

The data contains shit from the central register of residents: information about name, date of birth, sex/gender, nationality, residence, health data (!), education, social security/insurance, tax data, E-Card/ELGA data (the system where your doctor visits, prescribed medicines/drugs, all these things, are saved), and other shit.

Welcome to 2018, where you can try as hard as you want to keep your privacy, and then your government sells all the shit you are not able to remove. Fucking bullshit.

-

Opened my PC today...found my local disk empty...170gigs of data lost...

Feel like crying...hope Recuva works

-

I hate debugging document oriented data types..

Can't even sysout easily like primitive data types.

Need a debugging duck.

-

For shit's sake, data stream processing really is only for people with high throughput looking to do transformations on their data; not for people aggregating <10Gb/day of data.

Fuck me, DSP is going to be the new buzzword of 2020 and I'm not looking forward to it. I've already got stakeholders wondering if we can integrate it when we don't have the need, nor the resources or funds.

-

I think I just miiiight have found a new job, but before, some comments about the state of the data engineering industry:

- Sooooooo many people outsource it. Man, outsourcing your data teams is like seeing the world through an Apple Vision Pro fused to your skull. Fine if it is working well, but you will go blind if your subscription expires. Or if Apple decides to ban you. Or if they decide to abandon the product... you are entirely dependent on their whims. In retrospect this is par for the course, I guess.

- Lots of companies think data engineering *starts* with an SQL database. Oh, honey, I have some bad news.

- Quite a few expect MS POWER BI will be able to deliver REAL TIME DASHBOARDS summarizing TERABYTES of data sourced from SQL SERVER (or similar). Facepalm.

- Nearly all think the handling of data engineering products is just like that of software engineering. Just try. I dare you.

- Why do people think that "familiarity in several SQL dialects" is something to brag about?

- Shit, startups. Startups are dead, boomers. Deader than video rental physical stores.

That's all. On to the next round of interviews!

-

Holy shit, they will never understand....

Say you come to a dev who is minding his business, writing some code, being focused, with headphones on, etc. You absolutely destroy his cache of things in mind. You stand next to him gazing at his screen and madly throwing out words about how you need some data extracted from the database NOW, and you keep standing next to him gazing while he quickly types a few ultra-wide-screen lines of SQL query with all the fucking joins and shit you wanted, with the exact aliases you understand. He makes one typo, but the query executes and spits out some data. He doesn't notice that something is wrong with it because he hasn't memorized the database's data, and he sends it to you.

Now you are coming back to him pissed and in a general fighting mood because he made one fucking typo while you were actively pressuring him to write the fucking query faster, after you absolutely destroyed his flow of work (meaning he now needs to dive back into the code and figure out why he was editing a given file and what the idea for the further structure was).

Now you are standing next to him again and absolutely pissing him off about how he fucked up and made one fucking typo, where unfortunately the database didn't say "nope" but instead spat out wrong data.

If you can relate to "you" stated above, sincerely fuck you.

If you can relate to "he" stated above, I feel ya man, it's fucking annoying, isn't it?

-

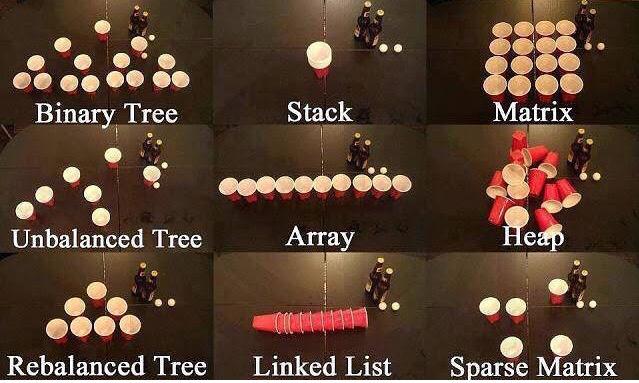

How many of you use the right data structures for the right situations?

As seasoned programmer and mentor Simon Allardice said: "I've met all sorts of programmers, but where the self-taught programmers fell short was knowing when to use the right data structure for the right situation. There are Arrays, ArrayLists, Sets, HashSets, singly linked Lists, doubly linked Lists, Stacks, Queues, Red-Black trees, Binary trees,.. and what the novice programmer does wrong is only use ArrayList for everything".

Most uni students don't have this problem though, for Data Structures is freshman year material. It's dry, complicated and a difficult to pass course, but it's crucial as a toolset for the programmer.

What's important is knowing which data structures are good in which situations, and knowing their strengths and weaknesses. If you use an ArrayList to look things up across millions of records, it can be many times less efficient than using a Set. And so on, and so on.

-
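As a rough illustration of the ArrayList-versus-Set point in the rant above, here is a small timing sketch. It uses Python's list and set as stand-ins for the Java collections the rant names, and the record count and repeat count are arbitrary assumptions; absolute timings will differ per machine.

```python
import timeit

# Hypothetical data: 200,000 integer record IDs.
records_list = list(range(200_000))
records_set = set(records_list)
needle = 199_999  # last element: worst case for the list scan

# Membership test: the list is scanned element by element (O(n)),
# while the set does a hash lookup (O(1) on average).
list_time = timeit.timeit(lambda: needle in records_list, number=50)
set_time = timeit.timeit(lambda: needle in records_set, number=50)

print(f"list lookup: {list_time:.4f} s for 50 checks")
print(f"set lookup:  {set_time:.6f} s for 50 checks")
```

Plain iteration over every element costs about the same for both; the gap shows up in lookups, which is why the right choice depends on the access pattern.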

Husband looking into online schools for CS. Anything data science related. He loves math (and is freaking good at it) and teaches himself R for fun.

I put 0 thought into my own schools (terrible, I know, but not likely to change any time soon). Any suggestions for good online data science programs, with a math minor potentially?

It's for his bachelor's.

-

You know that the Algorithm & Data Structure exam is coming, when you start traversing binary search trees in your sleep

-

When your company has data integrity issues and they expect hacky workarounds instead of fixing the data

-

The worst thing about university is having to listen to the lecturer say DATA MINING IS GOOD at every single lecture there is.

-

What container data structures do all of you actually use?

For me, I'd say: dynamically sized array, hashtable, and hash set. (And string if you count that.)

In the past 8 months, I've used another container type maybe once?

Meanwhile, I remember in school, we had all these classes on all these fancy data structures. I haven't seen most of them since.

-

One of my colleagues who thinks he knows all about "big data": "I try to put everything in Hadoop, that is my philosophy".

We don't even have a Hadoop cluster.

-

Part 1: https://devrant.com/rants/4298172/...

So we get this guy in a meeting and he is now saying "we can't have application accounts because that violates our standard of knowing who accessed what data - the application account anonymizes the user behind the app account data transaction and authorization"

And so i remind him that since it's an application account, no one is going to see the data in transit (for reference this account is for CI/CD), so the identity that accessed that data really is only the app account and no one else.

This man has the audacity to come back with "oh well then that's fine, I can think of a bunch of other app account ideas where the data is then shown to non-approved individuals"

We have controls in place to make sure this doesn't happen, and the grand example that he illustrates is "Well what if someone created an app account to pull github repo data and then display that in a web interface to unauthorized users"

...

M******* why wouldn't you JUST USE GITHUB??? WHO WOULD BUILD A SEPARATE APPLICATION FOR THAT???

I swear I have sunk more time into this than it would have cost me to mop up after a whole data breach. I know there are situations where you could potentially expose data to the wrong users, but that's the same issue with User Accounts (see my first rant with the GDrive example). In addition, the proposed alternative is "just dont use CI/CD"!!!

I'm getting pretty pissed off at this whole "My compliance is worth more than real security" bullshit.

-

Am I Data Engineer or Software Development Engineer ?

I design the infrastructure for analytics data, and I build the infra entirely, including the development. Everything except making reports out of the data.

What am I supposed to be called?

Data Engineer ?

Software Development Engineer ?

Definitely not a Data Scientist. The official designation given by the company is Data Engineer II. But what am I?

Confused, someone help me please.5 -

i was learning neural networks, started with keras and was on the first tutorial where they started by importing pandas

so i switched to learning data analysis using pandas in Python where they started by importing matplotlib and i realized data visualization is also important and now I'm reading matplotlib docs...🙄11 -

What's your experience with business intelligence / data science? Does your company have its own department for it?

If so how does it affect you?2 -

I wonder, are some apps made small on purpose, only to force you to download the actual data when you first open them, so that people who are low on space or don't want heavy apps still get converted into download numbers? Even if they delete the app after discovering that, they have already boosted the downloads.

I can understand pulling offline databases or game updates from own servers to get the newest data, but sometimes you come across apps that have no need in any of those and could just e.g. package the audio which will never be updated5 -

A few minutes ago, I was going crazy over a bug caused due to data mutation in Rails.

Basically, user1 was creating a post record and it was stored in the database normally. However, on page reload for a second user, user2, the post (that user1 created) was updated to belong to user2. This is because, on page reloads, I was using the `<<` method call to append user2 and user1 posts together! Apparently, `<<` not only mutates the array, it also performs a database update.

Kill me please!!

Also, data immutability seems a more reasonable feature in languages now.1 -

Why is python supposedly something big data people use ? Sounds like r and stats and well I don’t see the adoption of that though python is used somewhat I note in a lot of Linux apps and utilities

Just seems strange that an interpreted language would be used that way to me or am I an idiot ?35 -

Customer: You say you connect to XYZ service, but my data is not getting synced!

*checks their account*

Me: I see you have not integrated your two accounts. Go to Settings > Integrations > Connect to XYZ.

C: Why? But you already connect XYZ! You should already know all of my data!3 -

What is it about robot collected data that makes researchers so anal? Like, dude, it's not even personal data. It's literally robot's joint motor recordings. It's not nuclear data, so why the fuck do you protect it like your life and your country depend on it?

I hope you get fisted by that data every night and how it will end up in oblivion sooner because you didn't publish it. You asshole.8 -

"It is pointless to use just a fraction of the data in a homologation environment"

Those words reveal the truth in our creed.

We work in the deepest of back-ends to serve the front.

No data is true. Everything can be edited.

We are Data Engineers.

And for those words to take hold, a junior must execute a leap of faith, and push a hotfix into production.5 -

Imagine an annual $50k+ enterprise software package that didn't distinguish between a null and an empty string in valuing critical data. Not noticed for years - wtf?
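For anyone wondering why the difference matters, here is a minimal sketch of the distinction. The function names are hypothetical and have nothing to do with the package in question; the point is only that a plain truthiness check lumps "never recorded" and "recorded as blank" together.

```python
from typing import Optional


def describe_value(value: Optional[str]) -> str:
    """Classify a field as missing, empty, or present (hypothetical helper)."""
    if value is None:
        return "missing"  # null: nothing was ever recorded
    if value == "":
        return "empty"    # empty string: explicitly recorded as blank
    return "present"


def is_blank_naive(value: Optional[str]) -> bool:
    # A truthiness check treats None and "" identically,
    # which is the kind of conflation described above.
    return not value
```

With this, `describe_value(None)` and `describe_value("")` give different answers, while `is_blank_naive` cannot tell them apart.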

-

We specified a very optimistic setup for a data science platform for a client....

Minimum one machine with a 16 core CPU with 64GB RAM to process data.....

Client's IT department: Best we can do is an 8 core 16GB server.

Literally what I have on my laptop.

Data scientist doesn't use any out-of-memory data processing framework, e.g. Dask, despite telling him it's the best way to be economical on memory; ipykernel kills the computation anyway because it runs out of memory.

Data scientist has a 64GB machine himself so he says it's fine.

Purpose of the server: rendered pointless.5 -

Sending email to client (the following is a short version of it)

"

Dear Data Evaluation Team,

Here is a link with the password to the data export for the questionnaire.

You will find in there 4 sections:

1. Utilization Report

2. Question List

3. User Responses to questionnaire

4. Summary of responses

"

Email from client

"

Thank you data team.

I see that the user responses have some ids for the questions. Can you please give me the full question text, where is it?

"

My response

"Section 2, Question List"

Like really? Did you just not f*ing read the email and just jumped into the data export blindly. I wrote some fucking docs for a reason. -

I've just spent the last hour or so banging my head against a brick wall trying to figure out why I'm unable to retrieve some data via AJAX even though I know data is being returned as I can see it in my error log.

Turns out the permission system I wrote a few days ago actually works and because I didn't specify a permission it automatically denied my user from retrieving the data. One thing I forgot to add was an error message to tell me when I don't have sufficient permission to do something. Adding a message could have just saved me a lot of time :/1 -
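On the missing error message in the permission rant above: a rough sketch of deny-by-default that at least says why it denied. `PermissionDenied` and `check_permission` are made-up names for illustration, not anything from the actual system.

```python
from typing import Optional, Set


class PermissionDenied(Exception):
    """Raised instead of silently returning nothing (hypothetical)."""


def check_permission(granted: Set[str], required: Optional[str]) -> None:
    """Deny by default, but always explain the reason for the denial."""
    if required is None:
        # No permission was declared for this action: still deny, but loudly.
        raise PermissionDenied("no permission declared for this action; denying by default")
    if required not in granted:
        raise PermissionDenied(f"missing permission: {required}")
```

The AJAX handler could then turn the exception message into a 403 response body, so the denial shows up in the browser instead of only in the error log.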

To solve an issue of slow data retrieval on an app, I suggested pre-loading the data once a week and storing it in a spreadsheet ready for instant download.

"Great idea. If more data gets added to the database after we download the data, will the spreadsheet we downloaded also update?"

Seriously, I understand that not everybody is technical but fuck me how do you expect us to update a file on your computer? -

Hey guys any advice/study plan for a 22 year old C++ Server developer to transition into data science ?

P.s. currently based in London4 -

2018: Data Scientist = Stack overflow copy pasting: "I followed a 12-hour 'DS' course on Lynda!"

lm() # science2 -

Recipe for reverse engineering data structures / binary formats:

1% understanding the theory.

1% expectations about what you will find.

3% luck.

45% trial and error.

50% persistence. -

Went to a Big Data workshop, now I know why there's an elephant as hadoop's logo and how it came about. Still no clue how it works.

-

I want to do something data-science-y.

Gimme project ideas, and where can I get the data for it?

Also, not looking for machine learning, just basic data analysis stuff.

I'm bored.10 -

Anybody have any great tutorials about web scraping with python? The data science courses I took only covered manipulating and visualizing data, not getting it.

-

DEAR NON TECHNICAL 'IT' PERSON, JUST CONSUME THE FUCKING DATA!!!!

Continuation of this:

https://devrant.com/rants/3319553/...

So essentially my theory was correct that their concern about data not being up to date is almost certainly ... the spreadsheet is old, not the data.... but I'm up against this wall of a god damn "IT PERSON" who has no technical or logic skills, but for some reason this person doesn't think "man I'm confused, I should talk to my other IT people", rather they just eat my time with vague and weird requests that they express with NO PRECISION WHATSOEVER and arbitrary hold ups etc.

Like it's pretty damn obvious your spreadsheet was likely created before you got the latest update, it's not a mystery how this might happen. But god damn I tell them to tell me or go find out when the spreadsheet was generated and nothing happens.

Meanwhile their other IT people 'cleaned the database' and now a bunch of records are missing and they want me to just rando update a list of records. Like wtf is 'clean the database' all about!?!?!?

I'm all "hey how about I send you all records between these dates and now we're sure you've got all the records you need up to date and I'll send you my usual updates a couple times a day using the usual parameters".

But this customer is all "oh man that's a lot of records", what even is that?

It's like maybe 10k fucking records at most. Are you loading this in MS Access or something (I really don't know MS Access limits, just picking an old weird system) and it's choking??!?! Just fucking take the data and stick it in the damn database, how much trouble can it be?!!?!?

Side theory: I kinda wonder if after they put it in the DB every time someone wants the data they have some API on their end that is just "HERE'S ALL THE FUCKING DATA" and their client application chokes and that's why there's a concern about database size with these guys.

I also wonder if their whole 'it's out of date' shit is actually them not updating records properly and they're sort of grooming the DB size to manage all these bad choices....

Having said all that, it makes a lot more sense to me how we get our customers. Like we do a lot of "customer sends us their data and we feed it back to them after doing surprisingly basic stuff to it"... like guys your own tools do th---- wait never mind.... -

Can anyone recommend good resources for learning how to design NoSQL (document) data models?

I'm interested in stuff that talks about how to make the choices about distributing data across collections, etc.

When to have a single collection, when to split data across different collections, when to duplicate data, etc. -

I need cyclic data structures but algebraic data types are my first love and tying the knot is impossible with the eagerness F# has. The interfaces and classes I abandoned C# for are the ones I am now writing in F#. What a job well done on my part in avoiding mutability :(6

-

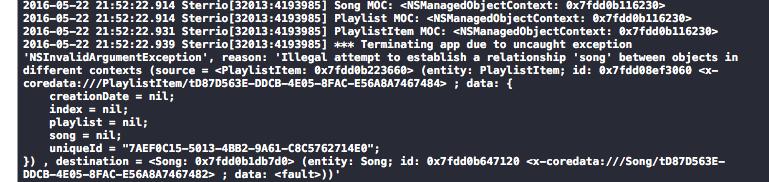

Sometimes I just wish Core Data would die.

I logged the managed object context of each object being touched and they're all identical. 😑😑😑😑😑😑 -

I’ve been reading this book “Designing Data-Intensive Applications” by Martin Kleppmann. The concepts are really well explained !!

-

Data Structures professor enters class, today's topic being RBTs. He opens the slides, having forgotten to redact the name and university he blatantly copied them from. Continues anyway. *Facepalm*

-

I decided to try and see if I can live on my 3GB mobile data plan for work and study today. For 3 hours, I just burnt 200MB by ssh, whatsapp, telegram, browsing forums to fix ticket, and reading manuals.

Guess I have to rely on my customer's restrictive WiFi. Sigh.6 -

!rant

Started the Data Science course on Big Data University. The outcomes are heavily dependent on domain experts/stakeholders!!! All the answers are false positives, and you need the help of domain experts to decide what makes sense. And most of the data scientists are not from a programming background, they are domain experts who turned into data scientists. Any thoughts on whether I should continue learning big data/data science, given that I have knowledge in information retrieval and search engines? -

Let’s just face it. Once the majority of people understand, data science (machine learning on custom behaviour mostly) will be the most disgusted job in the world. Mind boggling! You get shot right between the eye by doing it.

-

Question & Android

Is there a way on android to limit what apps are allowed to use mobile data and what are only allowed to use wifi?11 -

When someone from your data team claims that 250k worth of data doesn't exist and when the shit hits the fan it magically appears.

-

So I get this email from google for my development account about these new general data protection regulations and what they're doing with admob and all that good stuff.

I didn't dive too deep and there's nothing crazy in it but it definitely feels like it's spurred on by this "selling your data to advertisers" thing.

We live in such a weird society where it's like outrage after outrage. I've never known anybody who has NOT known that their statistics and data was sold to third party for marketing for EVERYTHING they do on the computer or phone. For a DECADE or longer. It always seemed to be such a second hand thought but now out of nowhere everyone has their panties in a wad for something they ALREADY knew.

Are we like that miserable/bored/no hobbies/unsatisfied with our first world life that we have to just flip out about dumb crap all the time? -

So today in school I decided on my career choice. So I've decided on becoming a data scientist because I can still use my programming and I very much do like looking at data and researching things as well as program obviously :) so if there are any data scientists or data miners out there do you have advice?5

-

Fucked a client's wordpress website up by editing a saved option that's saved as serialised php data. Tried using the row data from a backup and updating it in the database and it still loads the fallback theme settings. What do?

-

Why is everyone into big data? I like mostly all kinds of technology (programming, Linux, security...) but I can't get myself to like big data / ML / AI. I get that its usefulness is abundant, but how is it fascinating?

-

So I cant resize the window and I can't scroll down anymore... Where the fuck is "below" so I can turn "off" data collection 😤

-

I received the following e-mail today:

Hey, XYZ! Could you please check the following in your web application. The data do not show correctly. Could it be a bug?

[insert attached screenshot with said "bug"]

My reply:

Hey, ABC! It is not a bug. You uploaded the data into the wrong table. 😊

[insert attached screenshot with the incriminating evidence]

-----------------------------

I felt a bit savage and I liked it. 🔥4 -

Personal data in exchange for a coffee coup. Must be a joke, wait is for real.

https://npr.org/sections/thesalt/...4 -

The gap of data science in industry and academia is so large. As a data scientist in a large financial company, I see that people are still using traditional models such as linear regression and SVM, while people in academia keep inventing new concepts and techniques such as deep learning.

I am not saying that we should completely embrace deep learning, or stick to classic methods. But I just feel so surprised that the gap is so large...Sometimes I am even thinking whether I am doing the right "data science"...3 -

Perhaps as a tip for the junior devs out there, here's what I learned about programming skills on the job:

You know those heavy classes back in college that taught you all about Data Structures? Some devs may argue that you just need to know how to code and you don't need to know fancy Data Structures or Big O notation theory, but in the real world we use them all the time, especially for important projects.

All those principles about Sets, (Linked) lists, map, filter, reduce, union, intersection, symmetric difference, Big O notation... They matter and are used to solve problems. I used to think I could just coast by without being versed in them.. Soon, mathematics and Big O notation came back to bite me.

Three example projects I worked in where this mattered:

- Massive data collection and processing in legacy Java (clients want their data fast, so better think about the performance implications of CRUD into Collections)

- ReactJS (oh yes, maps and filters are used a lot...)

- Massive data collection in C# where data manipulation results are crucial (union, intersection, symmetric difference,...)

Overall: speed and quality mattered (better know your Big O notation or use a cheat sheet, though I prefer the first)
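To make the set operations from the list above concrete, a tiny sketch in Python (the projects mentioned were in Java and C#, so this is only an illustration, and the record IDs are invented):

```python
# Invented example data: record IDs from yesterday's and today's import.
yesterday = {101, 102, 103, 104}
today = {103, 104, 105}

everything = yesterday | today      # union: seen in either import
unchanged = yesterday & today       # intersection: seen in both
differing = yesterday ^ today       # symmetric difference: seen in exactly one
dropped = yesterday - today         # plain difference: gone since yesterday

# Big O in practice: membership in a set is O(1) on average,
# while membership in a list scans the elements, O(n).
id_list = list(range(100_000))
id_set = set(id_list)
found_in_list = 99_999 in id_list   # walks the list
found_in_set = 99_999 in id_set     # single hash lookup on average
```

Same answers either way for the membership check, but on large collections the set lookup is the one that keeps clients happy.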

Yes, the approach can be optimized here, but often we're tied to client constraints, with some room if we're lucky.

I'm glad I learned this lesson. I would rather have skills in my head and in memory than having to look up things and try to understand them all the time.5 -

There's something kind of child like and adorable about working for a client who spends THOUSANDS of dollars on their data infrastructure, yet finds it ever so difficult to provide ONE user to help reconcile and test the new data warehouse.

-

Dirty data? More like dirty laundry! And don't even get me started on explaining complex models to non-techies. It's like trying to teach a cat to do calculus. Furr-get about it!5

-

So we finished our requirement ( barely) for a new client. Next is data modelling and system design.

We started with data modelling. Unfortunately the lead developer does not know the difference between database and data modelling.

me: hey bro, we'll do the database and stuff later, now let's focus on data modelling.

him: (acting like he knows) yeah I have developed a sample design for the "data model".

me: no this is database design.

him: what's the difference?

me: dude, they're totally different. Okay, simple explanation data model is what you want to store, whereas DB design is how you store it.

him: So, if I am not wrong, it's implied that you know what to store if you are talking about how to store it.

me: but you don't know what it is you want to store yet. And one of them precedes the other.

him: Okay, let's start with DB design.

me: What?????? you want to build a house without a plan??? That's it for me I am done !!!

I left the project yesterday, later I heard that, the team members are coders, who think that developing a software is all about coding and fixing errors. -

So we are gonna have a presentation offshore in an international event, in some hours from now.

Boss asked me to update some data along with some that a coworker was working on.

I asked about said data to coworker and she replied: they are in dropbox since yesterday.

And I was like OK.

AND NOW I'M FUCKING AWAKE AT 3 AM TO UPDATE IT AND 80% OF THE FUCKING DATA ISN'T ON THE FUCKING DROPBOX!!!! WTFFFF!!!!! -

Real question:

If I save your data in the cloud and it's raining outside, will I lose my data? or Am I safe?4 -

When you wanna be a Data Scientist and always land into internships where you are assigned with web development...

Learnt Node, Flask, Spring frameworks across different internships... -

Saw a movie related to Data Security and Data privacy. The movie ended 1 hour ago and i am now terrified how my data is going to end up somewhere where it can be misused .Frantically removed all app permissions from my mobile. Wonder how many days it will last. But now after hearing such gory details , i wonder how i can keep my interests safe in this world. I am now even afraid to give my laptop for changing its battery.. Thinking of wiping all possible compromisable data. But dont know how to.

How will technologies like blockchain affect this ? Will it make it worse or is it trying to make it better..?10 -

Here's one for the data scientists and ML Engineers.

Someone set a literal date feature (not month, not season, but date) as a categorical feature... as a string type 🥺
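A possible alternative, sketched with the standard library only: parse the date string and derive a few numeric features instead of one category per date. The function name, the ISO date format and the choice of features are all assumptions for illustration, not what that model should necessarily use.

```python
import math
from datetime import date


def date_features(iso_date: str) -> dict:
    """Turn an ISO date string into numeric features (hypothetical helper)."""
    d = date.fromisoformat(iso_date)
    day_of_year = d.timetuple().tm_yday
    # Cyclic encoding so that 31 December and 1 January end up close together.
    angle = 2 * math.pi * day_of_year / 365.25
    return {
        "month": d.month,
        "day_of_week": d.weekday(),  # Monday is 0
        "doy_sin": math.sin(angle),
        "doy_cos": math.cos(angle),
    }
```

Unlike a raw date string used as a category, these features still mean something for dates the model never saw during training.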

I don't trust this model will perform for long2 -

Has anyone played around with kaggle.com to get into data science?

I’m good with data, good with analytics and good with programming but I need to combine those skills and learn whatever I’m missing.

Kaggle came up and it seems to have some nice data sets and challenges to do.

Thought?1 -

I am excited about Websocket getting more popular. I just completed a project using it. It is so good for sending market data to the front.

-

According to a report from VentureBeat: Verizon Media has launched a "privacy-focused" search engine called OneSearch and promises that there will be no cookie tracking, no ad personalization, no profiling, no data-storing and no data-sharing with advertisers.

By default, Advanced Privacy Mode is activated. You can manually toggle this mode to "off", but you won't have access to privacy features such as search-term encryption. In the OneSearch privacy policy, Verizon says it will store a user's IP address, search query and user agent on different servers so that it cannot draw correlations between a user's specific location and the query that they have made. "Verizon said that it will monetize its new search engine through advertising but the advertising won't be based on browsing history or data that personally identifies the individual, it will only serve contextual advertisements based on each individual search," reports VentureBeat.

https://www.onesearch.com/5 -

Just dumped one month's GitHub public data on my local machine. Ah boy that was too much data. About 80 GB with 30.9 million documents in mongodb. Too much for a local machine. :sigh:

-

I took a project. Wild mix of php and html including db stuff and data processing. About 200 files, some 3000+ lines long with if else cases processed in another template/logic behemoth...

I wrote a js file included it at the footer of the monster and update dom + data via ajax on my own api implementation because I'm too afraid to write in any of those files.

I've been told its quality code and well documented3 -

Can anyone explain to me why facebook got pulled over for selling user data and google hasn't yet?5

-

i previously had Windows 10 and somehow my Windows was deleted so i installed Ubuntu and wiped the hard disk

can u suggest any full data recovery tool in linux from which i can recover the data i had in Windows.1 -

I see bad data and thought to myself, "I'll be able to fix this with a simple regex." A month later, I'm still finding new data patterns. Never give users the ability to store all of their data in a huge textarea box where they can make stuff up.2

-

Not sure what I wanna do ---

Backend Development or Data Analyst

Even in backend development confused between nodejs, PHP, Python though I don't have proper knowledge of all of them.

For Data Analyst I am looking the way from where I should start.

I am in 3rd year of my college

Can anyone please help me with this?5 -

Got a file with cobol data in it.. Also got the structure to read it, but what the fuck is COMP-3? Any idea how I can decode this into a human readable format? 🤔😓🤓
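For reference, COMP-3 is packed decimal: two decimal digits per byte, with the low nibble of the last byte holding the sign (commonly 0xC positive, 0xD negative, 0xF unsigned). A minimal decoding sketch follows; `unpack_comp3` is a made-up name, and the number of implied decimal places (`scale`) has to come from the PIC clause in the structure, since it is not stored in the data.

```python
from decimal import Decimal


def unpack_comp3(raw: bytes, scale: int = 0) -> Decimal:
    """Decode one packed-decimal (COMP-3) field (hypothetical helper)."""
    if not raw:
        raise ValueError("empty field")
    digits = []
    for byte in raw[:-1]:
        digits.append(byte >> 4)      # high nibble: one digit
        digits.append(byte & 0x0F)    # low nibble: next digit
    digits.append(raw[-1] >> 4)       # last byte: one digit ...
    sign_nibble = raw[-1] & 0x0F      # ... and the sign nibble
    if any(d > 9 for d in digits):
        raise ValueError("invalid packed-decimal digit")
    value = int("".join(str(d) for d in digits))
    if sign_nibble in (0x0B, 0x0D):   # negative sign codes
        value = -value
    return Decimal(value).scaleb(-scale)
```

Note that COMP-3 fields are binary, so they must be sliced out of the raw record bytes before any EBCDIC-to-ASCII conversion is applied to the text fields.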

-

I hate data structures. I try to work out their algorithms in my head but they're completely counterintuitive. lol.8

-

Data scientists should be charged with animal trafficking and animal abuse because they import Pandas 🐼 and feed them to Python 🐍2

-

My typical user interaction:

Me: "But we need to pull the data from the source and we don't have access to it"

User: "Make it work"

Me: "I want to fucking die." -

The new UK law on data sharing with the government is crazy: it makes it law for service providers to hold browsing history data, and for big sites like Google, Facebook and so on to retain human-readable access to their data if they offer a service to the UK. What steps do we take to protect the data and the service but also follow this law? I can't see anything that would make sense as a way to follow it.

What are your views and ideas going forward? At the moment the UK has made it law even though the EU said stop this madness, so let's take it as read that it's there. Is there a sensible way to do this, or are we going to have to provide a back door to UK users' data? -

What's the difference between data science, machine learning, and artificial intelligence?

http://varianceexplained.org/r/... -

Accidentally deleted ftp account and it deleted public_html folder. All data is gone can I recover those files in it ? server is godaddy4

-

Does anyone here use Google Photos and, if so, have you seen an ungodly amount of mobile data usage even though you have mobile uploading turned off in the settings?2

-

Quick question on Android development. Is it good practice to access UI elements from code, i.e R.id.example? Or is there something similar to WPF's data binding?10

-

I was wondering if it is allowed to crawl all posted rants on devrant to do some fancy data mining stuff while learning python. Any clue?3

-

it's actually kind of surprising how many times programmers, who are experts in data representation, mess up coming up with data representation standards then have to reinvent them

so when you run into a data format that hasn't changed in 30 years it's like woweeee someone thought something through well for once, wtf2 -

Us users would never accept data lock-in for photos and videos from the camera app on smartphones.

Yet, for some reason, we accepted data lock-in for saved pages on mobile web browsers.5 -

FEAR OF DATA LOSS

Resolution => backupS

1: external drive (or NAS)

2: cloud storage (maybe multiple services)

3: git repositories

After all these backups you are kind of demigod of data recovery...8 -

Cyber threats are the top concern of C levels. In actuality companies unintentionally expose way too much data. It's ridiculous what some make public.

-

For any webDev in here, is there any open source tools for web data extraction? i've seen imacros and UiPath, maybe you use other tools.5

-

AngularJs:

Two main Controllers (main layout, sub page) and two directives with controllers. Controllers and directives have two-way data binding. All of them use $watch, $broadcast, $on.

BRAINFUCK OF MY LIFE. DO NOT COMBINE WATCH WITH TWO-WAY DATA BINDING. -

Anyone here work as a Data Scientist? If so would you be able to say what kind of things you get up to in an average day?13

-

Sometimes I have to do some data analysis and I use RStudio.

Today I tried to do the same things using KNIME.

It's just like playing with Lego Duplo after having tried Lego Technic. -

I'm all for alternative methods of learning but this post on FreeCodeCamp is total bollocks.

How does someone take and complete a class in data structures in one week?

https://medium.freecodecamp.com/how...5 -

I've recently learned how committing of the Save Data to file works in my project.

The file is updated w/ _each change_ made to the settings.

Worse yet - the file is updated even when _no actual change_ is made due to the setting already being at its highest / lowest value possible.

/*

e.g. 5 is maximum sound volume.

- You try increasing the sound volume.

- Setting can't get any higher, so remains unchanged.

- *Update the Save Data*.

*/
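What I would have expected instead, as a rough sketch: clamp the value first and only touch the save data when something actually changed. `Settings`, `change_volume` and the `save` callback are hypothetical names, not the ones from my project.

```python
from typing import Callable


class Settings:
    """Hypothetical settings holder that saves only on real changes."""

    MIN_VOLUME = 0
    MAX_VOLUME = 5

    def __init__(self, save: Callable[[int], None], volume: int = 3) -> None:
        self.volume = volume
        self._save = save  # whatever actually writes the Save Data file

    def change_volume(self, delta: int) -> bool:
        # Clamp to the allowed range before comparing.
        new_volume = max(self.MIN_VOLUME, min(self.MAX_VOLUME, self.volume + delta))
        if new_volume == self.volume:
            return False   # already at the limit: nothing changed, nothing saved
        self.volume = new_volume
        self._save(new_volume)
        return True
```

Batching the write until the settings screen is closed would cut the file updates down even further, but that is a separate design choice.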

What kind of abusive masochist would do that?

// Yes... there's always blame.4 -

for fellow Data Scientists/Analysts..

I was wondering... which is the longest maintained time series data of all time? I am just learning about trends, seasonality, etc. in a time series, and wondered if the patterns still exist in fairly large data, like for 100 years or 100,000 days, or if our present forecasting models like ARMA/ARIMA would cover them. -

A note to the team designing recommendations on google ads:

Just because I search for deep learning concepts for personal learning, does not mean I will be purchasing every paid online data science course on the planet.6 -

Every time you give me a CSV to import, why is it arranged differently to the last one, missing info that is required for the point of the thing I'm importing it into, and I have to spend 2 hours going through it with a text editor and even a hex editor? Your data entry is about as clean as my arse after a particularly spicy burrito.3

-

Context: I am leaving my company to work at a data science lab in another one.

My senior dev (with PO hat): we need to gather data from prod to check test coverage. You will like it as you will be data scientist hehehe (actually not funny). You will have to analyze the features, and find relations between them to be able to compare with the existing tests

Me: oh cool, we can use ML to do that!

Him: Nope, we need to do it in the next 3 weeks so we need to do it manually.

Me:... I have quit for something.... -

This is why you keep production data separate -- and out of the hands of developers: http://businessinsider.com/uber-emp...3

-

I have been doing web development on NodeJs and React and then I got bored and I started learning into Data Science, it's been a year since I have been doing courses and learning all the related fields and now I feel like I could use some hands on experience. So I'm looking for Internship as a Data Analyst or ML Engineer role.

Anyone who would be able to help would be great -

So I've been studying masters in business analytics and big data. So far it's been 2 months and I don't have a clear picture about what big data actually is.

I guess that's normal and no cause of concern right guys ? -

I really want to switch my career from being a Full-Stack python/javascript developer to be a Data Engineer.

I've already worked with relational and non-relational databases, troubleshot a couple of Airflow DAGs, deployed production-ready python code, but now I feel kinda lost. Every course I start on the Data engineering topic feels really useless since I feel like I've already worked with that technology/library, but I'm still afraid to start taking interviews.

Any good book/course or resource that I should look into?

BTW first rant in a couple of years, this brings me memories1 -

Anyone else here done a data migration from CiviCRM to Salesforce with a metric arseton of custom fields? Every so often I find some dirty data issue that predates even the previous lead dev and most other people who still work here.1

-

This nonsense gave me an idea. Now I want to start building a big, organised, db for good bad examples. I can think of so many uses for it if everything is tagged/categorised well.

Thank you rando LinkedIn reject, you gave me the best birthday gift I could hope for... another potential branch of my data architecture to play with new data in new and to be discovered ways!

The site of the rando is athensnexus.com -

Question to you all: do you really think you own your computer or system/data when almost all sites/services out there state very clearly in their EULA (fuck yes, ours too) that they might use your data how they see fit? And this does not stop with websites; Mac, Windows and some Linux distros also do this.

I for one stopped thinking that I own data, I just change a few bits to make it look different these days. Nothing on your computer is really yours, software or hardware. Read the EULA/TOS: many reserve the right to recall or revoke use of the items, to the point that even though you paid for them they can take them back.

Items are now sending keylogs, data usage and app usage data to MS, Apple and some big Linux distros, and YES this happens, don't fool yourself. Apple and MS both admit this happens, and both the US and UK are now requesting these companies to let them have full access to this data. If it was not there they wouldn't want it.

This wont stop me from messing with code and loving tech but do you really feel you own anything anymore?

I don't :P7 -

How possible is it to have a decentralised internet?

Because currently we have an almost centralised net... Where the major players are Google, Amazon, Alibaba and the likes acting as data holders.

This really bothers me and it's fair to say that the original dream of an internet for the people, by the people, is long gone.

Can we foresee a p2p network in place of this monopolistic centralised internet? -

"Like ... phenomena united by Einstein's formula E = mc², procedure and data are to some extent two different ways of viewing the same thing."

-

I hate pl-sql and data warehousing. For this project we're extracting from source tables using a generic method equal for every student, changing the data and then copying to a table for analytics.

Everyone's project is fine. Mine occupies 90mb and exceeds the quota already. Delivery due in 2 days... So much for that cs grad. FML. -

Guys Is anyone here a Data Analyst?

Can you guys tell me more about the job and what skills are required?

Thanks in advance1 -

I want to become a data scientist and am now making an Android app to collect data from people. Am I doing this right? Idk wtf I'm doing anymore x(1

-

Basically any idea without any monetizing strategy whatsoever or just the idea of "selling data". What data and to whom?!

-

I've started looking into stuff like GraphQL and Linked Data (though I've used Microdata before on websites) today, and while fiddling around I found this handy tool:

https://search.google.com/structure...

I'm trying to create JSON-LD data and it's really helpful to have something validate my test data 🙂 -
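For anyone who has not seen JSON-LD test data like the post above mentions, here is a minimal sketch built in Python. The schema.org `Person` type and its `name`, `jobTitle` and `url` properties are real vocabulary; the values are made-up placeholders, not anything from the original post.

```python
import json

# A minimal JSON-LD document using the schema.org vocabulary.
# All values below are hypothetical placeholders.
person = {
    "@context": "https://schema.org",
    "@type": "Person",
    "name": "Jane Doe",
    "jobTitle": "Developer",
    "url": "https://example.com/jane",
}

# Serialise to a string that can be pasted into a validator.
json_ld = json.dumps(person, indent=2)
print(json_ld)
```

On a web page this string would typically go inside a `<script type="application/ld+json">` element.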

I just landed a job in data science, and I've been asked by my employer about the hardware specs of the machine that I'm going to use. What do you guys suggest? I don't want to be a financial burden, but I also want something that wouldn't require frequent upgrades.

-

i understand way too little about web data types. while having to store a shitload of data in cookies (sorry for that, no localStorage for local sites, the data is non-sensitive though) i was so proud of compressing strings with bit shifting, only to find out that URI encoding massively bloats chinese characters. fml
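To put numbers on that bloat, here is a small sketch (Python purely for illustration; the sample string and the helper name `encoded_sizes` are made up). It compares percent-encoding, which spends three ASCII characters on every UTF-8 byte, with URL-safe Base64 over the same bytes.

```python
import base64
from urllib.parse import quote


def encoded_sizes(text: str) -> dict:
    """Return the length of `text` under a few text-safe encodings."""
    utf8 = text.encode("utf-8")
    return {
        "chars": len(text),
        "utf8_bytes": len(utf8),
        # quote() percent-encodes each non-ASCII byte as %XX (3 chars per byte).
        "percent_encoded": len(quote(text, safe="")),
        # Base64 needs 4 chars per 3 bytes (plus '=' padding when needed).
        "base64_urlsafe": len(base64.urlsafe_b64encode(utf8)),
    }


sizes = encoded_sizes("数据")
print(sizes)
```

Most CJK characters take 3 bytes in UTF-8, so percent-encoding costs 9 cookie characters per original character, against roughly 4 with Base64.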

-

Anyone got any good resources or advice regarding data visualization and finding relations between data programmatically?

-

I have to build a data platform with Azure Data Factory, and I am new to data platforms.

Any advice on what to look out for?

Could you guys please tell me if you know any good use cases I can look at, or any obvious pitfalls that drain all the credit and so on?

I just have a vague idea of what Azure Data Factory can do. -

What it's like being a data architect working with clients and their staff:

https://youtube.com/watch/... -

Mass notification vendors' lack of understanding that to create value for clients they must turn raw data into information, then into knowledge, then into wisdom. Shoveling oceans of raw data at us does not impress us.

-

So - I basically have free data on Facebook and Messenger traffic. Is there a way I could possibly tunnel all my other traffic through Facebook, which would kind of give me unlimited data?

-

Listening to podcasts on programming and development, and one on data science piqued my interest. Any data scientists out there willing to share what you're actually doing with data science?

-

Have opted for Big Data and analytics this sem as my dept. elective. Can't understand anything in the lectures (as usual). Any suggestions on how to start learning (books, tutorials, courses, etc.)?

-

I'm trying to build a new PC for data-related work. I already have an i7 8700K processor with a decent cooler on an ASRock Z370 Extreme4 mobo and 16 GB RAM. I can't decide between the RX 5700 XT and the RTX 2080 Super.

Please help. Your valuable opinion is highly appreciated. -

I am trying to extract data from a Pub/Sub subscription, and once the data is extracted I want to do some transformation. Currently, it's in bytes format. I have tried multiple ways to extract the data in JSON format using a custom schema, but it fails with an error:

TypeError: __main__.MySchema() argument after ** must be a mapping, not str [while running 'Map to MySchema']

**readPubSub.py**

import apache_beam as beam
from apache_beam.options.pipeline_options import PipelineOptions
import json
import typing

class MySchema(typing.NamedTuple):
    user_id: str
    event_ts: str
    create_ts: str
    event_id: str
    ifa: str
    ifv: str
    country: str
    chip_balance: str
    game: str
    user_group: str
    user_condition: str
    device_type: str
    device_model: str
    user_name: str
    fb_connect: bool
    is_active_event: bool
    event_payload: str

TOPIC_PATH = "projects/nectar-259905/topics/events"

def run(pubsub_topic):
    options = PipelineOptions(
        streaming=True
    )
    runner = 'DirectRunner'

    print("I reached before pipeline")

    with beam.Pipeline(runner, options=options) as pipeline:
        message = (
            pipeline
            | "Read from Pub/Sub topic" >> beam.io.ReadFromPubSub(subscription='projects/triple-nectar-259905/subscriptions/bq_subscribe')#.with_output_types(bytes)
            | 'UTF-8 bytes to string' >> beam.Map(lambda msg: msg.decode('utf-8'))
            | 'Map to MySchema' >> beam.Map(lambda msg: MySchema(**msg)).with_output_types(MySchema)
            | "Writing to console" >> beam.Map(print))

    print("I reached after pipeline")

    result = message.run()
    result.wait_until_finish()

run(TOPIC_PATH)

If I use it directly, without the schema step, as below:

message = (
    pipeline
    | "Read from Pub/Sub topic" >> beam.io.ReadFromPubSub(subscription='projects/triple-nectar-259905/subscriptions/bq_subscribe')#.with_output_types(bytes)
    | 'UTF-8 bytes to string' >> beam.Map(lambda msg: msg.decode('utf-8'))
    | "Writing to console" >> beam.Map(print))

I get output as

{
    'user_id': '102105290400258488',
    'event_ts': '2021-05-29 20:42:52.283 UTC',
    'event_id': 'Game_Request_Declined',
    'ifa': '6090a6c7-4422-49b5-8757-ccfdbad',
    'ifv': '3fc6eb8b4d0cf096c47e2252f41',
    'country': 'US',
    'chip_balance': '9140',
    'game': 'gru',
    'user_group': '[1, 36, 529702]',
    'user_condition': '[1, 36]',
    'device_type': 'phone',
    'device_model': 'TCL 5007Z',
    'user_name': 'Minnie',
    'fb_connect': True,
    'event_payload': '{"competition_type":"normal","game_started_from":"result_flow_rematch","variant":"target"}',
    'is_active_event': True
}

{
    'user_id': '102105290400258488',
    'event_ts': '2021-05-29 20:54:38.297 UTC',
    'event_id': 'Decline_Game_Request',
    'ifa': '6090a6c7-4422-49b5-8757-ccfdbad',
    'ifv': '3fc6eb8b4d0cf096c47e2252f41',
    'country': 'US',
    'chip_balance': '9905',
    'game': 'gru',
    'user_group': '[1, 36, 529702]',
    'user_condition': '[1, 36]',
    'device_type': 'phone',
    'device_model': 'TCL 5007Z',
    'user_name': 'Minnie',
    'fb_connect': True,
    'event_payload': '{"competition_type":"normal","game_started_from":"result_flow_rematch","variant":"target"}',
    'is_active_event': True
}

Please let me know if I'm doing something wrong while parsing the data to JSON. Also, I am looking for examples of data masking and of running some SQL within Apache Beam. -
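A possible fix for the Pub/Sub question above, as a minimal sketch rather than a tested pipeline: after `.decode('utf-8')` each element is still a `str`, and `**` needs a mapping, so the text has to be parsed into a dict first. The printed output uses single quotes and `True`, which looks like a Python dict repr rather than strict JSON, so the sketch tries `json.loads` and falls back to `ast.literal_eval`. The sample records also carry no `create_ts`, so missing fields are filled with `None`. `parse_message` is a hypothetical helper name, and `MySchema` is trimmed to a few of the original fields.

```python
import ast
import json
import typing


class MySchema(typing.NamedTuple):
    # Trimmed for brevity; the remaining fields follow the same pattern.
    user_id: str
    event_ts: str
    create_ts: typing.Optional[str]  # absent from the sample records
    event_id: str
    fb_connect: bool
    is_active_event: bool
    event_payload: str


def parse_message(raw: bytes) -> MySchema:
    """Decode one Pub/Sub payload and map it onto MySchema."""
    text = raw.decode("utf-8")
    try:
        record = json.loads(text)  # strict JSON payloads
    except json.JSONDecodeError:
        record = ast.literal_eval(text)  # Python-repr payloads ('...', True)
    # Keep only known fields; anything missing becomes None.
    return MySchema(**{name: record.get(name) for name in MySchema._fields})


if __name__ == "__main__":
    sample = b"{'user_id': '102105290400258488', 'event_id': 'Game_Request_Declined', 'fb_connect': True}"
    print(parse_message(sample))
```

In the pipeline this would stand in for the two `beam.Map` steps, along the lines of `beam.Map(parse_message).with_output_types(MySchema)`. Separately, the `with beam.Pipeline(...)` block already runs the pipeline when it exits, so the trailing `message.run()` is probably not needed (as far as I know, a PCollection has no `run` method).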

Alright, I need your help! (I'm going to so fucking regret this...) My external backup HDD is corrupt. The EaseUS trial (piece of fucking garbage tool) can detect all the data, but I'm not going to pay 50-80 bucks for this (fucking piece of shit) tool...

What do you use for data recovery?

Or should I just torrent (hate me, but I'm not going to pay for a tool I'm going to use ONE time) some other (not so piece of shit) tool? -

Looks like the EU is about to do another healthy push towards data privacy. What do you guys think? Is this the real deal, or is there something hidden underneath?

https://politico.eu/article/... -

Is there a community for infrastructure or data warehousing? I'm trying to find solutions for current projects, but don't have anyone to ask.

-

Any suggestions on Android data recovery?

The device is not rooted and there weren't any backups.

I have already tried different paid (cracked) software on another device as a test, but none of it worked.

Send Help!! -

What are the basics I should know about "data streaming" to work at video streaming companies as a future senior backend Golang developer?

-

Today I got my plan upgraded to unlimited data for free. It's a dream come true, and between 12am and 12pm I used 8GB hahaha

-

I'm lost here 😑! Got a new job and I'm supposed to analyze/fix/update the internal communication software and hardware. Data security is insanely important and everything should be inexpensive 😑. Any suggestions for software and communication tools I can use?

-

Can you guys please recommend books that made you cry?

My Answer:

Data Structures and Algorithms in Java -

I need to add 44,000 images to a git repo. I tried using git-lfs, but it's too slow when I run the "git add ." command. Is there any faster solution?

Extra information: the images are the data set for my AI model. The reason I use git is that I want to manage my data set more easily, since I am going to add/remove images to/from that data set. -

What is your favorite programming language for implementing algorithms and data structures?

Or to be more specific, if you write interpreters and compilers, what is your tool of choice? -

Here's my latest and greatest(ish) post:

How to overcome GDPR ... with data leaks.

https://loosy.gitlab.io/2019/10/... -

How would one go about pulling the entire post history? ...and the weekly questions? Is that even okay, and if so, do you perhaps share archival data somewhere? I mean devRant, of course.

-

Really need help to upskill as a data engineer. Are there any free sample databases out there with practice questions? I wanna learn database design, data lake and data warehousing skills... I don't know if I'm asking the right questions, but if I'm not making sense, please help me out!

-

So my hosting service recently informed me of a personal information leak due to a data feed that "accidentally" went public. I'm lost for words.

-

So I have begun learning Python, Jupyter notebooks, etc. Do you have any advice for someone like me who's a React dev and trying to switch to data science?

-

Hey guys, I need help. I want to switch from React to the data field. I have 2 years of experience in React; should I go for data science? Can a frontend guy like me become a data scientist? And is a data science job fun compared to React? Or should I become a Power BI developer? I heard that Power BI has a lot of scope as well. Thanks