Join devRant

Do all the things like

++ or -- rants, post your own rants, comment on others' rants and build your customized dev avatar

Sign Up

Pipeless API

From the creators of devRant, Pipeless lets you power real-time personalized recommendations and activity feeds using a simple API

Learn More

Related Rants

Finding this buried as a joke...

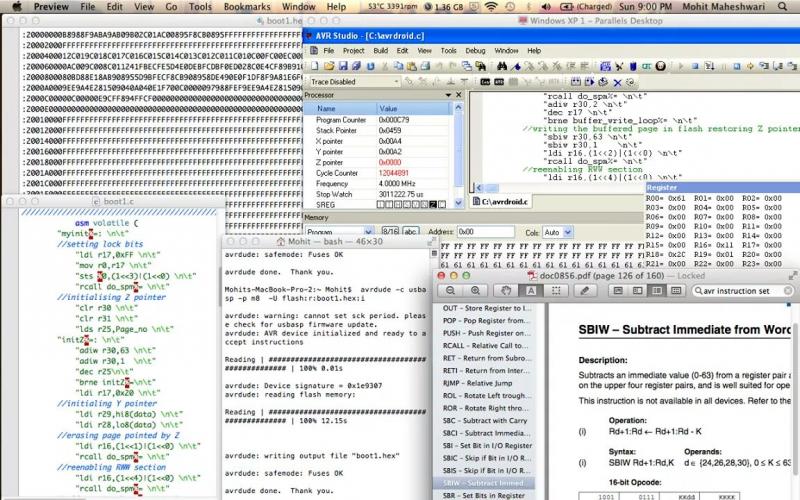

Finding this buried as a joke... In my college days i was designing a bootloader for avr microcontroller , i had the idea to flash code wireles...

In my college days i was designing a bootloader for avr microcontroller , i had the idea to flash code wireles...

I dunno about coolest, but I did sort of cement my reputation as the "database guy" in my first job because of this.

My first job was with a group maintaining a series of websites. Because of the nature of the websites, every morning we had to pull the records from one database on one network, sneaker net the data to a database on another network, and import the data via custom data import function.

However, the live site would crash after 100 or so records were imported. The dba at the live site had to script out a custom data partitioning script to do his daily duties, but it definitely messed up his productivity.

Turns out, the custom mass import function had recycled the standard import function, which was only used to import 1 record at a time, and it never closed its database connections, because it never needed to. A one line fix to production code was delivered 6 months later (because that was our release cycle) and I came up with the temporary work around, which was basically removing the connection limit. It would still crash with the work around, but only with multiple days worth of data. So basically only on Monday. Also developed the test set for the import (15k+ records).

undefined

wk6