Comments

You can always install Stable Diffusion on your PC.

Although I think the latest dataset is a cleaned one as well.

Hazarth 9191 1y
Censorship cuts both ways. No matter if you're liberal or conservative. But hey, I guess this is what the vocal minority of the population wanted. Let's police speech to the extreme :)

kobenz 959 1y
@Chewbanacas no worries, we'll see it very soon 🤣 Not even in my corruption-ravaged country has there been a convicted felon running for the presidency.

anux 711 1y
Censorship is a code smell for a society. It is not the root problem.

In this case, there is still an advantage to it. Nothing stops a human from creating it. Cartoonists, at least, can still maintain a creative edge.

Hazarth 9191 1y
@tosensei true, but I'm not sure what to call it then. It's a company that scraped public data, articles and conversations from the internet, trained a model on it, and then trained that model to reply with a predefined warning to the part of that data they don't agree with.

It sounds a little bit like censorship, since we're talking about using public data and... censoring it? It's not removed from the dataset, it's not filtered, it's covered over by additional RLHF training. "Censor" comes to mind as an intuitive word for this. Not all that different from blurring an unwanted part of a publicly posted image or video.

Hazarth 9191 1y
@tosensei I see what you're saying, but I'd still feel it's different. LLMs are much closer to Google/software than to humans. The LLM didn't decide anything. It was hotwired to respond this way by a round table of engineers. It's much closer to the same round table deciding what can and can't show up on Google. I think we still collectively agree that's censorship, no?

Related Rants

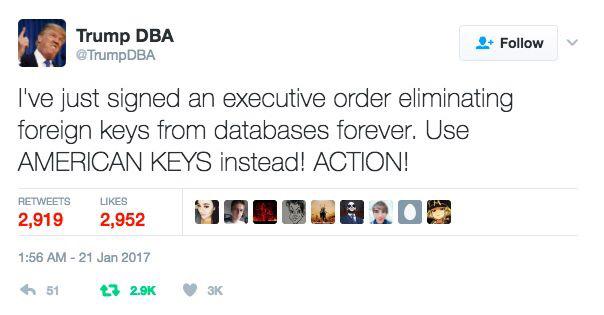

Can't stop laughing.... Brilliant post...

Can't stop laughing.... Brilliant post... I had to do it

I had to do it MAKE THE WEB GREAT AGAIN FTW!

MAKE THE WEB GREAT AGAIN FTW!

Seriously, ChatGPT?? What other use could you have in this historic moment?? Guess OpenAI forgot to train opportunism into its models.

rant

trump