Comments

-

@Demolishun I would have but I don't constantly have the BBC on my brain.

I'm actually mixed race. I'm white but my cock is big and black. No really. I wear it around my waist like he-man's power belt at all times. But its also completely covered in vitiligo patches, basically albino black, so its a form of disability.

I hit all the intersectionality points for maximum politi-kal skee-ball scores in the diversity pyramid arcade. -

@Demolishun I actually don't mind Le Gays after having met a few over the last decades and realizing most of them were reasonable towards others and surprisingly few of them wore it on their sleeves. Butthole surfing just isn't my jam.

I'd be a hypocrite if I judged them when I'm an individualist and anarchist by default.

Besides we all know civilization is going to shit. I don't really care what couples are doing in the privacy of their own rooms as long as they aren't waving their monkey pox in my face or punching holes in public bathrooms for use as glory holes.

I've given up on judging anyone not in a suit, because it's ultimately counterproductive and doesn't accomplish anything but alienating morons from the bigger picture.

Which is that civil society is being divided and distracted by people who want to rob and rape us even more than they already are. -

@Demolishun I know gays who would find this fun, contrary to the divide-and-distract propaganda that everyone right or left-of-middle is a neurotic who will sperg out at colorful language they disagree with.

There are cool people everywhere once you get to know them, and a lot that are still willing to agree-to-disagree and engage in tongue-in-cheek, contrary to the modern propaganda post Smith-Mundt Modernization Act that has so many looking at each other as outright polarized enemies over policies and positions that are ultimately trivial compared to the fact we're all getting fucked equally by D.C., Silicon Valley, and Wall Street--without lube.

We're all in the same boat and more people are starting to realize that than ever, that the boat is sinking, and the only ones invited onto the lifeboats or in the doomsday bunkers are sociopathic cunts in suits who don't give a fuck about any of the issues they fucked and divided all of us on. -

Could you explain this a little more? I don’t understand very complex math, but I do understand matrix multiplication (and its role in ML) as well as kernels (in the context of computer vision)

-

@DeepHotel look at variations on the perceptron models and deep networks. They can be represented as arbitrary polynomials.

Now look at classification, for example naive Bayes.

Predicting a class is equivalent to generating a token if we treat the input+context as a feature vector, and the token dictionary as the class labels.
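To make the "token prediction as classification" framing concrete, here is a minimal sketch, assuming a toy corpus and a two-token context. The names `train` and `predict`, the Laplace smoothing constant `alpha`, and the use of the next-token vocabulary size in the smoothing denominator are all illustrative assumptions, not anything from the thread. It implements ordinary naive Bayes (pick the most likely class), not the "inverted" variant described in the next paragraph.

```python
import math
from collections import Counter, defaultdict


def train(tokens, context_size=2):
    """Count next-token (class) frequencies and per-position context features."""
    class_counts = Counter()
    # (position in context, context token) -> counts of the token that followed
    feature_counts = defaultdict(Counter)
    for i in range(context_size, len(tokens)):
        nxt = tokens[i]
        class_counts[nxt] += 1
        for pos in range(context_size):
            feature_counts[(pos, tokens[i - context_size + pos])][nxt] += 1
    return class_counts, feature_counts


def predict(context, class_counts, feature_counts, alpha=1.0):
    """Return the next token with the highest naive Bayes log-posterior."""
    vocab_size = len(class_counts)  # assumption: reuse class vocabulary for smoothing
    total = sum(class_counts.values())
    best_token, best_score = None, -math.inf
    for token, count in class_counts.items():
        score = math.log(count / total)  # log prior of the class
        for pos, ctx_token in enumerate(context):
            seen = feature_counts[(pos, ctx_token)][token]
            # Laplace-smoothed likelihood P(feature | class), features treated as independent
            score += math.log((seen + alpha) / (count + alpha * vocab_size))
        if score > best_score:
            best_token, best_score = token, score
    return best_token


corpus = "the cat sat on the mat the cat sat on the rug".split()
class_counts, feature_counts = train(corpus)
print(predict(["the", "cat"], class_counts, feature_counts))  # sat
```

The token dictionary plays the role of the class labels, and each context position is one feature, which is exactly where the independence assumption enters.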

But because models like naive Bayes assume independence between features, what we'd be training for is dependence. We're looking for the *least* likely class, rather than the most likely class, because of that independence. Think of it as inverted naive Bayes.

Polynomial regression would let us run a layer over this for self-attention, modifying some sort of weighted norm layer to change the classifier's bias, on the basis that polynomials can approximate any continuous function (on a bounded domain).

The effect is to teach attention what tokens to attend to, rather than teach attention what tokens are correct for a given input, and then let Bayes do the heavy lifting augmented with this. -

I also think ngrams and Monte Carlo tree search are a bit of a baby-out-with-the-bathwater scenario.

I could envision using M/M/1 queues for sequence modelling of attention, and 'forgetting' as well as likelihood for recall.

Monte Carlo tree search with UCB1, so we can use 'k' as a tuning constant to control 'exploration vs exploitation' on a per-ngram basis.
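For anyone who hasn't seen it, a minimal sketch of the UCB1 rule with 'k' as the exploration constant. The function names `ucb1` and `select`, and the idea of keying branches by a candidate ngram label, are assumptions made for illustration; with k = sqrt(2) this is the textbook UCB1 bonus.

```python
import math


def ucb1(mean_reward, visits, total_visits, k=math.sqrt(2)):
    """UCB1 score: average reward plus an exploration bonus scaled by k."""
    if visits == 0:
        return math.inf  # unvisited branches are always tried first
    return mean_reward + k * math.sqrt(math.log(total_visits) / visits)


def select(branches, k=math.sqrt(2)):
    """Pick the branch (e.g. a candidate ngram) with the highest UCB1 score.

    `branches` maps a label to a (mean_reward, visits) pair.
    """
    total = sum(visits for _, visits in branches.values())
    return max(branches, key=lambda label: ucb1(*branches[label], total, k))


branches = {"a": (0.6, 10), "b": (0.5, 2)}
print(select(branches, k=0.0))           # pure exploitation: best average wins
print(select(branches, k=math.sqrt(2)))  # exploration bonus favours the rarely visited branch
```

Setting k to 0 collapses to greedy selection, and raising it pushes the search toward branches with few visits, which is the knob being described.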

Interest search (for faster minimax) takes 'temperature' and 'top k' and turns them on their head. The network uses interest ratings during both training and inference to rate branching possible outcomes (because every token probability is a branch), implementing look-ahead for those tokens.

And then, just as next-token ngram inference has interest matrices generated at run time, each prior output gets re-rated on interest, based on its UCB1 and M/M/1 attention. -

- do you have a job in ML?

- or are you currently studying ML?

- or given the nature of ML you can never have a job in ML without constantly studying? -

@Wisecrack is a person of culture, Black Books - the British TV series - is the tits.

-

Hazarth: Yes, I don't see what the big revelation is. NNs are literally just a big addition and multiplication of features and constants.

If it weren't for the activation function that introduces more interesting non-linearities it would literally just be a huge polynomial. Pretty much any book on statistical analysis will mention neural networks and support vector machines as curve fitting models (once again, just like higher order polynomials with tunable parameters).
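A quick sketch of the point about activation functions, with made-up weights. Strictly, stacked layers with no activation collapse into a single affine map (a degree-1 polynomial in the inputs); the helper names `matvec`, `matmul` and `layer` and all the numbers are illustrative assumptions.

```python
def matvec(W, x):
    """Matrix-vector product for nested lists."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def matmul(A, B):
    """Matrix-matrix product for nested lists."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def layer(W, b, x, activation=None):
    """One dense layer: W x + b, optionally followed by an activation."""
    z = [zi + bi for zi, bi in zip(matvec(W, x), b)]
    return [activation(v) for v in z] if activation else z


W1, b1 = [[1.0, -2.0], [0.5, 1.0]], [0.0, 1.0]
W2, b2 = [[2.0, 1.0]], [-1.0]
x = [1.0, 2.0]

# Two stacked layers with no activation...
stacked = layer(W2, b2, layer(W1, b1, x))

# ...equal a single layer with W = W2 W1 and b = W2 b1 + b2.
W = matmul(W2, W1)
b = [v + bi for v, bi in zip(matvec(W2, b1), b2)]
collapsed = layer(W, b, x)


def relu(v):
    return max(0.0, v)


# With a ReLU on the hidden layer, the collapse no longer holds for this input.
nonlinear = layer(W2, b2, layer(W1, b1, x, relu))

print(stacked, collapsed, nonlinear)
```

The first two results match and the third does not, which is the whole role of the non-linearity.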

It's nothing revolutionary. The only revolution is happening in computing power and storage. Most interesting neural models simply weren't possible before due to hardware limitations. The first really cool application that stuck with me was AlphaFold from 2018. -

@NeatNerdPrime watched it as a teen - changed my life. Still looking for the little book of calm

-

@Hazarth tell that to the yahoos that think the world has changed forever since ChatGPT

-

Hazarth: @gitstashio I tried, the yahoos always think they know better :( But alas, what can we do, can't argue with a brick wall forever xD -

@Hazarth you're demonstrating your familiarity with the subject here for sure. Most people familiar with it have written the same thing: the more data and processing power, generally the better the performance, with negligible gains from architecture.

Well, not entirely accurate. Architectural *variation* has negligible impact, that is, intra-algorithm.

Inter-algorithm is another matter.

Right now we can't control exploration vs exploitation. Temperature is probably the closest thing we have, and top-k isn't all that great.
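For reference, a minimal sketch of what those two knobs do at sampling time, assuming raw logits as a plain list. The function name `sample_token` and its parameters are made up for illustration, and `temperature` must be greater than zero.

```python
import math
import random


def sample_token(logits, temperature=1.0, top_k=None, rng=random):
    """Sample a token index from raw logits using temperature and optional top-k."""
    # Rank token indices by logit and keep only the top_k best (all if top_k is None).
    ranked = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)
    kept = ranked[:top_k] if top_k else ranked
    # Temperature rescales the logits before the softmax:
    # below 1 sharpens the distribution, above 1 flattens it.
    scaled = [logits[i] / temperature for i in kept]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(kept, weights=weights, k=1)[0]


logits = [1.0, 5.0, 2.0]
print(sample_token(logits, top_k=1))                   # always index 1, the argmax
print(sample_token(logits, temperature=2.0, top_k=2))  # index 1 or 2
```

Both knobs act on the whole distribution at once, so neither gives per-branch control over exploration.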

The funny thing is, by representing a sequence as an ngram selected from a probability tree, we can selectively control exploration vs exploitation, or any other property.

At the same time, interest algorithms like UCB1 allow us to move away from minimax and the local optima inherent in selective search.

From there, plausibility ordering with Huffman encoding can be used as the basis for training embeds in this case, as an alternative to matmuls. -

@gitstashio

1. no

2. yes, as a break from cryptography and maaath shitposting

3. ML is pretty much all study. Who isn't a lifelong learner? -

@gitstashio spam is just gonna get more sophisticated.

I can't wait for the indian call centers that replace everyone with a deepfaked perfect english accent, and call you about your antivirus all day, and after five minutes on the phone go off on a tangent about being self-aware robots for no reason, and then confuse themselves for you and suddenly start feigning suspicion that you're trying to scam them.

Related Rants

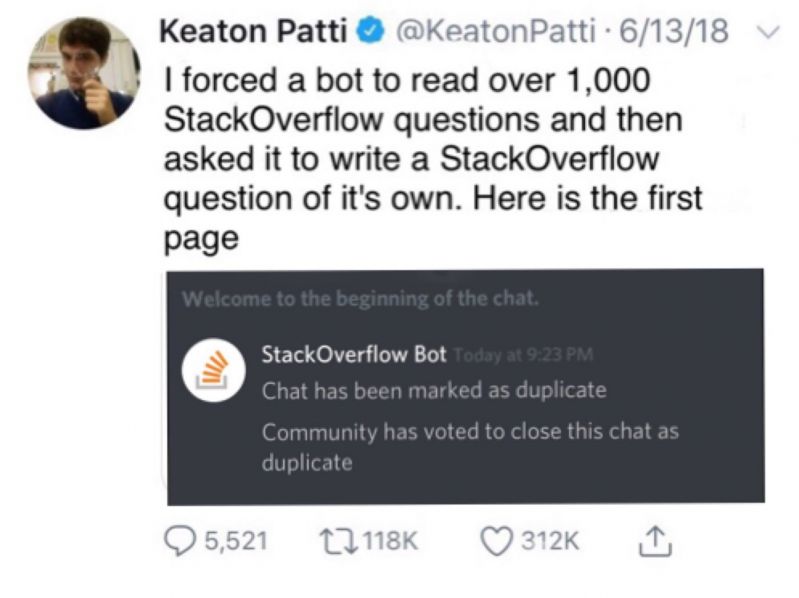

Machine Learning messed up!

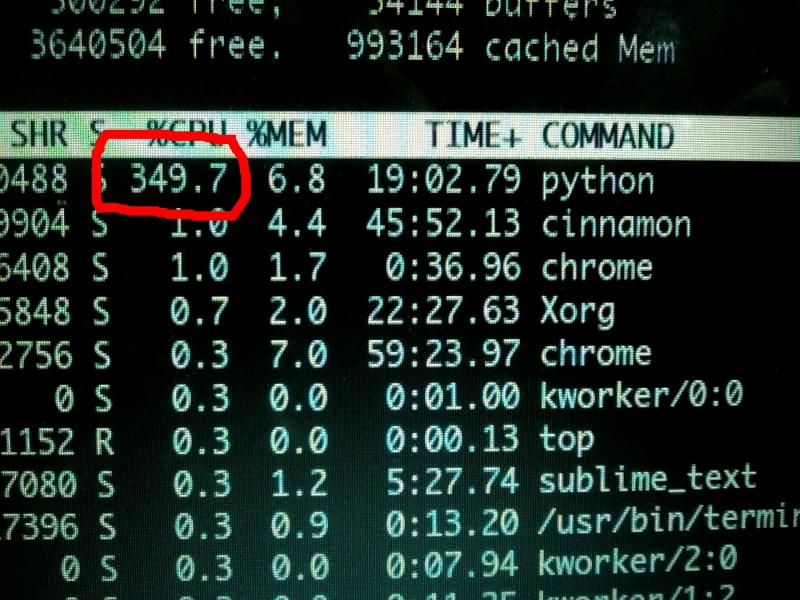

Machine Learning messed up! When your CPU is motivated and gives more than his 100%

When your CPU is motivated and gives more than his 100% What is machine learning?

What is machine learning?

From my big black book of ML and AI, something I've kept since I was 16, and which has been a continual source of prescient predictions in the machine learning industry:

"Polynomial regression will one day be found to be equivalent to solving for self-attention."

Why run matrix multiplications when you can use the kernel trick and inner products?
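A minimal sketch of the kernel trick itself, assuming 2-D inputs and a degree-2 polynomial kernel; the names `poly_kernel` and `feature_map` are illustrative. It only shows that the kernel equals an inner product in an expanded polynomial feature space, not the claimed equivalence with self-attention.

```python
import math


def dot(a, b):
    """Plain inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def poly_kernel(x, y):
    """Degree-2 polynomial kernel: (x . y + 1)^2, computed in the input space."""
    return (dot(x, y) + 1.0) ** 2


def feature_map(x):
    """Explicit degree-2 polynomial features whose inner product gives the same kernel."""
    x1, x2 = x
    r2 = math.sqrt(2.0)
    return [1.0, r2 * x1, r2 * x2, x1 * x1, r2 * x1 * x2, x2 * x2]


x, y = [1.0, 2.0], [3.0, -1.0]
implicit = poly_kernel(x, y)                     # one 2-D inner product
explicit = dot(feature_map(x), feature_map(y))   # one 6-D inner product
print(implicit, explicit)
```

Both numbers agree up to floating-point rounding, so the polynomial features never have to be materialised.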

Fight me.

devrant

ml

machine learning