Join devRant

Do all the things like

++ or -- rants, post your own rants, comment on others' rants and build your customized dev avatar

Sign Up

Pipeless API

From the creators of devRant, Pipeless lets you power real-time personalized recommendations and activity feeds using a simple API

Learn More

Related Rants

No questions asked

No questions asked As a Python user and the fucking unicode mess, this is sooooo mean!

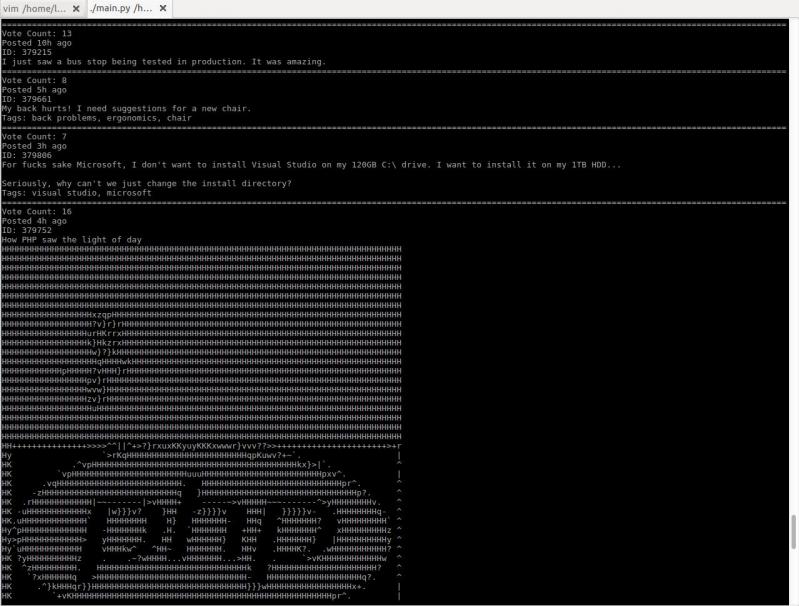

As a Python user and the fucking unicode mess, this is sooooo mean! I just started working on a little project to browse devrant from terminal. It converts images to ascii art!

I just started working on a little project to browse devrant from terminal. It converts images to ascii art!

!rant

Ever find something that's just faster than something else, but when you try to break it down and analyze it, you can't find out why?

PyPy.

I decided I'd test it with a typical Discord bot-style workload (decoding JSON, theoretically from an API, checking if it contains stuff, formatting it and then returning it). It was... 1.73x the speed of CPython.

(Though, granted, this code is more network dependent than anything else.)

Mean +- std dev: [kitsu-python] 62.4 us +- 2.7 us -> [kitsu-pypy] 36.1 us +- 9.2 us: 1.73x faster (-42%)
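For anyone wondering what that kind of workload looks like, here's a rough sketch. The payload shape, the field names and the `handle` function are all made up for illustration; they're not the actual benchmark code.

```python
import json

# Hypothetical payload standing in for an API response.
# The field names are invented for this sketch.
SAMPLE = json.dumps({"data": [{"title": "Cowboy Bebop", "episodes": 26}]})


def handle(raw: str) -> str:
    """Decode the JSON, check it has what we need, then format a reply."""
    payload = json.loads(raw)
    results = payload.get("data") or []
    if not results:
        return "No results."
    first = results[0]
    title = first.get("title", "Unknown")
    episodes = first.get("episodes")
    if episodes is None:
        return f"{title} (episode count unknown)"
    return f"{title} ({episodes} episodes)"


if __name__ == "__main__":
    print(handle(SAMPLE))
```

Nothing fancy: one `json.loads`, a couple of dictionary lookups and an f-string, which is about what a bot command handler does once the network part is out of the way.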

Me: Whoa, how?!

So, I proceeded to write microbenches for every step. Except for the JSON decoding (1.7x faster), every step was at least twice as slow (in one case, one hundred times slower) when tested individually.

The combination of them was faster. Huh.
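A minimal sketch of that kind of step-by-step comparison, using only the standard library's `timeit`. The step functions, the payload and the `bench` helper are assumptions for illustration, not the original microbenches.

```python
import json
import timeit

# Made-up payload; the field names are invented for this sketch.
RAW = json.dumps({"data": [{"title": "Example", "episodes": 12}]})


def decode(raw):
    return json.loads(raw)


def check(payload):
    return bool(payload.get("data"))


def fmt(payload):
    first = payload["data"][0]
    return f"{first['title']} ({first['episodes']} episodes)"


def pipeline(raw):
    # All three steps chained together, as in the combined benchmark.
    payload = decode(raw)
    return fmt(payload) if check(payload) else "No results."


def bench(func, *args, number=100_000, repeat=5):
    """Return the best per-call time in microseconds over several runs."""
    best = min(timeit.repeat(lambda: func(*args), number=number, repeat=repeat))
    return best / number * 1e6


if __name__ == "__main__":
    decoded = decode(RAW)
    for name, func, arg in [
        ("decode", decode, RAW),
        ("check", check, decoded),
        ("format", fmt, decoded),
        ("pipeline", pipeline, RAW),
    ]:
        print(f"{name:>8}: {bench(func, arg):.2f} us per call")
```

Run the same file under both interpreters and compare the per-step lines against the `pipeline` line. Keep in mind that runs this short are sensitive to JIT warm-up, so the numbers can differ from what a long-running process would see.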

By this point, I was all "sign me up!", but... asyncpg (the only sane PostgreSQL driver for Python IMO, using prepared statements by default and such) has some of its functionality written in C, for performance reasons. Not Cython, actual C that links to CPython. That means no PyPy support.

Okay then.

rant

performance

python

pypy