Comments

endor (7y): With ipset you can create a list of IPs and IP blocks to avoid (it works in combination with iptables).

The idea is:

1) get an incoming packet

2) check if it comes from the list of banned ips; if yes, discard it

3) otherwise, keep traversing the other iptables rules
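
The three steps above can be sketched roughly in Python. This only illustrates the matching logic; the real check is done in the kernel by ipset and iptables, not by application code. The names `BANNED`, `is_banned`, and `handle_packet` are made up for this sketch, and the addresses come from reserved documentation ranges.

```python
import ipaddress

# Hypothetical ban list mixing a single IP and an IP block (CIDR notation).
# Addresses are from documentation ranges, not real offenders.
BANNED = [
    ipaddress.ip_network("203.0.113.7/32"),
    ipaddress.ip_network("198.51.100.0/24"),
]


def is_banned(source_ip: str) -> bool:
    """Step 2: check whether the source address falls in any banned entry."""
    addr = ipaddress.ip_address(source_ip)
    return any(addr in net for net in BANNED)


def handle_packet(source_ip: str) -> str:
    """Steps 1-3: drop banned sources, otherwise continue to the next rules."""
    if is_banned(source_ip):
        return "DROP"
    return "CONTINUE"
```

A real ipset stores entries in a hash, so lookups stay fast with large lists; the linear scan here is only for readability.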

As others have mentioned, use fail2ban, and lock down certain pages to specific IP addresses.
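
fail2ban works by watching log files and banning addresses that match failure patterns too often. As a rough sketch of that idea (not fail2ban's actual implementation or configuration), the Python below counts requests to probe routes per IP and returns the ones over a threshold. The log format (client IP first, request line in quotes), the threshold `MAX_HITS`, and the names `PROBE_PATHS`, `LINE_RE`, and `ips_to_ban` are all assumptions for illustration; the routes are the ones named in the question.

```python
import re
from collections import Counter

# Routes mentioned in the question; extend as needed.
PROBE_PATHS = ("/admin", "/ssh", "/phpmyadmin")

# Assumed threshold: ban after this many probe requests from one IP.
MAX_HITS = 3

# Assumes an access log where the client IP comes first and the request
# line is quoted, e.g. '203.0.113.7 - - [...] "GET /admin HTTP/1.1" 404 0'.
LINE_RE = re.compile(r'^(\S+) .*?"(?:GET|POST|HEAD) (\S+)')


def ips_to_ban(log_lines):
    """Return the set of IPs with at least MAX_HITS requests to probe routes."""
    hits = Counter()
    for line in log_lines:
        match = LINE_RE.match(line)
        if match and match.group(2).lower().startswith(PROBE_PATHS):
            hits[match.group(1)] += 1
    return {ip for ip, count in hits.items() if count >= MAX_HITS}
```

The resulting set could then be fed into a ban list such as an ipset; in practice fail2ban handles both the log watching and the banning.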

cursee (7y): OK, probably the most noob question you have ever seen regarding this topic.

The devs said they have set up everything and are also using Cloudflare.

So why do I still see those requests in the logs?

cursee (7y): Thanks guys. I have advised them to learn about fail2ban and implement it.

Let's see 😁

I'll share an update here if there is any.

Personally I just use someone's services for my projects if it's something I'm not strong at. This advice is for a friend.

Related Rants

Did you say security?

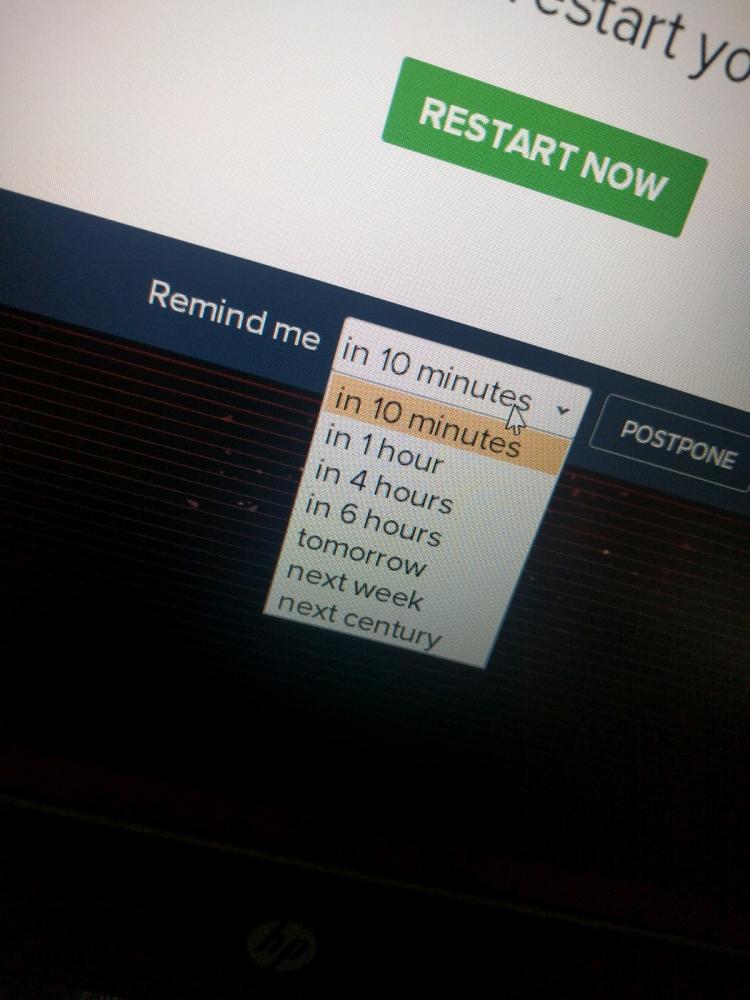

Did you say security? 10 points for next century option.

10 points for next century option.

Question for Web Server Gurus and Security Ninjas.

How do you prevent bots, crawlers, and spammers from sending numerous requests to your web servers?

There have been numerous requests to the web server for routes like /admin, /ssh, /phpmyadmin, and all kinds of other stuff.

Is there a way to automatically block those stupid IPs? :/

question

server

security