Comments

stop: It works perfectly on Linux, because CMake can find the libs easily. Under Windows they are hard to find, because the libs can be anywhere. -

@stop this

It's not hard to set up a CMake config file, even with different targets. And yeah, the pkg-config and find features are great. If I work on any C/C++ project, I would prefer CMake any day.

Most of the time, installing the software on other machines is easy as well.

Just create a build folder,

cd into that folder,

cmake ..

cmake --build .

cmake --install . (if needed; requires CMake 3.15 or newer)

(if my memory is correct)

that's it
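For anyone who wants to script those steps, here is a minimal sketch in Python. It uses the `-S`/`-B` form (CMake 3.13+) so no `cd` is needed. The function names `cmake_commands` and `run_build` and the default folder names are made up for illustration, not something from the thread.

```python
import subprocess


def cmake_commands(source_dir=".", build_dir="build", install=False):
    """Return the CMake command lines for an out-of-source build.

    Hypothetical helper: the directory defaults are assumptions.
    """
    commands = [
        # Configure: read CMakeLists.txt from source_dir, generate into build_dir.
        ["cmake", "-S", source_dir, "-B", build_dir],
        # Build with whatever generator the configure step picked.
        ["cmake", "--build", build_dir],
    ]
    if install:
        # Install step, only if needed (CMake 3.15+).
        commands.append(["cmake", "--install", build_dir])
    return commands


def run_build(source_dir=".", build_dir="build", install=False):
    """Run each step in order and stop at the first failure."""
    for command in cmake_commands(source_dir, build_dir, install):
        subprocess.run(command, check=True)
```

Calling `run_build(install=True)` from the project root would configure, build and install in one go, assuming `cmake` is on the PATH.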

The Windows argument is valid though. I always had trouble compiling stuff on Windows. On macOS it works like a charm though. -

@thebiochemic why doesn't goddamn Microsoft support more single-instance shared libraries and publish the paths through some underlying system like Linux does? I know there can be downsides, for example if the exposed name is libtiff, it's a symlink to an installed libtiff-1.2, and you need 1.4. Seems like there would be a workaround for that in the works too.

And no, Anaconda and ideas like it are not workarounds, and Flatpak just copies Windows' application-space inefficiencies! -

@MadMadMadMrMim

I know GDAL,

and I built it.

I needed that at my previous job, since I was the GIS guy back then. It was needed for MapServer.

To be fair, GDAL is a mess in general, as are a few other MapServer dependencies. I remember needing something like two different versions of the same dependency for libraries that were in turn needed for MapServer. -

@thebiochemic the code is drawing from a lot of places indeed.

I finally gave up on doing a fresh build to get the bleeding edge and just used OSGeo4W. -

@thebiochemic hey, what do you think about sectioned PNGs over .img rasters?

They save a LOT of space. I'm just not sure what the performance loss looks like, since I'm guessing GDAL seeks within one file the OS already has open, instead of making more OS calls to open more tiles, etc.

I already wrote a space-consumption test: the raster I tested my algorithm on drops a LOT in size, given that rasters with .ige files are totally uncompressed. But I haven't gotten around to writing the test to see whether it slows things down a lot. -

@MadMadMadMrMim

Not sure, honestly. I always converted raster files to GeoTIFF.

If there was a performance penalty, it was offset by a MapCache running in between, which cached all files in an SQLite database.

That worked pretty well.

The first render of the tile took a bit longer, but every subsequent access was nearly instant.
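The slow-first-render, fast-afterwards behaviour can be sketched roughly like this. To be clear, this is not MapCache's actual schema or API, just an illustration of the idea. The `TileCache` class, the table layout and the `render` callback are all assumptions.

```python
import sqlite3
from typing import Callable


class TileCache:
    """Cache rendered tiles in SQLite, keyed by (zoom, x, y).

    Hypothetical sketch, not how MapCache stores its tiles.
    """

    def __init__(self, path: str, render: Callable[[int, int, int], bytes]):
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS tiles ("
            "z INTEGER, x INTEGER, y INTEGER, data BLOB, "
            "PRIMARY KEY (z, x, y))"
        )
        self.render = render  # the slow path, e.g. a request to the map server

    def get(self, z: int, x: int, y: int) -> bytes:
        row = self.db.execute(
            "SELECT data FROM tiles WHERE z = ? AND x = ? AND y = ?",
            (z, x, y),
        ).fetchone()
        if row is not None:
            return row[0]  # cache hit: no rendering needed
        data = self.render(z, x, y)  # cache miss: render once, then store
        self.db.execute(
            "INSERT INTO tiles (z, x, y, data) VALUES (?, ?, ?, ?)",
            (z, x, y, data),
        )
        self.db.commit()
        return data
```

Passing `":memory:"` as the path keeps the cache in RAM for testing. A file path makes it survive restarts.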

But now that you mention it, I remember having less control over the values or their resolution in PNG. In my case the GeoTIFF was a grayscale image with values from -11k to 9k or something, and therefore worked better. -
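A rough back-of-the-envelope for the resolution point, assuming the values get linearly rescaled into an unsigned PNG grayscale channel (PNG samples are unsigned, so the negative values need an offset anyway). The -11000 to 9000 range is only the approximate figure from the comment above, and `quantization_step` is a made-up helper name.

```python
def quantization_step(lo: float, hi: float, bits: int) -> float:
    """Smallest value difference that survives linear rescaling
    of the range [lo, hi] into an unsigned integer of `bits` bits."""
    levels = 2 ** bits - 1  # number of steps between the lowest and highest code
    return (hi - lo) / levels


# Approximate range from the comment above (an assumption, not measured data).
step_8bit = quantization_step(-11000, 9000, 8)
step_16bit = quantization_step(-11000, 9000, 16)
print(f"8-bit step:  {step_8bit:.2f}")
print(f"16-bit step: {step_16bit:.3f}")
```

Under those assumptions an 8-bit channel can only tell values apart in steps of about 78 units, while a 16-bit channel gets down to about 0.3.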

@thebiochemic so did you encounter many rasters that had many layers of data? And were the GeoTIFFs the result of splitting the larger huge-ass raster into tiles? I only worry about the display lag if I have to rescale a bunch of them to get a 1000-mile view of the data when visualizing it. But creating a fairly comprehensive set of geospatial indexes and parent/child/sibling relationships was the direction I was going to take. I'm on a slight hiatus from my project at the moment.

-

@MadMadMadMrMim

Ah no, the images that were sent to the clients were PNG tiles all the way. But the GeoTIFF was a huge chunk of image data (I think more than 8 GB).

I think I used WMS as the MapServer output (and I think for MapCache as well later, though I'm not sure). It worked like Google Maps or OpenStreetMap, where the tiles are loaded from multiple different endpoints, round-robin style. I used OpenLayers for the browser output and built a whole suite of custom tools on top of it.

I did encounter files with multiple layers, though they weren't images. More like raw data in files, or straight-up database tables in a DB.
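The round-robin tile loading mentioned above boils down to something like the sketch below. The hostnames and the `assign_endpoints` helper are invented for illustration. The real setup did this in the browser, not in Python.

```python
from itertools import cycle


def assign_endpoints(tiles, endpoints):
    """Pair each tile with an endpoint, rotating through the endpoints in order."""
    if not endpoints:
        raise ValueError("need at least one endpoint")
    rotation = cycle(endpoints)
    return [(next(rotation), tile) for tile in tiles]


# Hypothetical hostnames, purely for illustration.
endpoints = ["a.tiles.example", "b.tiles.example", "c.tiles.example"]
tiles = [(5, 10, 12), (5, 11, 12), (5, 10, 13), (5, 11, 13)]
for host, (z, x, y) in assign_endpoints(tiles, endpoints):
    print(f"{host}: z={z} x={x} y={y}")
```

Spreading requests across several hostnames like this was a common way to get around the browser's per-host connection limit.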

Related Rants

-

DrPenguin9

DrPenguin9 !rant

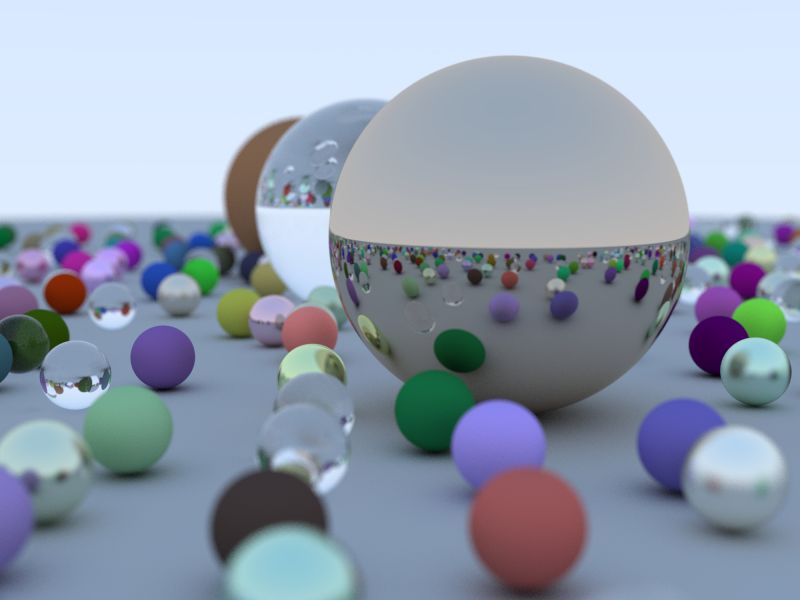

Some days ago I finished "Ray tracing in a weekend" (Peter Shirley) and I'm learning a lot :D

In the n...

!rant

Some days ago I finished "Ray tracing in a weekend" (Peter Shirley) and I'm learning a lot :D

In the n... -

pps839In 15+ years of full time work as a C++ software engineer there is one tool that I always hated: CMAKE. What a...

pps839In 15+ years of full time work as a C++ software engineer there is one tool that I always hated: CMAKE. What a... -

Hastouki2

Hastouki2 This bonehead wants to delete your C++ code. He obviously means that to use this for out-of-source builds, but...

This bonehead wants to delete your C++ code. He obviously means that to use this for out-of-source builds, but...

Is CMake really worthwhile? Like, I see the point of autotools, and it seems pretty worthwhile, but why make something that, from the looks of it, you have to hand-configure with a vast array of tags you'll use maybe once per project?

Is there maybe a frontend for it? I mean, I know the concept they state, but it hasn't worked very well on Windows for the last several projects I tried to build with it. On Linux everything works LOL. But if the point is cross-platform building, what's the deal?

question

cmake