
Comments

-

The problem with that is that we can't write files to the server, and they have a strict POST max size.

-

Thanks guys. Never heard of it. Will do research and learn it.

Will comment again on Monday to let you guys know how it went.

-

We don't have file write permissions on the server, so we can't export it there to transfer it.

-

jchw: @wolt actually it does: it's more robust (to issues like corruption, using rolling checksums) and has compression support built in. Besides that, scp is an old program that predates modern alternatives like sftp and rsync, and as far as I can tell the only good reason to use it is if those are not supported.

-

If no other solution works for you, please avoid S3/Drive/Box. Use Storj instead. It is free.

Related Rants

!rant, HTML is the toughest

!rant, HTML is the toughest We got a winner here!

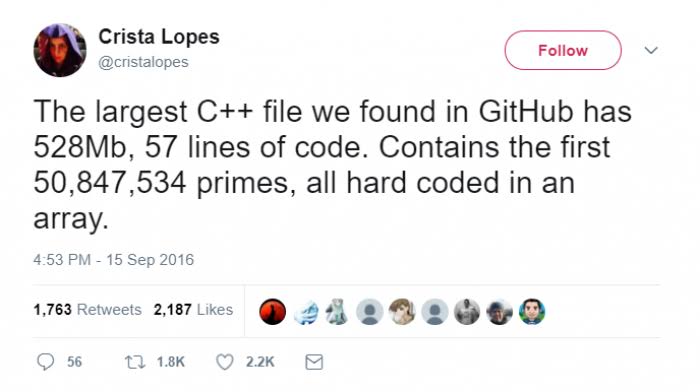

That guy should be remembered.

We got a winner here!

That guy should be remembered. !Rant

So the interns tried to 3d print a rubberduck and it got stuck mid way.

Guess i have a coding duck no...

!Rant

So the interns tried to 3d print a rubberduck and it got stuck mid way.

Guess i have a coding duck no...

I have to transfer about 2 GB of data from one remote server to another. Any suggestions?

My idea was to do multiple curl requests while compressing the data using gzcompress.

Preliminary testing shows that won't work. Now I'm considering putting the data in a file on our S3 bucket for the other server to obtain.
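For anyone who wants to try the chunk-and-compress idea anyway, here is a rough sketch of it under the constraints mentioned in the comments (no file writes, a POST size limit). It is written in Python rather than PHP; `zlib.compress` produces the same zlib format as PHP's `gzcompress`. The function names and the `MAX_POST_BYTES` value are made up for illustration, the real limit is not given in this thread, and the actual HTTP upload is left out.

```python
import hashlib
import zlib
from typing import Iterable, Iterator, Tuple

# Hypothetical limit: the receiving server's real POST max size is not stated.
MAX_POST_BYTES = 8 * 1024 * 1024


def iter_compressed_chunks(
    data: bytes,
    raw_chunk_size: int,
    max_post_bytes: int = MAX_POST_BYTES,
) -> Iterator[Tuple[int, bytes]]:
    """Yield (index, zlib-compressed chunk) pairs, all held in memory."""
    for index, start in enumerate(range(0, len(data), raw_chunk_size)):
        compressed = zlib.compress(data[start:start + raw_chunk_size])
        # Refuse to produce a request body the receiver would reject.
        if len(compressed) > max_post_bytes:
            raise ValueError(
                f"chunk {index} is {len(compressed)} bytes after compression, "
                f"over the {max_post_bytes} byte limit; use a smaller raw_chunk_size"
            )
        yield index, compressed


def reassemble(chunks: Iterable[Tuple[int, bytes]]) -> bytes:
    """Decompress chunks in index order and join them, without touching disk."""
    return b"".join(zlib.decompress(body) for _, body in sorted(chunks))


def sha256_hex(data: bytes) -> str:
    """Digest both sides can compare to confirm the transfer was complete."""
    return hashlib.sha256(data).hexdigest()
```

Each yielded chunk would go out as one request body together with its index, and the receiver would compare `sha256_hex` of the reassembled data with the sender's value. Note that `reassemble` keeps everything in memory, which may itself be a problem at 2 GB.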
