Comments

hinst (1262, 3y): Docker on Raspberry Pi Zero = RIP

You can write a systemd unit file for each of your service apps.
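A minimal sketch of what such a unit file could look like, generated from Python so the same template can be reused for every bot. The paths (`/home/pi/bots`, `/usr/bin/python3`), the user `pi` and the helper name `render_service` are assumptions for illustration, not something from the original post.

```python
from textwrap import dedent

# Assumed layout: scripts live in /home/pi/bots and run as user "pi".
UNIT_TEMPLATE = dedent("""\
    [Unit]
    Description={description}
    After=network-online.target
    Wants=network-online.target

    [Service]
    User={user}
    WorkingDirectory={workdir}
    ExecStart={command}
    Restart=on-failure
    RestartSec=5

    [Install]
    WantedBy=multi-user.target
""")


def render_service(description, command, workdir="/home/pi/bots", user="pi"):
    """Return the text of a systemd .service unit for one long-running script."""
    return UNIT_TEMPLATE.format(
        description=description, command=command, workdir=workdir, user=user
    )


if __name__ == "__main__":
    # ExecStart needs an absolute path to the interpreter.
    print(render_service(
        "Discord bot 1",
        "/usr/bin/python3 /home/pi/bots/discordbot1.py",
    ))
```

Saved as e.g. `/etc/systemd/system/discordbot1.service`, the unit would be picked up with `systemctl daemon-reload` and started at boot with `systemctl enable --now discordbot1.service`. `Restart=on-failure` is what replaces the "hope it doesn't crash" part of the bash approach.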

rantydev (260, 3y): I've used supervisor on RPis. Lightweight and easy to set up. It can also be set to monitor and restart programs automatically in case they crash. 10/10 would use again
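For reference, a rough sketch of the supervisor side: one `[program:x]` section per script, with `autorestart` doing the crash recovery mentioned above. The program names, script paths and the helper `render_supervisor_conf` are made up for the example.

```python
import configparser
import io


def render_supervisor_conf(programs):
    """Build supervisord [program:x] sections from a {name: command} mapping."""
    config = configparser.ConfigParser(interpolation=None)
    for name, command in programs.items():
        config[f"program:{name}"] = {
            "command": command,
            "autostart": "true",    # start when supervisord starts
            "autorestart": "true",  # restart whenever the process exits
        }
    buffer = io.StringIO()
    # Write key=value without spaces, matching the style of supervisor's docs.
    config.write(buffer, space_around_delimiters=False)
    return buffer.getvalue()


if __name__ == "__main__":
    # Hypothetical paths for the scripts from the question.
    print(render_supervisor_conf({
        "discordbot1": "python3 /home/pi/bots/discordbot1.py",
        "webscraper2": "node /home/pi/bots/webscraper2.js",
    }))
```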

If you want to export metrics, the best option is definitely to expose a Prometheus endpoint and scrape it.

You can do this in any language. The coding side is trivial in my opinion; what's harder is understanding metrics, the naming of metrics and the metric types (gauge, counter, ...).
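To give an idea of how little code the endpoint itself needs, here is a standard-library-only sketch that serves one counter and one gauge in the Prometheus text format. The metric names, the `STATE` dict and port 8000 are invented for the example; in a real bot you would more likely reach for the official `prometheus_client` package instead of formatting the text by hand.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical application state that the bot would update while running.
STATE = {"messages_handled": 0, "queue_length": 0}


def current_metrics():
    """Return (name, type, help, value) tuples for the current state."""
    return [
        # Counter: only ever goes up, conventionally suffixed with _total.
        ("bot_messages_handled_total", "counter",
         "Messages handled since start.", STATE["messages_handled"]),
        # Gauge: a value that can go up and down.
        ("bot_queue_length", "gauge",
         "Jobs currently waiting in the queue.", STATE["queue_length"]),
    ]


def render_metrics(metrics):
    """Render metric tuples in the Prometheus text exposition format."""
    lines = []
    for name, metric_type, help_text, value in metrics:
        lines.append(f"# HELP {name} {help_text}")
        lines.append(f"# TYPE {name} {metric_type}")
        lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


class MetricsHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = render_metrics(current_metrics()).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


if __name__ == "__main__":
    # Listen on loopback only; a local scraper picks the metrics up from here.
    HTTPServer(("127.0.0.1", 8000), MetricsHandler).serve_forever()
```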

The nice thing about Prometheus is its wide adoption.

You can easily use InfluxDB's Telegraf to scrape *multiple* Prometheus endpoints, InfluxDB endpoints etc. and output a *single* scrape point with metrics.

One port open externally, everything else on the loopback interface. Firewall configuration is easy peasy.

Grafana is a possibility for dashboards.

The beauty is: Telegraf and Prometheus let you split the simpler task (gathering metrics) from the beefy part (aggregation, evaluation, representation, etc.).

So you can just run Telegraf on the RPi, scrape it from another system and do everything heavy on that other system, too.

--

Do you want to execute a certain command at a certain time?

Go for systemd timers.

The primary reason is flexibility and recoverability.

systemd timers do *not* just run at a fixed interval; they can, if wanted, store the time of the last execution.

Meaning that if for whatever reason there is an interruption like a reboot, the timer will still trigger once the system is back up.

Plus you get the dependency management of units, which simplifies things when you depend on e.g. a network connection, certain mounts etc.
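As a sketch of that setup: a `.timer` unit with `Persistent=true` (the "store the last execution" part) plus a oneshot `.service` that waits for the network. The unit contents are rendered from Python here; the schedule, paths and helper names are assumptions for illustration.

```python
def render_timer(description, on_calendar):
    """Return a .timer unit that catches up on runs missed while powered off."""
    return (
        "[Unit]\n"
        f"Description={description}\n"
        "\n"
        "[Timer]\n"
        f"OnCalendar={on_calendar}\n"
        # Persistent=true stores the last trigger time on disk, so a run
        # missed during downtime is started when the timer is next active.
        "Persistent=true\n"
        "\n"
        "[Install]\n"
        "WantedBy=timers.target\n"
    )


def render_oneshot_service(description, command):
    """Return the .service unit the timer starts; it waits for the network."""
    return (
        "[Unit]\n"
        f"Description={description}\n"
        "After=network-online.target\n"
        "Wants=network-online.target\n"
        "\n"
        "[Service]\n"
        "Type=oneshot\n"
        f"ExecStart={command}\n"
    )


if __name__ == "__main__":
    # Hypothetical names: webscraper1.timer starts webscraper1.service,
    # because a timer activates the service with the same name by default.
    print(render_timer("Run web scraper 1 every hour", "hourly"))
    print(render_oneshot_service(
        "Web scraper 1",
        "/usr/bin/python3 /home/pi/bots/webscraper1.py",
    ))
```

With both files in `/etc/systemd/system/`, `systemctl enable --now webscraper1.timer` would activate the schedule, and `systemctl list-timers` shows the last and next run.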

--

What you should *NEVER* do is try to run multiple commands inside e.g. a Docker container.

Don't try to be clever and e.g. define a CMD with multiple commands or processes that run in parallel.

It's fragile, it's an anti-pattern, it's painful and it will haunt you in many ways.

--

Why metrics instead of raw data… like a JSON endpoint?

The beauty of metrics is that they take away the math: interpolation, timeline etc. All done. No need to bother. No room for mistakes in format.

The only complexity is understanding and writing proper metrics (naming, tagging, types).

Related Rants

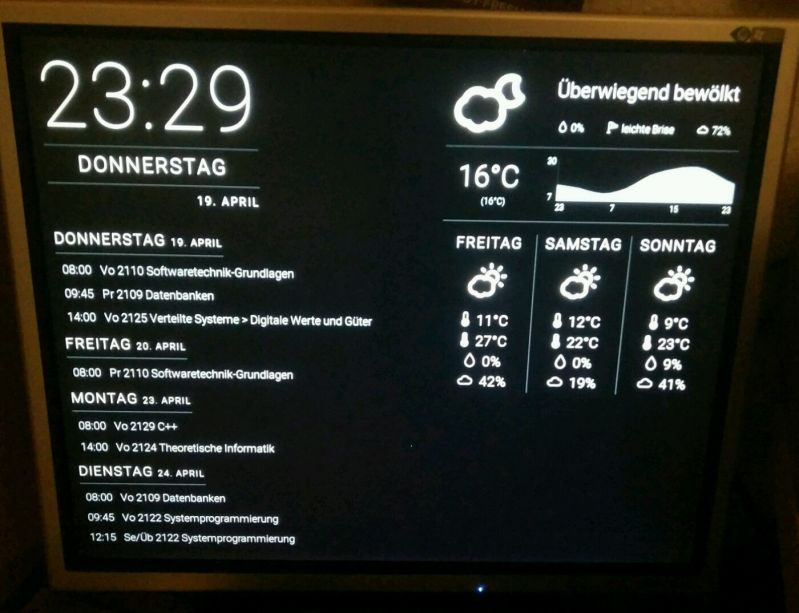

I've made a thing and I'm proud of it.:D

I've made a thing and I'm proud of it.:D This is how cables are meant to be! 😍

This is how cables are meant to be! 😍

How do you guys monitor programs on your servers?

For example, I have a Raspberry Pi Zero W running Raspbian (headless). On this Pi, I have a bunch of Discord bots and web scrapers running at the same time. My solution was to run them all from a bash file:

python3 discordbot1.py &

python3 discordbot2.py &

python3 webscraper1.py &

node webscraper2.js &

etc.

Is there a better way I could be running these services? How is stuff like this usually done?

question

raspberry

multiple programs

servers