Ranter

Join devRant

Do all the things like

++ or -- rants, post your own rants, comment on others' rants and build your customized dev avatar

Sign Up

Pipeless API

From the creators of devRant, Pipeless lets you power real-time personalized recommendations and activity feeds using a simple API

Learn More

Comments

-

Liebranca1276222dIf its just for RNG then you dont need accurate time, read the cycle counter on the CPU instead. The modulo part is the same.

Liebranca1276222dIf its just for RNG then you dont need accurate time, read the cycle counter on the CPU instead. The modulo part is the same.

This looks like one way to do it in rust https://github.com/gnzlbg/tsc/...

The _rdtsc() call is the important part (see rdstc instruction for the x86: https://felixcloutier.com/x86/...). Everything else seems to be about translating the instruction to different architectures, if Im interpreting the code correctly.

Performance wise, reading the counter should take around 40 cycles on average, at 3GHz that should be 15 nanoseconds I think. Your mileage may vary.

Applying modulo depends on whether youre dividing by a constant, as the division would be optimized out by the compiler in that case and replaced with cheaper instructions. Anyway, even in the worst case its somewhere around a 100 cycles, so 35 nanoseconds at 3GHz.

Estimated total: 50-80 nanoseconds. Time it, I could be wrong. -

Liebranca1276222dlmao, rustc strikes again.

Liebranca1276222dlmao, rustc strikes again.

We may have to look at the disassembly to get to the bottom of it; this goes pretty fast on my dice roll util (https://gist.github.com/Liebranca/...), so why in all of the world's fuck would compiled code be slower than that, no idea.

Also did you just call me nerd. I'll have you know I'm a man of science!!!11 -

jestdotty7560222d@Liebranca ok sorry I got distracted. x10'ed some money somewhere, woo not being a failure

jestdotty7560222d@Liebranca ok sorry I got distracted. x10'ed some money somewhere, woo not being a failure

fixed up my test to not be retarded / made benchmark fn

and yeah it's like x5 as fast with the CPU timer turns out

when I first ran my code I was accidentally running some other random method cuz I forgot to comment it out lol

revised numbers:

system:

- 10k: 400-450 nanos

- 100k: 2500-3600 nanos

instant:

- 10k: 900-1300 nanos

- 100k: 9600-11000 nanos

cpu:

- 10k: 65-75 nanos

- 100k: 650-750 nanos -

Liebranca1276222d@jestdotty yeah, I went to write my own benchmark as the numbers seemed too low. It didn't add up at all haha.

Liebranca1276222d@jestdotty yeah, I went to write my own benchmark as the numbers seemed too low. It didn't add up at all haha.

"ms" is for milliseconds (1/1,000), "μs" is for microseconds (1/1,000,000) and "ns" is for nanoseconds (1/1,000,000,000). Note that the consensus is that nowadays it's pointless to use nanoseconds as resolution for measuring runtime of software, but I still do it because I'm counting cycles.

If I give you a number in seconds, with nine decimal places, then you can read it as such: 0s.000ms,000us,000ns

Anyway, highest times I'm seeing when timing my handrolled fasm:

- 0.001|985|073s for a thousand iterations.

- 0.002|364|874s for ten thousand.

- 0.004|305|840s for a hundred thousand.

- 0.025|751|829s for a million.

- 0.106|004|000s for ten million.

- 0.928|516|150s for a hundred million.

This includes the overhead of forking to run the program. But even the most extreme test is still somehow under a second, fucking shit. -

Liebranca1276221d@jestdotty Well, if I were to run the benchmark on the assembly code directly, rather than measuring time spent inside the program from a shell script, then I would get a more precise result...

Liebranca1276221d@jestdotty Well, if I were to run the benchmark on the assembly code directly, rather than measuring time spent inside the program from a shell script, then I would get a more precise result...

Let's try that. I'll be back in a few hours! -

Liebranca1276221dAlright, remind me to never do that again.

Liebranca1276221dAlright, remind me to never do that again.

As expected, lower number of iterations (say 30-40K downwards) run in under a millisecond, because I'm not spawning a new process inbetween starting the clock. But results are about the same for everything else. -

typosaurus12049221dWhy not just incremental.

typosaurus12049221dWhy not just incremental.

I thought this would be fast:

```

int unique_id(){

static unsigned long id = 0;

return id++;

}

```

But it's 81.01µs for 10.000 rounds.

1.9ms for 100.000 rounds -

Liebranca1276221d@retoor You're only reading from memory and writing back, so context switches causing cache misses. At some point your process lives long enough for an interrupt to happen.

Liebranca1276221d@retoor You're only reading from memory and writing back, so context switches causing cache misses. At some point your process lives long enough for an interrupt to happen.

But now pair the id with say a 256 long array of pseudo random numbers. Return array[id++ AND $FF] and you've got the PRNG from the original DOOM.

Would that be faster? No. But it's cooler. -

typosaurus12049221dBenchmarking is addicting. I found out that wrapping functions to an OOP object takes quite a performance hit in wren language.

typosaurus12049221dBenchmarking is addicting. I found out that wrapping functions to an OOP object takes quite a performance hit in wren language.

@Liebranca you should check wren too so you can forget about perl ;) wren is a complete language having only the basics. It kinda misses stdlib so it's nice to work on -

typosaurus12049221d@jestdotty yeah, it's what i mostly do. It's guaranteed unique in a way you're sure. A UUID4 is only as unique as all the grains of sand in the world. That means collision is possible! Oh no!

typosaurus12049221d@jestdotty yeah, it's what i mostly do. It's guaranteed unique in a way you're sure. A UUID4 is only as unique as all the grains of sand in the world. That means collision is possible! Oh no! -

typosaurus12049221d@jestdotty worked on an OS? Cool. It does fit in my type of projects in case of doing everything myself but the time span is a bit high and doubt if i ever get the networking implemented. But for implementing network @awesomemeest offered help :)

typosaurus12049221d@jestdotty worked on an OS? Cool. It does fit in my type of projects in case of doing everything myself but the time span is a bit high and doubt if i ever get the networking implemented. But for implementing network @awesomemeest offered help :) -

typosaurus12049221dHow do you benchmark something with 7ns? The code to calculate the duration already costs 100ns using the language of angels (C):

typosaurus12049221dHow do you benchmark something with 7ns? The code to calculate the duration already costs 100ns using the language of angels (C):

nsecs_t start = nsecs();

nsecs_t end = nsecs();

Retrieving nsecs() is a few lines tho. I do have a decade old laptop tho. But still. These are the times my check comes with:

471ns

(っ◔◡◔)っ ♥ ./unique

250ns

(っ◔◡◔)っ ♥ ./unique

827ns

(っ◔◡◔)っ ♥ ./unique

230ns

(っ◔◡◔)っ ♥ ./unique

433ns

Yes, that's how my bash prefix look like. Deal with it :P -

typosaurus12049220d@jestdotty the CPU takes the same amount of time to solve a sudoku puzzle. It's hard to measure smth like that i guess. How consistent is your 7ns?

typosaurus12049220d@jestdotty the CPU takes the same amount of time to solve a sudoku puzzle. It's hard to measure smth like that i guess. How consistent is your 7ns? -

typosaurus12049220d@soldierofcode don't flip, but there's someone here repeating the same code with same parameters to measure speed and it wasn't me this time. It's the dottii!

typosaurus12049220d@soldierofcode don't flip, but there's someone here repeating the same code with same parameters to measure speed and it wasn't me this time. It's the dottii! -

typosaurus12049220dMy bench mark works like this:

typosaurus12049220dMy bench mark works like this:

RBENCH(times, {//code}}). It's a c macro. The only way to benchmark it even more secure is to generate one huge file with the code duplicated on it and compile it once. You're indeed canceling out the loop overhead.

I iterated many times to cancel out luck of poker. It takes 3000 times playing to ensure you have the mathematical % of winning. For example, if math says 7%, it requires you 3000 times playing with those cards to actually reach it. The pokercard simulator was a beautiful piece of code that I lost. It contained the whole game. You could also give amount of players as parameter. Didn't matter, random is random. Still, I'm sure that computers random doesn't come in neighborhood of real random.

My friend that visits me today calculated the three doors problem using code and said, it's better to switch. Sure, with the "random" function you use. I should replicate that code and use different Random fns. Mysql's random is once proven not to be so

Related Rants

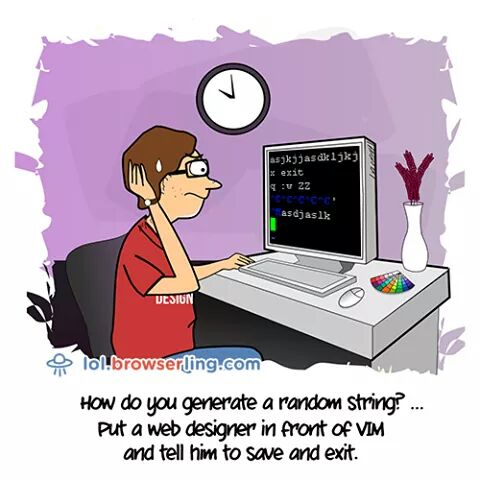

No, thank you.😆

No, thank you.😆 The real random generators!

The real random generators!

need a random number

AI says just use system time and modulus it. I'm wondering if I can get performance down lower cuz I'm doing this maybe like thousands of times a second (im too lazy to do the math rn)

found a crate called fastrand. they're all like this isn't secure for cryptography and yada yada. peak inside curious how they do it. not too sure, seems like they have a predetermined hash and they do some bitwise or something. kind of a lot to read so I don't wanna. either case seems like they're not using system time

make a test to benchmark, 10k rounds how fast is it?

430 nano seconds for system time

460 nano second for fastrand

lol

all that typing and you end up slower than system time. I'm assuming system time can be guessed as well but what's the point of fastrand if it's slower 🤔

I mean maybe on some OS systems looking up the system time might be slower? no clue

rant

rust

benchmark

random