
Comments

-

@retoor First, I checked it out, and it looks interesting, especially because so few people are willing to use LSTMs anymore with the new shiny thing on the block.

I prefer ReLU because, all things being equal, it's pretty standard.

Incidentally, there's no non-linearity happening here. There's not even any multiplication.

It's all about using noise to map one distribution to another, with the hard part simply being a permutation search for a key layer that forms an isomorphic projection between the input and output distributions.

The idea is that instead of doing a computation, we sample noise and filter it, by simple comparison, for noise that does the mapping for us. (There are also many different types of noise, each with different properties: pink noise, white noise, black noise, etc.) -
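A minimal sketch of "sample noise, filter by comparison" for a single scalar, assuming uniform noise in [-1, 1] and a fixed tolerance (both assumptions, as are the names `find_noise`, `tol`, and `max_tries`). The mapping itself uses only a subtraction and an absolute-difference comparison; a vector version would repeat this per token or per dimension.

```python
import random


def find_noise(source, target, tol=0.01, max_tries=100_000, seed=0):
    """Sample uniform noise until (source - noise) lands within tol of target.

    The mapping uses only subtraction and comparison: no multiplication,
    no non-linearity. Returns the accepted noise value, or None if no
    sample passed the filter within max_tries.
    """
    rng = random.Random(seed)
    for _ in range(max_tries):
        n = rng.uniform(-1.0, 1.0)              # candidate (white, uniform) noise
        if abs((source - n) - target) <= tol:   # filter by simple comparison
            return n
    return None


noise = find_noise(0.8, 0.3)
print(noise, 0.8 - noise)   # 0.8 - noise lands within 0.01 of 0.3
```

Random search like this is cheap per step but scales badly with dimensionality, which is why the lookup structure described later matters.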

I've also left out a TON of information, which is why this sounds like handwaving and woo.

Things like how you *also* have graph tokens and a graph token dictionary. Graph tokens are special nodes that seek through scattershot embeddings of a context window and perform specific functions: counting instances of words or tokens, checking for the presence or absence of tokens, matching, token substitution, etc.
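To make "graph tokens perform specific functions" concrete, here is a hypothetical graph-token dictionary: each entry name (`count`, `present`, `absent`, `match`, `substitute`, all invented for illustration) maps to a plain function over a tokenized context window. It covers only the function side; how graph tokens are embedded and selected is not shown.

```python
from collections.abc import Callable

# Hypothetical graph-token dictionary: name -> function over a context window.
GRAPH_TOKENS: dict[str, Callable[..., object]] = {
    # how many times a token occurs
    "count": lambda ctx, tok: ctx.count(tok),
    # presence / absence checks
    "present": lambda ctx, tok: tok in ctx,
    "absent": lambda ctx, tok: tok not in ctx,
    # does a contiguous token sequence appear anywhere in the window?
    "match": lambda ctx, seq: any(
        ctx[i:i + len(seq)] == seq for i in range(len(ctx) - len(seq) + 1)
    ),
    # replace every occurrence of one token with another
    "substitute": lambda ctx, old, new: [new if t == old else t for t in ctx],
}


def run_graph(name, ctx, *args):
    """Look up a graph token by name and apply it to the context window."""
    return GRAPH_TOKENS[name](ctx, *args)


ctx = "the cat saw the dog".split()
print(run_graph("count", ctx, "the"))               # 2
print(run_graph("substitute", ctx, "dog", "cat"))   # ['the', 'cat', 'saw', 'the', 'cat']
```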

The same encoder-decoder that pairs input tokens with output tokens does the same for sequences of graphs and subgraph nodes.

How does this interact with inputs and outputs?

Well, during training, many graph contexts are generated for each input, in parallel with training on tokens.

However, the critical bit is that during inference, the input is processed and an output is generated first, and only then are the graphs run on the token context window for the respective input (queries) and output (values). -
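The ordering described above (tokens first, graphs afterwards) can be sketched as a two-phase function. `decode` stands in for the token encoder-decoder and each entry in `graphs` is a hypothetical post-processing function of (query, value); none of these names come from the original code.

```python
def infer(query, decode, graphs):
    """Two-phase inference.

    Phase 1: the token decoder produces an output (value) from the input (query).
    Phase 2: only then are graph functions run over the query and value,
    each one free to modify the value before it is returned.
    """
    value = decode(query)
    for graph in graphs:
        value = graph(query, value)
    return value


# Hypothetical stand-ins for illustration.
def upper_decoder(query):
    return [t.upper() for t in query]


def collapse_repeats(query, value):
    """Example graph: drop a token when it repeats the one before it."""
    return [t for i, t in enumerate(value) if i == 0 or value[i - 1] != t]


print(infer(["a", "a", "b"], upper_decoder, [collapse_repeats]))  # ['A', 'B']
```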

What do the graphs really do here though?

If the same embedding technique is used to encode and decode the graphs as is used for the context tokens, then we really only need to ask: what do the graphs do internally?

First, we assume the graphs themselves are a sort of embedding based on our particular embedding technique.

Second, you'll be prone to saying, "given the current technique, you can train the system to output anything, any random string of tokens or words, based on any random input." That's correct, but it's also true for all LLMs. They're only as good as their training data specifically, and their architecture and underlying math generally. That is to say, the world is its own ground truth, and just as the axioms of a sufficiently expressive system cannot prove every truth within that system (incompleteness), there is no way to say definitively that anything a machine outputs is congruent with reality beyond what is *merely* represented by its own training data. -

Third, each graph token in the graph token dictionary performs a different function, from looking for particular word tokens (or sets of them), to constraining token order, to counting instances, and that function is divorced from its representation. A graph, being an embedding, also HAS an embedding.

What it does is add state information and generate *new* state based on the input.

Graph objectives are therefore trained, tested, and validated in parallel with the input/output pairs.

Why is this important? -

Because if you compress a lot of training data using the Ln autoencoder method, along with the relevant graphs, then the noise embedding retrieved for the most similar training data (an embedding introduced by a *new* input and its variance) modifies the mean of the graph representation, which changes which graph tokens are generated from that representation's embedding.

Think of it as dual encoder-decoder pairs, one for tokens and one for graphs, that run in parallel during training. During inference, the decoder outputs tokens from the encoder's input, and only *then* does the graph selected by the decoder network's mean output modify that output. -

...In much the same way that modern research using MRI reveals that we often formulate our responses automatically, without being aware of it, and then deliberately filter and modify them on the fly only *after* becoming aware that our brain has determined a response. There is supporting evidence for this in the motor system, where the premotor cortex is known to generate many possible responses and motions in any given situation, and uses inhibitory networks to filter and select the optimal movements for that situation. -

DevRant butchering my sentences and line breaks notwithstanding, I hope any of that is readable at all.

The final piece is aligning the functions of the generated, trained, and tested graphs with their embeddings, so that finding the best embedding for mapping an input to a generated output is equivalent to performing some function on the output that brings the final output closer to the distribution of the input. -

The bonus is that all the learning and functions happen at the symbolic level, without hard-coding any rules, which makes such a network highly modifiable and inspectable.

And for any given artificial rule, it becomes trivial to put one together and then find a matching embedding that works for the training/test/validation data.

If any of this doesn't make sense, I've likely butchered it further and left out pieces while trying to splice around devRant's post and comment character limits.

Probably the first *solid* test of the system would be a character-level assessment (ASCII characters as tokens) to teach it to identify word spacing.
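One way to set up that first character-level test, assuming ASCII code points as tokens and a per-character label marking whether a space should follow it. The labeling scheme and the name `make_spacing_pair` are assumptions for illustration, not the author's data format.

```python
def make_spacing_pair(sentence):
    """Build (input tokens, target labels) for a word-spacing task.

    Input: ASCII code points of the sentence with spaces removed.
    Target: one label per input character, 1 if a space followed it, else 0.
    """
    if not sentence.isascii():
        raise ValueError("expected ASCII text")
    tokens, labels = [], []
    for ch in sentence:
        if ch == " ":
            if labels:              # mark the previous character as a word end
                labels[-1] = 1
        else:
            tokens.append(ord(ch))
            labels.append(0)
    return tokens, labels


tokens, labels = make_spacing_pair("hi there")
print("".join(map(chr, tokens)), labels)  # hithere [0, 1, 0, 0, 0, 0, 0]
```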

Some graph tokens I considered included ones that would produce continuations past a default length, ones that would modify the target distribution, and ones that would embed 'ephemeral tokens' (which don't show up as printable characters or visible tokens but modify the context and the graphs' function in some way, act as state placeholders, etc.). -

Divorcing representation from function was probably the major thing.

Ironically, it's about as fast as most of the 8B models I've run on my system, producing maybe 20 tokens per minute.

Right now, only the token encoder-decoder is built and functional, but that was proof enough that the entire premise has merit.

After the character level, I'll train it to do word detection (spaces, character chunking).

That'll be proof that the graph method is also viable.

From there, component word extraction (noun, verb, adjective, subject, etc.).

A lot of this was initially inspired by a brief article I read on Gaussian splatting, and by research into the subgraph problem that LLMs deal with: they use subgraphs in training data to pattern match (for some definition of pattern matching) in order to solve problems in a way that, once revealed, is counter-intuitive and not what the generated output itself may at first even claim. -

There are a few bits and bobs I left out, like how Levenshtein distance is used to mutate the transition matrix, or how parts of it (if you squint real hard) are PageRank (or PageRank-like) shoehorned into a completely non-PageRank-shaped problem.
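Neither piece was spelled out in the post, so this sketch only shows the two named building blocks: a standard dynamic-programming Levenshtein distance and PageRank by power iteration over a row-stochastic transition matrix. `similarity_matrix`, which turns edit distances into transition weights, is a hypothetical example of how the two could be wired together, not the author's method.

```python
def levenshtein(a, b):
    """Edit distance: minimum insertions, deletions, and substitutions (each cost 1)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                 # delete ca
                           cur[j - 1] + 1,              # insert cb
                           prev[j - 1] + (ca != cb)))   # substitute (free if equal)
        prev = cur
    return prev[-1]


def similarity_matrix(tokens):
    """Hypothetical: row-normalized transition weights from edit-distance similarity."""
    W = [[1.0 / (1 + levenshtein(a, b)) for b in tokens] for a in tokens]
    return [[w / sum(row) for w in row] for row in W]


def pagerank(M, damping=0.85, iters=100):
    """Power iteration on a row-stochastic matrix M, where M[i][j] = P(i -> j)."""
    n = len(M)
    r = [1.0 / n] * n
    for _ in range(iters):
        r = [(1 - damping) / n + damping * sum(r[i] * M[i][j] for i in range(n))
             for j in range(n)]
    return r


print(levenshtein("kitten", "sitting"))   # 3
print(pagerank(similarity_matrix(["cat", "cart", "dog"])))
```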

-

Also, this is probably the least shit-posty post I've made in a while. Thanks for being the first to respond!

I've got to go to bed before work, so if I don't respond to any comments right away, I will when I'm back up. -

@retoor thanks for that.

I think I read an article a few years back that explained the exact same principle with the same style of experiment: identifying boolean values.

Backpropagation is still a beautiful thing.

I'll make illustrations with source code before long (with way less over-explanation) once the graph portion of the code comes along.

But, for example, the entire process could be inverted, with the context window composed of graph tokens and the prior graph layer composed of token dictionary embeddings, or at least that in effect, by finding token dictionary embeddings, used as a noise layer, that map one graph sequence to another. -

The output of the sequence might, of course, look like the stream-of-subconscious babble a guy might produce while flying high on DMT and talking to machine elves, or some other variety of hallucination: the words produced would look random and have no correlation to their real-world meaning. They'd just be a higher-dimensional vector representing the mapping, using words (and their random embeddings) instead of raw numbers.

But hypothetically, these random-looking phrases would be functions that compress the graph sequences in question, wherever we find an autoencoder input whose output is shorter.

Likewise, hidden dimensions of a graph sequence could be blown up by finding these 'random word phrases' (representing random vectors) that map shorter graph sequences to longer ones.

God damn, it really does come off as mere babble. -

@retoor

I'm not a math wizard like Mr. @Wisecrack, but I can try.

ReLU is basically a ramp function clamped at 0, max(0, x), while sigmoid is built on an exponential, 1 / (1 + e^-x).

Deep NNs use ReLU mainly because it's significantly faster to compute (no exponentials), significantly faster to backpropagate (just an if), and produces less gradient saturation than sigmoid (as in, more places in the function where the derivative is far enough from 0), which speeds up learning. -
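A small Python illustration of that comparison: ReLU's gradient is a single branch and stays at 1 for every positive input, while the sigmoid's gradient s(1 - s) never exceeds 0.25 and shrinks toward 0 as |x| grows, which is the saturation being described. The function names are illustrative.

```python
import math


def relu(x):
    return max(0.0, x)


def relu_grad(x):
    return 1.0 if x > 0 else 0.0          # "just an if"


def sigmoid(x):
    # Numerically stable form: avoids math.exp overflow for large |x|.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)                   # peaks at 0.25 when x == 0


for x in (0.0, 2.0, 10.0):
    print(x, relu_grad(x), sigmoid_grad(x))
```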

figoore: the comment butchering made the best cliffhanger there


> why is that important?

And I can confirm… f*ck it… it worked on me 😂

Pretty interesting writing, love it! @Wisecrack -

figoore: @retoor can’t agree more on the jail conspiracy theory 😂


Thank god @Wisecrack is doing maths and not meths 😂 -

@CoreFusionX "more places in the function where the derivative is far enough from 0"

Isn't it ReLU that also produces derivatives close to the mean, or am I confusing it with another non-linearity? -

@figoore I never said I'm not on Ritalin.

But for the record I'm not.

I'm glad you enjoyed the post, even though there are no nice, shiny graphics to explain what the fuck I'm even talking about. -

@retoor like a generator-discriminator pair!

That's fucking cool.

"beat the machine" as it were.

I like it. I like it a lot.

Same on the sleepless nights. Lying down, staring up at the white-painted ceiling in the dark, thoughts going a million miles an hour, while the streetlights filter in through my blinds.

Or pacing on my porch, smoking cigarette after cigarette, beneath the moonlight while turning new ideas over in my head, what will work, what won't work, what might work, what offers new avenues to attack the problem, new possibilities.

Nothing quite like it.

And then, the aha moment, like Athena springing from the head of Zeus, fully formed, and racing off to code it all. -

figoore: @Wisecrack those nights are the best parts of being a programmer


I really like this wording, such a nice devRant gem -

@retoor Nothing like smoking after four hours of going without, the rush, eyes half-lidded, as everything comes into sharp focus.

-

@retoor some dude brought in a bad-dragon sized vape didn't he?

I bet he did.

And they were like "we draw the line at smoking pole in the office!" -

@retoor company policy 178: "you will NOT convert the company's open floor plan into a hookah shop!"

Related Rants

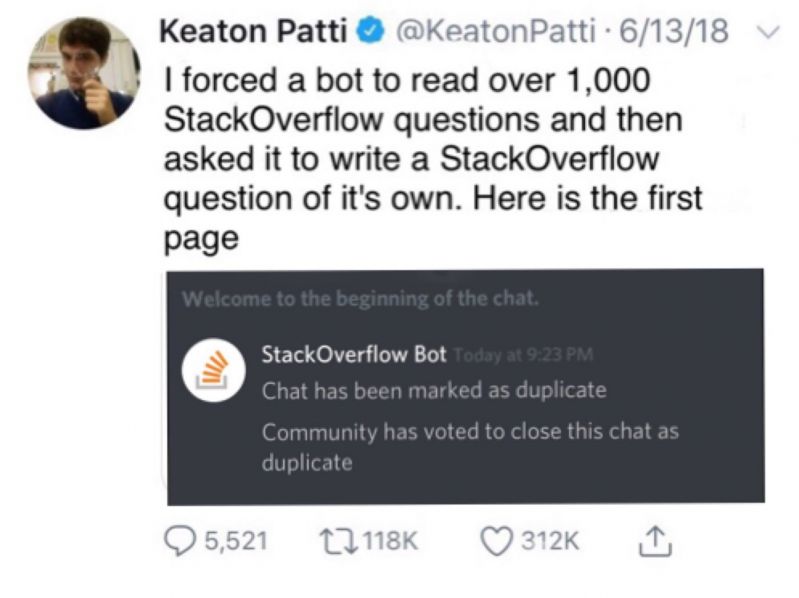

Machine Learning messed up!

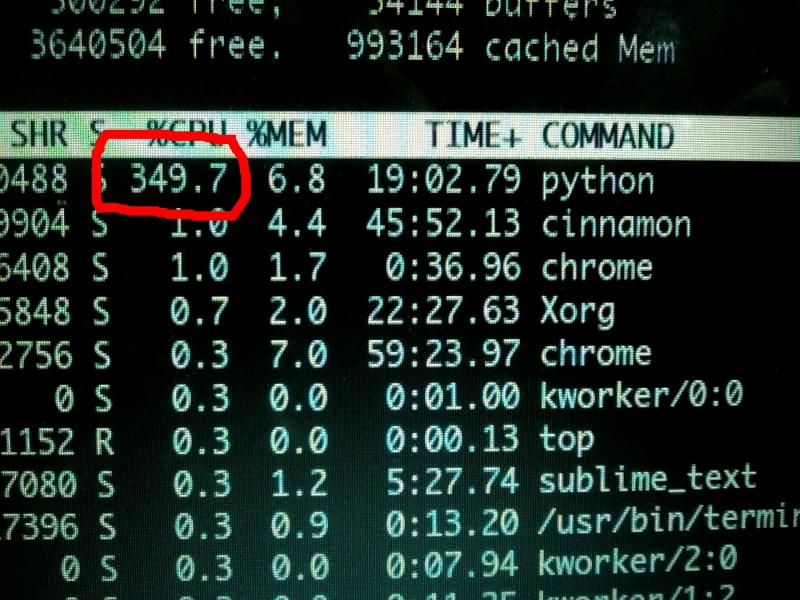

Machine Learning messed up! When your CPU is motivated and gives more than his 100%

When your CPU is motivated and gives more than his 100% What is machine learning?

What is machine learning?

Here's some research into a new LLM architecture I recently built and have had actual success with.

The idea is simple: you do the standard thing of generating random vectors for your dictionary of tokens; we'll call these numbers your 'weights'. Then, for whatever sentence you want to use as input, you generate a context embedding by looking up those tokens and putting them into a list.

Next, you do the same for the output you want to map to; let's call it the decoder embedding.
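A minimal sketch of these first steps, assuming one scalar weight per token drawn uniformly from [0, 1) (matching the "uniform sample from 0 to 1" described for the keys; a vector-per-token version would store a list per token instead) and whitespace tokenization. `build_weights` and `embed` are hypothetical names.

```python
import random


def build_weights(vocab, seed=0):
    """Assign each token in the dictionary a random weight in [0, 1)."""
    rng = random.Random(seed)
    return {tok: rng.random() for tok in vocab}


def embed(sentence, weights):
    """Context (or decoder) embedding: the weights of the sentence's tokens, in order."""
    return [weights[tok] for tok in sentence.split()]


weights = build_weights(["the", "cat", "sat", "on", "mat"])
context = embed("the cat sat", weights)   # input side
decoder = embed("on the mat", weights)    # output we want to map to
print(context, decoder)
```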

You then loop and generate a 'noise embedding': for each vector or individual token in the context embedding, you subtract that token's noise value from that token's embedding value (its specific weight).

You find the weight index in the weight dictionary (one entry per word or token in your token dictionary) that's closest to this embedding. You use a version of cuckoo hashing where similar values are stored near each other, and the canonical weight values are actually the keys of the key:value pairs in your token dictionary. When doing this, you align all the random-numbered keys in the dictionary (each a uniform sample from 0 to 1) and look at the Hamming distance between the context embedding + noise embedding (called the encoder embedding) and the canonical keys, with each digit from left to right penalized by some factor f (because digits further left carry larger magnitudes). You then penalize or reward based on the numeric closeness of each individual digit of the encoder embedding to the digit at the same index of any given weight i.
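The digit-wise comparison can be sketched as follows. The digit count `n`, the per-position weight f**-k, and simply adding the mismatch (Hamming) term to the numeric-closeness term are all assumptions; this version also uses a linear scan over the keys rather than the cuckoo-hashing variant mentioned above. `digits`, `digit_distance`, and `nearest_key` are hypothetical names.

```python
def digits(x, n=6):
    """First n decimal digits of x in [0, 1), e.g. 0.49 -> [4, 9, 0, 0, 0, 0]."""
    scaled = min(round(x * 10**n), 10**n - 1)   # clamp so values near 1 keep n digits
    return [int(d) for d in str(scaled).zfill(n)]


def digit_distance(enc, key, f=10.0, n=6):
    """Hamming-style comparison, digit by digit.

    Position k (left to right) is weighted by f**-k, so leading digits dominate.
    Each position adds 1 for a mismatch plus |a - b| / 9 for numeric closeness.
    """
    total = 0.0
    for k, (a, b) in enumerate(zip(digits(enc, n), digits(key, n))):
        total += f ** -k * ((a != b) + abs(a - b) / 9)
    return total


def nearest_key(enc, keys, f=10.0):
    """Canonical key with the smallest digit distance to the encoder embedding."""
    return min(keys, key=lambda k: digit_distance(enc, k, f))


keys = [0.1, 0.49, 0.9]
print(nearest_key(0.5, keys))    # 0.49
print(nearest_key(0.88, keys))   # 0.9
```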

You then substitute the canonical weight in place of this encoder embedding, look up that weight's index (in my earliest version), use that index to look up the word|token in the token dictionary, and compare it to the word at the current index of the training output you're matching against.
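Putting the lookup and the match test together gives a per-token search loop. This sketch assumes scalar weights, uniform noise in [-1, 1], and plain absolute difference as the distance (a stand-in for the digit-weighted comparison); `nearest_token` and `search_noise` are hypothetical names. Note that `zip` pairs exactly one input token with one output token, which is the one-to-one limitation raised next.

```python
import random


def nearest_token(value, weights):
    """Token whose canonical weight is numerically closest (linear-scan lookup)."""
    return min(weights, key=lambda tok: abs(weights[tok] - value))


def search_noise(context, target, weights, tries=10_000, seed=0):
    """For each position, sample noise until (weight - noise) decodes to the target token.

    Returns one noise value per position, or None where no match was found.
    """
    rng = random.Random(seed)
    found = []
    for src, want in zip(context, target):
        hit = None
        for _ in range(tries):
            n = rng.uniform(-1.0, 1.0)
            if nearest_token(weights[src] - n, weights) == want:  # compare to training output
                hit = n
                break
        found.append(hit)
    return found


weights = {"a": 0.1, "b": 0.5, "c": 0.9}
noise = search_noise(["a", "b"], ["c", "a"], weights)
print(noise)   # one accepted noise value per input/output token pair
```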

Of course, switching to the hash version makes the lookup significantly faster, but I digress.

That introduces a problem.

If each input token matches one output token, how do we get variable-length outputs? How do we do n-to-m mappings of input to output?

One of the things I explored was using pseudo-Markovian processes, where there's one node, A, with two links to itself, B and C.

B is a transition matrix, and A holds its own state. At any given time step, A may use either the default transition matrix (training data encoder embeddings) with B, or generate new ones using C and a context window of A's prior states.

C can be used to modify A, or it can be used as a noise embedding to modify B.

A can take on the state of both A and C, or A and B. In fact, we do both and measure which is closest to the correct output during training.
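A hypothetical reading of the A/B/C setup, with A's state as a probability vector: the B link is an ordinary Markov step through the transition matrix, the C link blends A's state with the mean of a short window of its prior states, and training keeps whichever candidate is closer to the target by sum of absolute differences. How C is actually computed in the author's version isn't specified, so `step_via_c` (like every other name here) is an assumption.

```python
from collections import deque


def step_via_b(a, B):
    """B link: one Markov step, next[j] = sum_i a[i] * B[i][j]."""
    return [sum(a[i] * B[i][j] for i in range(len(a))) for j in range(len(B[0]))]


def step_via_c(a, history):
    """Hypothetical C link: blend A's state with the mean of its recent prior states."""
    mean = [sum(col) / len(history) for col in zip(*history)]
    return [(x + m) / 2 for x, m in zip(a, mean)]


def l1(u, v):
    return sum(abs(x - y) for x, y in zip(u, v))


def train_step(a, B, history, target):
    """Compute both candidate next states and keep the one closer to the target."""
    cand_b = step_via_b(a, B)
    cand_c = step_via_c(a, history) if history else cand_b
    state, via = min((cand_b, "B"), (cand_c, "C"), key=lambda c: l1(c[0], target))
    history.append(a)              # A's current state joins the context window
    return state, via


B = [[0.0, 1.0], [1.0, 0.0]]                 # two-state flip-flop
history = deque([[0.0, 1.0]], maxlen=4)
print(train_step([1.0, 0.0], B, history, target=[0.0, 1.0]))  # ([0.0, 1.0], 'B')
```

As the next line of the post points out, neither candidate changes the output length; this only chooses between two ways of producing the next state.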

What this *doesn't* do is give us variable length encodings or decodings.

So I thought a while and said, if we're using noise embeddings, why can't we use multiple?

And if we're using multiple, what if we used a middle layer, let's call it the 'key', took its mean over *many* training examples, and used it to map from the variance of an input (query) to the variance and mean of a training or inference output (value)?
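One possible reading of the 'key' layer, offered only as an assumption: average the noise embeddings found for many training pairs per dimension, then map a query by standardizing it with its own mean and spread, rescaling it to a value mean and standard deviation, and subtracting the key the same way noise was subtracted earlier. `fit_key`, `map_query`, and the moment-matching step are all hypothetical, and unlike the author's lookup this step does use multiplication.

```python
from statistics import fmean, pstdev


def fit_key(noise_embeddings):
    """The 'key': per-dimension mean of noise embeddings found for many training pairs."""
    return [fmean(col) for col in zip(*noise_embeddings)]


def map_query(query, key, value_mean, value_std):
    """Hypothetical moment matching from a query's spread to a value's mean and spread."""
    mu = fmean(query)
    sd = pstdev(query) or 1.0                  # guard against a constant query
    z = [(q - mu) / sd for q in query]         # standardize by the query's own spread
    return [value_mean + value_std * zi - k    # rescale, then subtract the key as noise
            for zi, k in zip(z, key)]


key = fit_key([[1.0, 2.0], [3.0, 4.0]])                  # [2.0, 3.0]
print(map_query([1.0, 3.0], [0.0, 0.0], 10.0, 2.0))     # [8.0, 12.0]
```

This says nothing yet about when to stop generating, which is the open question the post raises next.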

But how does that tell us when to stop or continue generating tokens for the output?

Posted on Pastebin if you want to read the whole thing (devRant wouldn't post it for some reason).

In any case, I wasn't sure if I was dreaming or off in left field, so I went and built the damn thing, the autoencoder part. I wasn't even sure I could, but I did, and it just works. I'm still scratching my head.

https://pastebin.com/xAHRhmfH

random

llm

machine learning