Comments

I got you. Congrats on proper ssh setup!

For basic protection make sure you have fail2ban installed and configured to screen any service that accepts a login on your machine.
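To give a rough idea of what fail2ban automates, here is a minimal Python sketch that counts failed SSH logins per source IP and flags the ones over a threshold. The log line format, the `find_offenders` name and the threshold of 5 are assumptions for illustration; fail2ban itself works with configurable filters and time windows and applies the ban through the firewall.

```python
import re
from collections import Counter

# Matches typical OpenSSH failure lines such as
# "Failed password for invalid user admin from 203.0.113.7 port 4242 ssh2".
# The exact format is an assumption; check your own auth log.
FAILED_LOGIN = re.compile(
    r"Failed password for (?:invalid user )?\S+ from (\d{1,3}(?:\.\d{1,3}){3})"
)


def find_offenders(log_lines, max_retries=5):
    """Return the set of IPs with at least max_retries failed logins."""
    failures = Counter()
    for line in log_lines:
        match = FAILED_LOGIN.search(line)
        if match:
            failures[match.group(1)] += 1
    return {ip for ip, count in failures.items() if count >= max_retries}
```

You could feed it the lines of your auth log (for example `open("/var/log/auth.log")` on Debian-style systems, which is also an assumption about your setup) to see which addresses would be candidates for a ban.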

You should have a firewall. Use ufw if you’re on Debian-based hosts, and iptables directly or firewall-cmd on Red Hat/CentOS. It should be service based if possible, and default to dropping packets that don’t match your allowed services.
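The "default to dropping" idea can be modelled in a few lines of Python. This is only a conceptual sketch, not a firewall: the `ALLOWED_SERVICES` set and the `decide` function are hypothetical names, and the three listed services are just an example of what a small web host might expose.

```python
# Services this hypothetical host is meant to expose: (protocol, port).
ALLOWED_SERVICES = {
    ("tcp", 22),   # ssh
    ("tcp", 80),   # http
    ("tcp", 443),  # https
}


def decide(protocol, port, allowed=ALLOWED_SERVICES):
    """Return "ACCEPT" for explicitly allowed services, "DROP" for everything else."""
    return "ACCEPT" if (protocol.lower(), port) in allowed else "DROP"
```

The point of the design is that nothing is reachable unless you listed it: a database you forgot about on 3306/tcp gets `"DROP"` without needing a rule of its own.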

Scan your machine with the networking tool “nmap”. It’s the first thing an attacker will do to get a picture of your security posture. The following command gives a good general picture:

“nmap -A -T4 [your server’s public IPv4 address]”. You want that sucker to return as little information as possible. Often this means disabling default webserver pages in your Nginx/Apache config and turning off informational headers in any running services. Force TLS 1.2 or newer and disallow weak cipher suites; a quick google will list a sane default webserver config.
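If you want a quick sanity check between nmap runs, a very crude TCP connect check can be sketched in Python with the standard `socket` module. It is nowhere near what nmap does (no service or OS detection, no UDP); the `open_tcp_ports` name and the timeout are assumptions for illustration.

```python
import socket


def open_tcp_ports(host, ports, timeout=0.5):
    """Return the ports on host that accept a TCP connection."""
    found = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 on success instead of raising.
            if sock.connect_ex((host, port)) == 0:
                found.append(port)
    return found
```

Something like `open_tcp_ports("your.server.ip", [22, 80, 443, 3306, 8080])`, run from outside the server against a machine you own, should only list the ports you meant to expose.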

If you’re interested in learning server management / system administration / DevOps generally then your new Bible is this book: https://amazon.com/UNIX-Linux-Syste..._

mpie 169 7y

In addition to what Diactoros said.

Block your ports and use SSH tunnels to reach them when you need access to specific services, or to any Docker containers that are running on a specific port without a reverse proxy.
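As a sketch of what such a tunnel looks like, the Python helper below builds the command line for an SSH local port forward. The `ssh_tunnel_command` name, the example user and host, and the port are hypothetical; only the `ssh -N -L` flags come from OpenSSH itself.

```python
def ssh_tunnel_command(ssh_target, remote_port, local_port=None, remote_host="127.0.0.1"):
    """Build the argv for an SSH local port forward (ssh -N -L ...)."""
    if local_port is None:
        local_port = remote_port
    # Bind the local end to loopback only, so the tunnel is not shared on your LAN.
    forward = f"127.0.0.1:{local_port}:{remote_host}:{remote_port}"
    # -N: do not run a remote command, only forward; -L: local port forward.
    return ["ssh", "-N", "-L", forward, ssh_target]
```

For example, `subprocess.run(ssh_tunnel_command("deploy@example.com", 5432))` would make a service that listens only on the server's localhost port 5432 reachable at `127.0.0.1:5432` on your own machine, while the port stays closed to the internet.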

ltlian 2111 7y

Props for considering security in the first place. That's already better than a lot of sites and systems out there.

I'm not a security expert myself, but having spoken to a few pen testers the thing that always comes back is layers of security. You can't make it unhackable, but you can make it tedious and time consuming.

This site offers a series of hacking challenges that I found fairly educational. There's probably an answer set somewhere if you want a quick overview of the vulnerabilities demonstrated.

http://overthewire.org/wargames/

Condor 31548 7y

I highly recommend running a VPN server on servers you maintain and putting sensitive services behind that (yes @mpie, hiding stuff behind a VPN 😉 the same idea that you're using your SSH tunnels for). Using that VPN you get a separate server-side virtual network to play with and configure, of course with all the related advantages firewall-wise. On my mail servers those hidden services would be e.g. sshd, the webserver for Roundcube, Dovecot and such. Only 25/tcp and 1194/udp are really publicly visible.

As for the crawlers.. welcome to servers I guess. The main reason why I hid the sshd away (despite also requiring keys, so those attacks won't be able to crack the server anytime soon) is because it clutters the logs. It's a rather cheap (maintenance-wise) and quick way to get them all out. Quite elegant too IMO.

For anything public though.. well first off make sure that those crawlers don't hit a shitty phpmyadmin config or wp-admin and such in the first place. But most of the time you can rest assured.. just like the SSH crawlers they are dumb attacks. They aren't targeted at all. Generally speaking I don't really worry about them too much. In those cases blocking them using things like CSF (@linuxxx mentioned this earlier, haven't tried it though) or fail2ban should work.
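To see how much of that noise you are actually getting, a small Python sketch like the one below can tally probe requests per client IP from a webserver access log. It assumes nginx's default "combined" log format; the marker list and the `count_probes` name are illustrative, not exhaustive or standard.

```python
import re
from collections import Counter

# Path fragments that automated scanners commonly request (illustrative, not exhaustive).
PROBE_MARKERS = ("phpmyadmin", "wp-admin", "wp-login.php", ".env")

# Client IP and request path from a line in nginx's default "combined" log format.
LOG_LINE = re.compile(r'^(\S+) \S+ \S+ \[[^\]]+\] "\S+ (\S+)')


def count_probes(log_lines, markers=PROBE_MARKERS):
    """Count requests per client IP whose path contains a known probe marker."""
    hits = Counter()
    for line in log_lines:
        match = LOG_LINE.match(line)
        if not match:
            continue
        ip, path = match.groups()
        if any(marker in path.lower() for marker in markers):
            hits[ip] += 1
    return hits
```

Calling `count_probes(open("/var/log/nginx/access.log")).most_common(10)` (the path is an assumption about your setup) gives a quick top ten of the noisiest scanners, which is roughly the input that tools like fail2ban act on.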

Be sure to also look at IDS/IPS such as Snort, and rootkit detection software such as rkhunter. Also consider antivirus software such as ClamAV if you can get away with it on the server resource-wise and if it's justifiable in that particular scenario. If the server interfaces with and serves files to general Windows users, it definitely would be a good idea.

Finally as @ltlian mentioned, security is indeed done in layers. So is pentesting by the way. For example webserver exploits that don't give RCE < those that do give RCE < shell access < root shell.

Related Rants

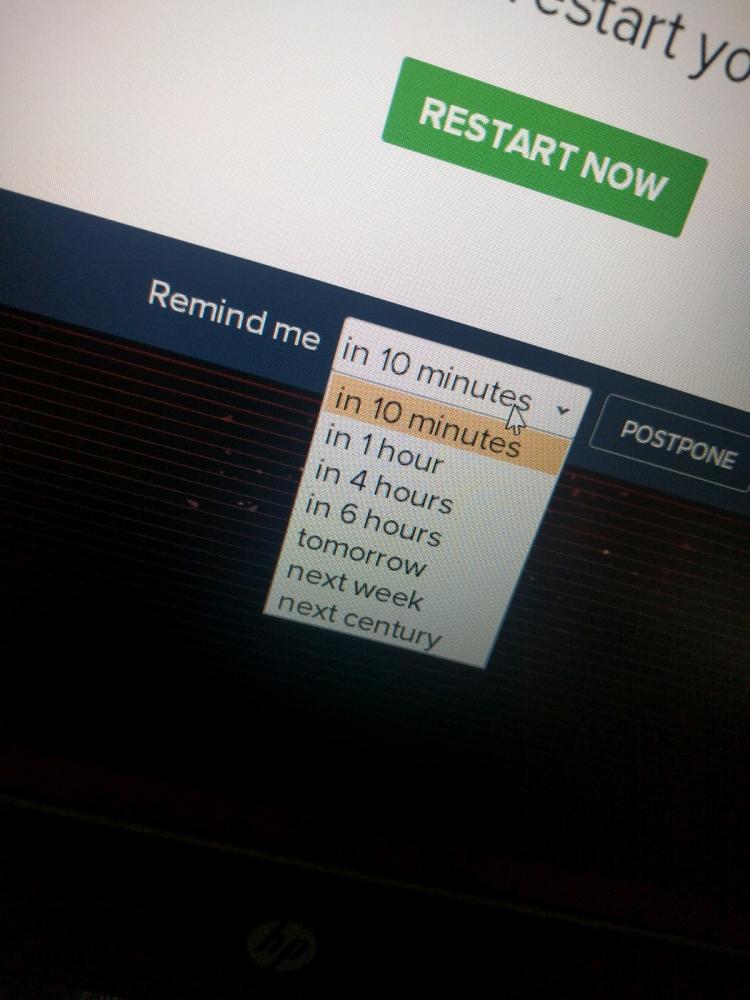

Did you say security?

Did you say security? 10 points for next century option.

10 points for next century option.

Hey there!

So during my internship I learned a lot about Linux, Docker and servers and I recently switched from shared hosting to my own VPS. On this VPS I currently have one nginx server running that serves a static ReactJs application. This is temporary: I SFTP-ed the build files to the server and added a config file for SSL, ciphers and dhparams. I plan to change it later to a Next.js application with a CI/CD pipeline etc. I also added a 'runuser' that owns the /srv/web directory in which the webserver files are located. SSH has passwords disabled and my private keys have passphrases.

Now that it's been running for a few days I noticed a lot of requests from botnets that tried to access phpmyadmin and admin panels on my server, which gave me quite a scare. Luckily my website does not have a backend and I would never expose phpmyadmin like that if I did have it.

Now my question is:

Do you guys know any good articles or have tips and tricks for securing my server and future projects? Are there any good practices that I should absolutely read and follow? (Like not exposing server details etc., php version, rate limiting). I really want to move forward with my quest for knowledge and feel like I should have a good basis when it comes to managing a server, especially with the current privacy laws in place.

Thanks in advance for enduring my rant and infodump 😅

question

security

server

docker

nginx