
Comments


elazar (1016, 9y): @norman70688 The editor should not care about the size of the file, since it only shows a tiny fraction of it at a time. What matters are the operations performed on the file, and these should either be local or run in the background if requested.
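To illustrate running an expensive operation off the UI thread, here is a minimal Python sketch that counts the lines of a large file in a background worker, reading it in fixed-size chunks so memory use stays bounded. The names `count_lines` and `count_lines_in_background` and the 1 MiB chunk size are hypothetical choices for illustration, not any particular editor's API.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from typing import BinaryIO

CHUNK_SIZE = 1 << 20  # 1 MiB per read; an arbitrary illustrative value


def count_lines(stream: BinaryIO, chunk_size: int = CHUNK_SIZE) -> int:
    """Count newline bytes by streaming; at most one chunk is in memory at a time."""
    total = 0
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:  # b"" signals end of file
            break
        total += chunk.count(b"\n")
    return total


def count_lines_in_background(
    executor: ThreadPoolExecutor, stream: BinaryIO, chunk_size: int = CHUNK_SIZE
) -> "Future[int]":
    """Run the scan on a worker thread so the caller (e.g. a UI loop) is not blocked."""
    return executor.submit(count_lines, stream, chunk_size)
```

Rather than blocking on `future.result()`, a UI would typically poll `future.done()` or register `future.add_done_callback(...)` and keep handling input in the meantime.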


arekxv (1028, 9y): Sublime Text is bad with large files because it loads the whole file into memory, which makes you wait even when you just want to perform a single operation. A better solution would be for them to implement Large File Support that only loads the part of the file the user scrolls to. The only drawback is that scrolling and search would be a little slower, but at least you won't have to wait 5 minutes to open a 2-3 GB file.
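The Large File Support idea above can be sketched as a view that reads fixed-size chunks only when a region is requested, with a small least-recently-used cache so scrolling back and forth stays cheap. `LazyFileView`, the 64 KiB chunk size and the 16-chunk cache limit are hypothetical choices for illustration; this is not how Sublime Text or any other named editor actually works.

```python
from collections import OrderedDict
from typing import BinaryIO


class LazyFileView:
    """Hypothetical helper: load chunks of a file on demand, keeping only a few in memory.

    A viewer would call read(offset, length) as the user scrolls, instead of
    loading the whole file up front.
    """

    def __init__(self, stream: BinaryIO, chunk_size: int = 64 * 1024, max_chunks: int = 16):
        self.stream = stream
        self.chunk_size = chunk_size
        self.max_chunks = max_chunks
        self._cache: "OrderedDict[int, bytes]" = OrderedDict()
        self.loads = 0  # number of chunks actually read from the stream

    def _chunk(self, index: int) -> bytes:
        if index in self._cache:
            self._cache.move_to_end(index)  # mark as most recently used
            return self._cache[index]
        self.stream.seek(index * self.chunk_size)
        data = self.stream.read(self.chunk_size)
        self.loads += 1
        self._cache[index] = data
        if len(self._cache) > self.max_chunks:
            self._cache.popitem(last=False)  # evict the least recently used chunk
        return data

    def read(self, offset: int, length: int) -> bytes:
        """Return up to `length` bytes starting at `offset`, touching only the chunks needed."""
        out = bytearray()
        pos, end = offset, offset + length
        while pos < end:
            index, start = divmod(pos, self.chunk_size)
            chunk = self._chunk(index)
            if start >= len(chunk):  # reached end of file
                break
            piece = chunk[start : start + (end - pos)]
            out += piece
            pos += len(piece)
        return bytes(out)
```

Opening the file then costs nothing up front; each scroll step reads at most a couple of chunks, which is the trade-off described above.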


For large files you should use Sublime Text or Vim; they both handle such files very well.


Try Sublime Text, Notepad++ or UltraEdit. All of them should be able to handle multi-gigabyte text files.


elazar (1016, 9y): @arekxv Scrolling need not be any slower, since you can always load just enough to keep it fast. Searching a huge file is slower, but that is inherent in any search over raw data, which must be processed at least once.
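The point that a search must touch every byte at least once, but no more than that, can be shown with a streaming search: the file is read chunk by chunk, and only `len(needle) - 1` bytes are carried between chunks so that a match spanning a chunk boundary is not missed. `find_all` and its default chunk size are illustrative assumptions; the sketch uses plain `bytes.find` rather than any editor's actual search engine.

```python
from typing import BinaryIO, Iterator


def find_all(stream: BinaryIO, needle: bytes, chunk_size: int = 1 << 20) -> Iterator[int]:
    """Yield the byte offset of every (possibly overlapping) occurrence of `needle`.

    The stream is read once. The last len(needle) - 1 bytes of each window are
    carried over, so a match straddling two chunks is found exactly once.
    """
    if not needle:
        raise ValueError("needle must be non-empty")
    overlap = len(needle) - 1
    carry = b""  # tail of the previous window that could start a match
    base = 0     # file offset of carry[0]
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:
            break
        window = carry + chunk
        pos = window.find(needle)
        while pos != -1:
            yield base + pos
            pos = window.find(needle, pos + 1)
        # Keep only bytes too short to hold a full match on their own.
        keep = min(overlap, len(window))
        carry = window[len(window) - keep:]
        base += len(window) - keep
```

Because the carried tail is shorter than the needle, no match can lie entirely inside it, which is why nothing is reported twice.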


Agree with @arcadesdude: UltraEdit works nicely with huge files, especially if you disable some basics like temp files and line numbers before opening them (two of the eight suggested tips).


A colleague got stumped by >5 GB log files, then found JOE, but later replaced it with something more user-friendly whose name I forget.

Related Rants

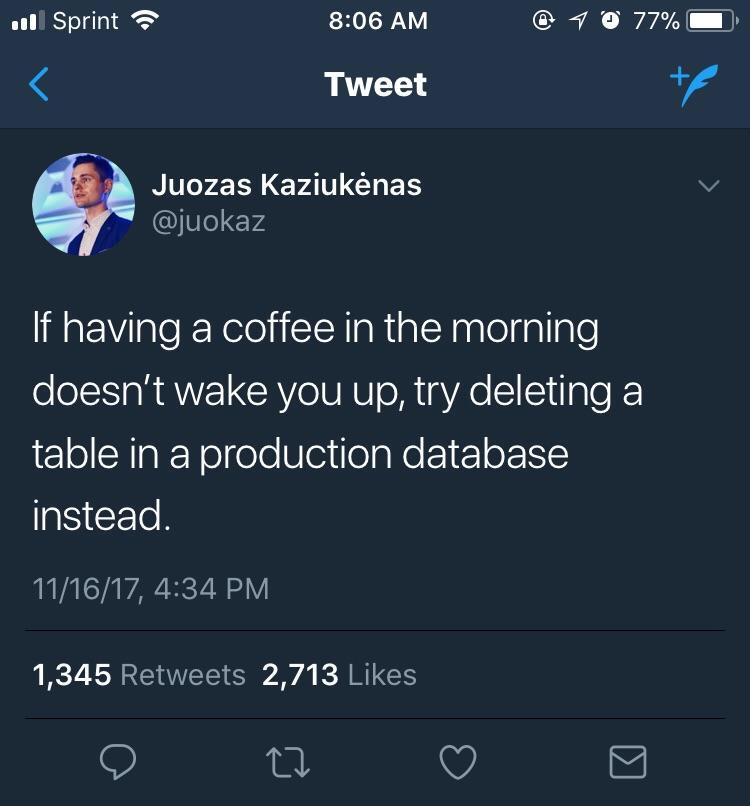

Oh sh*t

Oh sh*t Hacking yourself.

Hacking yourself. When you thought you oversimplified the user interface but it's still too confusing for the user...

When you thought you oversimplified the user interface but it's still too confusing for the user...

When I opened a 2-gigabyte wordlist text file and forgot that my default text editor is GUI-based.


Tags: gui, database, frustration