Comments

-

wtf? You're doing TDD wrong then. How can you have an implementation w/o a working (correct) test? That's not TDD, that's just testing your code.

-

Since the test code is code as well, there is no reason why it should have any fewer bugs than the code that it is supposed to test.

After all, if people were able to write bug-free code, they wouldn't waste that skill on test code; they would just write the actual code without bugs right away.

So it's more of a double-check kind of thing. However, it becomes partially pointless if the person writing the tests is the same one who writes the actual code, because you'll make the same errors, in particular misunderstanding requirements, in both production and test code.

That's why, ideally, code and tests are written independently by different people. -
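To make the shared-misunderstanding point concrete, here is a minimal Python sketch. The requirement ("orders of 50 or more ship for free"), the function `qualifies_for_free_shipping`, and both test functions are hypothetical, invented for illustration. The author misreads "50 or more" as "more than 50", so their code and their own test agree with each other and the test passes; a test written independently from the requirement text catches the error.

```python
# Hypothetical requirement: "Orders of 50 or more ship for free."
# The author misreads it as "more than 50" and bakes that into the code...
def qualifies_for_free_shipping(order_total: float) -> bool:
    return order_total > 50  # bug: the requirement says >= 50


# ...and into their own test, so code and test share the same mistake.
def author_test() -> bool:
    return (
        qualifies_for_free_shipping(60) is True
        and qualifies_for_free_shipping(50) is False  # encodes the misreading
    )


# A test written by someone else, straight from the requirement text.
def independent_test() -> bool:
    return qualifies_for_free_shipping(50) is True


if __name__ == "__main__":
    print("author's test passes:", author_test())
    print("independent test passes:", independent_test())
```

The author's test passes and the independent one fails, which is exactly the gap that independence is meant to close.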

@Fast-Nop Writing tests first aids design decisions. I think it's imperative that the devs write the tests, and that they're written first, so any non-obvious requirements, gaps in understanding, or bad design get discovered before the code is written.

I don't think you'd get the same thing with someone else writing the tests. -

@netikras You might be right, not sure what the OP means, but there's a scenario where tests pass, but for all the wrong reasons.

For example: articleTest asserts that "the article page should have a menu with 4 links".

You didn't bother testing anything else, to keep the test slim.

But then you notice that this test can't even render an article page: it causes a server error, a 500 error page is rendered instead, and that page also has a menu with 4 links. That's why the test passes. -

@lungdart Then you make the same mistakes in your design decisions that you already made in the tests.

This is why certified software, from certain certification levels upwards, has a mandatory process requirement that this be done independently. -

My previous post had a lame example.

In practice, most of my end-to-end tests along those lines (testing the entire rendering pipeline from input to HTML output) include the same boilerplate asserts checking that the response status is OK and that the page type is the expected one, before testing any details like the menu… but I hope you get the gist.

When doing TDD, I might try to simplify the asserts and not test for anything too implementation-specific that'll change during development (like class names), and that can lead to mistakes. -
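Here is a minimal Python sketch of the false positive described above and of the guard asserts that catch it. Everything in it is hypothetical: `render`, `Response`, and the simulated server error stand in for a real rendering pipeline. The weak test only counts menu links, so the 500 page satisfies it; the guarded test checks status and page type first.

```python
from dataclasses import dataclass

# Every page, including the error page, shares this 4-link menu.
MENU = (
    '<nav><a href="/">Home</a><a href="/blog">Blog</a>'
    '<a href="/about">About</a><a href="/contact">Contact</a></nav>'
)


@dataclass
class Response:
    status: int
    page_type: str
    html: str


def render(path: str) -> Response:
    """Hypothetical renderer; article rendering is broken on purpose."""
    if path.startswith("/article/"):
        # Simulated server error: the 500 page still includes the menu.
        return Response(500, "error", MENU + "<h1>Internal Server Error</h1>")
    return Response(200, "home", MENU + "<h1>Welcome</h1>")


def count_menu_links(html: str) -> int:
    # Count <a> tags inside the <nav> block only.
    nav = html.split("<nav>", 1)[1].split("</nav>", 1)[0]
    return nav.count("<a ")


def weak_article_test() -> bool:
    # Only checks the menu, so the error page passes too.
    return count_menu_links(render("/article/42").html) == 4


def guarded_article_test() -> bool:
    resp = render("/article/42")
    # Boilerplate guards first (status, page type), then the detail.
    return (
        resp.status == 200
        and resp.page_type == "article"
        and count_menu_links(resp.html) == 4
    )
```

`weak_article_test()` returns `True` for a page that never rendered, while `guarded_article_test()` returns `False` and exposes the server error.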

Gotta admit I’m not a huge fan of TDD (but always open to being convinced), even after having pair programmed with TDD evangelists.

One argument is that good TDD should make you consider business requirements first and not get stuck on implementation details.

That works for me when writing test scenarios.

But when it comes to asserts, I can’t get away from the fact that I end up getting into implementation details. My code changes often while I experiment my way forward. If I write my asserts early on during development, I end up changing them so often that I lose track of the big picture.

For me it’s often easier to add the asserts later on, when my implementation feels close to being ready. -

@Fast-Nop I don't think I got through clearly.

The act of the dev writing their own tests first forces them to think about what they're building. This reveals poor or missing design decisions before they're implemented. If another dev does this, they may leave too many things as "an exercise for the reader".

It's okay if they do it together in code pairing sessions, but you don't want to separate the person defining design/tests from the person implementing it. That usually leads to confused and frustrated devs. -

@lungdart Missing requirements also would pop up during the coding. You don't need to write tests for that. A dev who writes some whatever-code without clear requirements will also write the test cases in the same manner.

Missing requirements are part of validation, as in, are we building the right product. Tests are about verification, as in, are we building the product right. Abusing verification for validation is way too late in the product cycle. -

@Fast-Nop Are you talking about old-school IV&V (independent verification and validation)? That's some 1999 stuff right there!

The only other engineer I ever worked with who brought that software policy up was in her mid 50s and came from an electrical engineering background.

That process is slow AF. Hopefully you're talking about something else, or you're working for the government or something -

@Fast-Nop “it becomes partially pointless if the same person writing the tests is the same one who writes the actual code”

I argue that even in the case of a dev who writes bug-free code, tests are still needed to ensure future stability.

A year later, when new devs join or that amazing dev has forgotten about an edge case and someone attempts a refactor, the tests are there to make sure they don’t screw it up. -

@lungdart If you don't have clear requirements, writing tests just doesn't cut it, no matter which process. As I said, if you write code while you don't even know what you shall implement, you will write tests in the same manner.

Also, I'm writing software that does follow processes, because it's not some consumer-grade stuff where nobody really gives a shit.

@jiraTicket Sure, that covers regression testing, but only in the sense that the code continues to do whatever it has done before, regardless of whether it has ever done the right thing. -

@Fast-Nop I've yet to work anywhere where I've gotten clear requirements. Usually it's up to me to work with the stakeholders to come up with them.

So for me it works something like this:

* We need x. What does x do?

* What should it do when y happens? What about z?

* Generate behavior definitions and check that the stakeholders accept them

* Start writing acceptance tests, and realize I missed some non-obvious edge cases

* Go back and clarify behavior with the stakeholders

* Repeat until acceptance tests are finished

* Start writing the unit tests I think I'll need

* Realize I'm dumb and start over

* Iterate until unit tests are done

* Write code until unit tests pass

* Use the units to finish the code until the acceptance tests pass

* Go back to the stakeholders, have them tell me they never wanted this, and show them the behaviors they signed off on

* Go back to defining behaviors... -

@lungdart Yeah, and hoping to even find the edge cases because when writing your tests, your IQ somehow doubles compared to when writing code, which is why you get struck by genius insights when writing tests, but not when writing code.

You also somehow manage to trick your genius ideas supply into thinking that test code isn't code because otherwise, your genius level would immediately drop back to half, just like it does for normal code.

And then you wonder why software with mandatory certification isn't developed that way? ;-) -

I think TDD should be a loose term.

Technically, once you have tests for a certain part of the code, any change you make there is TDD, since you already wrote the tests.

I find TDD helpful when you know exactly what you need.

Otherwise I find it easier to write a little bit of code and get a sense of whether the current design works before proceeding.

Which I understand is really only a problem if you are doing OOP. -

@jiraTicket That's just an incomplete test suite. The test is not wrong, and that is how tests in TDD work: you write a failing test covering a single case, ignoring all the others. When you have code 'implementing' that test (which now passes), the code is focused on that single test case. Then you write another test covering another case and adjust your code to make that test pass. Write enough tests and make enough adjustments to the code until you cover all the cases and your code is squeezed tightly into the tests.
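A minimal Python sketch of that one-case-at-a-time loop, using leap years as a stand-in problem (the function `is_leap_year` and the order of the steps are illustrative, not taken from the thread). Each test was written first and failed against the previous implementation; the comments note which test forced which branch in.

```python
def is_leap_year(year: int) -> bool:
    if year % 400 == 0:   # step 3: forced in by test_400_year_rule
        return True
    if year % 100 == 0:   # step 2: forced in by test_century_not_leap
        return False
    return year % 4 == 0  # step 1: this line alone made test_divisible_by_4 pass


# Step 1: first failing test, covering one case and ignoring the rest.
def test_divisible_by_4():
    assert is_leap_year(2024)
    assert not is_leap_year(2023)


# Step 2: the step-1 implementation said 1900 is a leap year, so this failed.
def test_century_not_leap():
    assert not is_leap_year(1900)


# Step 3: the step-2 implementation said 2000 is not a leap year, so this failed.
def test_400_year_rule():
    assert is_leap_year(2000)


if __name__ == "__main__":
    for test in (test_divisible_by_4, test_century_not_leap, test_400_year_rule):
        test()
    print("all tests pass")
```

A case none of these tests exercises would be a gap in the suite rather than a wrong test, which is the point being made here.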

If you have a case that is not covered by your tests, that's just begging for another test - an incomplete test suite -

@Fast-Nop you're being facetious.

TDD is a proven methodology that reduces bug density in a measurable way.

Writing tests first does not require more genius to prevent bugs. If you truly think this, you're a shit dev. Sorry to be harsh about it, but your 30-year-old development process has been proven to release worse software slower.

I'd recommend diving into the State of DevOps reports from Google's DORA research team to get more insight. -

@Fast-Nop One benefit of TDD is that when you write the tests first, it forces the implementation into a structure that's possible to write tests for: a very modular structure. Coincidentally, that kind of code is also easier to read and less spaghetti.
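A minimal Python sketch of how test-first pressure tends to change structure, assuming a hypothetical `greeting` function that depends on the time of day. The first version reaches for the real clock and is awkward to test; writing the test first pushes the clock out into a parameter (plain dependency injection), which makes the function deterministic under test.

```python
from datetime import datetime
from typing import Callable


# Hard to test: the result depends on when the test happens to run.
def greeting_untestable() -> str:
    return "Good morning" if datetime.now().hour < 12 else "Good afternoon"


# Test-first version: the clock is injected, defaulting to the real one.
def greeting(now: Callable[[], datetime] = datetime.now) -> str:
    return "Good morning" if now().hour < 12 else "Good afternoon"


def test_greeting():
    # Fixed fake clocks make the test deterministic.
    assert greeting(lambda: datetime(2024, 1, 1, 9, 0)) == "Good morning"
    assert greeting(lambda: datetime(2024, 1, 1, 15, 0)) == "Good afternoon"
```

Production callers still just call `greeting()`; only the test supplies a fake clock.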

-

@lungdart TDD doesn't prove that. All it proves is that having systematic testing in place does reduce bugs, which is no surprise.

Also, it was your point that you need tests to come up with the edge cases - and the conclusion is that when writing code, you somehow fail to come up with the edge cases. That's simple logic. -

@Fast-Nop no, that wasn't my point. You're either twisting my words, or I'm not communicating clearly.

My point is that it's FASTER to come up with edge cases when developing tests than when developing the actual code base. This is because the test code base is SMALLER than the actual code base and requires less time.

So if you get nearly the same design insights from developing less code as from developing more code, you should shift left and discover/fail early.
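One way to read that claim is that listing edge cases as test data is cheap compared with handling them in code. A minimal Python sketch, assuming a hypothetical `chunk` helper: the table of cases is a few lines, and it pins down decisions (empty input, ragged last chunk, invalid size) before the implementation exists.

```python
def chunk(items: list, size: int) -> list[list]:
    """Split items into consecutive chunks of at most `size` elements."""
    if size <= 0:
        raise ValueError("size must be positive")
    return [items[i:i + size] for i in range(0, len(items), size)]


# Edge cases written down as data first: (items, size, expected).
EDGE_CASES = [
    ([], 3, []),                                  # empty input
    ([1, 2], 5, [[1, 2]]),                        # size larger than the list
    ([1, 2, 3, 4], 2, [[1, 2], [3, 4]]),          # exact multiple
    ([1, 2, 3, 4, 5], 2, [[1, 2], [3, 4], [5]]),  # ragged last chunk
]


def test_chunk_edge_cases():
    for items, size, expected in EDGE_CASES:
        assert chunk(items, size) == expected, (items, size)


def test_chunk_rejects_non_positive_size():
    for bad in (0, -1):
        try:
            chunk([1, 2, 3], bad)
        except ValueError:
            continue
        raise AssertionError(f"size={bad} should raise ValueError")
```

With pytest, the same table could feed `@pytest.mark.parametrize` so each case is reported separately.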

And yes, they have measured TDD against testing after the fact, and TDD is associated with reduced failure rates and faster delivery times. -

@lungdart In requirement based engineering, one of the requirements for a requirement is that it needs to be testable, that's where testability comes in early on. Also, you need a verification plan early on - as in, at the beginning of the project. That's a critical milestone to pass.
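A minimal Python sketch of the traceability side of a verification plan: each requirement carries an ID, each test declares which requirement it verifies, and a check reports requirements nobody has verified yet. The requirement IDs and texts, the `verifies` decorator, and `password_is_valid` are all hypothetical; real certified projects usually manage this with dedicated requirements tooling.

```python
from typing import Callable

# Hypothetical requirements, each phrased so that it can be tested.
REQUIREMENTS = {
    "REQ-010": "Passwords shorter than 8 characters are rejected.",
    "REQ-011": "Passwords of 8 or more characters are accepted.",
}

# Requirement ID -> names of the tests that verify it.
TRACE: dict[str, list[str]] = {}


def verifies(*req_ids: str) -> Callable[[Callable], Callable]:
    """Decorator that records which requirements a test function covers."""
    def register(test: Callable) -> Callable:
        for req in req_ids:
            TRACE.setdefault(req, []).append(test.__name__)
        return test
    return register


def password_is_valid(password: str) -> bool:
    return len(password) >= 8


@verifies("REQ-010")
def test_short_password_rejected():
    assert not password_is_valid("a" * 7)


@verifies("REQ-011")
def test_eight_char_password_accepted():
    assert password_is_valid("a" * 8)


def untraced_requirements() -> list[str]:
    """Requirements with no verifying test, i.e. gaps in the verification plan."""
    return sorted(req for req in REQUIREMENTS if not TRACE.get(req))
```

Adding a requirement without a matching test makes `untraced_requirements()` report it, which surfaces untestable or forgotten requirements early rather than at the end.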

Sure, if you code in any way with no planning ahead and then at the end suddenly have to think about how to test that mess, then that's an issue that TDD would have solved - but that's not specific to TDD.

Also, when I look at the test scripts at my company, that effort at least equals the actual development. That's no wonder, because the amount of information they contain is similar, in particular at higher certification levels where things like statement coverage are mandatory.

Related Rants

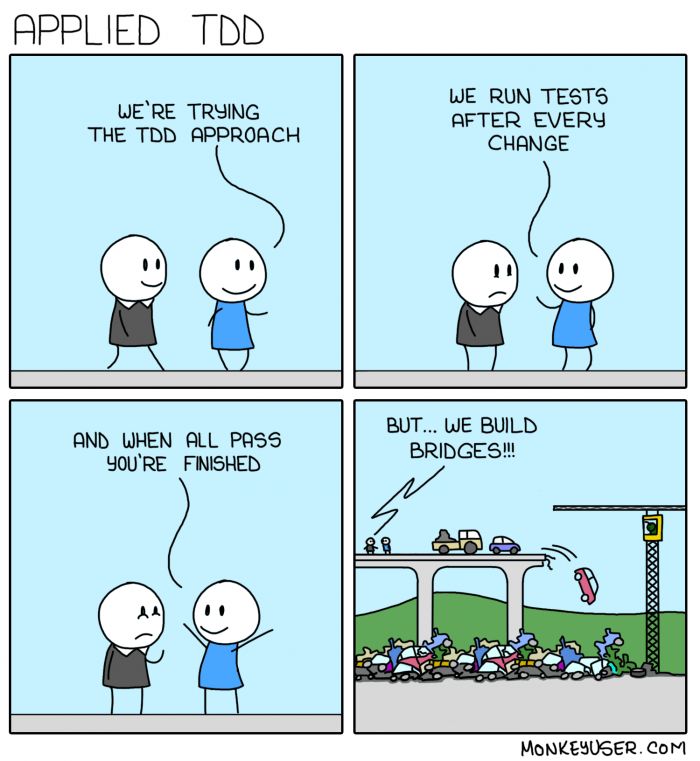

Do you follow test driven development?

TDD in construction 🚧

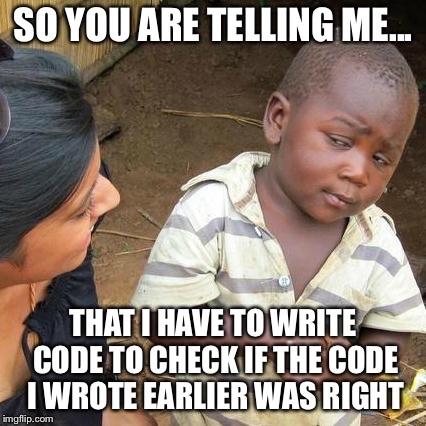

they say do TDD

but it's often that my test is wrong while my implementation is correct lol.

rant

tdd