Comments

-

That last sentence makes everything you said much easier to understand. Good metaphor and very interesting

-

Hazarth: I don't think you can even quantize lower than 4 bits effectively. Even with 4 bits you already limit yourself to only 16 discrete float values; with 3 bits you're down to 8, with 2 bits down to 4, and 1 bit would be practically unrepresentable, as you can't fit any meaningful float into one bit other than 1 or 0, which would require the network to inflate considerably to still fit in any useful information.

I'd say 3bit maaaaybe could work but it would really really suck as you can't even move in increments lower than 0.1 at that point, which can really screw up the gradients then...
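To make the level counts concrete, here is a minimal sketch of a uniform quantizer. It assumes the weights live in a fixed [-1, 1] range, which is purely an illustration; real schemes typically use per-block scales, so actual step sizes will differ. The function names are made up for this example.

```python
def quantization_levels(bits, lo=-1.0, hi=1.0):
    """Evenly spaced values representable with `bits` bits (assumed range [lo, hi])."""
    count = 2 ** bits
    step = (hi - lo) / (count - 1)
    return [lo + i * step for i in range(count)]


def quantize(value, levels):
    """Snap a value to the nearest representable level."""
    return min(levels, key=lambda level: abs(level - value))


# Print how many levels each bit width gives and how far apart they sit.
for bits in (4, 3, 2, 1):
    levels = quantization_levels(bits)
    step = levels[1] - levels[0]
    print(f"{bits}-bit: {len(levels)} levels, step {step:.3f}")
```

On this assumed range the gap between neighbouring values is roughly 0.13 at 4 bits and roughly 0.29 at 3 bits, so small weight updates simply vanish once they are rounded.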

That being said, it mostly sounds like you're proposing a reverse knowledge distillation technique (with KD you go from BIG -> SMALL), and it seems you want to go from SMALL -> BIG by treating the SMALL model as a really bad "teacher" that you're supposed to ignore... I doubt it will work well though: the optimization space of something that has 13B params is gigantic, and the bad NN won't be bad enough.

-

@ookami that's why you're enrolling, so you will understand! You'll get there soon enough. 😊

-

@Hazarth

I basically shut my brain off at the 1-bit mark, thanks Hazarth for noticing the error.

You summarized it way better than I could.

The question that remains is: what would constitute a sufficiently bad teacher?

Do we have techniques in ML for that?

Is there a way to develop very inaccurate models?

-

Hazarth: @Wisecrack I'm not sure you can train a sufficiently large bad teacher network. The issue really is that there are more wrong answers than there are right answers, and NNs are heavily based in randomness. If you train two NNs on identical data for the same number of steps, they will both converge, but they can each use vastly different parameters to reach the same result, because ultimately it doesn't matter if they calculate X * Y + 1 where X=0, Y=1 or X + 1 * Y where X=1, Y=0. NNs can combine the operations in any number of ways, which depends on how their weights were initialized (which is random) and which ones were closer to the optimized path at that time.
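One simple way to see "different parameters, same result" is to swap the hidden units of a tiny network. The sketch below is a toy with hand-picked weights (not trained, and not anyone's actual model): the two parameter sets differ, yet they compute the same function for every input.

```python
def relu(value):
    return max(0.0, value)


def tiny_net(x, hidden_weights, hidden_biases, output_weights):
    """One input, one hidden ReLU layer, one linear output."""
    hidden = [relu(w * x + b) for w, b in zip(hidden_weights, hidden_biases)]
    return sum(o * h for o, h in zip(output_weights, hidden))


# Hypothetical parameters: params_b is params_a with the two hidden units swapped.
params_a = ([1.0, -2.0], [0.5, 1.0], [3.0, 0.25])
params_b = ([-2.0, 1.0], [1.0, 0.5], [0.25, 3.0])

for x in (-1.0, 0.0, 0.5, 2.0):
    print(x, tiny_net(x, *params_a), tiny_net(x, *params_b))
```

With more hidden units the number of such equivalent arrangements grows factorially, which is one reason two converged networks rarely share weights.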

You can ofc get rid of the randomness and train a bad teacher with the same seed as the good net, but then what's the point, it's just the same training data for longer... hmm

-

Hazarth: @Wisecrack I mean, random noise is a pretty bad teacher, but what does it teach? The only thing it tells the new network is how to not be random... and how does a bad LLM differ from random noise? What's a "wrong" sentence that still tells you something about converging to a correct sentence? That's what I mean when I say the wrong-answer space is much larger than the right-answer one. There are thousands of other things that don't fall under the bad teacher's knowledge... it only not-knows one thing in your proposed method... but you would need it to know all of the wrong things... which is the same as knowing only the right thing.

So essentially a properly bad teacher would have to just be the inversion of the best teacher, which might mean generating every possible random noise other than the right answers.

-

@Hazarth I'm obviously speculating, but I think you may be half correct here.

If there's any merit to the idea, then I think the bad teacher will have a *very specific* type of distribution, rather than simply being Gaussian.

For an ancillary example, there are different types of noise (white, blue, brown, pink, grey, etc.). Likewise, it was recently discovered that the *type* of regularization term used is responsible for non-random clustering of labels into semantic categories during self-supervised learning.

So there is definitely something to the suggestion that if the correct distribution can be found, then there could also exist a negative image as it were.

That is to say, what we might be looking for is a measure that reflects the relative complement or symmetric difference, rather than the absolute complement, of our samples (training and verification).
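For anyone rusty on the terms, here is a minimal sketch with Python's built-in sets. The universe and the sets A and B are tiny made-up examples (A standing in for "the samples", B for some other set); nothing here is tied to a real dataset.

```python
# Toy universe of ten items; A and B are arbitrary illustrative subsets.
universe = set(range(10))
A = {0, 1, 2, 3}
B = {2, 3, 4, 5}

absolute_complement = universe - A   # everything that is not in A
relative_complement = B - A          # the part of B that is not in A
symmetric_difference = A ^ B         # in exactly one of A or B

print(sorted(absolute_complement))
print(sorted(relative_complement))
print(sorted(symmetric_difference))
```

The relative complement and the symmetric difference stay small and depend on B, whereas the absolute complement grows with the whole universe.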

Absolute complement would be everything that's not in the training set (incorrect answers).

-

What this might look like is mapping a subset of Not A to another set B, learning a schema of some sort.

If there's anything to it, then it is probably the next leg up on single-character methods and simple diffusion, but I don't know enough about statistics to take it past mere speculation.

-

In fact the 'infinite attention length' models for GPT are doing something similar to this but for the positive case: they use the information present in self-attention, intra-head, and then, in lieu of creating more heads, use alternative methods to decide attention on larger contexts.

Something probably gets lost in the mix here of course, but I digress.

The idea is that the system doesn't try to learn *everything* that is not set A, but rather some small subset of Not A.

And this is useful because, as the network grows, it can use counterfactual joint distributions of this negative set to better pin down any element of A (A = the training and validation sets).

-

Actually the more I think about it the more sense it makes.

I tried my hand at converting the idea to set notation:

A^c ∧ (A × B) = d ∉ A, where either 1. (x ∉ A) ∈ B or (x ∉ A) ⊆ B, or 2. (d ∉ A) approximates A ∆ B as the training and validation sets grow,

and where the approximation of A ∆ B can be used to derive and calculate any A from d.

In fact A^c ∧ (A × B) suggests that what we're looking for to begin with is pairs of elements A × B ⊄ A, i.e. that the pairs are themselves NOT contained in A, conditioning A to a particular distribution, namely one where the ordered pairs between sets A and B are not a subset of A.

This seems counterintuitive, and my application of sets is amateur at best, but the logic is that every training and validation sample, let alone sample of a distribution, contains within it some constraint on the overall distribution, to the exclusion of samples that don't belong to that distribution, no?

-

For example, training on the MNIST database, every example of '0' also in some way excludes the digits 1 through 9, some more than others, based on similarity. If you train to recognize the digit '0' using a lot of samples, you don't need to train it to recognize every '0' to the exclusion of the full range of things it isn't; for some samples you just say "this isn't a 1, a 2, or a 3", and for others you say "this isn't a 5, 7, or 9", etc.

And the overall joint distribution of these negative samples should improve performance on recognizing any *given* sample.
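A rough sketch of what "this isn't a 1, a 2, or a 3" could look like as a training signal: penalize whatever probability the model puts on the excluded digits. The function name and the two probability vectors are invented for illustration; this loosely resembles what is sometimes called learning from complementary labels, and is only one way to read the idea.

```python
import math


def negative_label_loss(probs, excluded_classes, eps=1e-12):
    """Sum of -log(1 - p_k) over classes the sample is known NOT to be."""
    return -sum(math.log(max(1.0 - probs[k], eps)) for k in excluded_classes)


# Hypothetical predicted probabilities over digits 0-9 for an image of a '0'.
confident = [0.91] + [0.01] * 9
confused = [0.3, 0.3, 0.2, 0.2] + [0.0] * 6

# The sample only carries the hint "not a 1, not a 2, not a 3".
excluded = [1, 2, 3]
print(negative_label_loss(confident, excluded))
print(negative_label_loss(confused, excluded))
```

The confused prediction is penalized more, even though the sample never states which digit it actually is.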

And if you think about it, it actually makes sense:

A sample, be it training or validation, tells us more about the overall distribution when we *also* include *explicit* information about what the sample *is not*. Typically this is done by measuring training and validation loss.

-

Weirdly, it almost seems like doing the inverse of validation, or putting validation before training. Approaching it from this last statement looks absurd, until the reasoning is explained.

Maybe I'm reaching though.

Related Rants

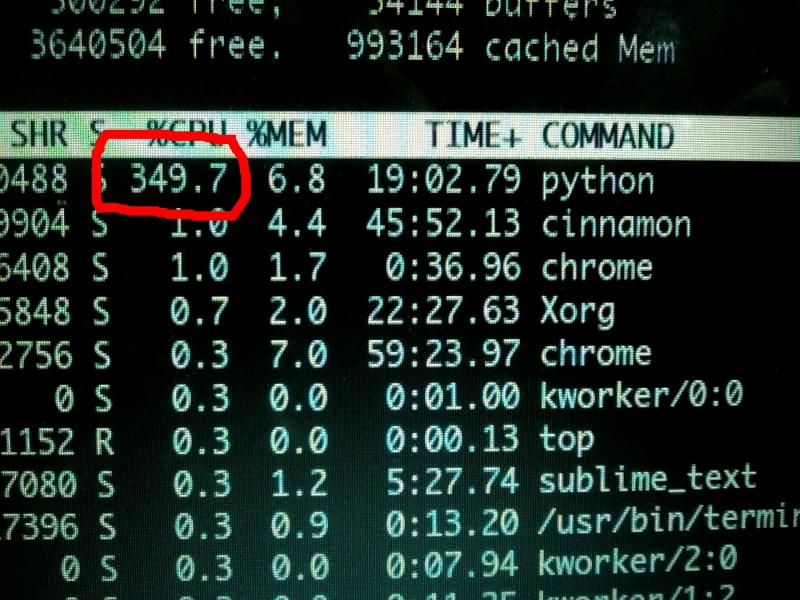

Machine Learning messed up!

Machine Learning messed up! When your CPU is motivated and gives more than his 100%

When your CPU is motivated and gives more than his 100% What is machine learning?

What is machine learning?

New LLM releases have shown they can cut bit widths and still gain relative efficiency by increasing size. They figured out it's actually worth it.

However, there's a caveat: under 4-bit quantization the model loses a *lot* of quality (high perplexity). Essentially, without new quantization techniques, they're out of runway. The only direction they can go from here is better LoRA implementations/architecture, better base models, and larger models themselves.
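For reference, perplexity is the exponential of the average negative log-likelihood per token, so lower is better. A minimal sketch, assuming you already have the probability the model assigned to each correct token (the numbers below are made up):

```python
import math


def perplexity(token_probs):
    """exp of the mean negative log-probability of the correct tokens."""
    mean_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_nll)


# Hypothetical per-token probabilities from a sharper and a blurrier model.
print(perplexity([0.5, 0.4, 0.6]))
print(perplexity([0.1, 0.05, 0.2]))
```

A model that gives every correct token probability 0.25 has a perplexity of about 4, i.e. it is as unsure as a uniform pick among four options.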

I do see one improvement though.

By taking the same underlying model and reducing it to 3, 2, or even 1 bit, assuming the distribution is bit-agnostic (even if the output isn't), the smaller network acts as an inverted supervisor.

In other words, the higher-precision model is likely to be *more precise and accurate* than a bitsize-handicapped one of equivalent parameter count. Sufficient sampling would then allow the 4-bit quantized model to train against a lower-bit quantization of itself, on the theory that it's hard to generate a correct (low perplexity, low loss) answer or sample, but *easy* to generate one that's wrong.

And if you have a model of higher accuracy, and a version that has a much lower accuracy relative to the baseline, you should be able to effectively bootstrap the better model.
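One possible reading of this, as a sketch only: keep the usual loss on the correct answer, and add a bonus for disagreeing with the low-bit copy. Everything below is an assumption made for illustration (the function names, the `weight` value, and the choice of KL divergence); the rant does not specify a loss, and a term like this is unbounded, so it would need care in practice.

```python
import math


def softmax(logits):
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, target_index):
    return -math.log(probs[target_index])


def kl_divergence(p, q):
    """KL(p || q); assumes q has no zero entries (true for softmax outputs here)."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def inverted_teacher_loss(student_logits, bad_teacher_logits, target_index, weight=0.1):
    """Hypothetical loss: fit the target, and get rewarded for differing from the bad teacher."""
    student = softmax(student_logits)
    bad = softmax(bad_teacher_logits)
    return cross_entropy(student, target_index) - weight * kl_divergence(bad, student)


# Toy logits: the bad (low-bit) teacher prefers class 1, the true class is 0.
bad_teacher = [0.0, 2.0, 0.0]
print(inverted_teacher_loss([2.0, 0.0, 0.0], bad_teacher, target_index=0))
print(inverted_teacher_loss([0.0, 2.0, 0.0], bad_teacher, target_index=0))
```

The student that copies the bad teacher gets the higher loss. Whether the low-bit copy is wrong in a useful, structured way rather than just noisy is exactly the open question.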

This is similar to the approach of AlphaGo playing against itself, or how certain drones autohover, where they calculate the wrong flight path first (looking for high loss) because it's simpler, and then calculate relative to that to get the "wrong" answer.

If crashing is flying with style, failing at crashing is *flying* with style.

random

ml

chatgpt

diffusion

machine learning