Comments

-

Yeah, it broke our Nix build.

But as @devRancid mentioned at least it was reverted in a recent release 😅 -

@devRancid after a whole bunch of backlash, less than a day ago:

https://github.com/serde-rs/serde/... -

Just so I get this right...

To support a "precompiled macro" feature, which Rust doesn't seem to support by default, they baked a "compile it and insert the binary into the package" step into the build chain...?

Could anyone enlighten me why this precompile stuff seems to be such a necessity?

While I can understand the uproar, and imho this is definitely a bad idea TM, I would really want to know the why.

Coz to me it sounds at the moment like "optimization by whacking shit together". -

@IntrusionCM well it does, and it's called proc_macros. The idea behind them is to extend Rust itself with macro features, such as automatically implementing traits, adding specific behaviours, or even extending or mutating the input TokenStream. A proc_macro is essentially a mini Rust crate that acts on the Rust code that gets supplied to it, hence the need for a compile step before that.

If we're comparing that with C/C++ macros, it's essentially those, but compiled separately (with all the advantages of that) and written in the same language instead of some pattern-matching system.

You could for example build a macro that parses HTML into functional Rust logic that builds that specific HTML, while still getting validation checking and stuff like that. The html!(...) macro is one example of this. There are obviously tons of other uses for that, as you can imagine.
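A rough sketch of that idea, with one big caveat: a real proc_macro has to live in its own crate (with `proc-macro = true` in its Cargo.toml), so it doesn't fit in a single file. The example below swaps in a declarative `macro_rules!` macro instead. The names `tag!` and `render_greeting` are made up for illustration and are not the actual html!(...) macro.

```rust
// Hypothetical stand-in for an html!-style macro. This is a declarative
// macro_rules! macro, NOT a proc_macro, so that it fits in one file.
macro_rules! tag {
    // tag!(p, "text") expands at compile time into code building "<p>text</p>".
    ($name:ident, $body:expr) => {
        format!("<{0}>{1}</{0}>", stringify!($name), $body)
    };
}

// Illustrative helper that uses the macro like ordinary Rust code.
fn render_greeting(user: &str) -> String {
    tag!(p, format!("Hello, {}!", user))
}

fn main() {
    println!("{}", render_greeting("world"));
}
```

The tag name is checked as an identifier when the macro expands, which is a very small version of the "validation at compile time" point. A proc_macro can go much further, because it runs arbitrary Rust code over the token stream.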

Just for the love of god, don't do what the serde devs did. -

@thebiochemic yeah...

But what's the need of doing it in a macro way?

It worked without it. Why the necessity to do it in a macro way... That would interest me deeply. -

@IntrusionCM it just comes down to ease of use at the end of the day. Ah.. and you can't have a proc_macro in the same lib crate as the lib that's using it, because of how it works, as mentioned before.

In the case of serde, I don't need to implement serialization by hand, instead I just use

#[derive(Serialize, Deserialize)]

and I'm good. In the background, that proc_macro (or whatever they tried) destructures the types into their components and builds a specialized set of functions for them (which is essentially a compile-time substitute for reflection). It creates a certain layer of abstraction, of course.

However, I don't understand why anyone would do what the serde devs did in the first place. Like, how would they even come to the conclusion that "hey, it might be a good idea to inject a fucking binary"..
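To make "builds a specialized set of functions" a bit more concrete, here is a hand-written sketch of the kind of code a derive could generate. Everything in it is an assumption for illustration: `ToJson` and `Point` are made-up names, and serde's real `Serialize` trait looks different.

```rust
// Hypothetical trait standing in for a serialization trait.
// This is NOT serde's actual API.
trait ToJson {
    fn to_json(&self) -> String;
}

struct Point {
    x: i32,
    y: i32,
}

// Roughly what a derive macro would write for you: one impl per type,
// produced from the struct's field names at compile time.
impl ToJson for Point {
    fn to_json(&self) -> String {
        format!("{{\"x\":{},\"y\":{}}}", self.x, self.y)
    }
}

fn main() {
    let p = Point { x: 1, y: 2 };
    println!("{}", p.to_json());
}
```

With a derive, the `impl` block is the part you don't have to type out or keep in sync when fields change.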

like wtf -

Just wondering... compiling parts of the code to auto-generate further source files is nothing new. Ordinary makefiles have been able to do that for decades: just have the executable as intermediate target as well as dependency for the lib.

Assuming that other projects only use the lib, but don't modify it, that would be a one-time compile of that part.

Is the Rust build system really so bad that it can't even keep up with makefiles? Or were the devs just too lazy to do it that way? -

@IntrusionCM "optimization by whacking shit together" is a respectable expression and I will claim it as my own from now on

-

@Fast-Nop No, I think you got that wrong.. it is getting compiled once, for all the stuff that doesn't change.

However, since you're talking about makefiles, that's exactly what proc_macros are not. A proc_macro (after being compiled once) runs at the point where the preprocessor would run in C/C++, which means every time you compile something that uses this specific macro library in your target. As a matter of fact, proc_macros get compiled the moment you add them to your project in VSCode, for example, because they can give you additional hints and stuff.

And whenever you build your project, the preprocessor needs to be rerun before the actual compile starts.

The closest you would get to makefiles are the build.rs and Cargo.toml files (I think?), but you rarely need to mess with stuff like that, because cargo does a good enough job of pregenerating and configuring everything for you (unless maybe for IoT stuff, but even then there are presets for that). -

@thebiochemic That's missing the point. My question was, why does that helper executable have to be shipped as binary? With a makefile, it would be possible to generate that executable from its source, and only once, as prep for the following actual compilation run of the lib itself.

Given that the intermediate executable is not supposed to change, or else it would be pointless to distribute it as binary, that needs to be built only once. That is, on the building machine instead of on the lib maintainer's machine.

Or did I get that totally wrong, and the executable in question is not a helper one, but the resulting lib itself? -

@Fast-Nop no, you've got it right.

What you describe is what usually happens, yeah. You shouldn't need to ship a binary, because it's being built on the developer's machine once. That's why I was so confused by the serde devs' decision to forcefully ship a precompiled binary.

If we talk about the binary, it's essentially something like a .lib or .so file that contains the stuff for the proc_macro, basically that intermediate you're talking about. However, in serde's case there was no way to verify that it was *actually* just the compiled file, especially because at that time there was no way to precompile it yourself (other than letting rustc + cargo do it internally).

People (rightfully so) got suspicious and trust has been destroyed almost immediately. -

I skimmed over the dynamic loading bit and it seems like everything is still compiled from source, so if you trust the code in the repo (which you do anyway), then this is exactly as verifiable as any other pipeline.

-

@Fast-Nop I meant the now removed dynamic loading logic, but then I realized that this build script probably runs on the dev machine before publish:

https://github.com/pinkforest/...

I think Cargo distributes the entire project folder except its own /target folder by default, so if this binary is in the project folder then it'll be distributed to anyone that pulls Serde. -

Either way this is incredibly inconvenient. For example, Cargo needs to be explicitly told to ignore that the working directory contains uncommitted changes. I really don't see why anyone would allow this in their project without strong evidence that it's a major DX improvement.

-

@Fast-Nop I know. Cargo normally applies gitignore and halts if there are uncommitted but trackable changes left in the directory before publish, so one of these behaviours has to be manually overridden for this to work. That in turn increases the maintenance burden, because contributors have to watch out for a type of issue no other Rust project has.

-

On the note of trust though, I think Serde is primarily maintained by David Tolnay. He maintains a stupid amount of code for Rust, much more than anyone could ever audit and more importantly diverse enough to mount far subtler supply chain attacks than this. If you use Rust, you already trust him.

Related Rants

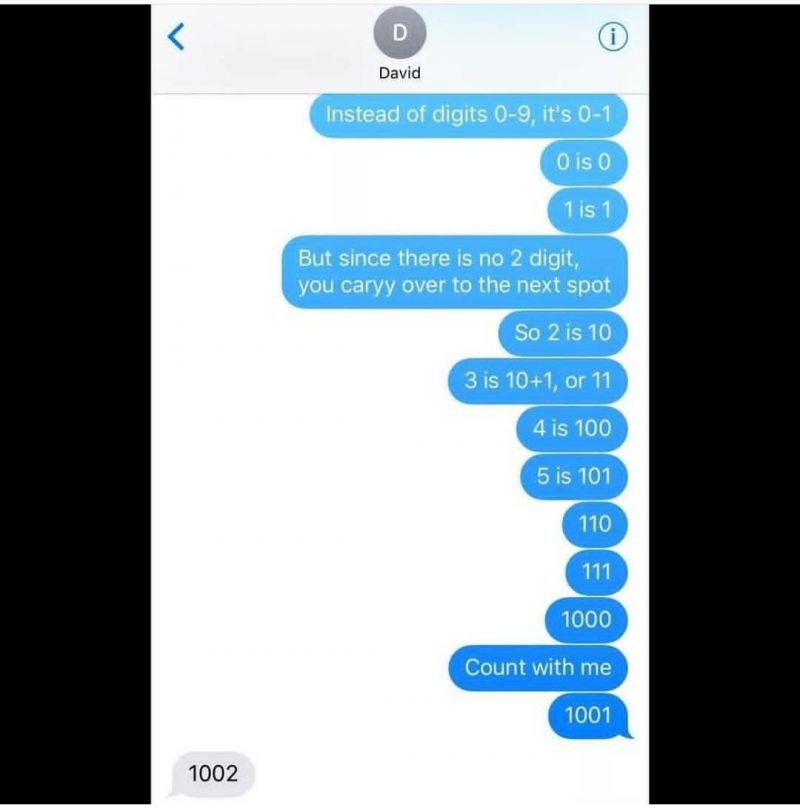

So, my wife sends me this picture because our car had 111,111 miles on it. Of course she called me a nerd when...

So, my wife sends me this picture because our car had 111,111 miles on it. Of course she called me a nerd when... Binary is easy to teach

Binary is easy to teach EDIT: devRant April Fools joke (2018)

-------------------------

Hey everyone! As some of you have already noti...

EDIT: devRant April Fools joke (2018)

-------------------------

Hey everyone! As some of you have already noti...

https://github.com/serde-rs/serde/...

Shit like this makes me wonder wtf is going on in some developers' heads.

TL;DR: the serde devs sneakily forced a precompiled binary onto all users of the library who use serde_derive, with no obvious way to verify what's in this binary and no obvious way to opt out, essentially causing all sorts of havoc.

The last thing I want in a fucking serialization library (especially the most popular one) is not being able to verify whether something shady is going on. All in the name of compilation speed.

Yeah, compilation speed my ass.

The worst thing of it all is, even if I decide to drop serde as a direct dependency, it will still download the binary and potentially use it, because of transitive dependencies. But I guess I will try to disable serde wherever possible and implement my own solution for that. Thanks, but no thanks.

This is so fucking stupid, it's unbelievable.

rant

asshats

binary

rust

serde