Comments

-

Solid rant!

Welcome to devrant.

Please note that the devrant tag/category is for rants about the platform itself.

"rant" would be correct in this case. -

chatGPT is just a faster Google and it should accept its role. It's good for deduplicating answers, but it really should stop saying random word salad nonsense and just give me concise lists that could possibly be the answer to what I'm looking for 😒

-

@jestdotty No you got it exactly wrong.

ChatGPT is not meant to be a better Google search. Its role is to have a conversation with you. You can ask questions and you get answers, but it’s not the same as a search. -

Think of chat gpt as a coworker who can never say "I don't know".

You can't trust everything someone tells you with confidence blindly either. You need to confirm what it tells you. The pro version will cite sources with Bing now, which helps a lot. -

@lungdart exactly! And not only can it not say "I don’t know", it doesn’t know that it doesn’t know, so in that case it will hallucinate some bullshit which sounds like it could be correct.

-

@lungdart it should have done so from the start. If it admitted that it took the answer from a Stack Overflow answer from 10 years ago, it would have immediately alerted the user and saved them a lot of headache.

-

@jestdotty I disagree. I can have a conversation with a support person with the aim to solve a technical problem.

That doesn’t mean that I want to bond with that person.

The moralization that you feel from talking with chatgpt is just a side effect of the attempt to sound like a human and of course some manually implemented safeguards against sensitive topics. Judging those safeguards is irrelevant for the definition of what chatgpt is supposed to be. -

@daniel-wu it likely does not know where it's taking answers from. Its training set is a single source, and I doubt they put metadata in.

-

@ostream and what do humans do?

We come out of the womb with neurons firing randomly, save for a few pretrained instincts. We're fed data for years. First we start to emulate the movements and behavior we see. Then physics confirms whether moving your legs that way works or not. Then family laughs encourage you to repeat cute and funny behaviors. Parents start to punish you when you misbehave, reducing those behaviors.

Eventually you're an adult who's a mix of your neural network layout and all your experiences, predicting the next best thing to do.

Related Rants

-

Root30I’m getting really tired of all these junior-turn-senior devs who can’t write simple code asking ChatGPT t...

Root30I’m getting really tired of all these junior-turn-senior devs who can’t write simple code asking ChatGPT t... -

R3ym4nn11I am really going nuts about everyone using ChatGPT. Had literally discussions 'bUt cHaTgPt sAyS iTs TrUe', wh...

R3ym4nn11I am really going nuts about everyone using ChatGPT. Had literally discussions 'bUt cHaTgPt sAyS iTs TrUe', wh... -

DrPenguin9

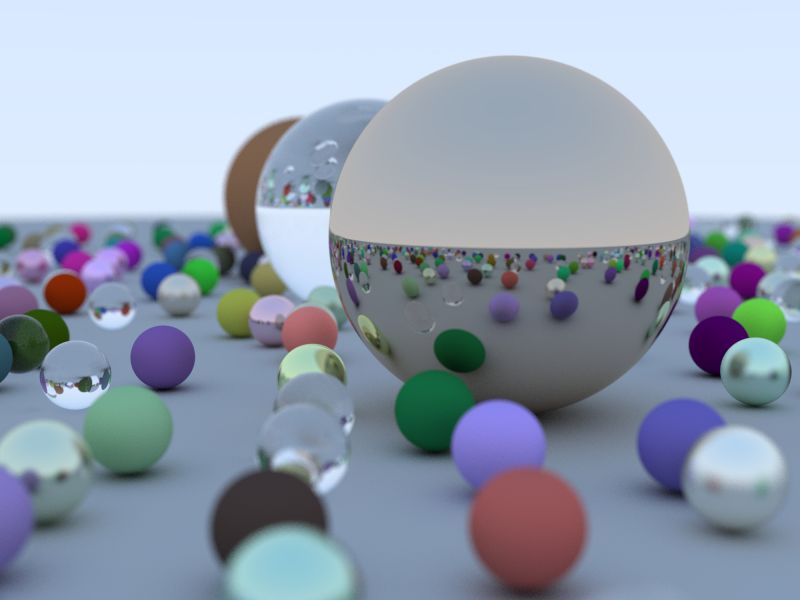

DrPenguin9 !rant

Some days ago I finished "Ray tracing in a weekend" (Peter Shirley) and I'm learning a lot :D

In the n...

!rant

Some days ago I finished "Ray tracing in a weekend" (Peter Shirley) and I'm learning a lot :D

In the n...

This is a story of suffering and despair.

I'm working on a build system for our firmware. Nothing major, just a cmake script to build everything and give me an elf file.

I'm fairly new to cmake at that point, so it's not abundantly clear to me how the `addDirectory` command works.

Now those of you with experience in cmake will say:

"Hold on there champ, this is not a cmake command, the real thing is add_subdirectory()"

Well, that is not what chatGPT told me. I still trusted the fucking thing at this point. It explained that it was in fact a command, and that it added all subsequent source files from a given folder. When I asked it to provide me with sources, it gave me a dead link in a cmake dot com subdomain.

I spent FUCKING HOURS trying to understand why I couldn't find that shitty command, I looked through that shitty page they call documentation through and through, I fucking checked previous and nightly versions, the command was nowhere to be found.

Until I found an old-as-time post on Stack Overflow...

Someone had made a macro with that name, that did what GPT had described...
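
For anyone hitting the same wall, here is a minimal sketch of the difference. The built-in `add_subdirectory()` only processes another folder's `CMakeLists.txt`; it does not collect source files on its own. The `addDirectory` macro below is a guess at what such a user-defined helper could look like based on the description above, not the actual Stack Overflow code, and the `drivers`/`src` folders, the `FIRMWARE_SOURCES` variable, and the target name are all made up for illustration.

```cmake
cmake_minimum_required(VERSION 3.16)
project(firmware C)

# Built-in command: runs drivers/CMakeLists.txt (hypothetical folder).
# It does NOT gather source files by itself.
add_subdirectory(drivers)

# Hypothetical user-defined macro, NOT part of CMake.
# Appends every .c file found directly in `dir` to FIRMWARE_SOURCES.
macro(addDirectory dir)
  file(GLOB _dir_sources "${dir}/*.c")
  list(APPEND FIRMWARE_SOURCES ${_dir_sources})
endmacro()

# Assumed layout: ./src holds the firmware sources.
addDirectory(src)
add_executable(firmware.elf ${FIRMWARE_SOURCES})
```

Since a macro like this lives in someone's project and not in CMake itself, it will never show up in the official command reference, no matter which version you check.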

On the positive side, I know cmake now. I also don't use this fucking deep learning piece of shit anymore. Unless you write simple JS or blink LEDs with an Arduino, it codes like a junior high on every kind of glue on the market.

devrant

chatgpt

cmake