Ranter

Join devRant

Do all the things like

++ or -- rants, post your own rants, comment on others' rants and build your customized dev avatar

Sign Up

Pipeless API

From the creators of devRant, Pipeless lets you power real-time personalized recommendations and activity feeds using a simple API

Learn More

Comments

-

C0D4643836y@don-rager these are pretty damn fast when done right, I use them all the time. But it's easily done in an expensive manner.

C0D4643836y@don-rager these are pretty damn fast when done right, I use them all the time. But it's easily done in an expensive manner.

@catgirl

A select with multiple (10+) left joins into the same table will slow things down if you don't have millions of records lying around. -

Lots of nested sub-queries will do it especially when you're going to return more than a single row

-

TRUNCATE on an important table. Really expensive, billions of dollars in some cases. It's most effective when you don't have a working backup.

-

Create anything with a large number of joins and scalar operations. Anything with scalar analytical operations won't be executed in parallel.

-

Just do something like:

Select * from table1 inner join table2 on mod(table1.id, 10) = mod(sqrt(table2.id), 10)

Make sure to pick 2 very large tables. -

JOINs are only slow when non Indexes fields are used and / or the result set get's very large.

LIKE can use an index for the whole string till wildcard (hence LIKE '%...%' no index).

Subselects are derived tables... Depending on result set / query.

@ItsNotMyFault got it right.

Best is to use functions to prevent index usage and force creating an large result set....

But there is another way.

Simple OR bomb.

Just generates a cartesian product between two large tables and append a ton of OR combinations between both of them.

The optimizer will have fun. :) -

Cartesian products are fun. Quickly and exponentially increase the data to be processed.

select max(1) from table1 t1, table2 t2, table3 t3, table4 t4 order by t1.id desc;

Increase that as you like. Also max with same table or others.

Ordering a exponentially growing dataset in a way that basically reverses it isn't funny for many sorting algorithms and adds some extra time.

I used that on my RaspberryPi and it took 1,5 sec for 4x the same small table (~20 rows). Sorting added another 0,2 secs.

If you go crazy, you can probably pin a cpu to 100% for a very long time.

If you do that more often also swap some tables, max with min, etc. to make caching impossible.

Also, If the dataset is bigger than the ram, add all tables in there.

And run as many queries in paralell as the server has cpu cores. -

Also most SQL should be turing complete. How about running most of your logic or ported complex algorithms.

https://media.ccc.de/v/...

Also take a look at PL/SQL.

Related Rants

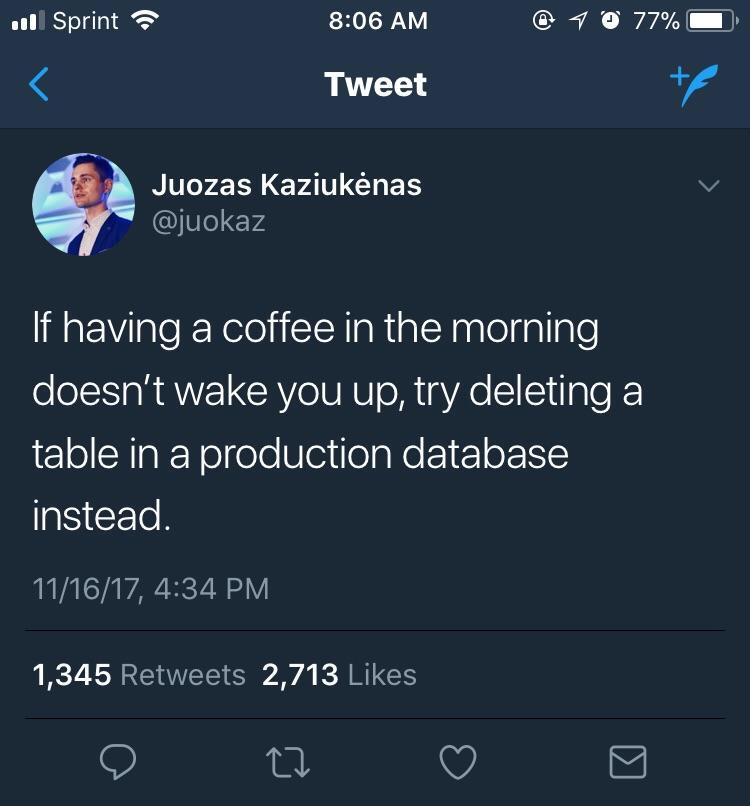

Oh sh*t

Oh sh*t

What’s a really expensive MySQL Query? I need to add it to a CMS application for “research purposes”.

question

mysql horror

database

php

mysql sucks

mysql

sql