
Search - "perplexity"

-

My colleague's thought process has become the average of all existing LLMs. He has Perplexity, ChatGPT, Claude and Cursor open in different tabs every time I go check on him. He is literally performing a majority vote across all possible LLMs -> he has basically stopped thinking.

-

Newer LLMs have realized they can cut bit rates and still gain relative efficiency by increasing size. They figured out it's actually worth it.

However, and there's a caveat: go under 4-bit quantization and the model loses a *lot* of quality (high perplexity). Essentially, without new quantization techniques, they're out of runway. The only direction they can go from here is better LoRA implementations/architecture, better base models, and larger models themselves.
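To make the caveat concrete, here is a minimal sketch of the two quantities involved. It assumes plain min-max uniform quantization on a toy weight list (real LLM quantizers are more elaborate), and the helper names `quantize`, `mse` and `perplexity` are made up for illustration. It only shows that rounding error grows as the bit width shrinks, and how perplexity is computed from per-token probabilities; it does not measure any real model.

```python
import math

def quantize(weights, bits):
    """Min-max uniform quantization to `bits` bits, then dequantize back to floats."""
    lo, hi = min(weights), max(weights)
    steps = 2 ** bits - 1            # number of intervals on the grid
    scale = (hi - lo) / steps
    if scale == 0:                   # all weights identical: nothing to round
        return list(weights)
    return [lo + round((w - lo) / scale) * scale for w in weights]

def mse(a, b):
    """Mean squared error between two equal-length lists."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def perplexity(token_probs):
    """exp of the mean negative log-likelihood the model gave the true tokens."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)

# Toy "weights" (an assumption, not taken from any model).
weights = [math.sin(i) for i in range(64)]
errors = {bits: mse(weights, quantize(weights, bits)) for bits in (8, 4, 2, 1)}

for bits, err in errors.items():
    print(f"{bits}-bit reconstruction MSE: {err:.6f}")
```

A model that assigns probability 0.25 to every correct token has a perplexity of 4, i.e. it is as uncertain as a uniform guess over four options; lower is better.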

I do see one improvement though.

By taking the same underlying model, and reducing it to 3, 2, or even 1 bit, assuming the distribution is bit-agnostic (even if the output isn't), the smaller network acts as an inverted supervisor.

In other words, the larger model is likely to be *more precise and accurate* than a bitsize-handicapped one of equivalent parameter count. Sufficient sampling would, in other words, allow the 4-bit quantized model to train against a lower-bit quantization of itself, on the theory that it's hard to generate a correct (low perplexity, low loss) answer or sample, but *easy* to generate one that's wrong.

And if you have a model of higher accuracy, and a version that has a much lower accuracy relative to the baseline, you should be able to effectively bootstrap the better model.
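One rough way to picture the "inverted supervisor" is a contrastive score: reward tokens the better model likes more than the handicapped one does. The sketch below borrows the scoring rule from contrastive decoding, which is a decoding-time trick and not the training loop described in this rant. The two probability tables are invented toy numbers standing in for the 4-bit model and its lower-bit copy, and `contrast_scores` and `alpha` are hypothetical names.

```python
import math

def contrast_scores(strong_probs, weak_probs, alpha=0.1):
    """Score each token by log p_strong - log p_weak.

    Tokens the strong model itself finds implausible (below alpha times
    its top probability) are skipped, so the weak model cannot promote junk.
    """
    cutoff = alpha * max(strong_probs.values())
    scores = {}
    for tok, p in strong_probs.items():
        if p < cutoff:
            continue
        scores[tok] = math.log(p) - math.log(weak_probs.get(tok, 1e-12))
    return scores

# Toy next-token distributions (assumptions, not real model output).
strong = {"paris": 0.6, "london": 0.25, "banana": 0.15}   # stands in for the 4-bit model
weak = {"paris": 0.3, "london": 0.3, "banana": 0.4}       # stands in for the 1-2 bit copy

scores = contrast_scores(strong, weak)
best = max(scores, key=scores.get)
print(best, scores)
```

Tokens the weak copy over-produces end up with negative scores, which is the "easy to generate one that's wrong" signal; turning that into a training objective would need more than this.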

This is similar to the approach of AlphaGo playing against itself, or how certain drones autohover, where they calculate the wrong flight path first (looking for high loss) because it's simpler, and then calculate relative to that to get the "wrong" answer.

If crashing is flying with style, failing at crashing is *flying* with style.

-

Adaptive Latent Hypersurfaces

The idea is, rather than adjusting embedding latents, we learn a model that takes the context tokens as input and generates an efficient adapter or transform of the latents, so when the latents are grabbed for that same input, they produce outputs with much lower perplexity and loss.

This can be trained autoregressively.

This is similar in some respects to hypernetworks, but applied to embeddings.

The thinking is we shouldn't change latents directly, because any given vector will generally be orthogonal to any other, and changing the latents introduces variance for some subset of other inputs over some distribution that is partially or fully out-of-distribution to the current training and verification data sets, thus ultimately leading to a plateau in loss-drop.

Therefore, by autoregressively taking an input, and learning a model that produces a transform on the latents of a token dictionary, we can avoid this ossification of global minima, by finding hypersurfaces that adapt the embeddings, rather than changing them directly.
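A minimal sketch of the shape of this idea, with no training loop. Everything here is an assumption for illustration: the tiny vocabulary, the random frozen embeddings, the mean-pooled context summary, and the choice of a per-dimension scale-and-shift as the "transform" (the post does not say what form the adapter takes). `W_SCALE` and `W_SHIFT` stand in for the adapter generator's weights, which would be the only parameters that get trained.

```python
import random

random.seed(0)
DIM = 4
VOCAB = ["the", "cat", "sat"]

# Frozen token embeddings: never updated in this scheme.
EMBED = {tok: [random.uniform(-1, 1) for _ in range(DIM)] for tok in VOCAB}

# Hypothetical adapter-generator weights (the only trainable part).
W_SCALE = [[random.uniform(-0.1, 0.1) for _ in range(DIM)] for _ in range(DIM)]
W_SHIFT = [[random.uniform(-0.1, 0.1) for _ in range(DIM)] for _ in range(DIM)]

def mean_vec(vectors):
    """Average a list of equal-length vectors into one summary vector."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]

def matvec(matrix, vec):
    """Plain matrix-vector product."""
    return [sum(a * b for a, b in zip(row, vec)) for row in matrix]

def make_adapter(context):
    """Map context tokens to a (scale, shift) transform over the latent space."""
    summary = mean_vec([EMBED[t] for t in context])
    scale = [1.0 + s for s in matvec(W_SCALE, summary)]  # starts near identity
    shift = matvec(W_SHIFT, summary)
    return scale, shift

def adapted_embedding(token, context):
    """Look up the frozen latent and apply the context-generated transform."""
    scale, shift = make_adapter(context)
    return [s * e + b for s, e, b in zip(scale, EMBED[token], shift)]

snapshot = {tok: list(vec) for tok, vec in EMBED.items()}
a = adapted_embedding("cat", ["the"])
b = adapted_embedding("cat", ["sat"])
print(a)
print(b)
```

The same token gets a different effective latent depending on context, while the stored table `EMBED` is left untouched, which is the property the argument above relies on.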

The result is a network that essentially acts as a compressor of all relevant use cases, without leading to overfitting on in-distribution data and underfitting on out-of-distribution data.

-

https://perplexity.ai/page/... love these articles. Those researchers have too much money to spend anyway. About AI companions, it could very well be true. They're not good enough yet, but I could prefer it as well. They destroyed everything with identity politics and an anti-human mindset. Not the fault of AI. I already prefer internet relationships over real-life ones. I had such a busy social life before, it was actually terrible thinking back about it. I really started to hate society since covid and love my detached life. Sometimes my parents come over and make me realize that world exists, snapping me out of my peaceful, quiet, happy and stable life. Go away with your dystopia. "We are rebuilding the fireplace in our house, not the way we want, but in a way the insurance company wants so it's practically impossible to get a fire, and then we insure it.". Wraaaaahhhhh. That kinda fucked up logic. I can't stand it. "That's just how it works.". Waaaaaah. Society in a nutshell.

This Perplexity news site is a good way to stay up-to-date tho, can read it for hours.

-

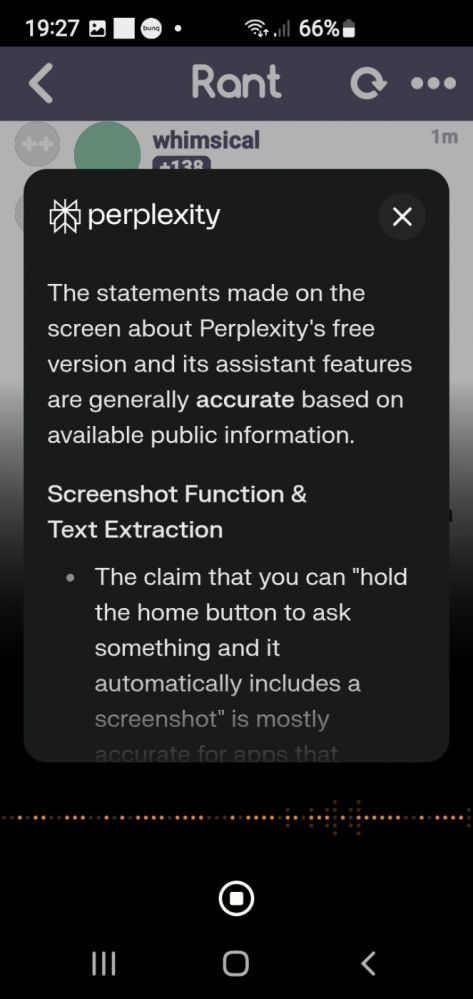

I've been using Perplexity for a few days now and want to give a shout-out to the assistant. My favorite function also works on the free version without ads: by holding the home button you can ask something and it automatically includes a screenshot. That's handy, it understands every meme and you can extract the text of any image or document you're looking at. You can fact-check statements made in a rant, for example. I actually pay for it, but without realizing it I hadn't configured that on my phone yet, and thus I can say the free version of the assistant is very convenient. As for a review of the pro features, I'll post something at the end of the month. Because its main functionality is like deep search, but everyone has that now. Is it better? We'll see.
