Join devRant

Do all the things like

++ or -- rants, post your own rants, comment on others' rants and build your customized dev avatar

Sign Up

Pipeless API

From the creators of devRant, Pipeless lets you power real-time personalized recommendations and activity feeds using a simple API

Learn More

Search - "xml parsing"

-

The time my Java EE technology stack disappointed me most was when I noticed some embarrassing OutOfMemoryError in the log of a server which was already in production. When I analyzed the garbage collector logs I got really scared seeing the heap usage was constantly increasing. After some days of debugging I discovered that the terrible memory leak was caused by a bug inside one of the Java EE core libraries (Jersey Client), while parsing a stupid XML response. The library was shipped with the application server, so it couldn't be replaced (unless installing a different server). I rewrote my code using the Restlet Client API and the memory leak disapperead. What a terrible week!

2

2 -

Imagine, you get employed to restart a software project. They tell you, but first we should get this old software running. It's 'almost finished'.

A WPF application running on a soc ... with a 10" touchscreen on win10, a embedded solution, to control a machine, which has been already sold to customers. You think, 'ok, WTF, why is this happening'?

You open the old software - it crashes immediately.

You open it again but now you are so clever to copy an xml file manually to the root folder and see all of it's beauty for the first time (after waiting for the freezed GUI to become responsive):

* a static logo of the company, taking about 1/5 of the screen horizontally

* circle buttons

* and a navigation interface made in the early 90's from a child

So you click a button and - it crashes.

You restart the software.

You type something like 'abc' in a 'numberfield' - it crashes.

OK ... now you start the application again and try to navigate to another view - and? of course it crashes again.

You are excited to finally open the source code of this masterpiece.

Thank you jesus, the 'dev' who did this, didn't forget to write every business logic in the code behind of the views.

He even managed to put 6 views into one and put all their logig in the code behind!

He doesn't know what binding is or a pattern like MVVM.

But hey, there is also no validation of anything, not even checks for null.

He was so clever to use the GUI as his place to save data and there is a lot of parsing going on here, every time a value changes.

A thread must be something he never heard about - so thats why the GUI always freezes.

You tell them: It would be faster to rewrite the whole thing, because you wouldn't call it even an alpha. Nobody listenes.

Time passes by, new features must be implemented in this abomination, you try to make the cripple walk and everyone keeps asking: 'When we can start the new software?' and the guy who wrote this piece of shit in the first place, tries to give you good advice in coding and is telling you again: 'It was almost finished.' *facepalm*

And you? You would like to do him and humanity a big favour by hiting him hard in the face and breaking his hands, so he can never lay a hand on any keyboard again, to produce something no one serious would ever call code.4 -

Today's project was answering the question: "Can I update tables in a Microsoft Word document programmatically?"

(spoiler: YES)

My coworker got the ball rolling by showing that the docx file is just a zip archive with a bunch of XML in it.

The thing I needed to update were a pair of tables. Not knowing anything about Word's XML schema, I investigated things like:

- what tag is the table declared with?

- is the table paginated within the table?

- where is the cell background color specified?

Fortunately this wasn't too cumbersome.

For the data, CSV was the obvious choice. And I quickly confirmed that I could use OpenCSV easily within gradle.

The Word XML segments were far too verbose to put into constants, so I made a series of templates with tokens to use for replacement.

In creating the templates, I had to analyze the word xml to see what changed between cells (thankfully, very little). This then informed the design of the CSV parsing loops to make sure the dynamic stuff got injected properly.

I got my proof of concept working in less than a day. Have some more polishing to do, but I'm pretty happy with the initial results!6 -

I have to write an xml configuration parser for an in-house data acquisition system that I've been tasked with developing.

I hate doing string parsing in C++... Blegh! 16

16 -

So I just spent the last few hours trying to get an intro of given Wikipedia articles into my Telegram bot. It turns out that Wikipedia does have an API! But unfortunately it's born as a retard.

First I looked at https://www.mediawiki.org/wiki/API and almost thought that that was a Wikipedia article about API's. I almost skipped right over it on the search results (and it turns out that I should've). Upon opening and reading that, I found a shitload of endpoints that frankly I didn't give a shit about. Come on Wikipedia, just give me the fucking data to read out.

Ctrl-F in that page and I find a tiny little link to https://mediawiki.org/wiki/... which is basically what I needed. There's an example that.. gets the data in XML form. Because JSON is clearly too much to ask for. Are you fucking braindead Wikipedia? If my application was able to parse XML/HTML/whatevers, that would be called a browser. With all due respect but I'm not gonna embed a fucking web browser in a bot. I'll leave that to the Electron "devs" that prefer raping my RAM instead.

OK so after that I found on third-party documentation (always a good sign when that's more useful, isn't it) that it does support JSON. Retardpedia just doesn't use it by default. In fact in the example query that was a parameter that wasn't even in there. Not including something crucial like that surely is a good way to let people know the feature is there. Massive kudos to you Wikipedia.. but not really. But a parameter that was in there - for fucking CORS - that was in there by default and broke the whole goddamn thing unless I REMOVED it. Yeah because CORS is so useful in a goddamn fucking API.

So I finally get to a functioning JSON response, now all that's left is parsing it. Again, I only care about the content on the page. So I curl the endpoint and trim off the bits I don't need with jq... I was left with this monstrosity.

curl "https://en.wikipedia.org/w/api.php/...=*" | jq -r '.query.pages[0].revisions[0].slots.main.content'

Just how far can you nest your JSON Wikipedia? Are you trying to find the limits of jq or something here?!

And THEN.. as an icing on the cake, the result doesn't quite look like JSON, nor does it really look like XML, but it has elements of both. I had no idea what to make of this, especially before I had a chance to look at the exact structured output of that command above (if you just pipe into jq without arguments it's much less readable).

Then a friend of mine mentioned Wikitext. Turns out that Wikipedia's API is not only retarded, even the goddamn output is. What the fuck is Wikitext even? It's the Apple of wikis apparently. Only Wikipedia uses it.

And apparently I'm not the only one who found Wikipedia's API.. irritating to say the least. See e.g. https://utcc.utoronto.ca/~cks/...

Needless to say, my bot will not be getting Wikipedia integration at this point. I've seen enough. How about you make your API not retarded first Wikipedia? And hopefully this rant saves someone else the time required to wade through this clusterfuck.12 -

I have come across the most frustrating error i have ever dealt with.

Im trying to parse an XML doc and I keep getting UnauthorizedAccessException when trying to load the doc. I have full permissions to the directory and file, its not read only, i cant see anything immediately wrong as to why i wouldnt be able to access the file.

I searched around for hours yesterday trying a bunch of different solutions that helped other people, none of them working for me.

I post my issue on StackOverflow yesterday with some details, hoping for some help or a "youre an idiot, Its because of this" type of comment but NO.

No answers.

This is the first time Ive really needed help with something, and the first time i havent gotten any response to a post.

Do i keep trying to fix this before the deadline on Sunday? Do i say fuck it and rewrite the xml in C# to meet my needs? Is there another option that i dont even know about yet?

I need a dev duck of some sort :/39 -

Fuck my life! I have been given a task to extract text (with proper formatting) from Docx files.

They look good on the outside but it is absolute hell parsing these files, add to these shitty XML human error and you get a dev's worst nightmare.

I wrote a simple function to extract text written in 'heading(0-9)' paragraph style and got all sorts of shit.

One guy used a table with borders colored white to write text so that he didn't have to use tabs. It is absolute bullshit.2 -

For some project, I wrote this algoritem in Java to parse a lot of XML files and save data to database. It needs to have some tricks and optimisations to run in acceptable time. I did it and in average, it run for 8-10 minutes.

After I left company they got new guy. And he didn't know Java so they switched to PHP and rewrote the whole project. He did algorithm his way. After rewrite it run around 8 hours.

I was really proud of myself and shocked they consider it acceptable.4 -

So I had been developing a real estate website and developing a MLS feed parser. I had only 1 year experience at that time and parsing a XML feed was already complex enough. On top of it, the client wanted to automate feed download from the MLS provider through HTTP authentication. Managed to do it. Everything worked for 15 days and on 16th day the property location markers stopped appearing on Google maps. Turned out that address to lat-long reverse geocoding was failing because API limit exhausted. My bad, I coded it on view instead of caching the lat-long in database. Fixed it in a day and viola!

-

The feature was to parse a set of fairly complex xml files following a legacy schema. Problem was, the way this was done previously did not conform to the schema so it was a guideline at best, which over the course of many years snowballed into an anarchy where clients would send in whatever and it was continuously updated per case as needed. They wanted to start enforcing their new schema while phasing out the old method.

The good news is that parsing and serialization is very testable, so I rounded up what I could find of example files and got to work. Around the same time I asked our client if they had any more examples of typical cases we need to deal with, and sure enough a couple of days later I receive a zip with hundreds of files. They also point out that I should just disregard the entire old set since they decided to outright cut support for it after all if it makes things simpler. Nice.

I finish the feature in a decent amount of time. All my local tests pass, and the CD tests pass when I push my branches. Once we push to our QA env though and the integration tests run, we get a pass rate of less than 10%.

I spend a couple of days trying to figure out what's going on, and eventually narrow it down to some wires being crossed with the new vs. old xml formats. I'm at a loss. I keep trying to chip away at it until I'm left with a minimal example, and I have one of those lean-back moments where you're just "I don't get it". My tests pass locally, but in the QA environment they fail on the same files.

We're now 3 people around my workstation including the system architect, and I'm demonstrating to the others how baffling and black magic this is. I postulate that maybe something is cached in my local environment and it's not actually testing the new files. I even deleted the old ones.

"Are you sure you deleted the right files?"

"Duh of course -- but let me check..."1 -

I'm rewriting the wrapper I've been using for a couple years to connect to Lord of the Rings Online, a windows app that runs great in wine/dxvk, but has a pretty labyrinthine set of configs to pull down from various endpoints to craft the actual connection command. The replacement I'm writing uses proper XML parsing rather than the existing spaghetti-farm of sed/grep/awk/etc. I'm enjoying it quite a bit.1

-

Aka... How NOT to design a build system.

I must say that the winning award in that category goes without any question to SBT.

SBT is like trying to use a claymore mine to put some nails in a wall. It most likely will work somehow, but the collateral damage is extensive.

If you ask what build tool would possibly do this... It was probably SBT. Rant applies in general, but my arch nemesis is definitely SBT.

Let's start with the simplest thing: The data format you use to store.

Well. Data format. So use sth that can represent data or settings. Do *not* use a programming language, as this can neither be parsed / modified without an foreign interface or using the programming language itself...

Which is painful as fuck for automatisation, scripting and thus CI/CD.

Most important regarding the data format - keep it simple and stupid, yet precise and clean. Do not try to e.g. implement complex types - pain without gain. Plain old objects / structs, arrays, primitive types, simple as that.

No (severely) nested types, no lazy evaluation, just keep it as simple as possible. Build tools are complex enough, no need to feed the nightmare.

Data formats *must* have btw a proper encoding, looking at you Mr. XML. It should be standardized, so no crazy mfucking shit eating dev gets the idea to use whatever encoding they like.

Workflows. You know, things like

- update dependency

- compile stuff

- test run

- ...

Keep. Them. Simple.

Especially regarding settings and multiprojects.

http://lihaoyi.com/post/...

If you want to know how to absolutely never ever do it.

Again - keep. it. simple.

Make stuff configurable, allow the CLI tool used for building to pass this configuration in / allow setting of env variables. As simple as that.

Allow project settings - e.g. like repositories - to be set globally vs project wide.

Not simple are those tools who have...

- more knobs than documentation

- more layers than a wedding cake

- inheritance / merging of settings :(

- CLI and ENV have different names.

- CLI and ENV use different quoting

...

Which brings me to the CLI.

If your build tool has no CLI, it sucks. It just sucks. No discussion. It sucks, hmkay?

If your build tool has a CLI, but...

- it uses undocumented exit codes

- requires absurd or non-quoting (e.g. cannot parse quoted string)

- has unconfigurable logging

- output doesn't allow parsing

- CLI cannot be used for automatisation

It sucks, too... Again, no discussion.

Last point: Plugins and versioning.

I love plugins. And versioning.

Plugins can be a good choice to extend stuff, to scratch some specific itches.

Plugins are NOT an excuse to say: hey, we don't integrate any features or offer plugins by ourselves, go implement your own plugins for that.

That's just absurd.

(precondition: feature makes sense, like e.g. listing dependencies, checking for updates, etc - stuff that most likely anyone wants)

Versioning. Well. Here goes number one award to Node with it's broken concept of just installing multiple versions for the fuck of it.

Another award goes to tools without a locking file.

Another award goes to tools who do not support version ranges.

Yet another award goes to tools who do not support private repositories / mirrors via global configuration - makes fun bombing public mirrors to check for new versions available and getting rate limited to death.

In case someone has read so far and wonders why this rant came to be...

I've implemented a sort of on premise bot for updating dependencies for multiple build tools.

Won't be open sourced, as it is company property - but let me tell ya... Pain and pain are two different things. That was beyond pain.

That was getting your skin peeled off while being set on fire pain.

-.-5 -

I use the ICU format often for translation because it's simple enough and supported on many platforms. It's something of a standard so I can use the same translation string format and similar library functions everywhere.

ICU is like a really simple templating language, somewhere between printf and something like smarty or twig simplified and specifically intended for internationalisation.

I updated a library providing ICU compatible parsing and formatting for one of the platforms I'm using and find tests break. I assume that only thing to change is the API. ICU very rarely changes and if it did it would be unexpected for it to break the syntax in a major way without big news of a new syntax.

The main contributor of the library has changed since some time last year. Someone else picked up the project from previous contributors.

Though the library is heavily advertised as using ICU it has now switched to using a custom extended format that's not fully compatible and that is being driven by use case demand rather than standardisation.

Seems like a nice chap but has also decided for a major paradigm shift for the library.

The ICU format only parses ICU templates for string substitution and formatting. The new format tries to parse anything that looks XML like as well but with much more strict rules only supporting a tiny subset of XML and failing to preserve what would otherwise be string literals.

Has anyone else seen this happen after the handover of an opensource library where the paradigm shifts?3 -

Well, that's a lovely youtube ad, if I ever saw one

XML Parsing Error: no root element found

Location: https://secure.jdn.monster.com/rend...-...

Line Number 1, Column 1:

^

By the looks of it, it may have been an ad for monster.com .

-

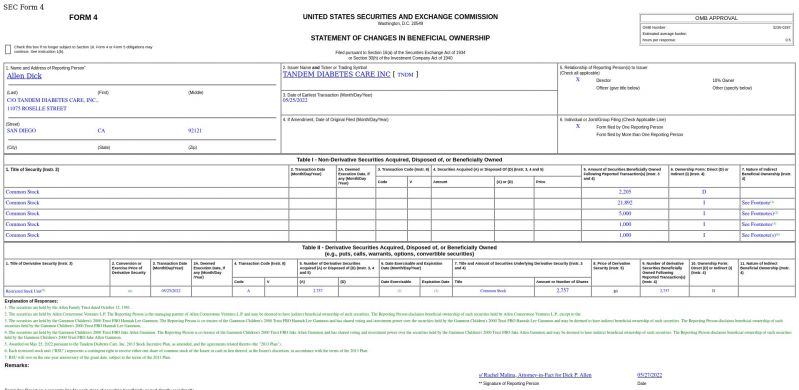

I'm currently working on a project that scrapes the SEC's EDGAR website for type 4 filings.

I currently have the required data in raw text format that somehow looks like xml, i really can't tell what it is but i'm trying to parse this data into json.

I've not parsed something as complex as this before and will appreciate any form of pointers as to how to go about this.

i have attached a screenshot of one sample.

this link fetches the data of a single filing in text format.

https://sec.gov/Archives/edgar/... 5

5 -

[Prestashop question / rant]

Yes, it's not StackOverflow here, neither is it prestashop support forum - but I trust u people most :)

Proper solution for working with big(?) import of products from XML (2,5MB, ~8600 items) to MySQL(InnoDB) within prestashop backoffice module (OR standalone cronjob)

"solutions" I read about so far:

- Up php's max execution time/max memory limit to infinity and hope it's enough

- Run import as a cronjob

- Use MySQL XML parsing procedure and just supply raw xml file to it

- Convert to CSV and use prestashop import functionality (most unreliable so far)

- Instead of using ObjectModel, assemble raw sql queries for chunks of items

- Buy a pre-made module to just handle import (meh)

Maybe an expert on the topic could recommend something?3