Search - "stupid compiler"

-

Finding the bug. The usual flow:

"Omg! I think it's a bug in the compiler"

10 minutes later:

"OK, it surely is a bug in the runtime"

20 minutes later:

"I'm certain this is a bug in the core library"

2 hours later:

"Oh, it's a bug in my code. Again, as usual, I'm the idiot. Stupid world."

-

Just got an email accusing me of not implementing a feature that is quite clearly implemented.

It's not my fault if you're too stupid to #include my header file. Did you just expect the compiler to magically find the functions for you?

Also thanks for raising this with my team lead and his boss.

May you spend eternity in a cold ditch coding JavaScript on a 386 with a 28k modem you disgusting fuck nugget.

-

EEEEEEEEEEEE Some fAcking languages!! Actually barfs while using this trashdump!

The gist: new job, position required adv C# knowledge (like f yea, one of my fav languages), we are working with RPA (using software robots to automate stuff), and we are using some new robot still in beta phase, but robot has its own prog lang.

The problem:

- this language is kind of like ASM (i think so, I'm venting here, it's ASM OK), with syntax that burns your eyes

- no function return values, but I can live with that, at least they have some sort of functions

- emojis for identifiers (like php's $var, but they only aim for shitty features so you use a heart.. ♥var)

- only jump and jumpif for control flow

- no foopin variable scopes at all (if you run multiple scripts at the same time they even share variables *pukes*)

- weird alt characters everywhere. define strings with regular quotes? nah let's be [some mental illness] and use prime quotes (‴ U+2034), and like ⟦ ⟧ for array indexing, but only sometimes!

- super slow interpreter, ex a regular loop to count to 10 (using jumps because yea no actual loops) takes more than 20 seconds to execute, approx 700ms to run 1 code row.

- it supports c# snippets (defined with these stupid characters: ⊂ ⊃) and I guess that's the only c# I get to write with this job :^}

- on top of that, outdated documentation, because yea it's beta, but so crappin tedious with this trial n error to check how every feature works

The question: why in the living fartfaces yolk would you even make a new language when it's so easy nowadays to embed compilers!?! the robot is apparently made in c#, so it should be no funcking problem at all to add a damn lua compiler or something. having a tcp api would even be easier got dammit!!! And what in the world made our company think this robot was a plausible choice?! Did they do a full fubbing analysis of the different software robots out there and accidentally sorted by ease of use in reverse order?? 'cause that's the only explanation i can imagine

Frillin stupid shitpile of a language!!! AAAAAHHH

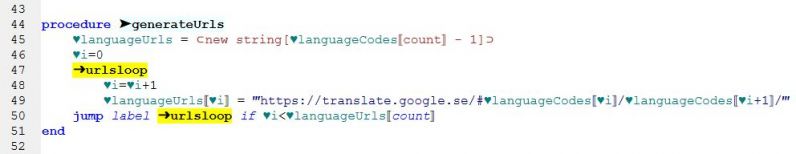

see the attached screenshot of production code we've developed at the company for reference.

Disclaimer: I do not stand responsible for any eventual headaches or gouged eyes caused by the named image.

(for those interested, the robot is G1ANT.Robot, https://beta.g1ant.com/)

-

What's wrong with this code?

std::pair<float, float> foo() { return { 0, 0 }; }

"Nothing," would you say.

That's because you're normal.

But the most stupid C++ compiler ever (M$ VS)

issues an ERROR that converting 0 to float incurs possible "loss of data". So you have to write "0.f".
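A minimal sketch of two ways to spell that function so that no int literal has to be converted to float. This assumes the diagnostic comes from the int-to-float conversion of the literal 0; the function names `foo_literals` and `foo_value_init` are made up for illustration.

```cpp
#include <utility>

// Option 1: write the zeros as float literals, so both arguments
// already have type float when the pair is constructed.
std::pair<float, float> foo_literals() { return { 0.0f, 0.0f }; }

// Option 2: value-initialize the pair. std::pair's default constructor
// value-initializes both members, which gives 0.0f for float.
std::pair<float, float> foo_value_init() { return {}; }
```

Both versions return a pair of two zero floats, and neither needs a static_cast.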

BTW, "0." is a double, so you really have to write "0.f". Or "static_cast<float>(0)" if you like ugly, impossible-to-read code.

-

literally what the fuck is the point of C++

>takes 3 years to make anything half-functional

>language was made in like fucking 1902 so it's damn near fucking impossible to make anything that works without sifting through bumfuck retarded syntax/libraries

>error messages that tell you absolutely nothing of use and are indecipherable garbage 90% of the time

fuck C, fuck it's retarded downie little brother C++, and fuck the stupid fucking boomers who say you're not a real programmer unless you force yourself to become a masochist by using either one of these stupid fucking languages

"oh but it's fast!!11!1!!" yeah but working with it sure as fuck isn't

half the fucking time if I just stop including certain headers in another file then the compiler throws like literally 400 fucking errors at me even though the thing(s) I excluded had no bearing on whatever the compiler decides it wants to loudly bitch and whine about

"oh but games were made on it!!!!111!" yeah not without fucking horrific spaghetti code and 900000 different libraries and dependencies designed just to make a single fucking window

-

TL;DR Pluralsight should be ashamed for taking 299 USD a year and writing some very low-quality quizzes.

I've always heard that Pluralsight is a great platform having some high quality courses, so I chose it as a benefit, as our company was giving us some budget for learning purposes. I've paid (or rather the company did it in the end) 299 USD for this year, which, I guess is not much for US standards, but it is a lot for Eastern European standards.

I didn't actually get to the point of watching any of the courses, but I started to use a feature called "Stack up", which is a long series of questions in a specific theme, like Java, Kotlin, C++, etc., accessible once a day. I must say, I'm amazed by the fact, that people pay quite a great amount of money and they get something so poorly made with a lot of errors and stupid questions.

Take the question from the included image for example. Not only are 2 of the possible answers identical (and thus I failed to select the correct one from 2 equal answers), but the supposedly correct answer is also missing some type specifications. No Java compiler will compile it this way as far as I know. There would be at least 3 ways to fix it.

Then there is today's gem (should be included as first comment) as well, where the answer is wrong in all of Chrome 96, Firefox 95 and Node v10. Heck, THIS IS one of the reasons why you should never use `var` in your JavaScript code, but always `let` and `const`!

So the courses on Pluralsight might be good, but I would be ashamed, if I were to release something like this. People might actually try to solidify their knowledge by solving these quizzes but instead of learning something useful, they will be left with some bullshit. I just don't get how could they release a feature with so much incorrect information and I am kind of disappointed, even if I didn't try the courses yet.

-

Not quite an interview question, but in a competition (I had built a compiler) the jury (they all told me they had studied informatics) asked me what a compiler is... Not in a "let's try to catch him off guard" manner but rather in an "I am too stupid for this world" manner he asked me what a compiler was... And it got even worse: my compiler is based on Linux utils (nasm+ld) and the guy didn't know about Linux. Assembler was much too much for him and when my compiler threw an error (I wanted to show them the error system) he told me I shouldn't present unfinished projects... At least the other two were really nice and I still got 2nd place (behind a person who programmed an NXT thingy)

-

How can I be so fucking stupid?

I was developing and testing a small webserver running on an ESP32.

3 fucking hours were wasted resolving connectivity problems because I did not realize the fucking VPN on my PC was still up thus hiding my ESP32's IP.

How fucking brainless...

I've checked everything. Dead flash, wrong offsets, compiler warnings, CPU freq. config...

-

WTF IS WRONG WITH ASSEMBLY LANGUAGE?!

I was just modifying an existing program for adding a sequence of numbers from the data section and through console input. I studied the code and started modifying it one step at a time. I needed to modify it into a multiplication program. So I started by changing the ADD functions, replaced the result and buffer registers with bigger size and thought I completed it. WELL GUESS WHAT? SHIT JUST GIVES ME SEGMENTATION FAULT! NOW I HAVE TO REDO THE WHOLE THING! WHY DOESN'T IT TELL ME WHICH LINE OF THE CODE I FUCKED UP AT?! STUPID NASM COMPILER.

-

We need an open-source alternative to stack overflow. They have fucking monopolizing pieces of ratshit admins there and lame ass bots.

I HAD A FUCKING 450 REP :/ and now i have "reached my question limit"

I mean it's okay if you want to keep stackoverflow clean, but straight out rejecting new queries should be against your god damn principles, if those mofos have any!

If it is so easy for the mods to downvote and delete a question, why can't they create a trash site called dump.stackoverflow.com? Whenever a question is not following their stupid guidelines, downvote it to oblivion. After a certain limit, that question goes to dump space where it will be automatically removed after 30 days. At least give us 30 fucking days to gather the attention of an audience!

And how does a question define someone's character so much that you downright ban the person from asking new questions? Is there a PhD that we should be doing in our mother's womb to get qualified as a legitimate question author?

"No questions are stupid" is what we usually hear in our school/college life. And that's a stretch, i agree. Some questions are definitely stupid. But "Your questions are so stupid we are removing you from the site" is the worst possible way to deal with a question asker.

Bloody assholes.

Now, can anyone tell me: if I am passing a parcelable list of objects in an intent before starting a new activity, how can I retrieve it in the new activity without getting any Kotlin warnings?

The compiler is saying that the data coming via the intent is of type list<Type!>, aka a list of a platform type, so how do I deal with this warning?

-

I fucking hate the process of setting up IDEs and compilers! All the build files, cmake files, tasks, all that shit.

I understand it's an integral part of coding, but fuck, why does it always take so long to set up a stupid project. Just let me start coding ffs.

Sometimes I get so frustrated that I'd rather write a bash script or run the compiler commands in the shell instead of going through the hassle of setting all this up.

-

Avoid ACPICA if at all possible. It's one garbage tier cluster fuck of bad design, horrible documentation and downright misleading and wrong code

It's meant to consist of an ASL compiler, disassembler, debugger, dumper, various user space utilities and a kernel resident OSPM implementation *if* you can figure out what belongs to what. Even just compiling this pile of trash is a mystery in itself. Think you need the source files in source/common? EEEEH, wrong. Well, at least partially since most of them seem to be for the user space stuff..? Other ones *are* needed on the other hand. At least the disassembler and/or debugger and/or dumper components seem to reference them. Not that I could figure out how to compile those anyways. The real path to your goal seems to be to ignore a seemingly arbitrary subset of source and header files until your linker stops complaining

There's also a bunch of configuration defines, some of which *you* define, some defined *for* you, based on again others. Of course most of them do stupid shit. Enabling the debugger automatically enables debug logging. Enabling the disassembler force enables debug allocation tracking... What?

The code itself isn't of much help either. Looking in "os_specific/service_layers" you find what looks to be reference implementations of acpica functions in certain os' like windows and unix. Of course I had a look because AcpiOsReadMemory is supposed to read physical memory and I don't know how I would even implement that. But hey, osunixxf.c (xf for interface... of course) should tell me. I'll let you see for yourself in the attached image. Apparently it does fuck all and just returns AE_OK. No error, no logging, no nothing. Just ok. As you can imagine, AcpiOsWriteMemory doesn't do much more either.

...okay so maybe physical memory accesses aren't actually used and these functions are some sort of relic from past times? Nope! They are absolutely necessary for doing low level device interaction. WTF. So finally I went to the linux source and checked how *they* implemented them, and just as I thought, these functions are anything but no-ops...

...So for what fucking reason do these stupid interface implementations even exist but to purposefully mislead you?? They aren't used for fucking anything! As far as I know Windows doesn't even *use* ACPICA and Linux have their own fork with working implementations... They just sit there, just to tell you how to NOT do it

So that's some of my thoughts about ACPICA. Note that I haven't even used it as a library yet, I just got it to compile and link and it already fucked with me this much.

There's also so much more I didn't mention, like that you *have* to modify the acpica source in order to get your own platform header working (else #error) even though the docs explicitly instruct you not to, but you get the point

Don't use ACPICA if you don't have to. Save your sanity for something that's worth it

-

C# has become shit.

I work since 2013 with C# (and the whole .NET stack) and I was so happy with it.

Compared to Java it was much leaner; compared to all the shitty new edge frameworks that looked like an unfinished middle school project, it was solid and mature.

It had its problems, but compared to everything else that I tried, it was the quickest and most robust solution.

All went in a downhill leading to a rotten shit lake when all this javascript frenzy began to pop up and everyone wanted to get on the trendy bandwagon.

First they introduced MVC, then .NET Core, now .NET 5-6-7-8.

Now I'm literally engulfed with all these tiny bits of terror javascript provoked and they've implemented in all the parts of their framework.

Everything has to be null checked at compilation time, everything pops up errors "this might be nulll heyyyyy it's important put a ! or a ? you silly!!!" everywhere.

There are JS-ish constructs and syntax shit everywhere.

It's unbearable.

I avoid js like a plague whenever I can (and you know it's not a luxury you get often in the current state of a developer life) and they're slowly turning in some shit js hybrid deformed creature

I miss 2013-2018, when it was all up to me to decide what to do with code and I did some big projects for big companies (200-300k lines of code without unit tests and yes for me it's a lot) without all this hassle.

I literally feel the need c# had to have some compiler rule you can quickly switch called "Senior developer mode" that doesn't trigger alarms and bells for every little stupid thing.

I'm sure you can' turn on/off these craps by some hidden settings somewhere, but heck I feel the need to be an option, so whoever keeps it on should see a big red label on top of the IDE saying "YOU HAVE RETARDED DEV MODE ON"

So they get a reminder that if they use it they are either some fresh junior dev or they are mentally challenged.

-

Trying out the new version of fasm, I realize it's good, and conclude I should update my code to work with it as there's small incompatibilities with the syntax.

So, quick flat assembler lesson: the macro system is freaking nuts, but there are limitations on the old version.

One issue, for instance, is recursive macros aren't easily possible. By "easily" I mean without resorting to black magic, of course. Utilizing the arcane power of crack, I can automatically define the same macro multiple times, up to a maximum recursion depth. But it's a flimsy patch, on top of stupid, and also has limitations. New version fixes this.

Another problem is capturing lines of code. It's not impossible, again, but a pain in the ass that requires too much drug-addled wizardry to deal with. Also fixed in new version.

Why would you want to capture lines of code? Well, because I can do this, for instance:

macro parse line {

··match a =+ b , line \{

····add a,b;

··\}

};

You can process lines of code like this. The above is a trivial example that makes no fucking sense, but essentially the assembler allows you define your own syntax, and with sufficient patience, you can use this feature to develop absolutely super fucking humongous galactic unrolls, so it's a fantastic code emitter.

Anyway, the third major issue is `{}` curlies have to be escaped according to the nesting level as seen in the example; this is due to a parser limitation. [#] hashes and [`] backticks, which are used to concatenate and stringify tokens respectively, have to be escaped as well depending on the nesting level at which the token originates. This was also fixed.

There's other minor problems but that gives you sufficient context. What happens is the new version of fasm fixes all of these problems that were either annoying me, forcing me to write much more mystical code than I'd normally agree to, and in some rare cases even limiting me in what I could do...

But "limiting" needs to be contextualized as well: I understand fasm macros well enough to write a virtual machine with them. Wish I was kidding. I called it the Arcane 9 Machine, A9M for short. Here, bitch was the prototype for the VM my fucking compiler uses: https://github.com/Liebranca/forge/...

So how am I """limited""", then? You wouldn't understand. As much as I hate to say it, that which should immediately be called into question, you're gonna have to trust me. There are many further extravagant affronts to humanity that I yearn to commit with absolute impunity, and I will NOT be DENIED.

Point is code can be rewritten in much simpler, shorter, cleaner form.

Logic can be much more intricate and sophisticated.

Recursion is no longer a problem.

Namespaces are now a thing.

Capturing -- and processing -- lines of code is easier than ever...

Nearly every problem I had with fasm is gone with this update: thusly, my power grows rather... exponentially.

And I SWEAR that I will NOT use it for good. I shall be the most corrupt, bloodthirsty, deranged tyrant ever known to this accursed digital landscape of broken souls and forgotten dreams.

*I* will reforge the world with black smoldering flame.

*I* will bury my enemies in ill-and-damned obsidian caskets.

And *I* will feed their armies to a gigantic, ravenous mass grave...

Yes... YES! This is the moment!

PREPARE THE RITUAL ROOM (https://youtube.com/watch/...)

Couriers! Ride towards the homeland! Bring word of our success.

And you, page, fetch me my sombersteel graver...

I shall inscribe the spell into these very walls...

in the ELEVENTH degree!

** MANIACAL EVIL LAUGHTER **

-

So I figure since I straight up don't care about the Ada community anymore, and my programming focus is languages and language tooling, I'd rant a bit about some stupid things the language did. Necessary disclaimer though, I still really like the language, I just take issue with defense of things that are straight up bad. Just admit at the time it was good, but in hindsight it wasn't. That's okay.

For the many of you unfamiliar, Ada is a high security / mission critical focused language designed in the 80's. So you'd expect it to be pretty damn resilient.

Inheritance is implemented through "tagged records" rather than contained in classes, but dispatching basically works as you'd expect. Only problem is, there's no sealing of these types. So you, always, have to design everything with the assumption that someone can inherit from your type and manipulate it. There's also limited accessibility modifiers and it's not granular, so if you inherit from the type you have access to _everything_ as if they were all protected/friend.

Switch/case statements are only checked that all valid values are handled. Read that carefully. All _valid_ values are handled. You don't need a "default" (what Ada calls "when others"). Unchecked conversions, view overlays, deserialization, and more can introduce invalid values. The default case is meant to handle this, but Ada just goes "nah you're good bro, you handled everything you said would be passed to me".

Like I alluded to earlier, there's limited accessibility modifiers. It uses sections, which is fine, but not my preference. But it also only has three options and it's bizarre. One is publicly in the specification, just like "public" normally. One is in the "private" part of the specification, but this is actually just "protected/friend". And one is in the implementation, which is the actual "private". Now Ada doesn't use classes, so the accessibility blocks are in the package (namespace). So guess what? Everything in your type has exactly the same visibility! Better hope people don't modify things you wanted to keep hidden.

That brings me to another bad decision. There is no "read-only" protection. Granted this is only a compiler check and can be bypassed, but it still helps prevent a lot of errors. There is const and it works well, better than in most languages I feel. But if you want a field within a record to not be changeable? Yeah too bad.

And if you think properties could fix this? Yeah no. Transparent functions that do validation on superficial fields? Nah.

The community loves to praise the language for being highly resilient and "for serious engineers", but oh my god. These are awful decisions.

Now again there's a lot of reasons why I still like the language, but holy shit does it scare me when I see things like an auto maker switching over to it.

The leading Ada compiler is literally the buggiest compiler I've ever used in my life. The leading Ada IDE is literally the buggiest IDE I've ever used in my life. And they are written in Ada.

Side note: good resilient systems are a byproduct of knowledge, diligence, and discipline, not the tool you used.

-

YGGG IM SO CLOSE I CAN ALMOST TASTE IT.

Register allocation pretty much done: you can still juggle registers manually if you want, but you don't have to -- declaring a variable and using it as operand instead of a register is implicitly telling the compiler to handle it for you.

What's more, spilling to stack is done automatically, keeping track of whether a value is or isn't required so it's only done when absolutely necessary. And variables are handled differently depending on whether they are input, output, or both, so we can eliminate making redundant copies in some cases.

It's a thing of beauty, defenestrating the difficult aspects of assembly, while still writing pure assembly... well, for the most part. There's some C-like sugar that's just too convenient for me not to include.

(x,y)=*F arg0,argN. This piece of shit is the distillation of my very profound meditations on fuckerous thoughtlessness, so let me break it down:

- (x,y)=; fuck you in the ass I can return as many values as I want. You dont need the parens if theres only a single return.

- *F args; some may have thought I was dereferencing a pointer but Im calling F and passing it arguments; the asterisk indicates I want to jump to a symbol rather than read its address or the value stored at it.

To the virtual machine, this is three instructions:

- bind x,y; overwrite these values with Fs output.

- pass arg0,argN; setup the damn parameters.

- call F; you know this one, so perform the deed.

Everything else is generated; these are macro-instructions with some logic attached to them, and theres a step in the compilation dedicated to walking the stupid program for the seventh fucking time that handles the expansion and optimization.

So whats left? Ah shit, classes. Disinfect and open wide mother fucker we're doing OOP without a condom.

Now, obviously, we have to sanitize a lot of what OOP stands for. In general, you can consider every textbook shit, so much so that wiping your ass with their pages would defeat the point of wiping your ass.

Lets say, for simplicity, that every program is a data transform (see: computation) broken down into a multitude of classes that represent the layout and quantity of memory required at different steps, plus the operations performed on said memory.

That is most if not all of the paradigm's merit right there. Everything else that I thought to have found use for was in the end nothing but deranged ways of deriving one thing from another. Telling you I want the size of this worth of space is such an act, and is indeed useful; telling you I want to utilize this as base for that when this itself cannot be directly used is theoretically a poorly worded and overly verbose bitch slap.

Plainly, fucktoys and abstract classes are a mistake, autocorrect these fucking misspelled testicle sax.

None of the remaining deeper lore, or rather sleazy fanfiction, that forms the larger canon of object oriented as taught by my colleagues makes sufficient sense at this level for me to even consider dumping a steaming fat shit down its execrable throat, and so I will spare you bearing witness to the inevitable forced coprophagia.

This is what we're left with: structures and procedures. Easy as gobblin pie.

Any F taking pointer-to-struc as its first argument that is declared within the same namespace can be fetched by an instance of the structure in question. The sugar: x ->* F arg0,argN

Where ->* stands for failed abortion. No, the arrow by itself means fetch me a symbol; the asterisk wants to jump there. So fetch and do. We make it work for all symbols just to be dicks about it.

Anyway, invoking anything like this passes the caller to the callee. If you use the name of the struc rather than a pointer, you get it as a string. Because fuck you, I like Perl.

What else is there to discuss? My mind seems blank, but it is truly blank.

Allocating multitudes of structures, with same or different types, should be done in one go whenever possible. I know I want to do this, and I know whichever way we settle for has to be intuitive, else this entire project has failed.

So my version of new always takes an argument, dont you just love slurping diarrhea. If zero it means call malloc for this one, else it's an address where this instance is to be stored.

What's the big idea? Only the topmost instance in any given hierarchy will trigger an allocation. My compiler could easily perform this analysis because I am unemployed.
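A rough C++ analogue of that idea, using placement new. This is only a sketch under assumptions: the helper `make_at`, the counter `g_allocations`, and the `Outer`/`Inner` types are all hypothetical stand-ins, not anything from the actual compiler.

```cpp
#include <cstdlib>
#include <new>

// Hypothetical example types: Outer embeds Inner by value.
struct Inner { int value; };
struct Outer { Inner inner; int tag; };

// Counts how many times storage was actually requested from malloc.
static int g_allocations = 0;

// A "new" that always takes an argument: nullptr means "call malloc for
// this one", anything else is the address where the instance is stored.
template <typename T>
T* make_at(void* where) {
    if (where == nullptr) {
        where = std::malloc(sizeof(T));
        if (where == nullptr) throw std::bad_alloc{};
        ++g_allocations;
    }
    return new (where) T{};  // placement new: construct in the given storage
}

// Only the topmost instance allocates; the nested one reuses its storage.
int demo_allocations() {
    g_allocations = 0;
    Outer* outer = make_at<Outer>(nullptr);        // triggers the one malloc
    Inner* inner = make_at<Inner>(&outer->inner);  // stored inside outer
    inner->value = 7;
    std::free(outer);  // both types are trivially destructible
    return g_allocations;
}
```

Calling `demo_allocations()` constructs two objects but only touches malloc once, since the nested instance is handed the address of the space the outer one already owns.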

So where do you want it? On the stack, on the heap, you want to reutilize any piece of ass, where buttocks stands for some adequately sized space in memory -- entirely within the realm of possibility. Furthermore, evicting shit you don't need and replacing it with something else.

Let me tell you, I will give your every object an allocator if you give the chance. I will -- nevermind. This is not for your orifices, porridges, oranges, morpheousness.

Walruses.

-

fuck lazarus

fuck pascal

please people you get C, C++, rust, java, javascript EVEN BRAINFUCK is better than this fucking stupid and obsolete language.

The toolchain is just horrible. fpc, fp-compiler, lazarus...

Even in repos 3.0.0 is not the latest one. Like who the fuck cares about improving this language, please think of people who don't give a shit and freeze it already

language is slow

language is horribly verbose

language is CRYPTIC to debug

nobody sane would ever want to learn this language

10 years ago as a student I would spit on lazarus

today I still spit on it

now about lazarus...

The UI is one of the shittiest interfaces we could ever dream of.

Even gimp does it better

you get to download fucking MBs for a condensed pack of windows all over the place

fuck that

-

My reasoning is stupid, I just think it's cute in a pimp my ride kind of way. I heard you like getting colossally pounded in the fucking ass, so we put a virtual machine inside your compiler so you can use your binaries while you compile your binaries.

But there is a practical angle to it, too. It's state, structures and execution within the code itself -- that is, in a sense, generators "embedded" within the source, but without any kind of special syntax.

Rather, the code is all the same, and I'd have the option to make calls at compile time: the output of these calls could, in turn, be part of the resulting binary or processed by further calls.

It'd greenlight the wildest fuckery in the jungle, because *that* is the true and ultimate abstraction: programs that write other programs with minimal human intervention. But is my (still) theoretical, cheap ass two-dollar prototype approach held together with clown jizz and prayers better than the endless cumloads worth of corporate investment that's dumped and pumped into generative AI on a daily basis?

Well... **lights cigarette**

That's what we're about to find out, mother fuckers.

-

The rear ducking continues. We've built a reliable translator in the dumbest fucking way possible, it's just lovely. I simply reused the structure for feeding data to the VM assembler, an array of arrays, where there's one array of (ins [args]) per node in the parse tree.

It's nice because nodes can be solved out of order without affecting the actual sequence in which the instructions are output. And if one statement (node) equals multiple instructions, you just push multiple entries to the corresponding array, or push nothing if you need to output nothing. Easy as goblin pie.
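Here's a minimal C++ sketch of that array-of-arrays layout. The names `Ins`, `NodeOutput`, `flatten` and `demo` are hypothetical, and the `bind`/`pass`/`call` mnemonics are only placeholder instructions; the real translator surely differs.

```cpp
#include <string>
#include <vector>

// One virtual instruction: a mnemonic plus its arguments.
struct Ins {
    std::string op;
    std::vector<std::string> args;
};

// Every parse-tree node owns one array of instructions (possibly empty).
using NodeOutput = std::vector<Ins>;

// Output order follows node order, not the order nodes were solved in.
std::vector<Ins> flatten(const std::vector<NodeOutput>& per_node) {
    std::vector<Ins> out;
    for (const auto& slot : per_node)
        out.insert(out.end(), slot.begin(), slot.end());
    return out;
}

std::vector<Ins> demo() {
    std::vector<NodeOutput> per_node(3);
    // Solve node 2 first...
    per_node[2].push_back({"call", {"F"}});
    // ...then node 0, which expands to two instructions.
    per_node[0].push_back({"bind", {"x", "y"}});
    per_node[0].push_back({"pass", {"arg0", "argN"}});
    // Node 1 outputs nothing, so its array simply stays empty.
    return flatten(per_node);
}
```

Even though node 2 was filled in first, `demo()` yields bind, pass, call, because flattening only ever looks at the node index.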

This is enough to convert an input language to the assembly-like intermediate representation we use for the virtual machine. So then there's doing it backwards: walk the same array of arrays, and map those virtual instructions to a physical architecture. I guess I could do the encoding to native binary myself, it'd certainly be interesting to try, but I'm burnt-out already so I'll just use fasm for now.

Initial test: wrote a test program in my own stupid language, ran the translator, dump output to file, assemble that with fasm, run with r2 -d.

Crashes? No.

Runs fine? Yes and no.

For fuck's sake, I don't have syscalls. Mainly because the VM doesn't have an operating system, lmao. I was testing virtual programs by just freezing state, terminating, then dumping the fucking registers and stack to the console, we have no I/O to speak of. Not even a real 'exit', VM handles that by reading a return value every step like a mentally damaged son of a bitch.

So anyway, I manually paste the linux mambo, you know:

mov rax,60

mov rdi,0

syscall

And NOW our program can end execution without crashing.

Okay then, so does the test code work correctly?

** DRUM ROLL **

Yes.

Ladies and gentlemen, mother fucking PESO is now a compiled language, and going forward I will be expectantly receiving your marriage proposals for reviewing. Oh, but not so fast, we still need a frontend...

Well, we'll handle that in the next few days. I'm just glad to be *nearly* finished with this fucking compiler, I want nothing to do with anything else ever, but we know that's not going to happen, so Lord please end my pain.

No sponsor as this rant has been paid for by tax evasion.

-

Sigh...this is kinda stupid.

I'm getting a new ThinkPad at work after 4 years. At first I was like "oh yeah...a new machine!". But they are replacing my quad core T540p with a dual core T560. The T560's CPU has a 30-40% lower multi-core benchmark score (surprise).

So...dear IT: We are not a small 50ppl company that builds some console apps or small shiny hipster web sites. We are developing fucking large business applications with dozens of projects. Our IDEs and our compiler platform are benefiting from raw CPU power and multiple cores. So can I pls not get A FUCKING DOWNGRADE AFTER >4 YEARS FFS? THANK YOU!

(before anyone asks: keeping the current notebook is not an option because of warranty/support contracts)

-

every time i try to learn kotlin, i can only think WTF is happening, why should it be happening?

after wasting the last 4 hours, i came to this conclusion table regarding kotlin var and val notation.

And now my fucking compiler is saying that i can instead write:

val x:Int

and initialize it later, when i thought val is immutable and must be initialized at the beginning only (like public static final int x = 5)

Who the fuck are those people that like this stupid language? why would you declare some variable as immutable (meaning it can be changed 0 times "ONCE" ASSIGNED A VALUE) when i can create a program with a variable that never got ASSIGNED A VALUE EVEN ONCE??

-

Rubber ducking your ass in a way, I figure things out as I rant and have to explain my reasoning or lack thereof every other sentence.

So lettuce harvest some more: I did not finish the linker as I initially planned, because I found a dumber way to solve the problem. I'm storing programs as bytecode chunks broken up into segment trees, and this is how we get namespaces, as each segment and value is labeled -- you can very well think of it as a file structure.

Each file proper, that is, every path you pass to the compiler, has its own segment tree that results from breaking down the code within. We call this a clan, because it's a family of data, structures and procedures. It's a bit stupid not to call it "class", but that would imply each file can have only one class, which is generally good style but still technically not the case, hence the deliberate use of another word.

Anyway, because every clan is already represented as a tree, we can easily have two or more coexist by just parenting them as-is to a common root, enabling the fetching of symbols from one clan to another. We then perform a canonical walk of the unified tree, push instructions to an assembly queue, and flatten the segmented memory into a single pool onto which we write the assembler's output.

I didn't think this would work, but it does. So how?

The assembly queue uses a highly sophisticated crackhead abstraction of the CVYC clan, or said plainly, clairvoyant code of the "fucked if I thought this would be simple" family. Fundamentally, every element in the queue is -- recursively -- either a fixed value or a function pointer plus arguments. So every instruction takes the form (ins (arg[0],arg[N])) where the instruction and the arguments may themselves be either fixed or indirect fetches that must be solved but in the ~ F U T U R E ~

Thusly, the assembler must be made aware of the fact that it's wearing sunglasses indoors and high on cocaine, so that these pointers -- and the accompanying arguments -- can be solved. However, your hemorrhoids are great, and sitting may be painful for long, hard times to come, because to even try and do this kind of John Connor solving pinky promises that loop on themselves is slowly reducing my sanity.

But minor time travel paradoxes aside, this allows for all existing symbols to be fetched at the time of assembly no matter where exactly in memory they reside; even if the namespace is mutated, and so the symbol duplicated, we can still modify the original symbol at the time of duplication to re-route fetchers to its new location. And so the madness begins.
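A minimal sketch of that "solve it in the future" queue element, under loud assumptions: `Operand`, `resolve`, `fetch_symbol`, `fetch_sum` and `symbol_addr` are hypothetical names made up for illustration, not PESO's actual code. Each operand is either a fixed value or a function pointer plus arguments, and the arguments can themselves be deferred, so nothing is looked up until the assembler finally asks for it.

```c
#include <stddef.h>
#include <stdint.h>

#define NSYMBOLS 4  /* arbitrary table size for the sketch */

/* Hypothetical operand: either a fixed value or a deferred fetch. */
typedef struct Operand Operand;
typedef uint64_t (*FetchFn)(const Operand *args, size_t nargs);

struct Operand {
    int is_fixed;         /* 1: use value; 0: call fn(args, nargs) later */
    uint64_t value;
    FetchFn fn;
    const Operand *args;  /* arguments may themselves be deferred */
    size_t nargs;
};

/* Solve an operand at assembly time. Deferred operands recurse
 * through their fetch function, which calls resolve() on its arguments. */
uint64_t resolve(const Operand *op) {
    if (op->is_fixed)
        return op->value;
    return op->fn(op->args, op->nargs);
}

/* Hypothetical symbol table: addresses may still change before assembly. */
uint64_t symbol_addr[NSYMBOLS];

/* Deferred fetch: args[0] resolves to a symbol index,
 * the result is that symbol's address at the time of the call. */
uint64_t fetch_symbol(const Operand *args, size_t nargs) {
    if (nargs < 1)
        return 0;
    uint64_t idx = resolve(&args[0]);
    return idx < NSYMBOLS ? symbol_addr[idx] : 0;
}

/* Deferred arithmetic: sum of all (possibly deferred) arguments. */
uint64_t fetch_sum(const Operand *args, size_t nargs) {
    uint64_t total = 0;
    for (size_t i = 0; i < nargs; i++)
        total += resolve(&args[i]);
    return total;
}
```

With this shape, an operand built as "address of symbol 2, plus 8" gives a different answer if `symbol_addr[2]` is rewritten between building the queue and resolving it, which is roughly the re-routing behaviour the rant describes.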

Effectively, our code can see the future, and it is not pleased with your test results. But enough about you being a disappointment to an equally misconstructed institution -- we are vermin of science, now stand still while I smack you with this Bible.

But seriously now, what I'm trying to say is that linking is not required as a separate step as a result of all this unintelligible fuckery; all the information required to access a file is the segment tree itself, so linking is appending trees to a new root, and a tree written to disk is essentially a linkable object file.
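To make the "linking is appending trees to a new root" idea concrete, here is a rough sketch. Everything in it is an assumption for illustration: the `Node` layout, the fixed child limit, and the `/`-separated path lookup are invented here and are not taken from the actual compiler.

```c
#include <stddef.h>
#include <string.h>

#define MAX_CHILDREN 8  /* arbitrary limit for the sketch */

/* Hypothetical segment-tree node: a labeled segment or value. */
typedef struct Node {
    const char *name;
    int value;                           /* payload, meaningful for leaves */
    struct Node *children[MAX_CHILDREN];
    size_t nchildren;
} Node;

/* Parent `child` to `parent` as-is; returns 0 on success, -1 if full. */
int node_adopt(Node *parent, Node *child) {
    if (parent->nchildren >= MAX_CHILDREN)
        return -1;
    parent->children[parent->nchildren++] = child;
    return 0;
}

/* Look up a '/'-separated path such as "clan_a/data/x" below `root`.
 * Returns NULL when any path component is missing. */
Node *node_find(Node *root, const char *path) {
    Node *cur = root;
    while (cur && *path) {
        size_t len = strcspn(path, "/");  /* length of this component */
        Node *next = NULL;
        for (size_t i = 0; i < cur->nchildren; i++) {
            const char *n = cur->children[i]->name;
            if (strlen(n) == len && strncmp(n, path, len) == 0) {
                next = cur->children[i];
                break;
            }
        }
        cur = next;
        path += len;
        if (*path == '/')
            path++;
    }
    return cur;
}
```

"Linking" two clans is then just two `node_adopt` calls on a fresh root, after which a symbol in one clan can be found from anywhere by its full path.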

Mission accomplished... ? Perhaps.

This very much closes the chapter on *virtual* programs, that is, anything running on the VM. We're still lacking translation to native code, and that's an entirely different topic. Luckily, the language is pretty fucking close to assembler, so the translation may actually not be all that complicated.

But that is a story for another day, kids.

And now, a word from our sponsor:

<ad> Whoa, hold on there, crystal ball. It's clear to any tzaddiq that only prophets can prophesy, but if you are but a lowly goblinoid emperor of rectal pleasure, the simple truths can become very hard to grasp. How can one manage non-intertwining affairs in their professional and private lives while ALSO compulsively juggling nuts?

Enter: Testament, the gapp that will take your gonad-swallowing virtue to the next level. Ever felt like sucking on a hairy ballsack during office hours? We got you covered. With our state of the art cognitive implants, tracking devices and macumbeiras, you will be able to RIP your way into ultimate scrotolingual pleasure in no time!

Utilizing a highly elaborated process that combines illegal substances with the most forbidden schools of blood magic, we are able to [EXTREMELY CENSORED HERETICAL CONTENT] inside of your MATER with pinpoint accuracy! You shall be reformed in a parallel plane of existence, void of all that was your very being, just to suck on nads!

Just insert the ritual blade into your own testicles and let the spectral dance begin. Try Testament TODAY and use my promo code FIRSTBORNSFIRSTNUT for 20% OFF in your purchase of eternal damnation. Big ups to Testament for sponsoring DEEZ rant. -

Fuck you Linux! I thought user password validation would be a piece of cake, like a bash one-liner. How wrong could I be!

Yeah, it's already ugly to grep hash and salt from /etc/shadow, but I could accept that. But then give me a friggin' tool to generate the hash. And of course the distro I chose has the wrong makepswd, OpenSSL is too old to have the new SHA-512 built in, as it should be a minimal installation I don't want to use perl or python...

And the stupid crypto function that would do the job for me is even included in glibc. So it's only one line of C code to give me all I want, but there is no package that would provide me this dull binary? Instead I will have to compile it myself and then again remove the compiler to keep the image small? -

What we will miss, if he really softens:

In fact, if the reason is stated as "it makes debugging easier", then I fart in your general direction and call your mother a hamster.

In short: just say NO TO DRUGS, and maybe you won't end up like the Hurd people.

Of course, I'd also suggest that whoever was the genius who thought it was a good idea to read things ONE F*CKING BYTE AT A TIME with system calls for each byte should be retroactively aborted. Who the f*ck does idiotic things like that? How did they not die as babies, considering that they were likely too stupid to find a tit to suck on?

Gnome seems to be developed by interface nazis, where consistently the excuse for not doing something is not "it's too complicated to do", but "it would confuse users".

I think the stupidity of your post just snuffed out everything

I think the OpenBSD crowd is a bunch of masturbating monkeys, in that they make such a big deal about concentrating on security to the point where they pretty much admit that nothing else matters to them.

That is either genius, or a seriously diseased mind. - I can't quite tell which.

Christ, people. Learn C, instead of just stringing random characters together until it compiles (with warnings).

"and anybody who thinks that the above is

(a) legible

(b) efficient (even with the magical compiler support)

(c) particularly safe

is just incompetent and out to lunch.

The above code is sh*t, and it generates shit code. It looks bad, and there's no reason for it." -

rant.author != this

Christ people. This is just sh*t.

The conflict I get is due to stupid new gcc header file crap. But what makes me upset is that the crap is for completely bogus reasons.

This is the old code in net/ipv6/ip6_output.c:

mtu -= hlen + sizeof(struct frag_hdr);

and this is the new "improved" code that uses fancy stuff that wants magical built-in compiler support and has silly wrapper functions for when it doesn't exist:

if (overflow_usub(mtu, hlen + sizeof(struct frag_hdr), &mtu) ||
    mtu <= 7)
        goto fail_toobig;

and anybody who thinks that the above is

(a) legible

(b) efficient (even with the magical compiler support)

(c) particularly safe

is just incompetent and out to lunch.

The above code is sh*t, and it generates shit code. It looks bad, and there's no reason for it.

The code could *easily* have been done with just a single and understandable conditional, and the compiler would actually have generated better code, and the code would look better and more understandable. Why is this not

if (mtu < hlen + sizeof(struct frag_hdr) + 8)
        goto fail_toobig;
mtu -= hlen + sizeof(struct frag_hdr);

which is the same number of lines, doesn't use crazy helper functions that nobody knows what they do, and is much more obvious what it actually does.

I guarantee that the second more obvious version is easier to read and understand. Does anybody really want to dispute this?

Really. Give me *one* reason why it was written in that idiotic way with two different conditionals, and a shiny new nonstandard function that wants particular compiler support to generate even half-way sane code, and even then generates worse code? A shiny function that we have never ever needed anywhere else, and that is just compiler-masturbation.

And yes, you still could have overflow issues if the whole "hlen + xyz" expression overflows, but quite frankly, the "overflow_usub()" code had that too. So if you worry about that, then you damn well didn't do the right thing to begin with.

So I really see no reason for this kind of complete idiotic crap.

Tell me why. Because I'm not pulling this kind of completely insane stuff that generates conflicts at rc7 time, and that seems to have absolutely no reason for being an idiotic unreadable mess.

The code seems *designed* to use that new "overflow_usub()" code. It seems to be an excuse to use that function.

And it's a f*cking bad excuse for that braindamage.

I'm sorry, but we don't add idiotic new interfaces like this for idiotic new code like that.

Yes, yes, if this had stayed inside the network layer I would never have noticed. But since I *did* notice, I really don't want to pull this. In fact, I want to make it clear to *everybody* that code like this is completely unacceptable. Anybody who thinks that code like this is "safe" and "secure" because it uses fancy overflow detection functions is so far out to lunch that it's not even funny. All this kind of crap does is to make the code an unreadable mess with code that no sane person will ever really understand what it actually does.

Get rid of it. And I don't *ever* want to see that shit again. -
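For anyone who wants to poke at the two versions quoted in the email above, here is a small standalone sketch that puts them side by side. It makes several assumptions: `struct frag_hdr` is a local 8-byte stand-in rather than the kernel's definition, the function names `shrink_mtu_builtin` and `shrink_mtu_plain` are invented, and GCC/Clang's `__builtin_sub_overflow` is used in place of the kernel's `overflow_usub()` wrapper.

```c
#include <stddef.h>

/* Stand-in for the kernel's struct frag_hdr; assumed to be 8 bytes here. */
struct frag_hdr {
    unsigned char  nexthdr;
    unsigned char  reserved;
    unsigned short frag_off;
    unsigned int   identification;
};

/* Builtin-based version: returns -1 for "too big", 0 on success.
 * On success *mtu is reduced by hlen plus the fragment header size. */
int shrink_mtu_builtin(unsigned int *mtu, unsigned int hlen) {
    unsigned int out;
    unsigned int need = hlen + (unsigned int)sizeof(struct frag_hdr);
    if (__builtin_sub_overflow(*mtu, need, &out) || out <= 7)
        return -1;
    *mtu = out;
    return 0;
}

/* Single-comparison version: same contract as above. */
int shrink_mtu_plain(unsigned int *mtu, unsigned int hlen) {
    if (*mtu < hlen + sizeof(struct frag_hdr) + 8)
        return -1;
    *mtu -= hlen + sizeof(struct frag_hdr);
    return 0;
}
```

For ordinary inputs both functions reject exactly the cases where fewer than 8 bytes would be left after the subtraction. As the email itself notes, neither form protects against the `hlen + ...` sum wrapping around on its own.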

People, help me out.

(first some abstract thoughts)

I am a final year undergrad yet to take steps in the world and i am trying to figure out what to do with my time, what my end goal and next steps should be.

As of now I think my end goal is "relaxation , peace and happiness of me and my loved ones", and to reach there , i need money.

My younger self chose engineering for a particular reason (that i vaguely remember) and whether it was a right or wrong/illogical decision, i guess i am stuck with it and have to use this only to reach my end goal.

Maybe i am regretting this and want to change. Maybe i am just a lazy ass who is bad in his assigned role of an engineer and is running towards glitter in other fields, whatever it is , i am not going against the decision of my past and accepting my identity as an engineer.

I believe once i am able to achieve my goal( that am still not sure about but overall is a good one from general perspective), i guess i will be satisfied

------------------------------------------------

(enough with the deep stuff)

I want to learn how to "learn" . like i am always conflicted about what to do next once the tutor leaves my hand.

for eg, let's say i go to a site abc.

1. They got 1 course each for android, web dev and ai. I choose the web dev course and give my hardworking attention to it

( At this point my choice is usually based on the fact that <A> i should not be stupid enough to buy all 3 courses even if i have the money/desire to buy all of em because riding 2 horses is only going to break my ass and <B> some pseudo stats like whichever got more opportunity, which i "like", etc (Point B is usually useless in the long run i guess) )

2. From what i have experienced, these courses usually have a particular list of topics that they cover and apply them to 1 or 2 projects. For eg, say that my web dev course taught me 20 something concepts of basic html/css/js/server and the instructor applied them to a blog website

BUT WHAT IS NEXT ?

2.1.

>> Should I make more projects using only those particular list of concepts?

I usually have a ton of ideas that i want to implement now that i know how to build a blog site.

say i got a similar idea to make, say, a URL shortener. I start with full enthusiasm but midway there is some new thing that i don't know and when i search the internet, i realize that there are 5 ways to implement such a concept, making me wander off towards a whole list of concepts that were not covered in my original 20 concept course. This leads to choice 2.2

2.2

>> Should I just leave everything , go to docs and start learning concepts from the scratch ??

Usually when i start a project, i soon realize that the original 20 concepts were just the tip of iceberg and there are a ton of things one should know, like how os works, how a particular component interacts with another, how the language is working, how the compiler is executing, etc .

At that point i feel like tearing all my notes away, and learning every associated thing from scratch. No matter how much my project suffers, i want to know how things are working from the bottom, like how the requests are being made, how the routes are working, etc which might not even be relevant for the project.

Why i want to follow approach 2? because of the Goal from abstract thoughts. in theory, having deep knowledge is going to clear my interview thereby getting me a good job.

I will get good money, make projects faster and that will be a happily ever after story.

But in practice this approach is bringing me losses and confusion. every layer of a particular thing i uncover, turns out there is another layer below that. The learning never stops. Plus my original project remains incomplete.

What is your opinion, how do you figure out what to do next? -

Just seen F# and really like it. Sadly its compiler throws all kinds of errors on Linux with Mono because for some stupid reason it uses the Mono 2.0 DLLs... lots of debugging tomorrow whyyy it said that it ran on linux all will be functions hail functional programming