Search - "server side pages"

-

Oh, man, I just realized I haven't ranted one of my best stories on here!

So, here goes!

A few years back the company I work for was contacted by an older client regarding a new project.

The guy was now pitching to build the website for the Parliament of another country (not gonna name it, NDAs and stuff), and was planning on outsourcing the development, as he had no team and was only aiming to take care of the client service/project management side of the project.

Out of principle (and also to preserve our mental integrity), we have purposely avoided working with government bodies of any kind, in any country, but he was a friend of our CEO and pleaded until we signed on board.

Now, the project itself was way bigger than we expected, as they wanted more of an internal CRM, centralized document archive, event management, internal planning, multi-interface, role-based, access-restricted monster of an administration interface, complete with a regular user website, also packed with all kinds of features, dashboards and so on.

Long story short, a lot bigger than what we were expecting based on the initial brief.

The development period was hell. New features were coming in on a weekly basis. Already implemented functionality was constantly being changed or redefined. No requests we ever made about clarifications and/or materials or information were ever answered on time.

They also somehow bullied the guy that brought us the project into including the data migration from the old website into the new one we were building, and we somehow ended up having to extract meaningful, formatted, sanitized content by parsing static HTML files and connecting them to downloadable files (almost every page in the old website had files available to download) that we also needed to include in a sane way.

Now, don't think the files were simple URL paths we could trace to a folder/file path, oh no!!! The links were some form of hash combination that had to be exploded and tested against some kind of database relationship tables that only had hashed indexes relating to other tables, that also only had hashed indexes relating to some other tables that kept a database of the website pages' HTML file naming. So what we had to do was identify the files based on a combination of hashed indexes and re-hashed HTML file names that in the end would give us a filename for a real file, which we then had to search for inside a list of over 20 folders not related to one another.

So we did this. Created a script that processed the hell out of over 10000 HTML files, database entries and files and re-indexed and re-named all this shit into a meaningful database of sane data and well organized files.

So, with this we were nearing the finish line for the project, which by now exceeded the estimated time by over two times.

We test everything, retest it all again for good measure, pack everything up for deployment, simulate on a staging environment, give the final client access to the staging version, get them to accept that all requirements are met, finish writing the documentation for the codebase, write detailed deployment procedure, include some automation and testing tools also for good measure, recommend production setup, hardware specs, software versions, server side optimization like caching, load balancing and all that we could think would ever be useful, all with more documentation and instructions.

As the project was built on PHP/MySQL (as requested), we recommended a Linux environment for production. Oh, I forgot to tell you that over the development period they kept asking us to also include steps for Windows procedures along with our regular documentation. Was a bit strange, but we added it in there just so we can finish and close the damn project.

So, we send them all the above and go get drunk as fuck in celebration of getting rid of them once and for all...

Next day: hung over, I get to the office, open my laptop and see one new email. I only had the one new mail, so I open it to see what it's about.

Lo and behold! The fuckers over in the other country that called themselves "IT guys", and were the ones making all the changes and additions to our requirements, were not capable enough to follow step by step instructions in order to deploy the project on their servers!!!

[Continues in the comments]

-

https://git.kernel.org/…/ke…/... sure some of you are working on the patches already; if you are, then let's connect, because I am an ardent researcher of the same as of now.

So here it goes:

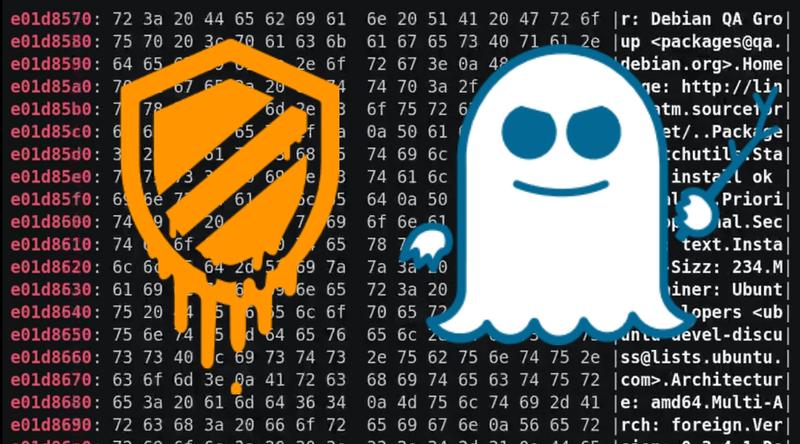

As soon as the kernel page table isolation (KPTI) bug is out of embargo, WhatsApp and FB will be flooded with overnight kernel "shikhuritee" experts who will share shitty advice non-stop.

1. The bug under embargo is a side channel attack, which exploits the fact that Intel chips come with speculative execution without proper isolation between user pages and kernel pages. Therefore, with careful scheduling, a timing attack can reveal some information from kernel pages while the code is running in user mode.

In easy terms, if you have a VPS, another person with a VPS on the same physical server may read memory being used by your VPS, which will result in unwanted data leakage. To make matters worse, malicious JS from an innocent-looking webpage might be (might be, because JS does not provide language constructs for such fine-grained control; at least none that I know of as of now) able to read kernel pages, and pwn you real hard, real bad.

2. The bug comes from too much reliance on Tomasulo's algorithm for out-of-order instruction scheduling. It is not yet clear whether the bug can be fixed with a microcode update (and if not, Intel has to fix this in silicon itself). As far as I can dig, there is nothing that hints that this bug is fixable in microcode, which makes the matter much worse. Also according to my understanding a microcode update will be too trivial to fix this kind of a hardware bug.

3. A software-only remedy is possible, and that is being implemented by all major OSs (including our lovely Linux) in kernel space. The patch forces the Translation Lookaside Buffer to flush if a context switch happens during a syscall (this is what I understand as of now). The benchmarks suggest that the slowdown will be somewhere between 5% (best case) and 30% (worst case).

4. Regarding point 3, which syscalls you use doesn't matter much. The only thing that matters is how many times syscalls are called. For example, if you are using read() or write() on 8MB buffers, you won't have too much slowdown; but if you are calling the same syscalls once per byte, a heavy performance penalty is guaranteed. All processes which are I/O heavy are going to suffer (hosting and databases are two common examples).

5. The patch can be disabled in Linux by passing an argument to the kernel during boot; however, it is not advised, for pretty obvious reasons.

6. For gamers: this is not going to affect games (because those are not I/O heavy)
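Point 4 above can be made concrete by counting how many read() calls it takes to get through the same file with different buffer sizes. This is only a rough sketch in Python: the helper names `make_sample_file` and `count_read_calls` are made up for illustration, and it counts calls rather than measuring the actual slowdown from the patch.

```python
import os
import tempfile


def make_sample_file(size: int) -> str:
    """Create a temporary file holding `size` bytes and return its path."""
    fd, path = tempfile.mkstemp()
    with os.fdopen(fd, "wb") as handle:
        handle.write(b"x" * size)
    return path


def count_read_calls(path: str, chunk_size: int) -> int:
    """Read the whole file via os.read and return how many calls were issued.

    Each os.read call maps to one read() syscall, so the return value is a
    stand-in for the number of user/kernel transitions the loop causes.
    """
    calls = 0
    fd = os.open(path, os.O_RDONLY)
    try:
        while True:
            data = os.read(fd, chunk_size)
            calls += 1
            if not data:  # an empty result signals end of file
                break
    finally:
        os.close(fd)
    return calls


if __name__ == "__main__":
    sample = make_sample_file(64 * 1024)
    try:
        for chunk in (1, 4096, 64 * 1024):
            print(chunk, count_read_calls(sample, chunk))
    finally:
        os.remove(sample)
```

The byte-at-a-time loop issues tens of thousands of calls for the same data that a large buffer reads in a handful, which is why the per-syscall overhead hits chatty I/O code the hardest.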

Meltdown: targets desktop chips and can read kernel memory from the L1D cache. Only Intel is affected by this variant.

Spectre: a hardware vulnerability in implementations of branch prediction that affects modern microprocessors with speculative execution, allowing malicious processes access to the contents of other programs' mapped memory. Works on all chips including Intel/ARM/AMD.

For updates refer to the kernel tree: https://git.kernel.org/…/ke…/...

For further details and more chit-chat refer to: https://lwn.net/SubscriberLink/...

~Cheers~

(Originally written by Adhokshaj Mishra, edited by me.)

-

Dear fucking boss,

If you want me to implement a huge feature which requires the creation of dozens of db tables, server side classes and front end pages, just fucking stop asking me every 2 hours if I’m done.

Best regards,

The employee that will quit in a week or two

-

Somebody asked how to get started on Full Stack web application development.

This is how I got started.

Client side Web Application Development:

---------------------------------------------------------------

• Start with basic HTML, CSS, JS and JSON. For quick learning, see W3Schools for these topics or YouTube it.

• Get a local web server. "200 OK!" webserver chrome extension is a good start. (https://chrome.google.com/webstore/...)

• Learn Chrome Dev Tools to debug the pages. YouTube it.

• Get a good IDE. I am very happy with VSCode. You can use it for very serious WebApps.

• Start learning the JavaScript language in depth, but only the web browser related topics, or you will get sucked into the server side too early.

• Install node.js. Learn NPM package manager. Learn basic node commands.

• Learn the complexity of JS file referencing and JS modules in the browser. Just learn it, don't use it yet; the point is to understand the benefits of code bundlers.

• Learn Webpack code bundler.

• Learn how to make your simple site much faster and usable on mobile using "Progressive Web Apps".

• Now learn to make modular UIs. I love React. Focus on getting the UI code modular. Create single-page sites. (You are not there yet to create a Web App.) The “Create-React-App” starter kit is a good starting point.

• Learn to create a multi-page site using React-router.

• Learn application state management using Redux.

• Learn to create an application decision engine using Redux-Saga.

Practice and master each stage.

Along with the above, learn git / GitHub (to learn from others' code), find good web resources like Medium / Smashing Magazine, good YouTube channels etc. I subscribed to some popular Udemy courses too.

Server side Web development:

------------------------------------------

:) First learn client side Web Application development. Server side learning is another story.

-

Well, the impossible needs to become possible again.

"you will shit out a full website for this customer in two days! Fully responsive, 16 pages, and it better be good!"

Yeah. Ok. Fuck you. My attitude stinks, but your expectations and temperament kind of forge my attitude. Now tell me how in fucks name i am supposed to just stop administering over 3000 users and god only knows the ever growing amount of servers, stop all my server side development, so that I can make a site for a customer paying the company the equivalent of $100 for it (because sales people here are retarded) and get zero fucking commission or even a thank you for it.

Nah. Fuck this.

Tired of complaining, and I'm sure you guys are just as tired of it.

-

Ok, so I have done some work with cryptocurrency mining pools, and recently a client asked me to make a splash page that showed data from multiple instances of these pools' APIs. I went to find some documentation for this open source API and to my surprise there is none. I thought of querying the public API from the client side and it worked; however, it's so slow that the data shows up roughly 20 seconds after the page loads.

Easy fix, right? Make a PHP server get the data every 5 seconds, cache it, serve the data with the page and use a websocket for live updates! Until I found out that there is no practical way in this garbage framework to get the damn API data without making an HTTP request or mutilating the original source code. I'm so done with this garbage framework. It literally loads pages based on a page and action parameter on the index.php. I quit.

-
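For what it's worth, the "fetch every 5 seconds and cache it" idea from the rant above is small in isolation. Here is a minimal sketch in Python rather than PHP; `TTLCache` and the `fetch` callable are hypothetical stand-ins for whatever actually pulls the pool data, and nothing here reflects the framework the rant is about.

```python
import time
from typing import Any, Callable, Optional


class TTLCache:
    """Cache the result of a slow fetch and refresh it after `ttl_seconds`."""

    def __init__(
        self,
        fetch: Callable[[], Any],
        ttl_seconds: float = 5.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch          # hypothetical slow call, e.g. a pool API request
        self._ttl = ttl_seconds
        self._clock = clock          # injectable so the cache can be tested without sleeping
        self._value: Any = None
        self._expires_at: Optional[float] = None

    def get(self) -> Any:
        """Return the cached value, calling fetch only when the entry has expired."""
        now = self._clock()
        if self._expires_at is None or now >= self._expires_at:
            self._value = self._fetch()
            self._expires_at = now + self._ttl
        return self._value


if __name__ == "__main__":
    def slow_pool_stats() -> dict:
        time.sleep(0.2)  # pretend this is the slow upstream API
        return {"fetched_at": time.time()}

    cache = TTLCache(slow_pool_stats, ttl_seconds=5.0)
    print(cache.get())  # slow: goes to the "API"
    print(cache.get())  # fast: served from the cache
```

Page requests then only ever pay for the slow call once per TTL window, instead of making every visitor wait on the upstream API.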

TL;DR: do your best all you like, strive to be the #1 if you want to, but do not expect to be appreciated for walking an extra mile of excellence. You can get burned for that.

They say verbalising it makes it less painful. So I guess I'll try to do just that. Because it still hurts, even though it happened many years ago.

I was about to finish college. As usual, the last year we have to prepare a project and demonstrate it at the end of the year. I worked. I worked hard. Many sleepless nights, many nerves burned. I was making an android app - StudentBuddy. It was supposed to alleviate students' organizational problems: finding the right building (city plans, maps, bus schedules and options/suggestions), the right auditorium (I used pictures of building evac plans with classes indexed on them; drawing the red line as the path to go to find the right room), having the schedule in-app, notifications, push-notifications (e.g. teacher posts "will be 15 minutes late" or "15:30 moved to aud. 326"), homework, etc. Looots of info, loooots of features. Definitely lots of time spent and heaps of new info learned along the way.

The architecture was simple. It was a server-side REST webapp and an Android app as a client. Plenty of entities, as the system had to cover a broad spectrum of features. Consequently, I had to spin up a large number of webmethods, implement them, write clients for them and keep them in-sync. Eventually, I decided to build an annotation processor that generates webmethods and clients automatically - I just had to write a template and define what I want generated. That worked PERFECTLY.

In the end, I spun up and implemented hundreds of webmethods. Most of them were used in the Android app (client) - to access and upsert entities, transition states, etc. Some of them I left as TBD for the future - for when the app gets the ADMIN module created. I still used those webmethods to populate the DB.

The day came when I had to demonstrate my creation. As always, there was a commission: some high-level folks from the college, some guests from businesses.

My turn to speak. Everything went great, as rehearsed. I present the problem, demonstrate the app, demonstrate the notifications, plans, etc. Then I describe at a high level what the implementation is like and the future development plans. They ask me questions - I answer them all.

I was sure I was going to get a 10 - the highest score. This was by far the most advanced project of all presented that day!

Other people do their demos. I wait to the end patiently to hear the results. Commission leaves the room. 10 minutes later someone comes in and calls my name. She walks me to the room where the judgement is made. Uh-oh, what could've possibly gone wrong...?

The leader is reading through my project's docs and I don't like the look on his face. He opens the last 7 pages where all the webmethods are listed, points them to me and asks:

LEAD: What is this??? Are all of these implemented? Are they all being used in the app?

ME: Yes, I have implemented all of them. Most of them are used in the app, others are there for future development - for when the ADMIN module is created

LEAD: But why are there so many of them? You can't possibly need them all!

ME: The scope of the application is huge. There are lots of entities, and more than half of the methods are but extended CRUD calls

LEAD: But there are so many of them! And you say you are not using them in your app

ME: Yes, I was using them manually to perform admin tasks, like creating all the entities with all the relations in order to populate the DB (FTR: it was perfectly OK to not have the app completed 100%. We were encouraged to build an MVP and have plans for future development)

LEAD: <shakes his head in disapproval>

LEAD: Okay, That will be all. you can return to the auditorium

In the end, I was not given the highest score, while some other, less advanced projects, were. I was so upset and confused I could not force myself to ask WHY.

I still carry this sore with me and it still hurts to remember. Also, I have learned a painful life lesson: do your best all you like, strive to be the #1 if you want to, but do not expect to be appreciated for walking an extra mile of excellence. You can get burned for that.

-

Why does no one implement an autoupdater, especially on the Linux side? Is there a reason I don't get? Sure, most system stuff is better in apt, but if I install servers, I do not want to wait for these stupid Linux release timings! If it were hard, I'd understand. But most of this is possible with something like the GitHub API and 20 minutes of time. I mean, yeah, backwards compatibility and whatnot, but then handle that internally.

Example: I use dnsmasq on a Raspberry Pi. The RPi is running Raspbian. Raspbian is Debian 8. Debian 8 has a version of dnsmasq with a pretty annoying bug, which prevents me from using DNSSEC, as I can't open any Cloudflare pages. Why, oh why isn't this updated at MY will? Then, if it isn't, why is it so impossibly hard to compile this myself: no docs for that, no binaries, NOTHING? Dear server devs, please add at least basic autoupdate functionality without having to rely on the base OS.

Or give me easily deployable binaries, if you can't write something integrated.

-
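The "GitHub API and 20 minutes" claim in the rant above is roughly right for the version check part, at least for projects that publish releases on GitHub. A minimal sketch in Python: the `releases/latest` endpoint and its `tag_name` field are real, but the function names are made up for illustration, the version parsing is deliberately naive, and `some-owner/some-repo` is a placeholder, not a real project. Downloading, verifying and swapping the binary is the hard part and is left out.

```python
import json
import re
import urllib.request
from typing import Tuple


def parse_version(tag: str) -> Tuple[int, ...]:
    """Turn a tag like 'v2.76' into (2, 76). Naive: keeps only the digit runs."""
    return tuple(int(part) for part in re.findall(r"\d+", tag))


def is_newer(installed: str, latest_tag: str) -> bool:
    """Return True when the latest release tag is ahead of the installed version."""
    return parse_version(latest_tag) > parse_version(installed)


def fetch_latest_tag(owner: str, repo: str) -> str:
    """Ask the GitHub REST API for the tag name of the latest published release."""
    url = f"https://api.github.com/repos/{owner}/{repo}/releases/latest"
    request = urllib.request.Request(
        url, headers={"Accept": "application/vnd.github+json"}
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)["tag_name"]


if __name__ == "__main__":
    installed_version = "2.72"  # illustrative value, not read from the system
    latest = fetch_latest_tag("some-owner", "some-repo")  # placeholder repository
    if is_newer(installed_version, latest):
        print(f"update available: {installed_version} -> {latest}")
    else:
        print("already up to date")
```

Tags with suffixes such as release candidates would need a real version parser, which is part of why distro packagers don't just do this.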

Alright, so I've been thinking of taking my skills to the next level and would like to know a few things from the pro C++ devs out there:

1. Is it possible to set up a production-level web server with C++? If so, why don't I see many, and why are there so many with Node.js etc.?

2. Client-side web pages without JavaScript: possible?

3. Well I forgot the other questions I wanted to ask, if I do remember you'd be able to find them in the comments

I believe in a single universal language for coding, hence I put forth such questions.

-

Wow, I thought Australia's subjects were up to date with modern technology, but as my year 11 IPT course has proven... No.

Genuine Questions from it:

• Where are Web pages stored?

Most web pages are dynamically generated, so... RAM?

•Locate one webpage that uses ASP. Save a copy of this webpage (file name must = asp.mht)

Chrome doesn't even support that as a saveable file format any more!!!!

•Visit the webpage [error 404 anyway why write it]

Wow I can click hyperlinks I thought it was just a fancy color added to the text :|

•Add this webpage to your favorites. Supply one (1) screenshot showing this webpage as one of your favorites.

I ask; Who hasn't bookmarked a webpage in their life at the age of 17, and who actually calls them favorites.

•Press the "Back" Button to view the page you were previously on, take a screen shot to prove you doing so.

I am a rebel, I used my magic fingers to press the button without a mouse (keyboard shortcut)

•Press the "Forward" Button to view the page you were on before you went backwards, take a screen shot to prove you doing so.

I never would have guessed :|

•Take a screen shot after opening multiple tabs in Internet Explorer

...

•View the HTML source of the webpage www.google.com, and save a screen shot

Why not the actual file, really? bloat much?

•Take one screen shot of your Internet Explorer Search History

Stalky much?

•What is a Web browser and what tasks does it perform?

Well.... Do you have a page for in-depth analysis? Or do you literally want me to say "It lets you load stuff from dat interwebz, via requesting content from a server"

•Define what JavaScript is in relation to web pages

Are we talking server side? or client?

•Define what CSS is in relation to web pages

Do I even need to say, fellow ranters ;)

-

Why the fuck nobody talks about Multi-page apps?! We went from a Web where everything was Multi-page server-rendered, and now everything for Web developers is "Single-page apps".

What about websites who can't do that? Not everything can be a single-page app. Only my uncle's restaurant website, or something which is TRULY a full app. No half choices.

If your website is a multi-page app/portal which actually PRELOADS data, instead of doing 100 fetch to an API within a page that is full of loading bars, well, your life is a pain.

When you want a first contentful paint which isn't a white page, well, your life is a pain.

What are React, Vue, Ember, Angular (let's exclude Svelte and Marko) going to do about Multi-page apps and SSR?

React-router sucks to me. Its performance is weak and it's useful only when you have an SPA with multiple sections which can be treated as pages (e.g. a single SPA divided in tabs).

Server-side rendering is the worst pain ever made by humanity, in React (and prob Vue, I didn't try but I can bet). And even when made easier by libs like Svelte and Marko, I (personally) can't get it to be fast enough compared to a traditional website without a JS framework and with a templating engine.

Anyways, if there's anything that I learnt from React, it is to stay away from Next.js. Perfect, beautiful, mess.

All JS frameworks just seem to bloat the code and make it worse and slower, even though they're REALLY helpful.

Why? Why does everyone love them if their downsides are so clear? Why are 3 projects out of 3 I made (1 React SSR, 1 Vue, 1 Marko SSR) painfully slow and bloated, full of shit, and why will they stay that way, even if in 2020 we should have evolved with the famous tree shaking, with the famous lazy loading, etc.?

I am just frustrated.

And let's not even talk about Webpack, Rollup, Lasso, those module bundlers shit which are harder to configure and understand than finding a needle in a haystack.

Lasso was the easiest to configure but I anyways can't understand it. Webpack seems it was made to handle SPAs, as any tool in this freaking world, and not even considering an easy way to integrate multiple bundles for multiple pages (I know it's pretty easy, but with component sharing between pages and big unique bundles Next.js handles it soooo bad it feels like hell).

Am I the only one?

Sorry for the long rant. I just needed to rant right now.

-

My work product: Or why I learned to get twitchy around Java...

I maintain a Java based test system, that tests a raster image processor. The client is a Java swing project that contains CORBA bindings to the internal API of the raster image processor. It also has custom written UI elements and duplicated functionality that became available in later versions of Java, but because some of the third party tools we use don't work with later versions of Java for some reason, it's not possible to upgrade Java to gain things as simple as recursive directory deletion, yes the version of Java we have to use does not support something as simple as that and custom code had to be written to support it.

Because of the requirement to build the API bindings along with the client the whole application must be built with the raster image processor build chain, which is a heavily customised jam build system. So an ant task calls out to execute a jam task and jam does about 90% of the heavy lifting.

In addition to the Java code there's code for interpreting PostScript files, as these can be used to alter the behaviour of the raster image processor during testing.

As if that weren't enough, there's a beanshell interface to allow users to script the test system, but none of the users know Java well enough to feel confident writing interpreted Java scripts (and that's too close to JavaScript for my comfort). I once tried swapping this out for the Rhino JavaScript interpreter and got all the verbal support in the world but no developer time to design an API that'd work for all the departments.

The server isn't much better though. It's a Tomcat based application that was written by someone who had never built a Tomcat application before, or any web application for that matter. It uses raw SQL strings instead of an ORM, it doesn't use MVC in any way, and an insane amount of functionality is dumped into the JSP files.

It too interacts with a raster image processor to create difference masks of the output, running PostScript as needed. It spawns off multiple threads and can spend days processing hundreds of gigabytes of image output (depending on the size of the tests).

We're stuck on Tomcat 7 because we can't upgrade beyond Java 6, which brings all manner of security issues, but that eager little Java updater will break the tool chain if it gets its way.

Between these two components we have the Java RMI server (sometimes) working to help generate image data on the client side before all images are pulled across a UNC network path onto the server that processes test jobs (in PDF format), by reading into the xref table of said PDF, finding the embedded image data (for our server consumed test files are just flate encoded TIFF files wrapped around just enough PDF to make them valid) and uses a tool to create a difference mask of two images.

This tool is very error prone, it can't difference images of different sizes, colour spaces, orientations or pixel depths, but it's the best we have.

The tool is installed on both the client and the server. If the client can generate images, it'll query the server for which ones it needs to; if it can't, the server will use the tool itself.

Our shells have custom profiles for linking to all manner of third party tools and libraries, including a link to Visual Studio 2005 (more indirectly related build dependencies). The whole profile has to ensure that absolutely no operating system pollution gets into the shell; most of our apps are installed in our home directories and we have to ensure our paths are correct for every single application we add.

And... Fucking and!

Most of the tools are stored as source bundles in a version control system... Not git or Mercurial, not Perforce or SVN, not even CVS... They use a custom built version control system that is built on top of RCS. It keeps a central database of locked files (using soft and hard locks along with write protecting the files in the file system) to ensure users can't get merge conflicts by preventing other users from writing to the files at all.

Branching is heavy weight and can take the best part of a day to create a new branch and populate the history.

Gathering the tools alone to build the Dev environment to build my project takes the best part of a week.

What should be a joy come hardware refresh year becomes a curse ("Well fuck, now I lose a week setting up the Dev environment on ANOTHER machine").

Needless to say, I enjoy NOT working with Java. A lot of this isn't Java's fault, but there are a lot of things that Java (specifically the Java 6 version we're stuck on) does not make easy.

This is why I prefer to build my web apps in Python or Node, hell, I'd even take Lua... Just... Compiling web pages into executable Java classes, why? I mean I understand the implementation of how this happens, but why did my predecessor have to choose this? Why?

-

HTML Writers Guidelines

When designing your web site you want to make the visiting experience as enjoyable as possible and at the same time make it so that if the site needs to be changed in any way, the changes are not too difficult to make. You want the look to be as appealing as possible for all browsers and also make the site accessible to users with disabilities. In order to accomplish all this there are some general guidelines when creating your HTML code.

1. The first thing that will really make your life easier is through the use of Cascading Style Sheets (CSS) - CSS is used to maintain the look of the document such as the fonts, margins and color. HTML directly on the page is not a good choice to handle these aspects because if say, the font color you are using for certain paragraphs needs to be changed from blue to red, you would have to go in and change each color tag manually. By using CSS you can designate the color for each of those paragraphs just once in the CSS file. That way if you have to change the font color from blue to red you make one change instead of the countless number of changes you might have to make, especially if your web site contains hundreds of pages. This is a big time saver and a must for all professionally designed web sites.

2. Don't use the FONT tag directly in your HTML code - This becomes a problem when using some cheap authoring tools that try to mimic what a web page should look like by using excessive FONT tags and nbsp characters. These tools end up creating web pages that are impossible to maintain. If you've created one of these disaster pages, there is a program called HTML Tidy that you can download and use. It will clean up your code as well as possible.

3. You want your web pages readable to people who have disabilities - Some people who surf the Internet depend on speech synthesizers or Braille readers to interpret the text on the page. If your HTML markup is sloppy, or the styling isn't kept in CSS, the software these people use to read pages has a difficult time interpreting them. You should also include descriptions for each image on your page. Also, don't use server side image maps. If you are using tables you should include a summary of the table's structure and also associate table data with the correct headers. This gives non-visual browsers a chance to follow the page as they go from one cell to another. And finally, for forms, make sure you include labels for form fields.

By following just these three guidelines you give your visitors, especially disabled visitors, the best chance of having an enjoyable visit to your site, while at the same time making sure that if you have to make changes to your site, those changes can be made easily and quickly.

-

So I was trying to learn PHP from a Udemy course. The guy there mixes a hell of a lot of PHP with HTML, like all the pages are .php with HTML content and mini <?php ... ?> scripts in between everywhere: titles, SQL queries running and displaying outputs as HTML with echoed PHP variables, etc.

Now, I am not much versed in the client-server data model, but isn't there supposed to be a clear distinction between the server side and the client side? He puts a form there using echo "html string", receives the form input in the string's action, runs an SQL query and generates another set of HTML strings. All in one file.

Is this how major PHP websites work? On the other hand, my web dev friend, who works a lot with JS, usually runs 2 separate AWS instances for frontend and backend and makes them communicate via APIs.

-
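One way to picture the distinction asked about above, without going all the way to two separate instances: even in a single server-rendered app, the query logic and the markup can live in separate functions. This is only a sketch, in Python rather than PHP; the `posts` table, `fetch_titles` and `render_page` are hypothetical names made up for the example, and it is not meant as how the course or any particular PHP site does it.

```python
import html
import sqlite3
from string import Template
from typing import List

# Presentation: the markup lives in one place and contains no queries.
PAGE = Template("<h1>$title</h1>\n<ul>\n$items\n</ul>")


def fetch_titles(conn: sqlite3.Connection) -> List[str]:
    """Data access: run the query and return plain values, no HTML."""
    rows = conn.execute("SELECT title FROM posts ORDER BY id").fetchall()
    return [row[0] for row in rows]


def render_page(title: str, titles: List[str]) -> str:
    """Rendering: turn plain values into HTML, escaping anything user-supplied."""
    items = "\n".join(f"  <li>{html.escape(t)}</li>" for t in titles)
    return PAGE.substitute(title=html.escape(title), items=items)


if __name__ == "__main__":
    connection = sqlite3.connect(":memory:")
    connection.execute("CREATE TABLE posts (id INTEGER PRIMARY KEY, title TEXT)")
    connection.executemany(
        "INSERT INTO posts (title) VALUES (?)", [("Hello",), ("<b>World</b>",)]
    )
    print(render_page("Posts", fetch_titles(connection)))
```

Everything here still runs on the server; the split is between fetching data and producing markup, which is a different question from whether the frontend and backend run on separate machines.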

So what do you think? I built an entire app with HTML pages.

Client side: AngularJS. Server side: .NET Web APIs working with a SQL DB. The app has over 100 forms and works crazy fast in HTML form compared to the same form in an ASPX page. Should I leave it this way, or do you guys see any problems with it? All forms use POST and the site is HTTPS enabled. Open to constructive criticism, and don't be a dick.

-

Is client side rendering really that bad? Do you prefer sites without any JavaScript or are you ok with it?

To me it's very convenient to have JS in very dynamic pages. For things like documentation I think server side rendered pages are good enough. I mean it's 2017, right? Do we really need to care about those who deactivate JS? I mean, I really like to separate the front end from the backend.

What do you think?

-

Okay so what’s the difference between Blazor and Razor Pages??

I’ve heard Blazor is an alternative to single-page apps made with Angular and that it’s not server side, and I personally don’t like that; I’d rather have it run on the server side.

-

So... if someone is learning PHP, then all of their pages would be PHP scripts which need the PHP engine to execute, aka a complete server setup. Therefore no chance of sharing their progress or cool mini projects with the world by setting up a static GitHub page.

Why didn't anyone tell me about that :/