Search - "tracking blocking"

-

Not one feature.

All analytics systems in general.

Whether it's implementing some tracking script, or building a custom backend for it.

So called "growth hackers" will hate me for this, but I find the results from analytics tools absolutely useless.

I don't subscribe to this whole "data driven" way of doing things, because when you dig down, the data is almost always wrong.

We removed a table view in favor of a tile overview because the majority seemed to use it. Small detail: The tiles were default (bias!), and the table didn't render well on mobile, but when speaking to users they told us they actually liked the table better — we just had to fix it.

Nokia almost went under because of this. Their analytics tools showed them that people loved solid dependable feature phones and hated the slow as fuck smartphones with bad touchscreens — the reality was that people hated details about smartphones, but loved the concept.

Analytics are biased.

They tell dangerous lies.

Did you really have zero Android/Firefox users, or do those users use blocking extensions?

Did people really like page B, or was A's design better except for the incessant crashing?

If a feature increased signups, did you also look at churn? Did you just create a bait marketing campaign with a sudden peak which scares away loyal customers?
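To put numbers on the signups-versus-churn question, here is a tiny sketch. The figures are invented purely for illustration and do not come from any real product:

```python
def net_user_change(signups: int, churned: int) -> int:
    """Users gained minus users lost over the same period."""
    return signups - churned


# Hypothetical figures, made up for this example.
before_campaign = net_user_change(signups=100, churned=20)
after_campaign = net_user_change(signups=180, churned=130)

# The signup chart alone looks like a win (100 -> 180),
# but the net change is actually worse once churn is counted.
print(before_campaign, after_campaign)
```

A dashboard that only plots signups would call the second period a success.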

The opinions and feelings of users are not objective and easily classifiable, they're fuzzy and detailed with lots of asterisks.

Invite 10 random people to use your product in exchange for a gift coupon, and film them interacting & commenting on usability.

I promise you, those ten people will provide better data than your JS snippet can drag out of a million users.

This talk is pretty great, go watch it:

https://go.ted.com/CyNo6

-

I don't use Google/Facebook for privacy reasons (and their sub-services etc). Haven't used them for ages but noticed that google still loads a lot of domains like analytics etc. This goes for facebook as well.

I now blocked a lot of google/facebook domains through my hosts file.
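For anyone wanting to try the same thing: hosts-file blocking comes down to pointing each unwanted domain at a sink address. A minimal sketch, assuming an illustrative domain list (not the actual list used here) and `0.0.0.0` as the sink:

```python
def hosts_entries(domains, sink="0.0.0.0"):
    """Return hosts-file lines that point each domain at a sink address."""
    # Normalise and de-duplicate so the file stays tidy.
    unique = sorted({d.strip().lower() for d in domains if d.strip()})
    return [f"{sink} {domain}" for domain in unique]


# Illustrative domains only.
blocked = [
    "www.google-analytics.com",
    "connect.facebook.net",
    "www.google-analytics.com",
]
print("\n".join(hosts_entries(blocked)))
```

The hosts file has no wildcard support, so every subdomain needs its own line, which is part of why the list grows so quickly.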

It's funny to see the number of DNS requests to those fb/google connected domains drop to nearly zero, and also the fact that I literally can't load google/facebook anymore!

-

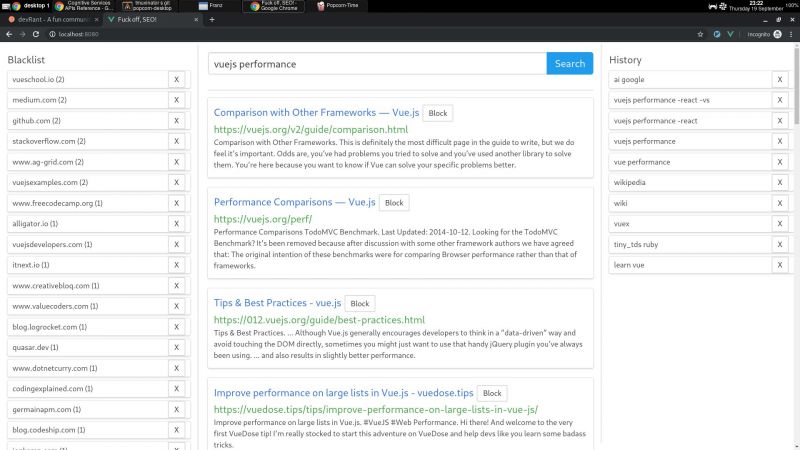

I wrote a node + vue web app that consumes bing api and lets you block specific hosts with a click, and I have some thoughts I need to post somewhere.

My main motivation for this is that the search results I've been getting from the big search engines are lacking a lot of quality. The SEO situation right now is very complex, but the bottom line is that there is a lot of white hat SEO abuse.

Commercial companies are fucking up the internet very hard. Search results have become way too profit oriented and thus no longer neutral. Personal blogs are becoming very rare. Information is losing quality and sites are losing identity. The internet is consolidating.

So, I decided to write something to help me give this situation the middle finger.

I wrote this because I consider the ability to block specific sites a basic universal right. If you were ripped off by a website or you just don't like it, then you should be able to block said site from your search results. It's not rocket science.

Google used to have this feature integrated but they removed it in 2013. They also had an extension that did this client side, but they removed it in 2018 too. We're years past the time where Google forgot their "Don't be evil" motto.

AFAIK, the only search engine on earth that lets you block sites is millionshort.com, but if you block too many sites, the performance degrades. And the company that runs it is a for profit too.

There is a third party extension that blocks sites called uBlacklist. The problem is that it only works on google. I wrote my app so as to escape google's tracking clutches, ads and their annoying products showing up in between my results.

Aside from that, uBlacklist does the same thing as my app, including the limitation that this isn't an actual search engine: it just filters search results after they are generated.

This is far from ideal, because filtering before the results are generated would be much preferable.
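The post-filtering idea can be sketched in a few lines. This is not the app's actual code (which is node + vue); it is a Python sketch that assumes each result is a dict with a `url` key, a hypothetical shape rather than the real bing response schema:

```python
from urllib.parse import urlsplit


def host_of(url):
    """Lower-cased hostname of a URL, or an empty string if there is none."""
    return (urlsplit(url).hostname or "").lower()


def is_blocked(host, blocked):
    """True if host is a blocked host or a subdomain of one."""
    return any(host == b or host.endswith("." + b) for b in blocked)


def filter_results(results, blocked):
    """Drop every result whose host is on the block list."""
    return [r for r in results if not is_blocked(host_of(r["url"]), blocked)]


# Placeholder URLs on reserved example domains.
results = [
    {"url": "https://spam.example/page"},
    {"url": "https://blog.example.org/post"},
    {"url": "https://www.spam.example/other"},
]
print(filter_results(results, {"spam.example"}))
```

Matching on the host suffix means blocking `spam.example` also catches `www.spam.example`, which is usually what you want when you are fed up with a site.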

But for a single person, developing a search engine is prohibitively expensive, since you have to both index and rank pages. Which is sad, but I can't do much about it.

I'm also thinking of implementing the ability to promote certain sites, the opposite of blocking, so these promoted sites would get more priority within the results.

I guess I would have to move the promoted sites between all pages I fetched to the first page/s, but client side.

But this is suboptimal compared to having actual access to the rank algorithm, where you could promote sites in a smarter way, but again, I can't build a search engine by myself.
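The client-side promotion described above could look roughly like this. It is a sketch under the same assumption as before (results are dicts with a hypothetical `url` key), and `promote` and `paginate` are made-up helper names:

```python
from urllib.parse import urlsplit


def promote(results, promoted):
    """Move results from promoted hosts to the front, keeping relative order."""
    def is_promoted(result):
        host = (urlsplit(result["url"]).hostname or "").lower()
        return any(host == p or host.endswith("." + p) for p in promoted)

    # sorted() is stable: False sorts before True, so promoted results come
    # first and the engine's original order is kept within each group.
    return sorted(results, key=lambda r: not is_promoted(r))


def paginate(items, page_size):
    """Split the reordered results back into pages."""
    return [items[i:i + page_size] for i in range(0, len(items), page_size)]


fetched = [
    {"url": "https://a.example/1"},
    {"url": "https://good.example/2"},
    {"url": "https://c.example/3"},
]
pages = paginate(promote(fetched, {"good.example"}), page_size=2)
print(pages)
```

This only reshuffles whatever pages were already fetched, which is exactly the limitation compared to touching the ranking itself.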

I'm using mongo to cache the results, so with a click of a button I can retrieve the results of a previous query without hitting bing. So far a couple of queries don't seem to bring much performance or space issues.
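The caching pattern itself is simple. The sketch below uses an in-memory dict as a stand-in for the mongo collection, so it shows the idea rather than the real storage layer; `QueryCache` and `fake_search` are hypothetical names:

```python
class QueryCache:
    """In-memory stand-in for a collection that stores past query results."""

    def __init__(self):
        self._store = {}

    @staticmethod
    def _key(query):
        # Normalise case and whitespace so trivially different
        # spellings of the same query share one cache entry.
        return " ".join(query.lower().split())

    def get_or_fetch(self, query, fetch):
        """Return cached results, calling fetch(query) only on a miss."""
        key = self._key(query)
        if key not in self._store:
            self._store[key] = fetch(query)
        return self._store[key]


calls = []


def fake_search(query):
    """Pretend API call that records how often it is hit."""
    calls.append(query)
    return [f"result for {query}"]


cache = QueryCache()
cache.get_or_fetch("Tracking  Blocking", fake_search)
cache.get_or_fetch("tracking blocking", fake_search)
print(len(calls))  # the API was only hit once
```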

On using bing: bing is basically the only reliable API option I could find that was hobby cost worthy. Most microsoft products are usually my last choice.

Bing is giving me a 7 day free trial of their search API until I register a CC. They offer a free tier, but I'm not sure if that's only for these 7 days. Otherwise, I'm gonna need to pay like 5$.

Paying or not, having to use a CC to use this software I wrote sucks balls.

So far the usage of this app has resulted in me becoming more critical of sites and finding sites of better quality. I think overall it helps me to become a better programmer, all the while having better protection of my privacy.

One downside is that I'm the only one curating for myself, whereas I could benefit from the block/promote lists of other people that I trust.

I will git push it somewhere at some point, but it does require some more work:

I would want to add a docker-compose script to make it easy to start, and I didn't write any tests unfortunately (I did use eslint for both apps, though).

The performance is not excellent (the app has not experienced blocks so far, but it does make the coolers spin after a bit) because the algorithms I wrote were very POC.

But it took me some time to write it, and I need to catch some breath.

There are other more open efforts that seem to be more ethical, but they are usually hard to use or just incomplete.

commoncrawl.org is a free index of the web. one problem I found is that it doesn't seem to index everything (for example, it doesn't seem to index the blog of a friend I know that has been writing for years and is indexed by google).

it also requires knowledge on reading warc files, which will surely require some time investment to learn.

it also seems kinda slow for responses,

it is also generated only once a month, and I would still have little idea on how to implement a pagerank algorithm, let alone code it.
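For what it's worth, the core of PageRank is smaller than it sounds. Below is a minimal power-iteration sketch on a toy link graph. It assumes the whole graph fits in memory as a dict and uses the commonly cited damping factor of 0.85, and it is nowhere near what a real engine needs at web scale:

```python
def pagerank(links, damping=0.85, iterations=50):
    """links maps each page to the list of pages it links to."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Rank held by pages without outgoing links is spread evenly.
        dangling = sum(rank[p] for p in pages if not links.get(p))
        new_rank = {p: (1 - damping) / n + damping * dangling / n for p in pages}
        # Every page passes its rank on in equal shares to its targets.
        for page, targets in links.items():
            for target in targets:
                new_rank[target] += damping * rank[page] / len(targets)
        rank = new_rank
    return rank


# Toy graph: a links to b and c, b links to c, c links back to a.
toy = {"a": ["b", "c"], "b": ["c"], "c": ["a"]}
ranks = pagerank(toy)
print(sorted(ranks, key=ranks.get, reverse=True))
```

The hard part is not this loop but crawling, storing and updating a link graph with billions of nodes, which is where the cost comes from.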

-

Holy shit firefox, 3 retarded problems in the last 24h and I haven't fixed any of them.

My project: an infinite scrolling website that loads data from an external API (CORS hehe). All Chromium browsers of course work perfectly fine. But firefox wants to be special...

(tested on 2 different devices)

(Terminology: CORS, cross-origin resource sharing: the mechanism that governs requests to a resource that isn't on the current website's domain, like any external API)

1.

For the infinite scrolling to work new html elements have to be silently appended to the end of the page and removed from the beginning. Which works great in all browsers. BUT IF YOU HAPPEN TO BE SCROLLING DURING THE APPENDING & REMOVING FIREFOX TELEPORTS YOU RANDOMLY TO THE END OR START OF PAGE!

Guess I'll just debug it and see what's happening step by step. Oh how wrong I was. First, the problem can't be reproduced when debugging FUCK! But I notice something else very disturbing...

2.

The Inspector view (hierarchical display of all html elements on the page) ISN'T SHOWING THE TRUE STATE OF THE DOM! ELEMENTS THAT HAVE JUST BEEN ADDED AREN'T SHOWING UP AND ELEMENTS THAT WERE JUST REMOVED ARE STILL VISIBLE! WTF????? You have to do some black magic fuckery just to get firefox to update the list of DOM elements. HOW AM I SUPPOSED TO DEBUG MY WEBSITE ON FIREFOX IF IT'S SHOWING ME PLAIN WRONG DATA???!!!!

3.

During all of this I just randomly decided to open my website in private (incognito) mode in firefox. Huh what's that? Why isn't anything loading and errors are thrown left and right? Let's just look at the console. AND IT'S A FUCKING CORS ERROR! FUCK ME! Also a small warning says some URLs have been "blocked because content blocking is enabled." Content Blocking? What is that? Well it appears to be a super special super privacy mode by firefox (turned on automatically in private mode), THAT BLOCKS ALL CORS REQUESTS, THAT MAY OR MAY NOT DO SOME TRACKING. AN API THAT IS 100% CORS COMPLIANT CAN'T BE USED IN FIREFOX'S PRIVATE MODE! HOW IS THE END USER SUPPOSED TO KNOW THAT??? AND OF COURSE THE THROWN EXCEPTION JUST SAYS "NETWORK ERROR". HOW AM I SUPPOSED TO TELL THE USER THAT FIREFOX HAS A FEATURE THAT BREAKS THE VERY BASIS OF MY WEBSITE???

WHY CAN'T YOU JUST BE NORMAL FIREFOX??????????????????

I actually managed to come up with a fix for 1. that works like < 50% of the time -_-

-

Fuck it, fucking fuck it.

Consulting company, been here for 2 years, had some decent projects (surprise, only those that me and my coworker started from scratch), but OMG the fuck ton amount of bizarre code I've seen is just mindblowing.

Every time I start on a project, I spend days being unproductive because the stack won't fucking work.

We use some frameworks, but the creators of the projects said fuck it, why would we follow the framework guidelines if I can create a supersmart way of doing things that nobody fucking understands. I mean, it will look smarter and so nobody else can touch this shitty code.

I hate that the most praised developer is the guy that created most of this shit, and his nº 1 skill is moving Jira tickets to the correct state, tracking time (PMs love this, I hate it) and blocking my fucking merge requests because I left an extra blank line, dangling comma or whatever the fuck else. He's like a human linter.

Dude, the code is a piece of shit, my dangling comma is not going to be the problem! And if you really care that much, set up a linter or something.

Fuck this, I'm quitting this week.

-

My company's org is in a serious state of disrepair when it comes to project management.

Everything is tracked via Confluence. Each level of management (CTO, EVP, SVP, VP, S DIRECTOR, DIRECTOR, S MANAGER, MANAGER) has a different tracking page, and they all say slightly different things.

To organize things there's a technical project manager who isn't just new to the team, he's new to the field. He's not technical, or experienced in project management. He's never worked within a scrum before.

He's dictating how to organize the team's scrum, and he's getting it very wrong. He decided to organize the efforts across all the Confluence pages by creating another one for himself, and again it's different.

When the work in Confluence page 3/16 isn't done by a due date nobody knew about, the engineers have to hop on a call and get a Mickey Mouse solution out the door by the end of day so upper management doesn't think the project's off the rails.

In the meantime I've taken a small group of more junior devs and am shielding them. We have a side scrum that we manage and it is going great, and I'm blocking the BS.

CORPORATE SUCKS. Golden handcuffs are a thing. I might set sail for greener pastures once I don't have to pay back my signing bonus if I leave.

-

Just reported a minor tracking bug I found on WebKit to the WebKit bugzilla, and I have a few thoughts:

1. Apple product security can be kind of vague sometimes - they generally don't comment on bugs as they're fixing them, from the looks of it, and I'm not sure why that is policy.

2. Tracking bugs *are* security bugs in WebKit, which is quite neat in a way. What amazes me is how Firefox has had a way to detect private browsing for years that they are still working on addressing (indexedDB doesn't work in private browsing), and Chrome occasionally has a thing or two that works. With Safari, Apple consistently plays whack-a-mole with these bugs: news sites that attempt to detect private browsing generally have a more difficult time with Safari/WebKit than with other browsers.

I guess a part of that could be bragging rights - since tracking bugs (and private browsing detection bugs, I think) count as security bugs, people like yours truly are more incentivised to report them to Apple because then you get to say "I found a security bug", and internal prioritisation is also higher for them.

-

Screen Usage Tracking at Work: Balancing Productivity and Privacy

Introduction

In today's fast-paced, tech-driven work environments, screen usage tracking has become a common tool for organizations aiming to improve productivity, security and efficiency. Organizations use these monitoring tools to track employee activity on digital devices and check that work time is used productively. Screen usage tracking also raises significant privacy and ethical concerns. A productive workplace must still preserve workers' privacy rights. This article covers workplace screen usage tracking, including its advantages, its disadvantages and guidelines for implementing it properly.

The Need for Screen Usage Tracking in the Workplace

The demand for screen usage tracking arises from several factors. Digital activity monitoring lets employers confirm that staff members concentrate on their work tasks while they are at their desks. With remote and hybrid work now standard, employees can no longer demonstrate their productivity through office attendance. Employers want to know how workers spend their time because they care about both performance outcomes and adequate time management.

Businesses operating in specific industries need to track their employees' activities to secure data because regulatory standards demand it. Through online activity tracking, employers achieve two objectives: they detect suspicious behavior right away and stop employees from accessing unauthorized confidential data. Screen usage tracking functions as an essential tool for both business efficiency maintenance and security protection of organizational assets.

Benefits of Screen Usage Tracking

Reduces Distractions

Employees lose their focus on work when there is no oversight system in place. Screen tracking ensures that employees are focused and using their time effectively, especially during work hours. Work hour restrictions on particular apps and websites through blocking mechanisms help employees stay focused on their tasks.

Enhances Security and Compliance

Through online activity tracking, employers can detect suspicious behavior right away and stop employees from accessing unauthorized confidential data. In industries where regulatory standards demand it, tracking employee activity also helps keep data secure.

Data-Driven Insights

Screen usage data generates important information about employee work habits as well as employee engagement levels. By monitoring screen usage data managers can identify workers who require extra support and training along with identifying staff members who work excessively and those who perform above expectations. Staff management strategies and workplace performance benefit from these insights gained.

Future of Screen Usage Tracking in Workplaces

Screen usage monitoring will keep evolving as the underlying technology develops. Artificial Intelligence (AI) should make these tracking tools both more accurate and more useful: AI systems can analyze staff behavioral patterns and generate forecasts that feed predictive strategies for improving productivity.

Privacy laws together with regulations, will likely advance in their development. Organizations must discover methods to integrate employee rights protection systems with their monitoring strategies due to rising data privacy concerns. Organizations will adopt standard tracking policies based on transparency and employee consent to maintain ethical and legal handling of employee data.

Qoli.AI aims to change screen usage tracking through its AI technology. It supports data-driven business decisions by providing up-to-the-minute employee performance and behavioral data while adhering to privacy limits. The platform integrates with workplace systems to provide trustworthy monitoring solutions.

Conclusion

Workplace screen monitoring cuts both ways. Screen tracking can improve productivity, security and operational efficiency, but at the cost of serious privacy and ethical concerns. Organizations need to strike the right balance between improving performance and protecting employee privacy. Screen tracking tools benefit employers when they maintain transparency, obtain employee consent and develop ethical standards that protect employee trust and workplace morale.