Search - "n+1 problem"

-

Swift, oh my god, why do you have to be like this?

I'm looking to write a simple for loop like this one in Java

for(int i = 5; i > 0; i--) {

// do shit

}

That's it, simple: go from 5 to 1 (inclusive). I saw that to iterate over a range in a for loop (increasing order) I can do this

for i in 0...5 {

// do shit.

}

So I thought maybe I could do this to go in reverse (which seems logical when you think about it doesn't it?)

for i in 5..<0 {

// do shit

}

But no, this compiles FINE (THIS IS THE FUCKING KICKER, IT COMPILES). Alright, when the code runs you get a fucking exception that crashes the mother fucking application, and you know what the problem is?? This dogshit, shitStain of a language doesn't like it when the integer that the for loop starts with is larger than the integer that the for loop ends with. MOTHERFUCKER, AT LEAST TELL ME THAT AT COMPILE TIME AS A MOTHERFUCKING WARNING YOU PIECE OF SHIT!!

Alright *deep breathing*, now we can't just be stuck on this raging, we're developers and need to move forward, so I google this, "Swift for loop in reverse". Fair enough, I get a straightforward answer that tells me to use the `stride` functionality. The relevant code for it:

for i in stride(from: 5, through: 1, by: -1) {

// do shit

}

Wow looks fine and simple right?? (looks like god damn any other language if you ask me, no innovations here piece of shit apple!) WRONG BITCHES !!! In the latest version of Swift THE FUCKING DEVELOPERS DECIDED TO REMOVE STRIDE ALTOGETHER, WITHOUT ADDING IN A GOOD REPLACEMENT FOR THAT SHIT!

Alright NOW I'M FUCKING MAD. I go rage on Stack Overflow chat, and a guy who's been working on iOS for quite a while comes up and says, and I quote

"I can sort of figure it out, but besides that, iterating in reverse is uncommon enough that it probably hasn't crossed anyone's mind."

Now I hope you guys understand my frustration, and send me cookies to calm me down.

Thank you for listening to me!

-

API Guy.

He has a serious regex problem.

Regexes are never easy to read, but the ones he uses just take the cake. They're either blatantly wrong, or totally over-engineered garbage that somehow still lacks basic functionality. I think "garbage" here is a little too nice, since you can tell what garbage actually is/was without studying it for five minutes.

In lieu of an actual rant (mostly because I'm overworked), I'll just leave a few samples here. I recommend readying some bleach before you continue reading.

Not a valid url name regex:

VALID_URL_NAME_REGEX = /\A[\w\-]+\Z/

Semi-decent email regex: (by far the best of the four)

VALID_EMAIL_REGEX = /\A[\w+\-.]+@[a-z\d\-.]+\.[a-z]+\z/i

Over-engineered mess that only works for (most) US numbers:

VALID_PHONE_REGEX = /1?\s*\W?\s*([2-9][0-8][0-9])\s*\W?\s*([2-9][0-9]{2})\s*\W?\s*([0-9]{4})(\se?x?t?(\d*))?/
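A quick way to see what's off with the phone pattern is to run it against a few inputs. The sketch below is a Python port (the original is a Ruby literal; this particular pattern only uses syntax that Python's `re` treats the same way), and the sample strings and the `looks_like_phone` helper are made up for illustration. Since the pattern has no start/end anchors, a search succeeds on any string that merely contains something phone-shaped.

```python
import re

# Python transcription of VALID_PHONE_REGEX from above (originally a Ruby literal).
VALID_PHONE_REGEX = re.compile(
    r"1?\s*\W?\s*([2-9][0-8][0-9])\s*\W?\s*([2-9][0-9]{2})"
    r"\s*\W?\s*([0-9]{4})(\se?x?t?(\d*))?"
)


def looks_like_phone(text: str) -> bool:
    """Return True if the pattern matches anywhere in text (like Ruby's =~)."""
    return VALID_PHONE_REGEX.search(text) is not None


# Hypothetical sample inputs, chosen only to show the behaviour.
samples = [
    "555-234-5678",               # plain US-style number
    "order #2125551234 is late",  # not a phone number, still matches
    "12345",                      # too few digits to match
]

for s in samples:
    print(f"{s!r}: {looks_like_phone(s)}")
```

Anchoring the pattern (`\A...\z` in Ruby) would at least reject the second sample; it would not help with non-US numbers.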

and for the grand finale:

ZIP_CODE_REGEX = /(^\d{5}(-\d{4})?$)|(^[ABCEGHJKLMNPRSTVXY]{1}\d{1}[A-Z]{1} *\d{1}[A-Z]{1}\d{1}$)|GIR[ ]?0AA|((AB|AL|B|BA|BB|BD|BH|BL|BN|BR|BS|BT|CA|CB|CF|CH|CM|CO|CR|CT|CV|CW|DA|DD|DE|DG|DH|DL|DN|DT|DY|E|EC|EH|EN|EX|FK|FY|G|GL|GY|GU|HA|HD|HG|HP|HR|HS|HU|HX|IG|IM|IP|IV|JE|KA|KT|KW|KY|L|LA|LD|LE|LL|LN|LS|LU|M|ME|MK|ML|N|NE|NG|NN|NP|NR|NW|OL|OX|PA|PE|PH|PL|PO|PR|RG|RH|RM|S|SA|SE|SG|SK|SL|SM|SN|SO|SP|SR|SS|ST|SW|SY|TA|TD|TF|TN|TQ|TR|TS|TW|UB|W|WA|WC|WD|WF|WN|WR|WS|WV|YO|ZE)(\d[\dA-Z]?[ ]?\d[ABD-HJLN-UW-Z]{2}))|BFPO[ ]?\d{1,4}/

^ which, by the way, doesn't match e.g. Australian zip codes. That cost us quite a few sales. And yes, that is 512 characters long.

-

Worst dev team failure I've experienced?

One of several.

Around 2012, a team of devs was tasked with converting an ASPX service to WCF. The service had one responsibility: returning product data (description, price, availability, etc...simple stuff)

No complex searching, just pass the ID, you get the response.

I was the original developer of the ASPX service, whose API took an XML request and returned an XML response. The 'powers-that-be' decided anything XML was evil and had to be purged from the planet. If this thought bubble popped up over your head "Wait a sec...doesn't WCF transmit everything via SOAP, which is XML?", yes, but in their minds SOAP wasn't XML. That's not the worst WTF of this story.

The team, 3 developers, 2 DBAs, network administrators, several web developers, worked on the conversion for about 9 months using the Waterfall method (3~5 months was mostly in meetings and very basic prototyping) and using a test-first approach (their own flavor of TDD). The 'go live' was to occur at 3:00AM, and it was mandatory that nearly the entire department be on-site (including the department VP) and available to help troubleshoot any system issues.

3:00AM - Teams start their deployments

3:05AM - Thousands and thousands of errors from all kinds of sources (web exceptions, database exceptions, server exceptions, etc), site goes down, teams roll everything back.

3:30AM - The primary developer remembered he made a last minute change to a stored procedure parameter that hadn't been pushed to production, which caused a side effect across several layers of their stack.

4:00AM - The developer found his bug, but the manager decided it would be better if everyone went home and took a fresh look at the problem at 8:00AM (yes, he expected everyone to be back in the office at 8:00AM).

About a month later, the team scheduled another 3:00AM deployment (VP was present again), confident that introducing mocking into their testing pipeline would fix any database related errors.

3:00AM - Team starts their deployments.

3:30AM - No major errors, things seem to be going well. High fives, cheers..manager tells everyone to head home.

3:35AM - Site crashes, like white page, no response from the servers kind of crash. Resetting IIS on the servers works, but only for around 10 minutes or so.

4:00AM - Team rolls back, manager is clearly pissed at this point, "Nobody is going fucking home until we figure this out!!"

6:00AM - Diagnostics found the WCF client was causing the server to run out of resources, with a mix of clogging up server bandwidth, and a sprinkle of N+1 scaling problem. Manager lets everyone go home, but be back in the office at 8:00AM to develop a plan so this *never* happens again.
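For anyone who hasn't met the term: an N+1 problem is one call to get a list, followed by one more call per item in it. The story doesn't show the actual client code, so the sketch below is a generic Python illustration; `FakeProductService`, `get_one`, `get_many` and the other names are hypothetical stand-ins, and "round trips" are simply counted in memory.

```python
from typing import Dict, Iterable, List


class FakeProductService:
    """Hypothetical in-memory stand-in for a remote service; counts round trips."""

    def __init__(self, products: Dict[int, str]) -> None:
        self._products = products
        self.round_trips = 0

    def list_ids(self) -> List[int]:
        self.round_trips += 1
        return sorted(self._products)

    def get_one(self, product_id: int) -> str:
        self.round_trips += 1
        return self._products[product_id]

    def get_many(self, product_ids: Iterable[int]) -> Dict[int, str]:
        self.round_trips += 1
        return {pid: self._products[pid] for pid in product_ids}


def load_n_plus_one(svc: FakeProductService) -> Dict[int, str]:
    # 1 call for the list, then N calls, one per product.
    return {pid: svc.get_one(pid) for pid in svc.list_ids()}


def load_batched(svc: FakeProductService) -> Dict[int, str]:
    # 1 call for the list, 1 call for all products.
    return svc.get_many(svc.list_ids())


catalog = {i: f"product-{i}" for i in range(100)}

slow = FakeProductService(catalog)
load_n_plus_one(slow)
print("n+1 round trips:", slow.round_trips)      # 101

fast = FakeProductService(catalog)
load_batched(fast)
print("batched round trips:", fast.round_trips)  # 2
```

With real network latency on every call, the first version gets slower with every product added, which is the "scaling" part of the diagnosis.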

About 2 months later, a 'real' development+integration environment was set up (previously, any+all integration tests were on the developer's machine) and the team scheduled a 6:00AM deployment, but at a much, much smaller scale with just the 3 development team members.

Why? Because the manager 'froze' changes to the ASPX service, the web team still needed various enhancements, so they bypassed the service (not using the ASPX service at all) and wrote their own SQL scripts that hit the database directly and utilized AppFabric/Velocity caching to allow the site to scale. There were only a couple of client applications using the ASPX service that needed to be converted, so deploying at 6:00AM gave everyone a couple of hours before users got into the office. Service deployed, worked like a champ.

A week later the VP schedules a celebration for the successful migration to WCF. Pizza, cake, the works. The 3 team members received awards (and an envelope, which probably equaled some $$$) and the entire team received a custom Benchmade pocket knife to remember this project's success. Myself and several others just stared at each other, not knowing what to say.

Later, my manager pulls several of us into a conference room

Me: "What the hell? This is one of the biggest failures I've been a part of. We got rewarded for thousands and thousands of dollars of wasted time."

<others expressed the same and expletive sentiments>

Mgr: "I know..I know...but that's the story we have to stick with. If the company realizes what a fucking mess this is, we could all be fired."

Me: "What?!! All of us?!"

Mgr: "Well, shit rolls downhill. Dept-Mgr-John is ready to fire anyone he felt could make him look bad, which is why I pulled you guys in here. The other sheep out there will go along with anything he says and are more than happy to throw you under the bus. Keep your head down until this blows over. Say nothing."

-

My code review nightmare part 3

Performed a review on/against a workplace 'nemesis'. I didn't follow the department standards document ('cause I couldn't care less about spacing, sorted usings, etc) and identified over 80 bugs, logic errors, n+1 patterns, memory leaks (yes, even in .NET devs can cause 'em), and general bad behavior (e.g. 'eating' exceptions that should be handled or at least logged).

Because 'Jeff' was considered a golden child (that's another long TL;DR), his boss and others took a major offense and demanded I justify my review, item by item.

About 2 hours into the meeting, our department mgr realized embarrassing Jeff any further wasn't doing anyone any good and decided to take matters into his own hands. Thinking 'well, it's about time he did his job', I go back to my desk. About an hour later..

Mgr: "I need you in the conference room, RIGHT NOW!"

<oh crap>

Mgr: "I spoke to Jeff and I think I know what the problem is. Did you ever train him on any of the problems you identified in the review?"

Me: "Um, no. Why would I?"

Mgr: "Ha!..I was right. So let's agree the problems are partially your fault, OK?"

Me: "Finding the bugs in his code is somehow my fault?"

Mgr: "Yes! For example, the n+1 problem in using the WCF service, you never trained him on how to use the service. You wrote the service, correct?"

Me: "Yes, but it's not my job to teach him how to write C#. I documented the process and have examples in the document to avoid n+1. All he had to do was copy/paste."

Mgr: "But you never sat with Jeff and talked to him like a human being? You sit over there in your silo and are oblivious to the problems you cause. This ends today!"

Me: "What the...I have no idea what you are talking about. What in the world did Jeff tell you?"

Mgr: "He told me enough and I'm putting an end to it. I want a comprehensive training class developed on how to use your service. I'll give you a month to get your act together and properly train these developers."

3 days later, I submit the PowerPoint presentation and accompanying docs. It was only one WCF service with a handful of methods. Mgr approves the training, etc..etc. I execute the 'training', and Jeff submits a code review a couple of weeks later. From over 80 issues to around 50. The poop hits the fan again.

Mgr: "What's your problem? When are you going to take your responsibility seriously?"

Me: "It's pretty clear I don't have the problem. All the review items were also verified by other devs. It's not me trying to be an asshole."

Mgr: "Enough with the excuses. If you think you can do a better job *you* make the code changes and submit them to Jeff for review. No More Excuses!"

Couple of days later, I make the changes, submit them for review, and Jeff really couldn't say too much other than "I don't see this as an improvement"

TL;DR, I had been tracking the errors generated by the site due to the bugs prior to my changes. After deployment, # of errors went from thousands per hour to maybe hundreds per day (that's another story) and the site saw significant performance increases, fewer customer complaints, etc..etc.

At a company event, the department VP hands out special recognition awards:

VP: "This award is especially well earned. Not only does this individual exemplify the company's focus on teamwork, he also went above and beyond the call of duty to serve our customers. Jeff, come on up and get this well deserved award."

-

I messaged a professor at MIT and surprisingly got a response back.

He told me that "generating primes deterministically is a solved problem" and he would be very surprised if what I wrote beat wheel factorization, but that he would be interested if it did.

It didn't when he messaged me.

It does now.

Tested on primes up to 26 digits.

Current time tends to be 1/100th to 2/100ths of a second.

Seems to be steady.
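For reference, the baseline the professor mentions can be sketched in a few lines. This is plain trial division with a mod-30 wheel (skipping every multiple of 2, 3 and 5), not the algorithm from this post, which isn't shown; `is_prime_wheel` is a name picked here for illustration. In pure Python it is only practical for fairly small n.

```python
def is_prime_wheel(n: int) -> bool:
    """Deterministic primality check: trial division with a 2-3-5 wheel."""
    if n < 2:
        return False
    for p in (2, 3, 5):
        if n % p == 0:
            return n == p
    # Gaps between consecutive integers coprime to 30, starting from 7:
    # 7, 11, 13, 17, 19, 23, 29, 31, 37, ... (the gaps sum to 30 and repeat).
    gaps = (4, 2, 4, 2, 4, 6, 2, 6)
    d, i = 7, 0
    while d * d <= n:
        if n % d == 0:
            return False
        d += gaps[i]
        i = (i + 1) % len(gaps)
    return True


print([n for n in range(50) if is_prime_wheel(n)])
# [2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37, 41, 43, 47]
```

The wheel only tries 8 out of every 30 candidate divisors, which is where its edge over naive trial division comes from.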

First n=1million digits *always* returns false for composites, while for primes the rate is 56% true vs false, and now that I've made it faster, I'm fairly certain I can get it to 100% accuracy.

In fact what I'm thinking I'll do is generate a random semiprime using the suspected prime, map it over to some other factor tree using the variation on modular exponentiation several of us on devRant stumbled on, and then see if it still factors. If it does then we know the number in question is prime. And because we know the factor in question, the semiprime mapping function doesn't require any additional searching or iterations.

The false negative rate, I think, goes to zero the larger the prime, from what I can see. But it won't be an issue if I'm right about the accuracy being correctable.

I'd like to thank the professor for the challenge. He also shared a bunch of useful links.

That one's a rare bird.

-

Yesterday the web site started logging an exception “A task was canceled” when making an HTTP call using the .NET HttpClient class (site calling a REST service).

Emails back n’ forth ..blaming the database…blaming the network..then a senior web developer blamed the logging (the system I’m responsible for).

Under the hood, the logger sends the exception data to another REST service (which sends emails, generates reports, etc.), so I had to quickly redirect the discussion: if we’re seeing the exception email, the logging didn’t cause the exception, it’s just reporting it. Felt a little sad having to explain it to other IT professionals, but everyone seemed to agree and focused on the server resources.

Last night I get a call about the exceptions occurring again in much larger numbers (from 100 to over 5,000 within a few minutes). I log in, add myself to the large Skype group chat going on just to catch the same senior web developer say …

“Here is the APM data that shows logging is causing the http tasks to get canceled.”

FRACK!

Me: “No, that data just shows the logging http traffic of the exception. The exception is occurring before any logging is executed. The task is either being canceled due to a network time out or IIS is running out of threads. The web site is failing to execute the http call to the REST service.”

Several other devs, DBAs, and network admins agree.

The errors only lasted a couple of minutes (exactly 2 minutes, which seemed odd), so everyone agrees to dig into the data further in the morning.

This morning I login to my computer to discover the error(s) occurred again at 6:20AM and an email from the senior web developer saying we (my mgr, her mgr, network admins, DBAs, etc) need to discuss changes to the logging system to prevent this problem from negatively affecting the customer experience...blah blah blah.

FRACKing female dog!

Good news is we never had the meeting. When the senior web dev manager came in, he cancelled the meeting.

Turned out to be a hiccup in a domain controller causing the servers to lose their connection to each other for 2 minutes (1-minute timeout, 1 minute to fully re-sync). That explained the exact two-minute burst of errors (and was proven via Wireshark).

People and their petty office politics piss me off.

-

An excerpt from the best rant about whiteboard interviews posted on the internet. Ever.

"Well, maybe your maximum subsequence problem is a truly shitty interview problem. You are putting your interview candidate in a situation where their employment hinges on a trivia question. — Kadane's algorithm! They know it, or they don't. If they do, then congratulations, you just met an engineer that recently studied Kadane's algorithm.

Which any other reasonably competent programmer could do by reading Wikipedia.

And if they don't, well, that just proves how smart the interviewer is. At which point the interviewer will be sure to tell you how many people couldn't answer his trivially simple interview question.

Find a spanning tree across a graph where the edges have minimal weight. Maybe one programmer in ten thousand — and I’m being generous — has ever implemented this algorithm in production code. There are only a few highly specific vertical fields in the industry that have a use for it. Despite the fact that next to no one uses it, the question must be asked during job interviews, and you must write production-quality code without looking it up, because surely you know Kruskal’s algorithm; it’s trivial.

Question: why are manhole covers round? Answer: they’re not just round, if you live in London; they're triangular and rectangular and a bunch of other shapes. Why is your interview question broken? Why did you just crib an interview question without researching whether its internal assumption was correct? Do you think that “round manhole covers are easier to roll" is a good answer? Have you ever tried to roll an iron coin that weighs up to 300 pounds? Did you survive? Do you think that “manhole covers are circular so that they don’t fall into manholes” is a good answer? Do you know what a curve of constant width is? Do you know what a Reuleaux triangle is? Have you ever even been to London?

If the purpose of interviewing was to play stump the candidate, I’d just ask you questions from my area of specialization. “What are the windowing conditions which, during the lapping operation on a modified discrete cosine transform, guarantee that the resynthesis achieves perfect reconstruction?” The answer of course is the Princen-Bradley condition! Everyone knows that’s when your windowing function satisfies the conditions h(k)^2 + h(k+N)^2 = 1 (the lapping regions of the window, squared, should sum to one) and h(k) = h(2N−1−k) (the window should be symmetric). That’s fundamental computer science. So obvious, even a child should know the answer to that one. It’s trivial. You embarrass your entire extended family with your galactic stupidity, which is so vast that its value can only be stored in a double, because a float has insufficient range:"

Author: John Byrd

Src: https://quora.com/What-is-the-harde...

-

EEEEEEEEEEEE Some fAcking languages!! Actually barfs while using this trashdump!

The gist: new job, position required adv C# knowledge (like f yea, one of my fav languages), we are working with RPA (using software robots to automate stuff), and we are using some new robot still in beta phase, but robot has its own prog lang.

The problem:

- this language is kind of like ASM (i think so, I'm venting here, it's ASM OK), with syntax that burns your eyes

- no function return values, but I can live with that, at least they have some sort of functions

- emojis for identifiers (like php's $var, but they only aim for shitty features so you use a heart.. ♥var)

- only jump and jumpif for control flow

- no foopin variable scopes at all (if you run multiple scripts at the same time they even share variables *pukes*)

- weird alt characters everywhere. define strings with regular quotes? nah let's be [some mental illness] and use prime quotes (‴ U+2034), and like ⟦ ⟧ for array indexing, but only sometimes!

- super slow interpreter, ex a regular loop to count to 10 (using jumps because yea no actual loops) takes more than 20 seconds to execute, approx 700ms to run 1 code row.

- it supports c# snippets (defined with these stupid characters: ⊂ ⊃) and I guess that's the only c# I get to write with this job :^}

- on top of that, outdated documentation, because yea it's beta, but so crappin tedious with this trial n error to check how every feature works

The question: why in the living fartfaces yolk would you even make a new language when it's so easy nowadays to embed compilers!?! the robot is apparently made in c#, so it should be no funcking problem at all to add a damn lua compiler or something. having a tcp api would even be easier got dammit!!! And what in the world made our company think this robot was a plausible choice?! Did they do a full fubbing analysis of the different software robots out there and accidentally sorted by ease of use in reverse order?? 'cause that's the only explanation i can imagine

Frillin stupid shitpile of a language!!! AAAAAHHH

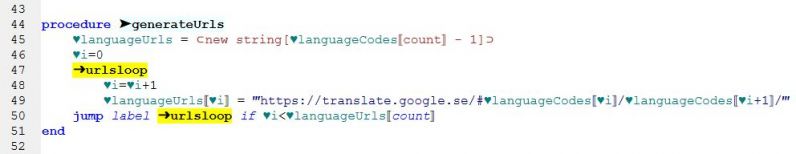

see the attached screenshot of production code we've developed at the company for reference.

Disclaimer: I do not stand responsible for any eventual headaches or gouged eyes caused by the named image.

(for those interested, the robot is G1ANT.Robot, https://beta.g1ant.com/)

-

Biggest challenge I overcame as dev? One of many.

Avoiding a life sentence when the 'powers that be' targeted one of my libraries as the root cause of system performance issues and I didn't correct that accusation with a flame thrower.

What was the accusation? What I named the library. Yep. The *name* was causing every single problem in the system.

Panorama (a very, very expensive APM system at the time) identified my library in its analysis: the calls to/from SQL Server were the bottleneck.

We had one of Panorama's engineers on-site and he asked what (not the actual name) MyLibrary was. A crack team of developers+managers (I'll preface this by saying I did not know about, and was not involved in, any of the so-called 'research') researched the system thoroughly and found MyLibrary was used in just about every project. I wrote the .Net 1.1 MyLibrary as a mini-ORM to simplify the execution of database code (stored procs, etc) and gracefully handle+log database exceptions (auto-logged details such as the target db, stored procedure name, parameter values, etc, everything you'd need to troubleshoot database errors). This was before Dapper and the other fancy tools used by kids these days.

By the time the news got to me, there was a team cobbled together whose only focus was to remove any/every trace of MyLibrary from the code base. Using Waterfall, they calculated it would take at least a year to remove+replace MyLibrary with the equivalent ADO.Net plumbing.

In a department wide meeting:

DeptMgr: "This day forward, no one is to use MyLibrary to access the database! It's slow, unprofessionally named, and the root cause of all the database issues."

Me: "What about MyLibrary is slow? It's executing the standard ADO.Net code. The only extra bit of code is the exception handling to capture the details when the exception is logged."

DeptMgr: "We've spent the last 6 weeks with the Panorama engineer and he's identified MyLibrary as the cause. Company has spent over $100,000 on this software and we have to make fact based decisions. Look at this slide ... "

<DeptMgr shows a histogram of the stacktrace, showing MyLibrary as the slowest>

Me: "You do realize that the execution time is the database call itself, not the code. In that example, the invoice call, it's the stored procedure that's taking 5 seconds, not MyLibrary."

<at this point, DeptMgr is getting red-face mad>

AreaMgr: "Yes...yes...but if we stopped using MyLibrary, removing the unnecessary layers, will make the code run faster."

<typical head-nodders nod their heads in agreement>

Dev01: "The loading of MyLibrary takes CPU cycles away from code that supports our customers. Every CPU cycle counts."

<head-nodding continues>

Me: "I'm really confused. Maybe I'm looking at the data wrong. On the slide where you highlighted all the bottlenecks, the histogram shows the latency is the database, I mean...it's right there, in red. Am I looking at it wrong?"

<this was a meeting with 20+ other devs, mgrs, a VP, the Panorama engineer>

DeptMgr: "Yes you are! I know MyLibrary is your baby. You need to check your ego at the door and face the facts. Your MyLibrary is a failed experiment and needs to be exterminated from this system!"

Fast forward 9 months, with maybe 50% of the projects updated, I come across the documentation left by the Panorama engineer. Even after the removal of MyLibrary, there was zero increase in performance. The engineer recommended the DBAs start optimizing their indexes and fix the other N+1 problems discovered. I decide to ask the developer who led the re-write.

Me: "I see that removing MyLibrary did nothing to improve performance."

Dev: "Yes, DeptMgr was pissed. He was ready to throw the Panorama engineer out a window when he said the problems were in the database all along. Didn't you say that?"

Me: "Um, so is this re-write project dead?"

Dev: "No. Removing MyLibrary introduced all kinds of bugs. All the boilerplate ADO.Net code caused a lot of unhandled exceptions, then we had to go back and write exception handling code."

Me: "What a failure. What dipshit would think writing more code leads to fewer bugs?"

Dev: "I know, I know. We're so far behind schedule. We had to come up with something. I ended up writing a library to make replacing MyLibrary easier. I called it KnightRider. Like the TV show. Everyone is excited to speed up their code with KnightRider. Same method names, same exception handling. All we have to do is replace MyLibrary with KnightRider and we're done."

Me: "Won't the bottlenecks then point to KnightRider?"

Dev: "Meh, not my problem. Panorama meets primarily with the DBAs and the networking team now. I doubt we ever use Panorama to look at our C# code."

Needless to say, I was (still) pissed that they had used MyLibrary as a dirty word and a scapegoat for months when they *knew* where the problems were. Pissed enough for a flamethrower? Maybe.

-

Jeff Dean Facts (Source: God)

Jeff Dean once failed a Turing test when he correctly identified the 203rd Fibonacci number in less than a second

Jeff Dean compiles and runs his code before submitting, but only to check for compiler and CPU bugs

Unsatisfied with constant time, Jeff Dean created the world's first O(1/n) algorithm

When Jeff Dean designs software, he first codes the binary and then writes the source as documentation

Compilers don't warn Jeff Dean. Jeff Dean warns compilers

Jeff Dean wrote an O(n^2) algorithm once. It was for the Traveling Salesman Problem

Jeff Dean's watch displays seconds since January 1st, 1970.

gcc -O4 sends your code to Jeff Dean for a complete rewrite

-

@Owenvii made a post over at (https://devrant.com/rants/2359774/...) and I want to write a proper response.

The biggest thing you have to look out for as a new dev is the jobs which you accept to begin with.

This isn't minimum wage no more, this is "big league", well, maybe not apple or google big league, but it's not $9.25 an hour either.

Basically you don't want to work anywhere where 1. your labor will be treated as a highly disposable commodity. 2. where the hiring manager doesn't know how to do the job themselves.

The best thing you can do is, if you're new, and just breaking through (and even if you're not), is ask them common questions and problems/solutions that crop up doing the work. If they can answer intelligently that tells you the company values competence (maybe), enough to put someone in place who will know ability from bullshit, merit from mediocrity, and who understands the process of progressing from junior dev to a more involved role.

It also means they are incentivized to hire people who know what they're doing because the training cost of new hires is lowered when they hire people who are actually competent or capable of learning.

Remember, an interview isn't just them learning about you, it's your opportunity to interview *them* and boy, you'll be making a BIG mistake if you don't.

Ideally you want them to ask you to pair program a problem. If your solution is better than theirs then they aren't sending their best to do interviews, and it tells you the company doesn't fire incompetents. The interviewer's response can tell you a lot too, if they critique your work, or suggest improvements, and especially if they explain their thinking, that is an amazing response to look for, it says the company values mentorship and *actual* teamwork (not the corporate lingo-bingo 'teamwork' that we sometimes see idolized on posters like so much common dogma).

Most importantly, get them to talk about their work and their team. If they're a professional, it'll be really difficult to pry anything negative about their co-workers out of them, but if they're loose-lipped and gossipy that's a VERY bad sign, regardless of what they have to say.

Ask to take a tour and do a meet n' greet of who you will be working with. If they say no, then it's no thank you to a job offer. You want to take every opportunity to get to know everyone there, everyone you'll be working with, as much as possible--because you'll be spending a LOT of time with these people and you want to rule out any place that employs 'unfireable' toxic assholes, sociopath executives, manipulative ladder climbing narcissists, and vicious misery-loving psychopathic coworkers as quick as possible. This isn't just one warning flag to look out for, it's the essential one. You're looking for the proper *workplace culture*, not the cheesy startup phrase of "workplace culture", but the actual attitudes of the team and the interpersonal dynamics.

Life is really short, and a heart attack at 25 from dipshit coworkers and workplace grief can and will destroy your health, if not your sanity, the older you get.

Trust and believe me when I say no paycheck is too grand to deal with some useless, smarmy, manipulative, or borderline motherfuckers at work constantly. You'll regret it if you do. Don't do it. Don't you fucking do it. Just don't.

Take my words to heart and be wary of easy job offers. I'm not saying don't take a good offer that lands in your lap, I AM saying do some investigating and due diligence or the consequences are on you.

-

GraphQL people: REST sucks because it causes unnecessary data fetching and extra requests.

Also GraphQL people: "PicoLooper - A <200 LOC 🤏 ninja-grade 🥷 bulletproof 🔫 solution for the n+1 request problem 🙅 made with love ❤️"

-

Hey, been gone a hot minute from devRant, so I thought I'd say hi to Demolishun, atheist, Lensflare, Root, kobenz, score, jestdotty, figoore, cafecortado, typosaurus, and the raft of other people I've met along the way and got to know somewhat.

All of you have been really good.

And while I'm here it's time for maaaaaaaaath.

So I decided to horribly mutilate the concept of bloom filters.

If you don't know what that is: you take two random numbers, m and p, both prime, where m < p, and generate two numbers a and b that define a function. That function is a hash.

Normally you'd have say five to ten different hashes.

A bloom filter lets you probabilistically say whether you've seen something before, with no false negatives.

It lets you do this very space efficiently, with some caveats.

Each hash function should be uniformly distributed (any input value is equally likely to be mapped to any output value).

Then you interpret these output values as bit indexes.

So Hi might output [0, 1, 0, 0, 0]

while Hj outputs [0, 0, 0, 1, 0]

and Hk outputs [1, 0, 0, 0, 0]

producing [1, 1, 0, 1, 0]

And if your bloom filter has bits set in all those places, congratulations, you've seen that number before.

It's used by big companies like google to prevent re-indexing pages they've already seen, among other things.
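The description above can be sketched as a small Python class. This assumes the (m, p, a, b) setup refers to the usual hash family h(x) = ((a*x + b) mod p) mod m, which the post doesn't state outright; the class name, defaults and fixed seed are illustrative choices, and m here is simply the size of the bit array.

```python
import random


class BloomFilter:
    """Minimal Bloom filter over non-negative integers (illustrative sketch)."""

    def __init__(self, num_bits=1024, num_hashes=5, prime=2_147_483_647, seed=0):
        rng = random.Random(seed)
        self.m = num_bits
        self.p = prime  # 2**31 - 1, a prime larger than num_bits
        # One (a, b) pair per hash function: h(x) = ((a*x + b) % p) % m
        self.params = [
            (rng.randrange(1, prime), rng.randrange(0, prime))
            for _ in range(num_hashes)
        ]
        self.bits = [False] * num_bits

    def _indexes(self, x: int):
        return [((a * x + b) % self.p) % self.m for a, b in self.params]

    def add(self, x: int) -> None:
        for i in self._indexes(x):
            self.bits[i] = True

    def might_contain(self, x: int) -> bool:
        # False means "definitely never added"; True means "probably added".
        return all(self.bits[i] for i in self._indexes(x))


bf = BloomFilter()
for value in range(0, 700, 7):
    bf.add(value)

print(bf.might_contain(14))  # True: 14 was added, and there are no false negatives
```

A lookup for a value that was never added can still come back True if other values happened to set all of its bits; that is the false-positive side of the trade-off.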

Well I thought, what if instead of using it as a has-been-seen-before filter, we mangled its purpose until a square peg fit in a round hole?

Not long after I went and wrote a script that 1. generates data, 2. generates a hash function to encode it, 3. finds a hash function that reverses the encoding.

And it just works. Reversible hashes.

Of course you can't use it for compression strictly, not under normal circumstances, but these aren't normal circumstances.

The first thing I tried was finding a hash function h0, that predicts each subsequent value in a list given the previous value. This doesn't work because of hash collisions by default. A value like 731 might map to 64 in one place, and a later value might map to 453, so trying to invert the output to get the original sequence out would lead to branching. It occurs to me just now we might use a checkpointing system, with lookahead to see if a branch is the correct one, but I digress, I tried some other things first.

The next problem was that 1. long sequences are slow to generate. I solved this by tuning the number of iterations of the outer and inner loops. We find h0 first, and then h1: we put all the inputs through h0 to generate an intermediate list, put that list through h1, and see if the output of h1 matches the original input. If it does, we return h0 and h1. It turns out it can take inordinate amounts of time if h0 lands on a hash function that doesn't play well with h1, so the next step was 2. adding an error margin. It turns out something fun happens: if you allow a sequence generated by h1 (the decoder) to match *within* an error margin, then under a certain error value it'll find potential hash functions hn such that the outputs of h1 are *always* the same distance from their parent values in the original input to h0. This becomes our salt value k.

So our hash-function generator, called encoder_decoder() or 'ed' (lol two letter functions), also calculates the k value and outputs that along with the hash functions for our data.

This is all well and good but what if we want to go further? With a few tweaks, along with taking output values, converting to binary, and left-padding each value with 0s, we can then calculate Shannon entropy in its most essential form.

Turns out with tens of thousands of values (and tens of thousands of bits), the output of h1 with the salt has a higher entropy than the original input. Meaning finding an h1 and h0 hash function for your data is equivalent to compression below the known Shannon limit.

By how much?

Approximately 0.15%

Of course this doesn't factor in the five numbers you need (a0 and b0 to define h0, a1 and b1 to define h1, and the salt value), so it probably works out to the same. I'd like to see what the savings are with even larger sets though.
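For anyone who wants to poke at the entropy measurement, here is a minimal Python sketch of that step as described: convert the values to binary, left-pad each with 0s, and compute the empirical Shannon entropy of the resulting bit string. The function names (`to_bits`, `shannon_entropy`) and the sample values are made up for illustration; the actual script isn't shown in the post.

```python
import math
from collections import Counter


def to_bits(values, width=None):
    """Convert non-negative ints to one bit string, left-padding each with 0s."""
    if width is None:
        # Pad everything to the width of the largest value (at least 1 bit).
        width = max(v.bit_length() for v in values) or 1
    return "".join(format(v, f"0{width}b") for v in values)


def shannon_entropy(symbols):
    """Empirical Shannon entropy in bits per symbol."""
    counts = Counter(symbols)
    total = len(symbols)
    return -sum(c / total * math.log2(c / total) for c in counts.values())


values = [731, 64, 453, 12, 900]   # hypothetical sample data
bits = to_bits(values)
print(len(bits), shannon_entropy(bits))
```

This is only the symbol-frequency (zeroth-order) entropy of the bits, so a value near 1.0 just means 0s and 1s are about equally common.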

Next I said, well what if we COULD compress our data further?

What if all we needed were the numbers to define our hash functions, a starting value, a salt, and a number to represent 'depth'?

What if we could rearrange this system so we *could* use the starting value to represent n subsequent elements of our input x?

And that's what I did.

We break the input into blocks of 15-25 items, because that's the fastest to work with and find hashes for.

We then follow the math, to get a block which is

H0, H1, H2, H3, depth (how many items our 1st item will reproduce), & a starting value or 1st item in this slice of our input.

x goes into h0, giving us y. y goes into h1 -> z, z into h2 -> y, y into h3, giving us back x.

The rest is in the image.

Anyway, good to see you all again.

-

Okay so my last idea has one big problem: I need to project vertices into a single space which encompasses an entire hemisphere. AND straight lines need to remain straight when projected.

That's not something a typical projection matrix can do. Damn. I'm thinking maybe something like octahedral projection? [1]

But I'm not sure there's an answer. Otherwise I would have to chop up the hemisphere into parts and try rasterizing each tri for each view. Ugh, that sucks.

[1] https://researchgate.net/figure/...

-

Proudest bug squash experience?

Fixed an N+1 pattern bug on our web site. Wasn't a deeply technical problem, but I was proud to shove the fix up the arse of the developer who blamed me (and even got a VP involved) for the web site crashes (the N+1 involved his code calling a service I wrote) and none of the half-dozen other devs found it.

I really wanted to make a t-shirt with his initial 'blame' email outlining all the 'technical problems' with my service, and the fix was literally moving the service call outside 5 (yes 5) level deep for..each loops.

-

Every time I see the N+1 query problem in people's implementation, I feel like crying. Especially when it's dealing with large data sets of something like 1000 records.
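For reference, the pattern being complained about looks roughly like this. It's a minimal Python sketch using the standard-library sqlite3 module; the authors/posts tables and the function names are hypothetical, not taken from either rant.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.executescript("""
    CREATE TABLE authors (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE posts (id INTEGER PRIMARY KEY, author_id INTEGER, title TEXT);
""")
db.executemany("INSERT INTO authors VALUES (?, ?)",
               [(i, f"author{i}") for i in range(1, 1001)])
db.executemany("INSERT INTO posts VALUES (?, ?, ?)",
               [(i, i, f"post{i}") for i in range(1, 1001)])


def titles_n_plus_one():
    """1 query for the authors, then 1 more query per author: N+1 round trips."""
    queries = 1
    result = {}
    for author_id, name in db.execute("SELECT id, name FROM authors").fetchall():
        rows = db.execute("SELECT title FROM posts WHERE author_id = ?",
                          (author_id,)).fetchall()
        queries += 1
        result[name] = [title for (title,) in rows]
    return result, queries


def titles_single_query():
    """Same data fetched with one JOIN, so the query count stays at 1."""
    result = {}
    rows = db.execute("""
        SELECT a.name, p.title
        FROM authors a LEFT JOIN posts p ON p.author_id = a.id
    """)
    for name, title in rows:
        result.setdefault(name, [])
        if title is not None:
            result[name].append(title)
    return result, 1


slow, slow_queries = titles_n_plus_one()
fast, fast_queries = titles_single_query()
print(slow_queries, fast_queries)  # 1001 1
```

With 1000 records that is 1001 queries versus 1, which is the same shape as the fix of moving a call outside nested loops.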

-

After a lot of work, the new factorization algorithm has a search space that's the factorial of (log(log(n))**2), from what it looks like.

But that's outer-loop type stuff. Subgraph search (inner loop) doesn't appear to need to do any factor testing above about 97, so it's all trivial factors for sequence analysis, but I haven't explored the parameter space for improvements.

It converts finding the factors of a semiprime into a sequence search on a modulus related to

OEIS sequence A143975 a(n) = floor(n*(n+3)/3)

and returns a number m such that n=pq, m%p == 0||(p*i), but m%q != 0||(q*k)

where i and k are respective multiples of p and q.

This is similar in principle to earlier work where I discovered that if i = p/2, where n=p*q, then

r = (abs(((((n)-(9**i)-9)+1))-((((9**i)-(n)-9)-2)))-n+1+1)

yielding a new number r that shares p as a factor with n but is coprime to q, meaning you now have a third number, sharing only one non-trivial factor with n, that you could use to triangulate or suss out the factors of n.

The problem with that variation on modular exponentiation, as @hitko discovered, was that if q was greater than about 3^p, the abs in the formula messes the whole thing up. He wrote an improvement but I didn't understand his code enough to use it at the time. The other thing was that you had to know p/2 beforehand to find r, and I never did find a way to get at r without p/2.

This doesn't have that problem, though I won't play stupid and pretend not to know that a search space of (log(log(n))**2)! would be an enormous improvement over the state of the art, unless I'm misunderstanding.

I haven't posted the full details here, or sequence generation code, but when I'm more confident in what my eyes are seeing, and I've tested thoroughly to understand what I'm looking at, I'll post some code.

hitko's post I mentioned earlier is in this thread here:

https://devrant.com/rants/5632235/...

-

Here's some research into a new LLM architecture I recently built and have had actual success with.

The idea is simple: you do the standard thing of generating random vectors for your dictionary of tokens; we'll call these numbers your 'weights'. Then, for whatever sentence you want to use as input, you generate a context embedding by looking up those tokens and putting them into a list.

Next, you do the same for the output you want to map to; let's call it the decoder embedding.

You then loop and generate a 'noise embedding': for each vector (individual token) in the context embedding, you subtract that token's noise value from that token's embedding value or specific weight.

You find the weight index in the weight dictionary (one entry per word or token in your token dictionary) that's closest to this embedding. You use a version of cuckoo hashing where similar values are stored near each other, and the canonical weight values are actually the key of each key:value pair in your token dictionary. When doing this you align all random-numbered keys in the dictionary (a uniform sample from 0 to 1), and look at the Hamming distance between the context embedding+noise embedding (called the encoder embedding) and the canonical keys, with each digit from left to right being penalized by some factor f (because digits further left carry larger magnitudes), and then penalize or reward based on the numeric closeness of any given individual digit of the encoder embedding to the digit at the same index of any given weight i.

You then substitute the canonical weight in place of this encoder embedding, look up that weight's index (in my earliest version), and then use that index to look up the word|token in the token dictionary and compare it to the word at the current index of the training output to match against.

Of course by switching to the hash version the lookup is significantly faster, but I digress.
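To make the lookup step concrete, here is a rough Python sketch of the non-hashed version: random vectors per token, a context embedding built by lookup, a noise embedding subtracted per token, and a snap to the nearest canonical weight. It uses plain Euclidean distance instead of the digit-weighted Hamming comparison described above, and the vocabulary, dimension, and function names are all assumptions for illustration, not the code from the pastebin.

```python
import math
import random

rng = random.Random(0)
DIM = 8  # embedding size (arbitrary for this sketch)
vocab = ["the", "cat", "sat", "on", "mat"]

# One random vector ("weight") per token, sampled uniformly from [0, 1).
weights = {tok: [rng.random() for _ in range(DIM)] for tok in vocab}


def embed(sentence):
    """Context embedding: look up each token's vector and collect them in a list."""
    return [weights[tok] for tok in sentence.split()]


def nearest_token(vector):
    """Token whose canonical weight is closest to `vector` (Euclidean, an assumption)."""
    return min(vocab, key=lambda tok: math.dist(weights[tok], vector))


def decode(context, noise):
    """Subtract the per-token noise, then snap each result to the nearest token."""
    out = []
    for vec, nvec in zip(context, noise):
        encoder_vec = [v - n for v, n in zip(vec, nvec)]
        out.append(nearest_token(encoder_vec))
    return out


context = embed("the cat sat")
zero_noise = [[0.0] * DIM for _ in context]
print(decode(context, zero_noise))  # ['the', 'cat', 'sat']
```

In this toy setup the noise that maps one token onto another is just the difference of their two weight vectors, and each input token still yields exactly one output token.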

That introduces a problem.

If each input token matches one output token, how do we get variable length outputs? How do we do n-to-m mappings of input and output?

One of the things I explored was using pseudo-Markovian processes, where there's one node, A, with two links to itself, B and C.

B is a transition matrix, and A holds its own state. At any given timestep, A may use either the default transition matrix (training data encoder embeddings) with B, or it may generate new ones, using C and a context window of A's prior states.

C can be used to modify A, or it can be used as a noise embedding to modify B.

A can take on the state of both A and C or A and B. In fact we do both, and measure which is closest to the correct output during training.

What this *doesn't* do is give us variable length encodings or decodings.

So I thought a while and said, if we're using noise embeddings, why can't we use multiple?

And if we're doing multiple, what if we used a middle layer, let's call it the 'key', and took its mean over *many* training examples, and used it to map from the variance of an input (query) to the variance and mean of a training or inference output (value)?

But how does that tell us when to stop or continue generating tokens for the output?

Posted on pastebin if you want to read the whole thing (DR wouldn't post for some reason).

In any case I wasn't sure if I was dreaming or if I was off in left field, so I went and built the damn thing, the autoencoder part, wasn't even sure I could, but I did, and it just works. I'm still scratching my head.

https://pastebin.com/xAHRhmfH

-

So I have a problem and I was hoping for some insight.

I figured out how to get

(surd(n, x)-surd(n, y))

without knowing x or y, (only n), through a convergent series of approximate identities.

n is the product of x and y, where x<y

My only issue is I don't know where to go from here. I've basically hit the limit of my insight into the problem.

surd() here is just a function that takes two arguments, a and b, and returns (a^2)-b.

Both are guaranteed to be positive integers, greater than 1.
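One observation, taking the definition above completely literally: if surd(a, b) = a^2 - b, then surd(n, x) - surd(n, y) = (n^2 - x) - (n^2 - y) = y - x. Knowing d = y - x and n = x*y pins down both factors, since (x + y)^2 = d^2 + 4n. The Python sketch below shows that step; `factors_from_difference` is a made-up helper name, and none of this applies if surd() is really meant to be something else (for example, something involving roots).

```python
from math import isqrt


def surd(a, b):
    """Literal reading of the post's definition: a^2 - b."""
    return a * a - b


def factors_from_difference(n, d):
    """Recover (x, y) with x*y == n and y - x == d, or None if no such integers exist."""
    s_squared = d * d + 4 * n          # (x + y)^2 = (y - x)^2 + 4xy
    s = isqrt(s_squared)
    if s * s != s_squared or (s - d) % 2:
        return None
    x, y = (s - d) // 2, (s + d) // 2
    return (x, y) if x * y == n else None


x, y = 17, 41
n = x * y
d = surd(n, x) - surd(n, y)              # equals y - x under this reading
print(d, factors_from_difference(n, d))  # 24 (17, 41)
```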

But, having come this far, with a couple pages of intermediate identities, I'm at a loss.

-

Stack Overflow is full of useless assholes, like I asked a specific question about a problem I am having that is similar to another problem that exists but it is not the same at all in terms of how to fix, and instead of helping I’ve got 2 downvotes on it and a comment linking me to a completely unrelated stylistic based question based on something I SAID I HAD ALREADY TRIED CHANGING IN MY QUESTION!!! Here’s my question btw in case anyone can help here before I smash up my laptop 😑:

I have a piece of code in which I am trying to read in words which have been categorised using a number and then placed in a text file in the following format "word-number-" with a new line for each word. However, despite not mixing cin>> and getline and having tried a number of methods I still cannot get it working.

So far I have attempted using a cin.ignore() call to clear any '\n' chars from the buffer, as well as checking if the file is opening in the first place (it is), and using the >> operator instead throughout my code; however, I could not get that working either. When I place the getline call inside the condition of the while loop, the while loop doesn't run. When I make the while loop condition a .eof() call it runs once, but when I try to print the text that has been read by the getline call it just prints a blank line.

if(file.is_open()){
    while(!file.eof()){
        getline(file, text, '-');
        count++;
        cout<<count<<endl;
        cout<<text<<endl;
        if(count%2 == 1){
            wordBuff = text;
        }else if(count%2 == 0){
            if(stoi(text) == wordClass){
                wordList.push_back(wordBuff);
            }
        }
    }
    file.close();
}

While I recognise there are a lot of other questions on this out there, I cannot seem to get any of their solutions to work, and the vast number being related to people mixing the >> operator and getline doesn't help, so any tips or solutions will be of great help.

-

So today at work, a dev proposed a solution to a performance problem using divide and conquer. But the way he said it came across like "this is a brilliant, algorithmic solution, I bet you'd never think of this because no one else knows algos".

So then I just replied to him mentioning Big O and how it seems the performance is N^3, i.e. cubic. In which case the optimal size is like 1. But basically like starting an algo discussion to see if he can keep up... Or if he's just dropping some algo slang to look good.

-

Is there any exact way to get the product of all primes under n multiplied together, without explicitly knowing what those primes are?

Let's call this number V.

Because hypothetically, if we calculate from the *base* of V, then we can derive easy divisibility rules for V-1 and V+1, as laid out here:

https://notaboutapples.wordpress.com/...

And then, unless I've misunderstood something, the problem of factorization has been changed from division into an addition and subtraction problem.

-

Math question time!

Okay so I had this idea and I'm looking for anyone who has a better grasp of math than me.

What if instead of searching for prime factors we searched for a number above p?

One with a certain special property. BEAR WITH ME. I know I make these posts a lot and I'm a bit of a shitposter, but I'm being genuine here.

Take this cherry picked number, 697 for example.

Its factors are 17 and 41. It's trivial but just for demonstration.

If we represented its factors as a bit string, where each bit represents the index that factor occurs at in a list of primes, it looks like this

1000001000000

When converted back to an integer that number becomes 4160, which we will call f.

And if we do 4160/(2**n) for n = 1, 2, 3, ... until the result has a fractional component, then n in this case will be 7.

And 7 is the index of our lowest factor 17 (let's call it A, and our highest factor we'll call B) in our primes list.

So the problem is changed from finding a factorization of p, to finding an algorithm that allows you to convert p into f. Once you have f it's a matter of converting it to binary, looking up the indexes of all bits set to 1, and finding the values of those indexes in the list of primes.
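The easy direction (factors to f, and f back to factors) can be sketched in a few lines of Python, which at least confirms the numbers in the 697 example. The helper names are made up, and nothing here addresses the hard part of getting from p to f without already knowing the factors.

```python
def primes_up_to(limit):
    """Sieve of Eratosthenes: all primes <= limit, in order."""
    sieve = [True] * (limit + 1)
    sieve[0:2] = [False, False]
    for i in range(2, int(limit ** 0.5) + 1):
        if sieve[i]:
            sieve[i * i::i] = [False] * len(range(i * i, limit + 1, i))
    return [i for i, is_prime in enumerate(sieve) if is_prime]


PRIMES = primes_up_to(100)


def encode(factors):
    """Set bit (k - 1) of f when the k-th prime (1-based) is a factor."""
    f = 0
    for q in factors:
        f |= 1 << PRIMES.index(q)
    return f


def decode(f):
    """Read the set bits of f back into primes, smallest first."""
    return [PRIMES[k] for k in range(f.bit_length()) if f >> k & 1]


def lowest_index(f):
    """Smallest n for which f / 2**n is fractional (the post's index of A)."""
    if f <= 0:
        raise ValueError("f must be a positive integer")
    n = 1
    while f % (2 ** n) == 0:
        n += 1
    return n


f = encode([17, 41])
print(f, bin(f)[2:], lowest_index(f), decode(f))
# 4160 1000001000000 7 [17, 41]
```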

I'm working on doing that and if anyone has any insights I'm all ears.

-

Compromise.

I think that sums up development pretty much.

Take for example coding patterns: Most of them *could* be applied on a global scale (all products)… But that doesn't mean you *should* apply them. :-)

Find a matching **compromise** that makes specific sense for the product you develop.

Small example: SOLID / DRY are good practices. But breaking these principles by for example introducing redundant code could be a very wise design decision - an example would be if you know full ahead that the redundancy is needed for further changes ahead. Going full DRY only to add the redundancy later is time spent better elsewhere.

The principle of compromise applies to other things, too.

Take for example architecture design.

Instead of trying to enforce your whole vision of a product, focus on key areas that you really think must be done.

Don't waste your breath on small stuff - cause then you probably lack the strength for focusing on the important things.

Compromise - choose what is *truly* important and make sure that gets integrated vs trying to "get your will done".

Small example: It doesn't really matter if a function is called myDingDong or myDingDongWithBells - one is longer, the other shorter. Refactoring tools make renaming a function an easy task. What matters is what this function does and that it does this efficiently and precisely. Instead of discussing the *name* of the function, focus on what the function *does*.

If you've read so far and think this example is dumb: Nope... I've seen PR reports where people struggled for hours with lil shit while the elephant in the room like an N+1 problem / database query or other fundamental things completely drowned in the small shit discussion noise.

We had code design, we had architecture... Same goes for people, debugging, and everything else.

Just because you don't like what weird person A does, doesn't mean it's shit.

Compromise. You don't have to like them. Just tolerate them. Listen. Then try to process their feedback unbiased. Simple as that. Don't make discussions personal - and don't isolate yourself by just working with specific persons. Cause living in such a bubble means you miss out a lot of knowledge and insight… or in short: You suck because of your own choices. :-)

Debugging... Again compromise: instead of wasting hours on debugging a problem, ASK for help. A simple "Has anyone debugged this before, or does anyone have input on how to debug this problem efficiently?" can sometimes work wonders. Don't start debugging without looking into alternative solutions like telemetry, metrics, known problems etc.

It could be a viable, better long term solution to add metrics to a product than to debug for hours ... Compromise. Find a fitting approach to analyze a problem instead of just starting a brute force approach.

....

Et cetera et cetera.

-

mann.. 5 years after graduating, and i still feel that dsa rounds are one tough cookie.

i didn't do dsa for the last 5 years as i was busy in my daily dev life. then recently i planned for a switch, started preparing ds algo, got myself into a classroom course and now it's been over 1 month and stuff just ... flies out of mind

like, our class has only covered arrays, searching, sorting and string based questions till now. and since my interview is tomorrow i thought of going back to the classroom notes and revising all the questions.

and i can hardly remember 10/50!

I clearly remember understanding those questions well, putting comments and examples on the functions and doing them on my own without copying the teacher's code.

now all those notes are taking time to get back into memory. feels like i am dependent on checking the solution again n again to complete the problem.

this is truly scary. as of now i haven't even had classes for advanced topics like recursion trees or graphs. the course will probably cover 400-500 problems.

if i go blank after not doing these questions for a few months, how am i supposed to crack coding interviews which are usually scheduled within 2-3 days of notice?

-

If we can transform the search space or properties of a product into a graph problem

we could possibly use Kirchhoff's theorem to reveal products which are 'low complexity'

in particular search spaces, yeah?

Now according to

https://en.wikipedia.org/wiki/...

"In Cycle Space, A family of sets closed under the symmetric difference operation can be described algebraically as a vector space over the two-element finite field $\mathbb{Z}_2$. This field has two elements, 0 and 1, and its addition and multiplication operations can be described as the familiar addition and multiplication of integers, taken modulo 2"

Wouldn't this relate to Pollard's algorithm, because it involves looking for factors of coprimes modulo N, or am I mistaken?

Now, according to wikipedia, "in a group, the additive identity is the identity element of the group, is often denoted 0, and is unique."

If we make the multiplicative identity of our ring or field a tuple of the ratio of a/b for some product p, or a (and a/w, where w is the square root of p), or any other set such that n*m allows us to derive a or b, we could reduce the additive identity to the multiplicative identity, making the ring trivial. Solving for p would then mean finding a function from R to R, mapping every number to 0, i.e. finding the additive identity.

Now in a system with a multiplication operation that distributes over addition, the "additive identity annihilates ring elements", so naturally, the function that maps to 0 gives us our additive identity; we need only find the subset, no?

Forgive me if I'm wrong, but shouldn't this be convertible to a graph search?

I'm WAY out of my depth here so if anyone is familiar and can enlighten me I'd be grateful.

It's all unknown unknowns to me.

-

RECOVER LOST CRYPTO WITH THE HELP OF FUNDS RECLAIMER COMPANY

I'm a logical individual, I assure you. I don't believe in conspiracies, in reading minds, in messages from the universe "sending me messages." But in hindsight, the universe wasn't sending messages at all – it was holding a sign in my front lawn, screaming at me to pay attention!

My three disparate friends—*in altogether disparate professions—*all mentioned FUNDS RECLIAMER COMPANY in one and the same month, no less. First, my finance buddy told me about how they recovered his $150,000 following a phishing attack. Next, a technology buddy waxed poetic about getting recovered his compromised wallet a week afterward. And then, out of nowhere, my fitness trainer (yes, my fitness trainer) mentioned them when I grumbled through leg day at the gym.

I could have taken down my contact information at that point, but no, I simply chuckled. "Wow, these guys must have been pretty darn talented." And then I continued with my totally secure, totally unpenetrable life in crypto.

And then one morning, I signed in to my wallet and saw the "incorrect password" message I'd been dreading. No problem—I tried again. And then again. And then yet again. With each failure, I crept ever-closer towards a full-fledged meltdown in life.

And then I considered, "No problem, I have my backup key stored!" Except I hadn't saved it anywhere useful; in my hyper-care about being ultra-secure, I'd buried it somewhere so secure even I couldn't remember!

And at that point, full-blown panic moved in and started unboxing its bags. $300,000. Gorno.

My head careened out of control. Perhaps I could meditate? Stupidity, I know. Perhaps I could scream? Tempting, I must admit.

Perhaps I could—OH. WAIT

I remembered FUNDS RECLIAMER COMPANY. Same name, three times in one month, appearing in my life. All at once, my three friends no longer seemed mad. I took out my phone and called them.

From my first conversation, I could trust I was in safe hands. Their team sounded relaxed, professional, and obviously in charge of a routine activity. They questioned me with all proper questions, analyzed my case, and began working immediately.

A couple of days later, I received a message: "We recovered your wallet." I sat down in a heap, full of a mix of joy and disbelief at having my life restored in one go. I sent a same message to all three friends: "Fine, you were correct." Their smug messages popped in at once.

Moral lesson? In case three disparate persons report about a single issue, it is no fluke but a heads-up. And when that issue turns out to be FUNDS RECLIAMER COMPANY, make a call even before a disaster can unfold.

Email: fundsreclaimer(@) c o n s u l t a n t . c o m

Email: fundsreclaimercompany@ z o h o m a i l . c o m

WhatsApp:+1 (361) 2 5 0- 4 1 1 0

Website: h t t p s ://fundsreclaimercompany . c o m