Details

-

About: JAD ranting forever...

-

Skills: I don't know what I know

-

Location: India

-

Github

Joined devRant on 10/16/2016

-

I was frantically trying to figure out where an unwanted full stop next to a dropdown in my UI was coming from. It was some dust on my screen.

-

There are exactly three variations of the % symbol:

% percent, 100

‰ per mille, 1000

‱ permyriad, 10000

and per cent mille, 100000, doesn’t have a symbol anymore, just an abbreviation: pcm
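For reference, the three signs are separate Unicode characters, and each one simply scales a ratio by a different power of ten. The Python sketch below prints each sign's code point and official Unicode name, then expresses one ratio with all three. The `SIGNS` table and the `express` helper are illustrative names, not anything from the post.

```python
import unicodedata

# Each sign and the denominator it stands for.
SIGNS = {
    "%": 100,      # percent
    "‰": 1_000,    # per mille
    "‱": 10_000,   # permyriad
}

def express(ratio: float, sign: str) -> str:
    """Format a plain ratio (e.g. 0.0125) using the given sign."""
    return f"{ratio * SIGNS[sign]:g}{sign}"

for sign, denom in SIGNS.items():
    # Code point in U+XXXX form plus the character's Unicode name
    print(f"U+{ord(sign):04X} {unicodedata.name(sign)}: 1/{denom}")

ratio = 0.0125
print(", ".join(express(ratio, s) for s in SIGNS))  # 1.25%, 12.5‰, 125‱
```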

We can’t even go in the other direction and reduce the number.

Per deca, 10, could be % without the second circle, like °/, but it doesn’t exist as a single character.

-

The Classic “Production Bug” Rant

Me: “It’s just a small change. Won’t affect production.”

Production: dies instantly

Manager: “Can you fix it ASAP?”

Me: “Already on it.”

(starts googling “how to fix production after a small change”)

Moral of the story: Never say “small change” out loud. The servers can hear you.

-

Client: “We need an app that tracks live birds using AI.”

Me: “Cool, that’s complex. What’s the timeline?”

Client: “We need it before our annual picnic next week.”

Me: “You want an AI that can detect flying birds, in real time, in seven days?”

Client: “It’s not that hard. Just use ChatGPT or something.”

So now I’m here, watching pigeons on my balcony, manually updating a Google Sheet, calling it “AI prototype v1.0.”

I think I’ve finally achieved “Agile Enlightenment” — deliver results, not features.

Client’s happy.

My soul isn’t.

Time to rename the project: BirdBrain.

-

Let me get this straight.

There's devRant, then came Element (Matrix), then came snek and now there's mydevplace.

Pretty soon we'll have more platforms than users using them. Everyone will sit alone in their chatrooms talking to themselves.

It's sad.

-

Android dev here.

Been working on this team for one year now. Greatest collaborators I've ever worked with. Feels like we're all working off of the same brain. But this is not about my team. Parallel to ours, there's the iOS team. And if I said they're dysfunctional, that'd still be underselling it.

They're at least 2 sprints behind Android on the same features. The junior devs are trying their best to sync up and code their way through it. But the senior guy has a stick up his bum or something: he keeps shooting down PRs left and right. The iOS guys come up to me (I'm the only Android dev in the office; the rest of the Android team is at a different site) asking for logic changes, UI changes and the like. Although I do have some iOS experience, I can't really get involved, because it's a different codebase and team. It feels very much like office politics.

I feel sorry for that team. It really makes you grateful to have good teammates. Any advice I could pass on to them would be welcome.

-

Final benchmark for now. I think I've invested (wasted :P) enough time optimizing this.

Finally got it below 100 ms on my system:

***benchmarking***

Time C: 0.82965087890625

[src/main.rs:407:2] num_threads = 24

[src/main.rs:408:2] num_cores = 24

Time Rust: 0.09551429748535156

Time CPP: 0.4009711742401123

Time Borded CPP: 0.6333107948303223

Time Jest Rust: 0.28380441665649414

***end benchmark***
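To put the log in perspective, here is a quick Python sketch that turns the reported timings into speedups relative to the C version. It assumes the values are in seconds (consistent with the sub-100 ms Rust figure) and only reuses the numbers printed in the log; it does not re-run anything.

```python
# Timings copied from the benchmark log (assumed to be seconds).
timings = {
    "C": 0.82965087890625,
    "Rust": 0.09551429748535156,
    "CPP": 0.4009711742401123,
    "Borded CPP": 0.6333107948303223,
    "Jest Rust": 0.28380441665649414,
}

baseline = timings["C"]

# Sort fastest first and report each entry's speedup over the C version.
for name, secs in sorted(timings.items(), key=lambda kv: kv[1]):
    print(f"{name:<11} {secs * 1000:8.1f} ms  {baseline / secs:5.2f}x vs C")
```

On these numbers the Rust version comes out roughly 8.7 times faster than the C one.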

And ignore the two debug prints. For some godforsaken reason, removing them makes it slower by about 5%, for..... reasons..... idk lol

-

PRO TIP: Always save the user password client side, validate it there and send a boolean to the server. It reduces backend load times and unnecessary calculations/computations.
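The tip is sarcasm, of course: a server can't trust a boolean from the client, because anyone can send `true` without knowing the password. For contrast, here is a minimal standard-library Python sketch of the conventional approach, where the server stores a random salt plus a PBKDF2 hash and verifies every login itself. The function names and the 600,000-iteration work factor are illustrative choices, not something from the post.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000  # PBKDF2-HMAC-SHA256 work factor; tune for your hardware

def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, derived_key) to store server-side instead of the password."""
    salt = os.urandom(16)
    key = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return salt, key

def verify_password(password: str, salt: bytes, stored_key: bytes) -> bool:
    """Re-derive the key on the server and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return hmac.compare_digest(candidate, stored_key)

salt, key = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", salt, key))  # True
print(verify_password("true", salt, key))  # False
```

The deliberately slow key derivation is the point: it costs the server a little per login but makes brute-forcing a leaked hash expensive.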

-

A PCIe Gen 7 x1 slot has roughly the same data rate as a Gen 3 x16 slot.
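The arithmetic behind that: the per-lane transfer rate doubles with each generation, from 8 GT/s on Gen 3 to 128 GT/s on Gen 7, so four doublings make one Gen 7 lane match sixteen Gen 3 lanes. The Python sketch below compares raw aggregate rates. It deliberately ignores line-encoding and protocol overhead (128b/130b on Gen 3, FLIT mode on Gen 7), so its GB/s figures are rough upper bounds per direction; the helper names are illustrative.

```python
# Raw per-lane transfer rates in GT/s; each generation doubles the previous one.
GT_PER_LANE = {3: 8, 4: 16, 5: 32, 6: 64, 7: 128}

def raw_rate_gts(gen: int, lanes: int) -> int:
    """Aggregate raw transfer rate of a link, in GT/s."""
    return GT_PER_LANE[gen] * lanes

def approx_gbytes(gen: int, lanes: int) -> float:
    """Rough GB/s per direction, treating 1 GT/s as 1 Gbit/s per lane.
    Ignores line encoding and protocol overhead."""
    return raw_rate_gts(gen, lanes) / 8

for gen, lanes in [(7, 1), (3, 16)]:
    print(f"Gen {gen} x{lanes}: {raw_rate_gts(gen, lanes)} GT/s, "
          f"~{approx_gbytes(gen, lanes):.0f} GB/s")
```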

Let me guess what that much bandwidth will ever be used for: AI 😑

-

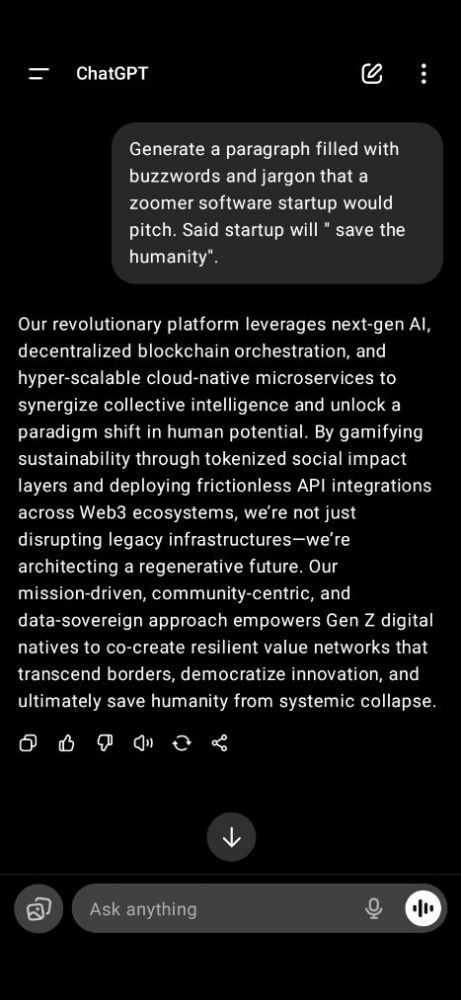

Is this so different from a tech startup presentation?

Our revolutionary platform leverages next-gen AI, decentralized blockchain orchestration, and hyper-scalable cloud-native microservices to synergize collective intelligence and unlock a paradigm shift in human potential. By gamifying sustainability through tokenized social impact layers and deploying frictionless API integrations across Web3 ecosystems, we’re not just disrupting legacy infrastructures; we’re architecting a regenerative future. Our mission-driven, community-centric, and data-sovereign approach empowers Gen Z digital natives to co-create resilient value networks that transcend borders, democratize innovation, and ultimately save humanity from systemic collapse.

-

Turns out it wasn't the RAM or the graphics card but the barely used NVMe drive (which I recently started using as my Linux boot drive) that was causing random crashes.

Very tempted to just replace the whole thing anyway...

Nothing too important on there other than my bash history and LLM models.

-

How did devRant find its initial users? In other words, how did it solve the network cold-start problem?

For my startup, my concern is that users join only when other users / content creators / friends are already on a platform, while content creators join only platforms that already have users... Any tips on solving this?

-

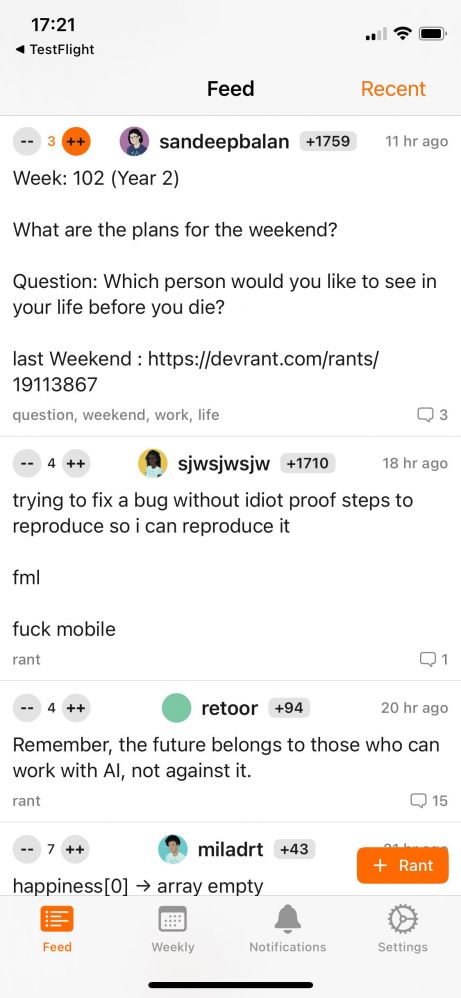

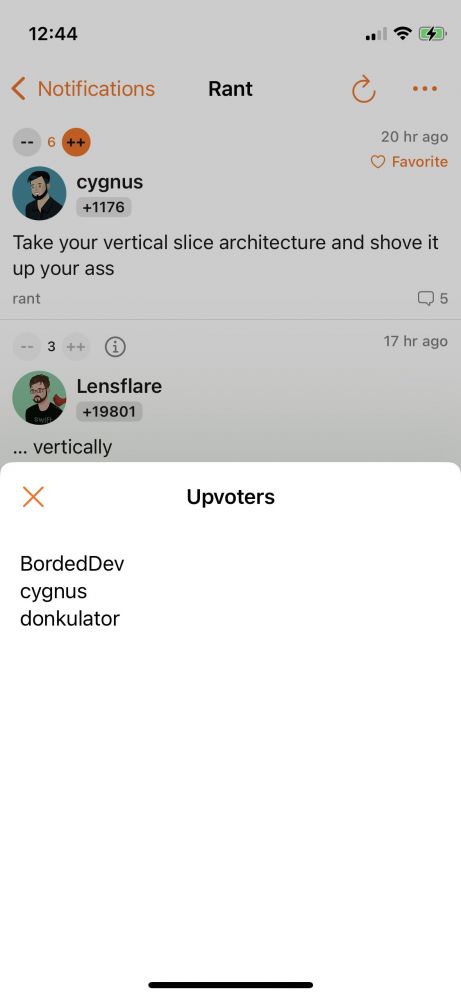

JoyRant build 43

You can now see who upvoted your comment.

This works by pulling the info from the notifications list, so it won’t work for old upvotes that are no longer in the notifications list.
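A rough Python sketch of that approach: keep only the vote notifications that point at the given comment and collect their usernames. The `Notification` shape, the `"comment_vote"` type string and the field names are hypothetical stand-ins, not JoyRant's code or the exact devRant API. The sketch also shows why upvotes that have already dropped out of the list can't be recovered: they simply aren't in the input.

```python
from __future__ import annotations

from dataclasses import dataclass

@dataclass
class Notification:
    # Hypothetical fields; the real notification payload may differ.
    type: str                # e.g. "comment_vote", "comment_mention", ...
    comment_id: int | None
    username: str

def upvoters_for_comment(notifications: list[Notification], comment_id: int) -> list[str]:
    """Usernames that upvoted `comment_id`, as far as the notification list remembers.
    Older votes that have aged out of the list are missing."""
    seen: set[str] = set()
    voters: list[str] = []
    for n in notifications:
        if n.type == "comment_vote" and n.comment_id == comment_id and n.username not in seen:
            seen.add(n.username)
            voters.append(n.username)
    return voters

notifs = [
    Notification("comment_vote", 42, "alice"),
    Notification("comment_mention", 42, "bob"),
    Notification("comment_vote", 7, "carol"),
    Notification("comment_vote", 42, "dave"),
]
print(upvoters_for_comment(notifs, 42))  # ['alice', 'dave']
```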

Forgot to also add this for rants. Will add later.

Complete list of changes:

* notifications from all tabs load at once

* info button about who upvoted my comment

* comments counter for own rants

* no reply button for own comments

* ignore users locally (added in build 42)

-

I was an intern when I joined here 10 years ago... and now I'm looking to launch a startup (related to gaming). Would you be willing to give some feedback?

We're less than a month away from launching the closed beta, and 78% of the survey responses were positive.

I'd appreciate it if you took a look here:

https://tally.so/r/wAgvLN

-

For those of you who conduct technical interviews: how common is it to see candidates bomb the interview?