
Search - "cache issues"

-

Today we have an exciting devRant announcement! As many observant members of the community have probably noticed, since launch we've been using the domain name devrant.io because the .com was already taken. Today, we're happy to announce that we now own devrant.com and it is now the official devRant URL!

How did this happen, you ask? The devrant.com domain was already owned by a developer named Wiard when we launched devRant. It took a while to track him down, but when we did, it turned out he saw the good we were doing and wanted to help the devRant community by generously offering us the .com domain for a very reasonable exchange (considering that we are a self-funded, bootstrapped startup!).

Since Wiard recently started writing a blog on devrant.com, he had to find a new home for it. His new blog is https://sysrant.com and I encourage everyone to check it out! Great topical/educational dev/sys-admin related articles? Check. Someone who cares about the devRant community and allowed us to leave the fiery hell that is .io? Check. So check it out!!

Some technical info:

This change is immediate, and all non-API requests to devrant.io will now redirect to devrant.com. We might have missed a few things (purposely or accidentally), so we're going to go through and convert anything that's left. If you use the devRant API, your implementation should not break, since API requests are excluded from the redirect for now, but I highly recommend switching any API URLs to https://devrant.com so you can avoid issues in the future if we decide to stop serving API requests on devrant.io. One more note: for about a minute after we turned on the redirect, some API requests to devrant.io might have been 301 redirected to devrant.com. If an app you were using broke, try clearing whatever cache the 301 redirect might be stored in and the issue should go away.
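To make the redirect rule concrete (non-API requests on devrant.io get a 301 to devrant.com, API requests pass through), here's a minimal Python sketch of the decision logic. It is not devRant's actual server config, and the assumption that API routes live under `/api/` is ours.

```python
from __future__ import annotations

from urllib.parse import urlsplit, urlunsplit

OLD_HOSTS = {"devrant.io", "www.devrant.io"}
NEW_HOST = "devrant.com"
# Assumption: API routes live under /api/; the real routing rules may differ.
API_PREFIX = "/api/"


def redirect_for(url: str) -> tuple[int, str] | None:
    """Return (status, location) if the request should be redirected, else None."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    if host not in OLD_HOSTS:
        return None  # already on the new domain (or another host): serve normally
    if parts.path.startswith(API_PREFIX):
        return None  # API requests are excluded from the redirect for now
    # Permanent redirect to the canonical host, keeping path and query string.
    location = urlunsplit(("https", NEW_HOST, parts.path or "/", parts.query, ""))
    return 301, location
```

Because 301 is a permanent redirect, clients are allowed to cache it. That is why API requests that got redirected during that one minute could keep misbehaving until the cached redirect was cleared.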

Feel free to post any questions you might have here (and please let me know about any issues you might discover!), and once again, huge thanks to Wiard!

-

I absolutely HATE "web developers" who call you in to fix their FooBar'd mess, yet can't stop themselves from dictating what you should and shouldn't do, especially when they have no idea what they're doing.

So I get called in to a job improving the performance of a Magento site (and let's just say I have no love for Magento for a number of reasons) because this "developer" enabled Redis and expected everything to be lightning fast. Maybe he thought "Redis" was the name of a magical sorcerer living in the server. A master conjurer capable of weaving mystical time-altering spells to inexplicably improve the performance. Who knows?

This guy claims he spent "months" trying to figure out why the website couldn't load faster than 7 seconds at best, and his employer is demanding a resolution so they stop losing conversions. I usually try to avoid Magento because of all the headaches that come with it, but I figured "sure, why not?" I mean, he built the website less than a year ago, so how bad can it really be? Well...let's see how fast you all can facepalm:

1.) The website was built brand new on Magento 1.9.2.4...what? I mean, if this were built a few years back, that would be a different story, but building a fresh Magento website in 2017 in 1.x? I asked him why he did that...his answer absolutely floored me: "because PHP 5.5 was the best choice at the time for speed and performance..." What?!

2.) The ONLY optimization done on the website was Redis cache being enabled. No merged CSS/JS, no use of a CDN, no image optimization, no gzip, no expires rules. Just Redis...

3.) Now, to say the website was poorly coded would be an understatement. This wasn't the worst coding I've seen, but it was far from acceptable. There was no organization whatsoever. Templates and skin assets were being called from 12 different locations on the server, which made tracking down a snippet to fix downright annoying.

But not only that: the home page itself ran 83 custom database queries to load its products. He said this was so he could load products from several different categories and custom tables to show on the page. I asked him why he didn't just write a few JOIN queries, and he had no idea what I was talking about.
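For anyone wondering what the JOIN alternative looks like, here's a minimal sqlite3 sketch with a hypothetical `categories`/`products` schema (Magento's real EAV tables are far more involved). The first function does what the home page did, one query per category plus a lookup, and the second gets the same rows in a single JOIN.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Build a tiny in-memory catalog (hypothetical schema for illustration)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE categories (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT,
                               category_id INTEGER REFERENCES categories(id));
        INSERT INTO categories VALUES (1, 'Shoes'), (2, 'Hats'), (3, 'Bags');
        INSERT INTO products VALUES (1, 'Boot', 1), (2, 'Sneaker', 1),
                                    (3, 'Beanie', 2), (4, 'Tote', 3);
        """
    )
    return conn


def home_page_per_category(conn, category_ids):
    """Two round trips per category: this is how query counts balloon."""
    rows, queries = [], 0
    for cid in category_ids:
        (cat_name,) = conn.execute(
            "SELECT name FROM categories WHERE id = ?", (cid,)
        ).fetchone()
        queries += 1
        for (prod_name,) in conn.execute(
            "SELECT name FROM products WHERE category_id = ? ORDER BY id", (cid,)
        ):
            rows.append((cat_name, prod_name))
        queries += 1
    return rows, queries


def home_page_join(conn, category_ids):
    """Same rows, one query. Only the placeholders are formatted in; values stay bound."""
    placeholders = ",".join("?" * len(category_ids))
    sql = (
        "SELECT c.name, p.name FROM products p "
        "JOIN categories c ON c.id = p.category_id "
        f"WHERE c.id IN ({placeholders}) ORDER BY c.id, p.id"
    )
    return conn.execute(sql, list(category_ids)).fetchall(), 1
```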

4.) Almost every image on the website was a .PNG file, 2000x2000 px and lossless. The home page alone was 22MB just from images.

There were several other issues, but those 4 should be enough to paint a good picture. The client wanted this all done in a week for less than $500. We laughed. But we agreed on the price, only because of a long relationship and because they had gotten us in the door with some referrals. We told them, though, that it would get done on our time, not theirs. So I copied the website to our server as a test bed and got to work.

After numerous hours of bug fixes, recoding queries, disabling Redis in favor of a larger InnoDB cache (more on that later), image optimization, JS/CSS/HTML combining, render-unblocking and minification, lazy-loading images, tweaking Magento to work with PHP 7, installing OPcache and setting up basic .htaccess optimizations, we smashed the loading time down to 1.2 seconds total, and most of that time was spent on external JavaScript plugins deemed "necessary". Time to First Byte went from a staggering 2.2 seconds to about 45ms. Needless to say, we kicked its ass.

So I show their developer the changes and he's stunned. He says he'll tell the hosting provider to set up a new server so we can migrate the optimized site over and cut over to it, because taking the live website down for maintenance for even an hour or two in the middle of the night is "unacceptable".

So, trying to be cool about it, I tell him I'd be happy to configure the server to the exact specifications needed. He says "we can't do that". I look at him, confused. "What do you mean we 'can't'?" He tells me that even though this is a dedicated server, the provider doesn't allow any access other than a jailed shell account and cPanel access. What?! This is a company averaging 3 million+ per year in revenue. Why don't they have an IT manager overseeing everything? Apparently they're too cheap for that, so they went with a "managed dedicated server", "managed" apparently meaning "you only get to use it like a shared host".

So after countless phone calls arguing with the hosting provider, they agree to make our changes. Then the client's developer starts getting nasty out of nowhere. He says my optimizations are not acceptable because I'm not using Redis cache, and now the client is threatening to walk away without paying us.

So I guess the overall message from this rant is not so much about the situation, but the developer and countless others like him that are clueless, but try to speak from a position of authority.

If we as developers stop challenging each other to a measuring contest and learn to let go when we need help, we can get a lot more done and avoid losing clients. </rant>

-

Holy donkey nuts, I get too scared to leave unpushed code when I take a coffee break.

https://webcache.googleusercontent.com/...

-

3 rants for the price of 1, isn't that a great deal!

1. HP, you braindead fucking morons!!!

So recently I disassembled this HP laptop of mine to unfuck it at the hardware level. There were some issues with the hinge that I had to solve, so I had to disassemble not only the bottom of the laptop but also the display panel itself. Turns out that HP - being the certified enganeers they are - made the following fuckups, with probably many more that I haven't even noticed yet.

- They used fucking glue to ensure that the bottom of the display frame stays connected to the panel. Cheap solution to what should've been "MAKE A FUCKING DECENT FRAME?!" but a royal pain in the ass to disassemble. Luckily I was careful and didn't damage the panel, but the chance of that happening was most certainly nonzero.

- They connected the ribbon cables for the keyboard in such a way that you have to reach all the way into the space between the keyboard and the motherboard to connect the bloody things. And leaving some extra slack on the ribbon cables, so there's room to actually connect the bloody things easily during servicing? As Carlos Mantos would say it - M-m-M, nonoNO!!!

- Oh and let's not forget an old flaw that I noticed ages ago in this turd. The CPU goes straight to 70°C during boot-up, but turning on the fan? Again, M-m-M, nonoNO!!! Let's just get the bloody thing to overheat, freeze completely and force the user to power cycle the machine, right? That's gonna be a great way to make them satisfied, RIGHT?! NO MOTHERFUCKERS, AND I WILL DISCONNECT THE DATA LINES OF THIS FUCKING THING TO MAKE IT SPIN ALL THE TIME, AS IT SHOULD!!! Certified fucking braindead abominations of engineers!!!

Oh and not only that, this laptop is beaten by a Raspberry Pi 3B in performance, thermals, price and product quality.. A FUCKING SINGLE BOARD COMPUTER!!! Isn't that a great joke? Someone here mentioned earlier that HP and Acer seem to have been competing for a long time to make the shittiest products possible, and boy they fucking do. If there's anything that makes both of those shitcompanies remarkable, that'd be it.

2. If I want to conduct a pentest, I don't want to have to relearn the bloody tool!

Recently I did a Burp Suite test to see how the devRant web app logs in, but because my Burp Suite is the Community Edition, I couldn't save the project. Fucking amazing, thanks PortSwigger! And I couldn't recreate the results anymore due to what I think is a change in the web app. But I'll get back to that later.

So I fired up bettercap (which works at lower network layers and can conduct ARP poisoning and DNS cache poisoning) with the intent to ARP poison my phone and get the results straight from the devRant Android app. I haven't used this tool since around 2017, because I kinda lost interest in offensive security. When I fired it up again a few days ago on my PTbox (which is a VM somewhere else on the network) and again today on my newly recovered HP laptop, I noticed that both hosts now have an updated version of bettercap in which the options have completely changed. It's now got different command-line switches and an interactive mode. Needless to say, I have no idea how to use this bloody thing anymore and don't feel like learning it all over again for a single test.

Maybe this is why users often dislike changes to the UI, and why some sysadmins refrain from updating their servers? When you have users of any kind, you should at all times honor their installations and give them time to change their individual configurations (tell them that they should!). In other words, give them a grace period, and allow for backwards compatibility for as long as feasible.
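The "grace period" idea can be made concrete: keep accepting the old switch, map it onto the new option, and warn. This is a minimal argparse sketch; the tool name and both flags (`-I`, `--iface`) are hypothetical, not bettercap's actual options.

```python
import argparse
import warnings


class DeprecatedAlias(argparse.Action):
    """Accept an old flag, store its value under the new option's dest, and warn."""

    def __init__(self, option_strings, dest, replacement, **kwargs):
        self.replacement = replacement  # the flag users should switch to
        super().__init__(option_strings, dest, **kwargs)

    def __call__(self, parser, namespace, values, option_string=None):
        warnings.warn(
            f"{option_string} is deprecated and will be removed; use {self.replacement}",
            FutureWarning,
            stacklevel=2,
        )
        setattr(namespace, self.dest, values)


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(prog="sniffer")  # hypothetical tool
    parser.add_argument("--iface", dest="iface", help="network interface to use")
    # Old spelling kept working during the grace period, hidden from --help.
    parser.add_argument(
        "-I", dest="iface", action=DeprecatedAlias, replacement="--iface",
        help=argparse.SUPPRESS,
    )
    return parser
```

Old scripts keep working and get a nudge to migrate, and the alias can be dropped once the grace period is over.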

3. devRant web app!!

As mentioned earlier, I tried to capture the web app's login flow with Burp Suite, but every time I try to log in with its proxy enabled, it doesn't open the login form and instead just makes a GET request to /feed/top/month?login=1 without ever letting me actually log in. This happens in both Chromium and Firefox, on Windows and Arch Linux. Clearly this is a change to the web app, and a very undesirable one, especially considering that the login flow for the API isn't documented anywhere as far as I know.

So, can this update to the web app be rolled back, or merged back to an older version of that login flow, or can I at least find out how I'm supposed to log in to this API so I can start developing my own client?

-

when you wake up saturday noon just to see your phone having 10 missed calls from the same unrecognized number, dial back and find it to be a mad client,

complaining about some graphic issues on a site you have nothing to do with.

checking the site, there is nothing wrong, so you tell him to clear his browser cache.

he gets mad, shouting that a silly programmer shall not tell him what to do with his computer and that it's the site, not his browser.

i ask him if there is the same issue with another browser or computer..

he giggles a little, then turns silent..

2 mins or so later, he says "i'm gonna let your boss know about this" and hangs up..

-

Boss wants to scale our webservers because it seems they're having performance/capacity issues....

I'VE BEEN TELLING HIM FOR WEEKS IT'S NOT THE SERVERS!!! IT'S THE FACT THAT EVERY SINGLE QUERY HITS A SINGLE MONGODB... AND NO CACHE EITHER... AND THE DB CAN'T BE ENTIRELY LOADED INTO MEMORY AS IT'S TOO BIG FOR RAM ON A SINGLE SERVER...

HOW THE FUCK CAN YOU SCALE IF EVERYTHING HAS A DEPENDENCY ON 1 NON-DISTRIBUTED DATABASE?

-

Spent 4 hours debugging a “button” style that worked fine locally but not in production!

After ruling out “cache” issues, “browser” versions, “fonts” and “sass errors”, it turned out the culprit was a stupid Chrome extension that appended a CSS class attribute to the “HTML” tag 😡

And the other developer thought it was part of what was written in the code!!

Hate these kinda plugins that manipulate the DOM 😪

P.S. The plugin is "Grammarly".

-

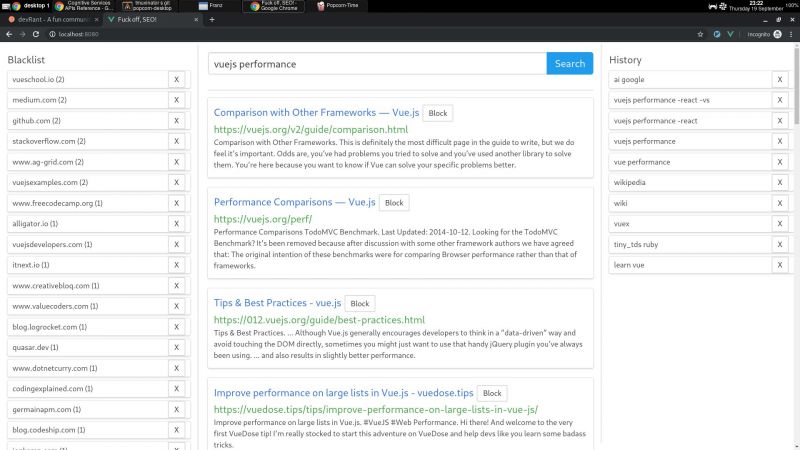

I wrote a Node + Vue web app that consumes the Bing API and lets you block specific hosts with a click, and I have some thoughts I need to post somewhere.

My main motivation for this is that the search results I've been getting from the big search engines are lacking in quality. The SEO situation right now is very complex, but the bottom line is that there is a lot of white hat SEO abuse.

Commercial companies are fucking up the internet very hard. Search results have become way too profit-oriented and thus not neutral. Personal blogs are becoming very rare. Information is losing quality and sites are losing identity. The internet is consolidating.

So, I decided to write something to help me give this situation the middle finger.

I wrote this because I consider the ability to block specific sites a basic universal right. If you were ripped off by a website or you just don't like it, then you should be able to block said site from your search results. It's not rocket science.

Google used to have this feature integrated, but they removed it in 2013. They also had an extension that did this client side, but they removed that too, in 2018. We're years past the time when Google forgot their "Don't be evil" motto.

AFAIK, the only search engine on earth that lets you block sites is millionshort.com, but if you block too many sites, the performance degrades. And the company that runs it is for-profit too.

There is a third-party extension that blocks sites called uBlacklist. The problem is that it only works on Google. I wrote my app to escape Google's tracking clutches, its ads and its annoying products showing up in between my results.

But aside from that, uBlacklist does the same thing as my app, including the limitation that it isn't an actual search engine: it just filters search results after they are generated.

This is far from ideal, because filtering before the results are generated would be much preferable.
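Filtering after the fact, as both uBlacklist and the app do, boils down to dropping results whose host matches a blocklist. Here's a minimal Python sketch. It assumes each result is a dict with a `url` key (the real Bing response shape is different) and that blocking a domain should also block its subdomains.

```python
from __future__ import annotations

from urllib.parse import urlsplit


def host_of(url: str) -> str:
    """Lower-cased hostname of a URL, without a leading 'www.'."""
    host = (urlsplit(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


def is_blocked(url: str, blocked: set[str]) -> bool:
    """True if the host equals a blocked domain or is a subdomain of one.

    Blocked domains are expected lower-case and without 'www.'.
    """
    host = host_of(url)
    return any(host == d or host.endswith("." + d) for d in blocked)


def filter_results(results: list[dict], blocked: set[str]) -> list[dict]:
    """Drop blocked results, keeping the search engine's original order."""
    return [r for r in results if not is_blocked(r["url"], blocked)]
```

Matching on `"." + domain` rather than a plain suffix keeps `notspam.example` from being caught by a block on `spam.example`.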

But for a single person, developing a search engine that both indexes and ranks pages is prohibitively expensive. Which is sad, but I can't do much about it.

I'm also thinking of implementing the ability to promote certain sites, the opposite of blocking, so these promoted sites would get more priority within the results.

I guess I would have to move the promoted sites from all the pages I fetched to the first page(s), client side.
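Client-side promotion could be a stable partition over everything fetched so far: promoted hosts move to the front, and both groups keep Bing's relative order. This is a minimal sketch, assuming results are dicts with a `url` key and a hypothetical `page_size` for re-paging.

```python
from __future__ import annotations

from urllib.parse import urlsplit


def _host(url: str) -> str:
    host = (urlsplit(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


def promote(pages: list[list[dict]], promoted: set[str],
            page_size: int = 10) -> list[list[dict]]:
    """Merge fetched pages, move promoted hosts first, and re-split into pages."""
    flat = [r for page in pages for r in page]

    def is_promoted(r: dict) -> bool:
        h = _host(r["url"])
        return any(h == d or h.endswith("." + d) for d in promoted)

    # sorted() is stable, so each group keeps the original ranking order;
    # False sorts before True, so promoted results come first.
    ranked = sorted(flat, key=lambda r: not is_promoted(r))
    return [ranked[i:i + page_size] for i in range(0, len(ranked), page_size)]
```

The obvious limit is the one mentioned right below: this can only reshuffle what was already fetched, so a promoted site that Bing ranked on page 20 never shows up.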

But this is suboptimal compared to having actual access to the rank algorithm, where you could promote sites in a smarter way, but again, I can't build a search engine by myself.

I'm using Mongo to cache the results, so with a click of a button I can retrieve the results of a previous query without hitting Bing. So far, a couple of cached queries don't seem to cause much of a performance or space issue.
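This is the cache-aside pattern: look the query up first, call the API only on a miss, and store what comes back. Here's a minimal Python sketch that uses an in-memory dict in place of Mongo. `fetch` stands in for the Bing call, and the one-day TTL is an arbitrary assumption.

```python
from __future__ import annotations

import time
from typing import Callable


class QueryCache:
    """Cache-aside store for search results, keyed by the normalized query."""

    def __init__(self, fetch: Callable[[str], list], ttl_seconds: float = 86_400,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch          # the expensive call (Bing, in the app)
        self._ttl = ttl_seconds      # how long a cached result counts as fresh
        self._clock = clock          # injectable for testing
        self._store: dict[str, tuple[float, list]] = {}

    @staticmethod
    def _key(query: str) -> str:
        # "Rust  Lifetimes" and "rust lifetimes" should hit the same entry.
        return " ".join(query.lower().split())

    def search(self, query: str) -> list:
        key = self._key(query)
        now = self._clock()
        hit = self._store.get(key)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]  # fresh entry: no API call
        results = self._fetch(query)  # miss or stale: hit the API
        self._store[key] = (now, results)
        return results
```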

On using Bing: Bing is basically the only reliable API option I could find that was affordable at hobby scale. Most Microsoft products are usually my last choice.

Bing is giving me a 7-day free trial of their search API until I register a credit card (CC). They offer a free tier, but I'm not sure if that's only for these 7 days. Otherwise, I'm gonna need to pay like $5.

Paying or not, having to use a CC to use this software I wrote sucks balls.

So far the usage of this app has resulted in me becoming more critical of sites and finding sites of better quality. I think overall it helps me to become a better programmer, all the while having better protection of my privacy.

One downside is that I'm the only one doing the curating, whereas I could benefit from the block/promote lists of other people I trust.

I will git push it somewhere at some point, but it does require some more work:

I would want to add a docker-compose script to make it easy to start, and I didn't write any tests unfortunately (I did use eslint for both apps, though).

The performance is not excellent (the app hasn't hung so far, but it does make the fans spin after a while), because the algorithms I wrote were very much proof-of-concept.

But it took me some time to write it, and I need to catch some breath.

There are other more open efforts that seem to be more ethical, but they are usually hard to use or just incomplete.

commoncrawl.org is a free index of the web. One problem I found is that it doesn't seem to index everything (for example, it doesn't seem to index the blog of a friend of mine who has been writing for years and is indexed by Google).

It also requires knowing how to read WARC files, which will surely take some time investment to learn.

It also seems kinda slow to respond.

It is also generated only once a month, and I would still have little idea how to implement a PageRank algorithm, let alone code it.
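For what it's worth, the core of PageRank is small. The crawl and the scale are the hard parts. Here's a minimal power-iteration sketch in Python on a toy link graph. It assumes the commonly used damping factor of 0.85 and spreads the rank of pages with no outgoing links evenly over all pages, which is one of several conventions. A real engine layers a lot more on top of this.

```python
from __future__ import annotations


def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             tol: float = 1e-10, max_iter: int = 200) -> dict[str, float]:
    """Power iteration: PR(p) = (1-d)/N + d * sum(PR(q) / outdeg(q) for q -> p)."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(max_iter):
        # Rank held by dangling pages (no outgoing links) is redistributed uniformly.
        dangling = sum(rank[p] for p in pages if not links.get(p))
        new = {p: (1.0 - damping) / n + damping * dangling / n for p in pages}
        for src, targets in links.items():
            if targets:
                share = damping * rank[src] / len(targets)
                for t in targets:
                    new[t] += share
        # Stop once the ranks barely move (L1 distance between iterations).
        if sum(abs(new[p] - rank[p]) for p in pages) < tol:
            return new
        rank = new
    return rank
```

Each iteration keeps the ranks summing to 1, so the result can be read directly as a probability distribution over pages.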

-

First time doing web development for front end AND back end and I just want to say...

FUCK YOU YOU SHITTY ASS BOLLOCK DRIPPINGLY RETARDING CACHE, WHO YOU LOAD THINGS I NO WANT YOU TO LOAD...WHY THO?...

Well that was 2 hours of my life wasted....

-

tl;dr - install ‘Pop!_os’ and try it out if you haven’t yet, it’s pretty damn good!

Heavy Micro$haft user here. I have tried using Ubuntu a bunch of times in the past and fucking regretted it every time. Ran into issues with stupid shit like the apt cache growing exponentially until the drive was full, or the system Python getting borked.

To be fair, I’m 120% certain my dumb-assery is what caused the problems. I’m definitely not trying to blame the OS. But my experience was shitty, even if it was at my own hands lol.

Started playing around with Pop!_os from the System76 team, and I’m seriously in freakin’ love with this OS. It’s clean and performant, and it feels way less buggy, or just more stable somehow. I know it’s based on Ubuntu, but I’ve had a great time using it thus far. I’ve got ansible, docker, aws toolkit, aws cli, sam-cli, vscode, dynamodb-local, serverless, npm and brew set up, and I’m working on Steam now.

Everything has been a breeze and again the system feels really fast and snappy. It feels a lot like mac on the smoothness scale, but snappy like a windows box with beefy hardware specs.

I’m still just in the testing phase on a VM, but I’m seriously thinking about blowing away my windows install for Pop!_os.

(I’ll try Arch someday when I’m up for some hardcore masochism)

-

!dev

TL;DR: Today my phone Kruger&Matz Live 3+ got ebola. Anyone had same issues?

I woke up and unplugged my phone from the charger as always, but it was hot as hell. I wasn't worried; I thought it had heated up because of charging, since I'd plugged it in a few hours before waking up.

Then things got serious. I was unable to use the phone: it froze randomly, opened apps I had hovered over while it was frozen, etc. I thought that was because it was hot, so I turned it off and put it in the fridge (I do that sometimes).

I was leaving the house in an hour, so I hoped that would help. I turned it back on when leaving, but nothing changed and it was getting hot again. I checked processes and deleted apps like mad, thinking it was a bug in an update to one of them, and cleared the cache partition too. That did not help, so I was forced to factory reset. Guess what... same issues.

I tried everything possible, lost all hope and was ready to send it in for service. So I turned it off so it wouldn't burn a hole in my pocket.

A few hours later I was complaining to my dad about the issue and tried to show him what was wrong, but... it was all right again. No freezes, no heating.

Later that day my sister told me she'd had issues with her phone, a Live 3, just like mine. Even weirder, my girlfriend had no issues with her Flow 4+ from the same company.

Two phones from the same company, almost the same product line, with the exact same issues in the same time frame? Any ideas what happened?

-

I've ranted about this before, but here we go again:

Go Plugins.

I was racking my brains trying to figure out how one could possibly implement plugins easily in Go.

I had a look at using RPC, which requires far too much boilerplate to be realistic. I looked at using Lua, but there doesn't seem to be a straightforward way of using it. I was even about to go with WASM (yes, WASM). But then I came across Yaegi ("Yet another elegant Go interpreter"; you heard right: "interpreter"), which is also very easy to use.

There are a few issues (some of which I haven't solved yet), such as flexibility (multiple types of plugins), module support, etc. Fortunately, Traefik just released their plugin system, which is based on Yaegi (same company), and I got to learn a few tricks from them.

Here's how module loading works: the developer vendors their dependencies and pushes them to a repo. The user downloads the repo as a zip and saves it to the plugins folder. I hash the zip, unzip it to a cache, and set the GOPATH for the interpreter to that extracted folder. I then load the module (which is defined by a config file in the folder) and save it for later. This is the relatively easy part.
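As a rough illustration of the hash-and-unzip step (not the Yaegi/GOPATH part), here's a minimal Python sketch. The `cache/<sha256>/` layout and the `plugin.json` manifest name are assumptions; in the setup above, the manifest would be whatever config file defines the module. Keying the folder by the archive's hash means re-downloading the same zip reuses the extracted copy, and a changed zip gets a fresh folder.

```python
from __future__ import annotations

import hashlib
import json
import zipfile
from pathlib import Path


def sha256_file(path: Path) -> str:
    """Hash the archive in chunks so large zips don't have to fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 16), b""):
            h.update(chunk)
    return h.hexdigest()


def extract_plugin(archive: Path, cache_root: Path) -> tuple[Path, dict]:
    """Unzip a plugin into cache_root/<sha256>/ once; return (folder, manifest)."""
    cache_root.mkdir(parents=True, exist_ok=True)
    target = cache_root / sha256_file(archive)
    if not target.exists():
        tmp = target.with_name(target.name + ".tmp")
        with zipfile.ZipFile(archive) as zf:
            # zipfile strips absolute paths and ".." components from member
            # names, but plugins should still be treated as untrusted input.
            zf.extractall(tmp)
        tmp.rename(target)  # only a fully extracted tree gets the final name
    # Hypothetical manifest describing which module to load.
    manifest = json.loads((target / "plugin.json").read_text())
    return target, manifest
```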

The hard part is allowing for different types of plugins. It looks easy, but Go has a strict type system, which makes things complicated. I'm in the process of solving this problem, and so far it should go like this: check that the plugin fits an arbitrary interface, and if it does, we're good to go. I will just have to assert the returned plugin to that interface. I don't like this method for a few reasons, but hopefully with generics it will become a bit cleaner.

-

One of my favorite parts of my job is that I’m not allowed to resolve firewall issues myself. IT ops frequently breaks my firewall config, preventing me from resolving any domain names or running dns queries in general even though I still have connectivity. So I call the support number. Remote Desktop icon appears in the corner of my screen.

“Hi I have connectivity but can’t resolve any domain names”

“Have you tried using your browser, maybe they just block pings”

“Well no because I can ping 8.8.8.8, see?”

“Hmm well have you tried from your browser?”

“Yes.”

“Maybe it’s just an issue with ping traffic”

“Well no because I’m not having issues with icmp traffic. I can still ping 8.8.8.8, see?”

“Hmm that’s weird”

*opens network config, renews dhcp lease*

“But I don’t think that’s relat...”

“I know!”

*opens my command prompt, flushes dns cache*

“But if this were a cache issue the requests wouldn’t take so long to tim...”

“I know.”

(Starting to think he doesn’t know)

“I’ll pass this on to the networking guys”

“Thanks”

Third time this has happened. Every time they claim they didn’t change anything and it fixed itself. Obviously this is not the case, because after networking guys “don’t change anything” it starts working again. Every time they talk to me like I have the technical prowess of an HR rep. Like somehow I’m the only software engineer in the world that doesn’t know what the ping command does.

I’m not upset though. They’re just giving me a great excuse to be completely unproductive on a Monday.

-

That moment you're helping out a colleague with his ticket and stuff isn't working and you ask him.

Hey, you do reset your cache, right?

To which he replies: yes, of course I do.

10 minutes later you finally walk over to him and see his browser open without DevTools.........

-

I have two Laravel apps, both sharing one Redis DB. One has App/Post, the other has App/Models/Blog/Post. When I unserialize models from the Redis cache that were saved by the other app, I get issues because it cannot find the right model to hydrate.

How would you build a custom map to get the right model?

-

Just tried Min https://minbrowser.github.io/min/. Awesome fast, content blocker, easylist, clean as I like it, mounted config & cache to tmpfs. 🙂

Btw: why won't the guys at brave.com fix this annoying bug https://github.com/brave/.... No sandbox, no brave. 😣

-

so my poor old hp bro is starting to give up on me after 3 years. there are both battery and performance issues. I knew its death was near 6 months ago, when i got its keyboard replaced and saw the state of its battery: it was as fat as me. Now it can't live without the wire for more than 30 mins.

apart from that, i'm getting frustrated with the everyday performance of my system. all i open is 50 tabs of chrome and android studio, where bitch gradle generates a billion byte-sized files in a million places and cache folders (and sometimes a terminal and/or files and/or vscode).

I have decent specs tho: i5 7th generation / 8gb ddr4 ram / 2gb nvidia graphics / 1tb hdd. but still my ubuntu gets stuck every time i switch between chrome and AS (maybe i haven't set up the swap partition correctly, or maybe there is an issue due to the ubuntu/windows dual boot).

what should i do? I guess i have to spend some shit on a new battery. But apart from that, i wanted to know about performance. how do i get better performance?

I have heard of solutions like getting an ssd or increasing ram, but am broke af and might not be able to afford a 1tb ssd (yes i do need that much storage, my system is currently at 650-something gb), and about ram, i have heard it doesn't offer much improvement. is that true? What should i do?

-

!dev

I've finally been so agitated at G+ I need somewhere to just vent.

So, for context: what I'm talking about is Google+, or more specifically, the Android app. The website is bad in its own way, but that's neither here nor there. No opinions on the iOS version, as I simply REFUSE to touch iOS.

So anyways. The platform itself honestly is not bad. With competent developers behind it, and them actually listening to their dwindling fucking userbase, they could easily turn it into something successful, but the issue is that they just aren't.

You see, it's almost like they change dev staff every 6 or so months. Why do I believe this? Because the GUI changes about that fucking often. They also have a history of forcing updates without letting you keep an older version, just horrifically slapping on a new and unwelcome skin. This isn't an isolated practice by any means, but it's by far the most prevalent here.

So, now a list of some of the issues the current version has:

-After about a week, the app becomes unusably slow, to the point where it takes about a minute to refresh your home feed or an individual page.

-Searching is never good, always being slow and rarely giving you who you asked for.

-Transparency is non fucking existent. There isn't a development roadmap to speak of, and when something happens we get it second hand from staff in a "G+ help" community.

There is a solution for the first one, going and clearing the data/cache, but really, the end user shouldn't have to regularly do that. Not to mention the storage space Google apps IN GENERAL fucking take up. Why does Google Play Services regularly use 250MB? (For most people, this really isn't much. But when you only get to fucking use 4 GB of internal storage it's a giant fuck you.)

Bah, back to the topic at hand.

There isn't a good solution to searching, or for transparency at the moment.

The spam filter is awful as well. REGULARLY letting obvious spam pass, regularly blocking and filtering genuine users. It's real annoying that the Android app itself doesn't have support for seeing these flags outside of rooting through the settings a bit, but still. The web and iOS versions have this already.

Oh, it also completely lacks a dark mode like most Google apps for some fuckin reason.

That concludes my random 1:30 AM rant about something I have no ability to change, except hope in vain that someone who has the ability to change this forwards this to the developers of G+.

I need a better sleep schedule.

-

Python amuses me sometimes.

Gunicorn has a preload mode. It enables forking...

So Gunicorn starts, when Gunicorn loaded it forks the workers (Uvicorn / FastAPI in my case).

https://github.com/tiangolo/...

So if we add a function that creates the app... this function will be executed before forking, thus the memory at the state of creating the app will be duplicated.

You can thus spawn 40 workers, and they would all have the same ML models.

Or in my case a client who does some things that should only be run by a single thread (with locking).

So the client has a cache: as long as I load the cache during the create_app phase, the cache will be shared between all instances and not created per instance.

It's ... Such a small detail. So simple.

Yet completely fucks my brain.

It's logical, yes. I understand what it does, yes.

But it still makes my brain fart.

-

Anyone here use the Node.js HTTP/2 API? I started working with it the other day and I can serve static files fine with it, but when I try to use its push feature to "bundle" additional resources that the page will need, it doesn't seem to work: the client still requests the resources from the server instead of looking in the "push" cache. Also, the load time seems longer with HTTP/2 than with HTTP/1. Was wondering if anyone else had come across these issues and found workarounds. P.S. I'm using Chrome to test, with https://localhost and some self-signed certs, since browsers only implement HTTP/2 over HTTPS.