
Search - "conundrum"

-

Opens Facebook

Sees ad: "this machine uses AI to make pizza bread".

Closes Facebook

Goes back to sleep

-

Was about to follow a guy doing really interesting work on Twitter, then noticed he was using a light background terminal theme.

-

Suddenly, a strange noise from the kitchen... Hmm, my wife's laptop sounds like it's playing some TV series, but no one is logged in.

Conclusion: Windows Update restarted her computer while it was paused on Netflix. After the reboot, Chrome reopened the last URL, where it autoplays trailers.

Conundrum resolved.

-

So, I decided to post this based on @Morningstar's conundrum.

I'm dissatisfied with the laptop market.

Why THE FUCK should I have to buy a gaming laptop with a GTX 1070 or 1080 to get a decent amount of RAM and a fucking great processor?

I don't game. I program. For clarification, I don't even own a fucking Steam library. Never have I ever bought a game on Steam. And to put the notion that I might have a games library to rest: I run Linux. Antergos (Arch-based) is my daily driver.

So, in 2017 I went on a laptop hunt. I wanted something with decent specs. I ultimately went with the system76 Galago Pro (I love its form factor; it's nice as hell, and people recognize the brand for some fucking reason). Matter of fact, one of my profs wanted to know how I accessed our LMS (Blackboard), and I showed him Chromium... his mind was blown: "It's not just text!"

That aside, why the fuck are Dell and system76 the only ones with decent portables geared towards developers? I hate the prospect of having to buy some clunky-ass Republic of Gamers piece of shit just to have some sort of decent development machine...

This is a notice to OEMs: y'all need to quit making shit hardware and gaming hardware with no mid-range compromise. Shit hardware is defined as "It runs Excel and that's all the consumer needs", and gaming hardware is "Let's put fucking everything in there, including a decent processor, RAM, and a GTX/Radeon card."

True mid-range (good hardware that handles compiling, video editing, and other CPU/RAM-intensive tasks, but NOT graphics-intensive shit like gaming) is hard to come by. Dell offers my definition of "mid-range" through Sputnik's Ubuntu-powered XPS models and what have you, and system76 has a couple of models that I more or less wish I had the money for.

TBH I don't give two fucks about the desktop market. That's a non-issue because I can apply the logic that if you want something done right, do it yourself: I can build a desktop. But not a laptop, at least not in a feasible way.

-

The developer's conundrum:

Do I fix the issue before a user encounters it...

Or do I wait for a user to contact me and then quickly fix it so they think I am super helpful?

-

I'm facing a conundrum. I saw a job posting for <Company A> weeks ago, but wasn't really interested and moved on.

Today I got in touch with a standalone recruitment company that had an exciting job offer. The recruiter refused to tell me the name of the company until he had spoken to me, as they had directed him not to disclose the company's name or any details until he had verified that the candidate was genuine. To my absolute shock and disbelief, it was <Company A>!!!!

So here's the conundrum. External recruiters don't lie; why would they? Clearly this company MUST keep its hiring top secret, and some poor employee didn't get the memo before posting that job publicly. Should I report the <Company A> employee?

I don't want to report them and get them in trouble, but I feel I must in order to help the company fix this leak... before it's too late... and people find out they are hiring!

Thoughts?

-

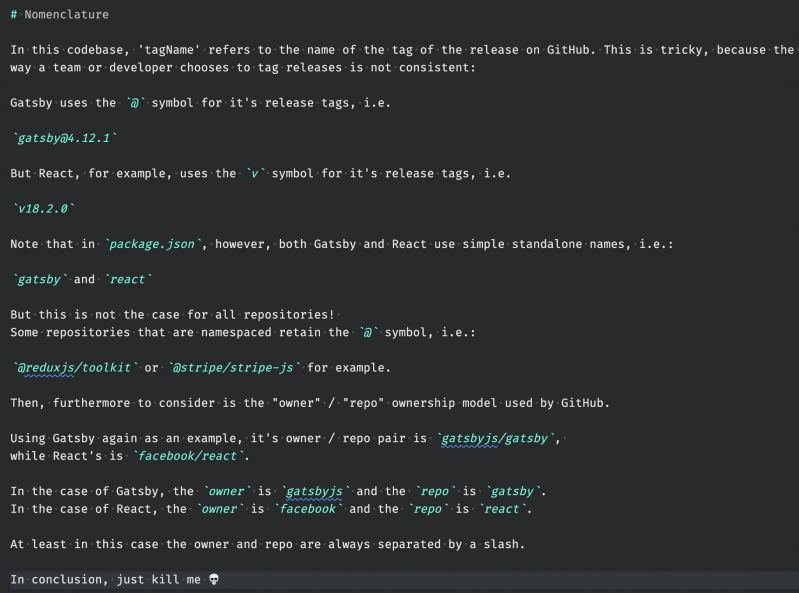

Working on another SaaS product, and now I've run into a "fun" conundrum that is hard to resolve cleanly in an automated fashion.

I'm certain that stupid, opinionated bullshit conventions like this are why so many devs are driven to burnout and bitterness...

-

To those of you who enjoyed the grandiose Gif/Jiff, Sequel/EsQueEll, and Git/Jeet debates comes a new phonetic conundrum: DevREnt vs. DevRUnt. Soon, on monitors near you.

-

What am I going to do today? Whatever the fuck the SYSTEM throws at me... or whatever my manager wants me to waste time on... Ah, programmer life: the conundrum of wanting to do what you like but never getting to, because there are other mountains to climb, with squirrels eating your nuts...

-

I have a whole bag of pills to take that make me sicker instead of better, while I have a lot of studying and writing to do. Doc says I have to be diligent with the pills, but like... what of my goals? Then again, I won't get better if I don't take them. What a conundrum.

-

Ah, the conundrum when a recruiter emails with a genuinely well-matched and reasonable-sounding opportunity (which I often look into), but starts with:

Dear {firstname},

...which, of course, I aim never to reply to, for the same reason I wouldn't expect them to read a covering letter that began "dear {company}".

-

Going through a cosmic conundrum where I am starting to question the very existence of the fabric of time and the possibility of parallel universes containing infinite versions of us...

P.S.: This is what happens when you've watched enough Rick & Morty 😂

-

The Rise and Fall of Helper Classes

A new method doesn't seem to fit into any of the existing classes, so a developer creates a new class and innocently calls it "helper".

Another dev has a similar conundrum and adds a couple more methods to the "helper" class.

And a few more methods added...

A couple more methods surely wouldn't be too bad. It has unit tests anyway.

After a year, the helper class has now grown to about 10,000 lines that no one is brave enough to refactor.

The CTO now says, "OK, let's park this project and build a new one in Go." Fun times!

-

I’m trying to update a job posting so that it’s not complete BS that deters juniors from applying... but honestly, this is so tough... no wonder these postings get so much BS in them...

Maybe the devRant community can help me tackle this conundrum.

I am looking for a junior ML engineer: basically somebody I can offload a bunch of easy, menial tasks to, like “helping data scientists debug their Docker containers”, “integrating some of our models with third-party REST APIs for governance”, “extending/debugging our CI”, and “writing some preprocessing functions for raw data”. I’m not expecting the person to know any of the tech we are using, but they should at least be competent enough to google what “Docker” is or how GitHub Actions works. I’ll be reviewing their work anyhow. The person should also be able to talk to data scientists about accuracy metrics and model inputs/outputs (not so much the deep end of how the models work).

In my opinion, I need either a “mathy person who loves to code” (like me) or a “techy person who’s interested in data science”.

What do you think is a reasonable request for credentials/experience?

-

The production bug conundrum:

The new release that's going to fix the problem isn't ready yet, but hotfixing it means merge hell for the new release.

-

Of all the problems in this world, the biggest conundrum seems to be for girls who have to choose between Snapchat and Instagram now that Instagram has openly stolen Snapchat's features. 😂😂😂😂

-

Still, I remain. It’s OK. But now it is clear that EA is clueless, no matter the domain. Endless buzzwords and endless meetings, and I am wondering if any of them actually know how to code…

I even met one who stated that there is no need for that type of knowledge when working at that level.

I kept my mouth shut. Did not nod. Did not shake my head. Did nothing. I was as blank and shocked as I was focused on the conundrum of who hired him.

So basically, he just went through his days guessing. I mean, we all guess more or less, but we kind of know something about software engineering, so it is more like informed guesswork.

You can go very far by pretending.

It is very toxic. Very bad.

-

I'm kind of in a bit of a conundrum.

About 6 months ago, a friend and I were talking about how bored I was and how I didn't have any new ideas for projects. He gave me one, and it is the basis of the project I am currently building, although I changed one of the variables he gave me so that it would appeal to a wider audience. Now that I've resumed work on it, I think it is a good idea that could make me some money. (I'm not claiming this is some groundbreaking idea that will make me millions; hell, I'm not sure it will be successful, but it might make me some money to help when the time comes to go to university.) But I am a firm believer in open source, and I don't know whether I should make it open source, rely on donations, and let people modify the code, or just charge for it.

In any other case, I wouldn't think twice before making it open source, but I probably won't be able to afford uni, and this would be good for me and would help me along with the freelancing jobs I am starting to take on.

What would you guys recommend I do?

-

I have this sbt test that keeps failing on CI. Locally it works fine, but as soon as it goes through CircleCI, shit gets fucked. If I keep rerunning the workflow without any changes, it eventually passes and I am able to deploy. I have no idea wtf is happening or what to do about it. Isn't containerization supposed to solve this whole "works on my machine" conundrum? I am too unenthusiastic and numb to even feel any way about this. Wish everything would end.

-

Seeing as I would be scorned for asking this on SO, and there are a ton of devs here who have probably had this conundrum: how have you solved horizontal scrolling while working? I know Shift + mouse wheel up/down works, but what's the use of having a mouse with macro functionality if not to simplify that? My current software supports Lua scripting (but I don't know how to write it). While googling, I see some people requesting this, but I cannot find anyone who has a solution. The closest I get is a user requesting it from Logitech, as they apparently have the same thing for Ctrl + mouse wheel in their products.

-

The Turing Test, introduced by Alan Turing in 1950, has long been a foundational concept for evaluating a machine's ability to exhibit human-like intelligence. But as we edge closer to the singularity (the point where artificial intelligence surpasses human intelligence), a new, perhaps unsettling question comes to the fore: Are we humans ready for the Turing Test's inverse? Unlike Turing's original proposition, in which machines strive to become indistinguishable from humans, the Inverse Turing Test asks whether the complex, multi-dimensional realities generated by AI can be rendered palatable or even comprehensible to human cognition. This discourse goes beyond mere philosophical debate; it directly impacts the future trajectory of human-machine symbiosis.

Artificial intelligence has been advancing at an exponential pace, far outstripping Moore's Law. From Generative Adversarial Networks (GANs) that create life-like images to quantum computers that may solve problems intractable for classical computers, the AI universe is a sprawling expanse of complexity. What's more compelling is that these machine-constructed worlds aren't confined to academic circles. They permeate every facet of our lives, be it medicine, finance, or even social dynamics. And so, an existential conundrum arises: Will there come a point where these AI-created outputs become so labyrinthine that they are beyond the cognitive reach of the average human?

The Human-AI Cognitive Disconnection

As we look more closely at the interplay between humans and AI-created realities, the phenomenon of cognitive disconnection becomes increasingly salient, perhaps even a bit uncomfortable. This disconnection is not confined to esoteric, high-level computational processes; it's pervasive in our everyday lives. Take, for instance, the experience of driving a car. Most people can operate a vehicle without understanding the intricacies of its internal combustion engine, transmission mechanics, or even its embedded software. Similarly, when boarding an airplane, passengers trust that they'll arrive at their destination safely, yet most have little to no understanding of aerodynamics, jet propulsion, or air traffic control systems. In both scenarios, individuals navigate a reality facilitated by complex systems they don't fully understand. Simply put, we just enjoy the ride.

However, this is emblematic of a larger issue—the uncritical trust we place in machines and algorithms, often without understanding the implications or mechanics. Imagine if, in the future, these systems become exponentially more complex, driven by AI algorithms that even experts struggle to comprehend. Where does that leave the average individual? In such a future, not only are we passengers in cars or planes, but we also become passengers in a reality steered by artificial intelligence—a reality we may neither fully grasp nor control. This raises serious questions about agency, autonomy, and oversight, especially as AI technologies continue to weave themselves into the fabric of our existence.

The Illusion of Reality

To adequately explore the intricate issue of human-AI cognitive disconnection, let's journey through the corridors of metaphysics and epistemology, where the concept of reality itself is under scrutiny. Humans have always been limited by their biological faculties—our senses can only perceive a sliver of the electromagnetic spectrum, our ears can hear only a fraction of the vibrations in the air, and our cognitive powers are constrained by the limitations of our neural architecture. In this context, what we term "reality" is in essence a constructed narrative, meticulously assembled by our senses and brain as a way to make sense of the world around us. Philosophers have argued that our perception of reality is akin to a "user interface," evolved to guide us through the complexities of the world, rather than to reveal its ultimate nature. But now, we find ourselves in a new (contrived) techno-reality.

Artificial intelligence brings forth the potential for a new layer of reality, one that is stitched together not by biological neurons but by algorithms and silicon chips. As AI starts to create complex simulations, predictive models, or even whole virtual worlds, one has to ask: Are these AI-constructed realities an extension of the "grand illusion" that we're already living in? Or do they represent a departure, an entirely new plane of existence that demands its own set of sensory and cognitive tools for comprehension? The metaphorical veil between humans and the universe has historically been made of biological fabric, so to speak.