Join devRant

Do all the things like

++ or -- rants, post your own rants, comment on others' rants and build your customized dev avatar

Sign Up

Pipeless API

From the creators of devRant, Pipeless lets you power real-time personalized recommendations and activity feeds using a simple API

Learn More

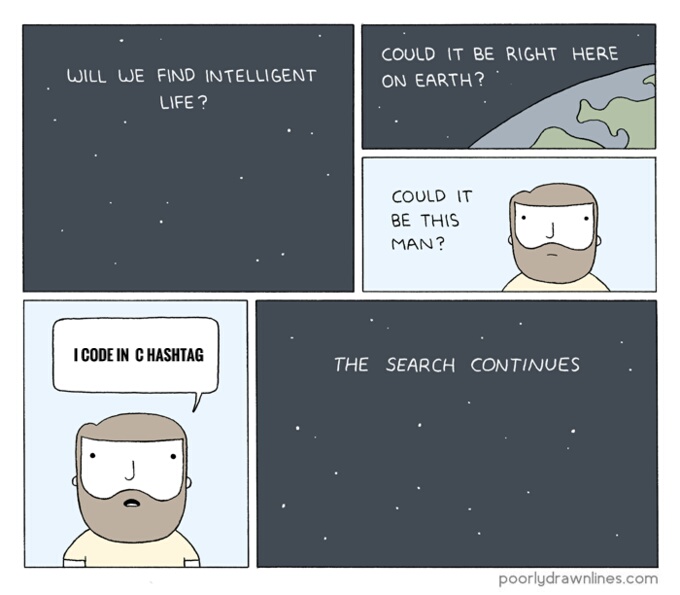

Search - "c hash"

-

In my second year,

I told my teacher I can code in C# (C-sharp).

She replied: C-sharp? Oh, you mean C-hash!

*That day I lost hope in college.*

-

It pains me that Google Now pronounces C# as "C hash".

Perhaps it's their subtle way to annoy Microsoft developers...

-

Worst interview was about 2 years ago. I found this job ad on a famous website and applied for a desktop dev position... the job seemed really easy. After 10 minutes the so-called CTO asked me: "How much do you know C hash?" Me: "What?" Him: "C hash... the Microsoft programming language." I thanked him for his time and went home crying (he meant C#)... A month later I found out the company had gone bankrupt... I think I know why.

-

I am a firmware developer with 4 years of experience. C and sometimes assembly are my bread and butter.

About 2 years ago, I was really interested in making a switch to application development. I got referred by my friend to her startup.

But I was a bit rusty with my data structures, high-level languages and interpersonal skills.

The first question was to find the number of occurrences of each word in a paragraph. The language of choice was Java, but I was allowed to use C++ since it was the closest relative to Java that I knew.

And I started implementing a binary search tree from scratch, inserted each tokenised word into it, and wrote a traversal algorithm.

The interviewer, luckily, was a patient guy. After I completed my whole mess, he asked whether it was possible to do this in a slightly better way, with constant-time access and without traversal.

I said yes, we can with a hash table, but I don't know how to implement one. He replied, "I don't expect you to implement the hash table, but to see you use it." I asked him if I was allowed to use the standard library, to which he said of course.

*facepalm*.

Finally I understood his expectation, referred to cppreference.com and used an unordered_map.
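For reference, a minimal sketch of the kind of answer the interviewer was probably after. The function name `count_words` and the whitespace-only tokenising are assumptions for illustration, not what was actually written in the interview.

```cpp
#include <sstream>
#include <string>
#include <unordered_map>

// Count how often each whitespace-separated word occurs in `text`.
// Punctuation and letter case are left untouched; a fuller solution
// would probably normalise both before counting.
std::unordered_map<std::string, int> count_words(const std::string& text) {
    std::unordered_map<std::string, int> counts;
    std::istringstream stream(text);
    std::string word;
    while (stream >> word) {
        ++counts[word];  // operator[] value-initialises a missing key to 0
    }
    return counts;
}
```

Each lookup and insert is constant time on average, so the whole paragraph is processed in one pass with no tree traversal.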

Later there were some questions on databases, which I tried my best to answer. And I frankly replied that I was not comfortable with JS frameworks as of then. Got rejected.

So the mistakes were: I never asked basic questions like what time complexity was expected or whether I was allowed to use the standard library, I didn't spend extra time studying the stuff needed for the domain switch, and most importantly, I panicked.

-

I've just noticed something when reading the EU copyright reform. It actually all sounds pretty reasonable. Now, hear me out, I swear that this will make sense in the end.

Article 17p4 states the following:

If no authorisation [by rightholders] is granted, online content-sharing service providers shall be liable for unauthorised acts of communication to the public, including making available to the public, of copyright-protected works and other subject matter, unless the service providers demonstrate that they have:

(a) made best efforts to obtain an authorisation, and

(b) made, in accordance with high industry standards of professional diligence, best efforts to ensure the unavailability of specific works and other subject matter for which the rightholders have provided the service providers with the relevant and necessary information; and in any event

(c) acted expeditiously, upon receiving a sufficiently substantiated notice from the rightholders, to disable access to, or to remove from, their websites the notified works or other subject matter, and made best efforts to prevent their future uploads in accordance with point (b).

Article 17p5 states the following:

In determining whether the service provider has complied with its obligations under paragraph 4, and in light of the principle of proportionality, the following elements, among others, shall be taken into account:

(a) the type, the audience and the size of the service and the type of works or other subject matter uploaded by the users of the service; and

(b) the availability of suitable and effective means and their cost for service providers.

That actually does leave a lot of room for interpretation, and not on the lawmakers' part.. rather, on the implementer's part. Say for example devRant, there's no way in hell that dfox and trogus are going to want to be tasked with upload filters. But they don't have to.

See, the law takes into account due diligence (i.e. they must give a damn), industry standards (so.. don't half-ass it), and cost considerations (so no need to spend a fortune on it). Additionally, asking for permission doesn't need to be much more than coming to an agreement with the rightsholder when they make a claim to their content. It's pretty common on YouTube mixes already, often in the description there's a disclaimer stating something like "I don't own this content. If you want part of it to be removed, get in touch at $email." Which actually seems to work really well.

So say for example, I've had this issue with someone here on devRant who copypasted a work of mine into the cancer pit called joke/meme. I mentioned it to dfox, but it didn't get removed. So what this law essentially states is that when I give notice of "this here is my content, I'd like you to remove this", they're obligated to remove it. And as due diligence to keep it unavailable.. maybe make a hash of it or whatever to compare against.
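A rough sketch of what "make a hash of it to compare against" could look like. Everything here is an assumption for illustration: the `TakedownList` class is hypothetical, and FNV-1a is just a simple non-cryptographic hash picked to keep the example short. It only catches byte-for-byte identical re-uploads, so a re-encoded or cropped copy would slip through.

```cpp
#include <cstdint>
#include <string>
#include <unordered_set>

// 64-bit FNV-1a: a simple, fast, non-cryptographic hash.
std::uint64_t fnv1a64(const std::string& bytes) {
    std::uint64_t hash = 14695981039346656037ULL;  // FNV offset basis
    for (unsigned char byte : bytes) {
        hash ^= byte;
        hash *= 1099511628211ULL;  // FNV prime
    }
    return hash;
}

// Hypothetical takedown list: remembers fingerprints of notified works.
class TakedownList {
public:
    void add_notified_work(const std::string& content) {
        fingerprints_.insert(fnv1a64(content));
    }

    // True if the upload is identical to a notified work
    // (or, very rarely, happens to collide with one).
    bool is_blocked(const std::string& upload) const {
        return fingerprints_.count(fnv1a64(upload)) > 0;
    }

private:
    std::unordered_set<std::uint64_t> fingerprints_;
};
```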

It also mentions that there needs to be a source to compare against, which invalidates e.g. GitHub's iBoot argument (there's no source to compare against!). If there's no source to compare against, there's no issue. That includes my work as freebooted by that devRant user. I can't prove my ownership due to me removing the original I posted on Facebook as part of a yearly cleanup.

But yeah.. content providers are responsible as they should be, it's been a huge issue on the likes of Facebook, and really needs to be fixed. Is this a doomsday scenario? After reading the law paper, honestly I don't think it is.

Have a read, I highly recommend it.

http://europarl.europa.eu/doceo/...

-

Hey, been gone a hot minute from devrant, so I thought I'd say hi to Demolishun, atheist, Lensflare, Root, kobenz, score, jestdotty, figoore, cafecortado, typosaurus, and the raft of other people I've met along the way and got to know somewhat.

All of you have been really good.

And while I'm here it's time for maaaaaaaaath.

So I decided to horribly mutilate the concept of bloom filters.

If you don't know what that is, you take two numbers, m and p, where p is prime and m < p, and generate two random numbers a and b that define a function, typically h(x) = ((a*x + b) mod p) mod m. That function is a hash.

Normally you'd have say five to ten different hashes.

A bloom filter lets you probabilistically say whether you've seen something before, with no false negatives.

It lets you do this very space efficiently, with some caveats.

Each hash function should be uniformly distributed (any input value is equally likely to be mapped to any output value).

Then you interpret these output values as bit indexes.

So Hi might output [0, 1, 0, 0, 0]

while Hj outputs [0, 0, 0, 1, 0]

and Hk outputs [1, 0, 0, 0, 0]

producing [1, 1, 0, 1, 0]

And if your bloom filter has bits set in all those places, congratulations, you've probably seen that number before (false positives are possible, false negatives are not).

It's used by big companies like Google to prevent re-indexing pages they've already seen, among other things.
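Here is a minimal sketch of a plain bloom filter along those lines, before any mangling. It assumes the hash family h(x) = ((a*x + b) mod p) mod m with p fixed at the prime 2^31 - 1. The class name, the `seed` parameter and the 32-bit integer keys are illustrative choices, not taken from the script described in this post.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <random>
#include <vector>

class BloomFilter {
public:
    // bits: table size m. hashes: how many (a, b) pairs to draw.
    // seed is an illustrative parameter so runs are repeatable.
    BloomFilter(std::size_t bits, std::size_t hashes, std::uint64_t seed = 42)
        : bits_(bits, false) {
        assert(bits > 0 && hashes > 0);
        std::mt19937_64 rng(seed);
        std::uniform_int_distribution<std::uint64_t> pick_a(1, kPrime - 1);
        std::uniform_int_distribution<std::uint64_t> pick_b(0, kPrime - 1);
        for (std::size_t i = 0; i < hashes; ++i) {
            params_.push_back(Params{pick_a(rng), pick_b(rng)});
        }
    }

    // Set one bit per hash function.
    void insert(std::uint32_t x) {
        for (const Params& p : params_) bits_[index(p, x)] = true;
    }

    // false: definitely never inserted. true: probably inserted.
    bool maybe_contains(std::uint32_t x) const {
        for (const Params& p : params_) {
            if (!bits_[index(p, x)]) return false;
        }
        return true;
    }

private:
    struct Params { std::uint64_t a; std::uint64_t b; };
    static constexpr std::uint64_t kPrime = 2147483647ULL;  // 2^31 - 1

    std::size_t index(const Params& p, std::uint32_t x) const {
        // a < 2^31 and x < 2^32, so a * x + b fits in 64 bits.
        return static_cast<std::size_t>(((p.a * x + p.b) % kPrime) % bits_.size());
    }

    std::vector<bool> bits_;
    std::vector<Params> params_;
};
```

Inserted values always test positive. Values that were never inserted usually test negative, and how often they don't depends on the table size and the number of hashes.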

Well I thought, what if instead of using it as a has-been-seen-before filter, we mangled its purpose until a square peg fit in a round hole?

Not long after, I went and wrote a script that 1. generates data, 2. generates a hash function to encode it, and 3. finds a hash function that reverses the encoding.

And it just works. Reversible hashes.

Of course you can't use it for compression strictly, not under normal circumstances, but these aren't normal circumstances.

The first thing I tried was finding a hash function h0, that predicts each subsequent value in a list given the previous value. This doesn't work because of hash collisions by default. A value like 731 might map to 64 in one place, and a later value might map to 453, so trying to invert the output to get the original sequence out would lead to branching. It occurs to me just now we might use a checkpointing system, with lookahead to see if a branch is the correct one, but I digress, I tried some other things first.

The next problem was 1. long sequences are slow to generate. I solved this by tuning the amount of iterations of the outer and inner loop. We find h0 first, and then h1 and put all the inputs through h0 to generate an intermediate list, and then put them through h1, and see if the output of h1 matches the original input. If it does, we return h0, and h1. It turns out it can take inordinate amounts of time if h0 lands on a hash function that doesn't play well with h1, so the next step was 2. adding an error margin. It turns out something fun happens, where if you allow a sequence generated by h1 (the decoder) to match *within* an error margin, under a certain error value, it'll find potential hash functions hn such that the outputs of h1 are *always* the same distance from their parent values in the original input to h0. This becomes our salt value k.

So our hash-function generator, called encoder_decoder() or 'ed' (lol two letter functions), also calculates the k value and outputs that along with the hash functions for our data.

This is all well and good, but what if we want to go further? With a few tweaks, along with taking output values, converting to binary, and left-padding each value with 0s, we can then calculate Shannon entropy in its most essential form.
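A small sketch of that measurement, assuming "most essential form" means the empirical entropy of the 0/1 frequencies across all the left-padded values. The function names and the fixed `width` parameter are illustrative and not from the original script.

```cpp
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <initializer_list>
#include <string>
#include <vector>

// Left-pad the binary form of `value` with zeros to `width` bits.
// Bits above `width` are silently dropped.
std::string to_padded_bits(std::uint32_t value, std::size_t width) {
    std::string bits(width, '0');
    for (std::size_t i = 0; i < width && value != 0; ++i, value >>= 1) {
        if (value & 1u) bits[width - 1 - i] = '1';
    }
    return bits;
}

// Empirical Shannon entropy, in bits per symbol, of the 0/1 frequencies
// across all padded values: H = -sum(p * log2(p)).
double bit_entropy(const std::vector<std::uint32_t>& values, std::size_t width) {
    std::size_t ones = 0;
    std::size_t total = 0;
    for (std::uint32_t v : values) {
        for (char c : to_padded_bits(v, width)) {
            if (c == '1') ++ones;
            ++total;
        }
    }
    if (total == 0) return 0.0;
    const double p_one = static_cast<double>(ones) / static_cast<double>(total);
    double entropy = 0.0;
    for (double p : {p_one, 1.0 - p_one}) {
        if (p > 0.0) entropy -= p * std::log2(p);
    }
    return entropy;
}
```

Note that this only looks at how often each bit value occurs, not at the order of the bits, so it is an upper-level summary and not a full model of the sequence.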

Turns out with tens of thousands of values (and tens of thousands of bits), the output of h1 with the salt, has a higher entropy than the original input. Meaning finding an h1 and h0 hash function for your data is equivalent to compression below the known shannon limit.

By how much?

Approximately 0.15%

Of course this doesn't factor in the five numbers you need, a0, and b0 to define h0, a1, and b1 to define h1, and the salt value, so it probably works out to the same. I'd like to see what the savings are with even larger sets though.

Next I said, well what if we COULD compress our data further?

What if all we needed were the numbers to define our hash functions, a starting value, a salt, and a number to represent 'depth'?

What if we could rearrange this system so we *could* use the starting value to represent n subsequent elements of our input x?

And that's what I did.

We break the input into blocks of 15-25 items, because that's the fastest to work with and find hashes for.

We then follow the math, to get a block which is

H0, H1, H2, H3, depth (how many items our first item will reproduce), and a starting value, i.e. the first item in this slice of our input.

x goes into h0, giving us y. y goes into h1 -> z, z into h2 -> y, y into h3, giving us back x.

The rest is in the image.

Anyway, good to see you all again.

-

Major rant incoming. Before I start ranting I’ll say that I totally respect my professor’s past. He worked on some really impressive major developments for the military and other companies a long time ago. Was made an engineering fellow at Raytheon for some GPS software he developed (or led a team on I should say) and ended up dropping the fellowship because of his health. But I’m FUCKING sick of it. So fucking fed up with my professor. This class is “Data Structures in C++” and keep in mind that I’ve been programming in C++ for almost 10 years with it being my primary and first language in OOP.

Throughout this entire class, the teacher has been making huge mistakes, either saying things that aren’t right or simply not knowing how to teach. For example, he told the students that “int& varOne = varTwo” was an address getting put into a variable until I corrected him about it being a reference, and then he proceeded to skip all the reference slides. Or he steps through sorting algorithms that are wrong, or he doesn’t remember how to do them and says, “So then it gets to this part and....it uh....does that and gets this value and so that’s how you do it *doesnt do rest of it and skips slide*”.

First presentation I did on doubly linked lists. I decided to go above and beyond and write my own code that had a menu to add, insert at position n, delete, print, etc for a doubly linked list. When I go to pull out my code he tells me that I didn’t say anything about a doubly linked list’s tail and head nodes each have a pointer pointing to null and so I was getting docked points. I told him I did actually say it and another classmate spoke up and said “Ya” and he cuts off saying, “No you didn’t”. To which I started to say I’ll show you my slides but he cut me off mid sentence and just yelled, “Nope!”. He docked me 20% and gave me a B- because of that. I had 1 slide where I had a bullet point mentioning it and 2 slides with visual models showing that the head node’s previousNode* and the tail node’s nextNode* pointed to null.

Another classmate that’s never coded in his life had screenshots of code from online (literally all his slides were a screenshot of the next part of code until it finished implementing a binary search tree) and literally read the code line by line, “class node, node pointer node, ......for int i equals zero, i is less than tree dot length er length of tree that is, um i plus plus.....”

Professor yelled at him like 4 times about reading directly from slide and not saying what the code does and he would reply with, “Yes sir” and then continue to read again because there was nothing else he could do.

Ya, he got the same grade as me.

Today I had my second and final presentation. I did it on “Separate Chaining”, a hashing collision resolution. This time I said fuck writing my own code, he didn’t give two shits last time when everyone else just screenshot online example code but me so I decided I’d focus on the PowerPoint and amp it up with animations on models I made with the shapes in PowerPoint. Get 2 slides in and he goes,

Prof: Stop! Go back one slide.

Me: Uh alright, *click*

(Slide showing the 3 collision resolutions: Open Addressing, Separate Chaining, and Re-Hashing)

Prof: Aren’t you forgetting something?

Me: ....Not that I know of sir

Prof: I see Open addressing, also called Open Hashing, but where’s Closed Hashing?

Me: I believe that’s what Separate Chaining is sir

Prof: No

Me: I’m pretty sure it is

*Class nods and agrees*

Prof: Oh never mind, I didn’t see it right

Get another 4 slides in before:

Prof: Stop! Go back one slide

Me: .......alright *click*

(Professor loses train of thought? Doesn’t mention anything about this slide)

Prof: I er....um, I don’t understand why you decided not to mention the other, er, other types of Chaining. I thought you were going to go back to that slide with all the squares (model of hash table with animations moving things around to visualize inserting a value with a collision that I spent hours on) but you didn’t.

(I haven’t finished the second half of my presentation yet you fuck! What if I had it there?)

Me: I never saw anything on any other types of Chaining professor

Prof: I’m pretty sure there’s one that I think combines Open Addressing and Separate Chaining

Me: That doesn’t make sense sir. *explanation why* I did a lot of research and I never saw any other.

Prof: There are, you should have included them.

(I check after I finish. Google comes up with no other Chaining collision resolution)

He docks me 20% and gives me a B- AGAIN! Both presentation grades have feedback saying, “MrCush, I won’t go into the issues we discussed but overall not bad”.

Thanks for being so specific on a whole 20% deduction prick! Oh wait, is it because you don’t have specifics?

Bye 3.8 GPA

Is it me or does he have something against me?

-

The C Standard Library has a Hash Table implementation, and it's a man-made horror beyond comprehension:

https://youtube.com/watch/...

-

Here's some research into a new LLM architecture I recently built and have had actual success with.

The idea is simple: you do the standard thing of generating random vectors for your dictionary of tokens; we'll call these numbers your 'weights'. Then, for whatever sentence you want to use as input, you generate a context embedding by looking up those tokens and putting them into a list.

Next, you do the same for the output you want to map to; let's call it the decoder embedding.

You then loop and generate a 'noise embedding' for each vector or individual token in the context embedding, then subtract that token's noise value from that token's embedding value or specific weight.

You find the weight index in the weight dictionary (one entry per word or token in your token dictionary) thats closest to this embedding. You use a version of cuckoo hashing where similar values are stored near each other, and the canonical weight values are actually the key of each key:value pair in your token dictionary. When doing this you align all random numbered keys in the dictionary (a uniform sample from 0 to 1), and look at hamming distance between the context embedding+noise embedding (called the encoder embedding) versus the canonical keys, with each digit from left to right being penalized by some factor f (because numbers further left are larger magnitudes), and then penalize or reward based on the numeric closeness of any given individual digit of the encoder embedding at the same index of any given weight i.

You then substitute the canonical weight in place of this encoder embedding, look up that weights index in my earliest version, and then use that index to lookup the word|token in the token dictionary and compare it to the word at the current index of the training output to match against.

Of course by switching to the hash version the lookup is significantly faster, but I digress.

That introduces a problem.

If each input token matches one output token how do we get variable length outputs, how do we do n-to-m mappings of input and output?

One of the things I explored was using pseudo-Markovian processes, where there's one node, A, with two links to itself, B and C.

B is a transition matrix, and A holds its own state. At any given timestep, A may use either the default transition matrix (training data encoder embeddings) with B, or it may generate new ones, using C and a context window of A's prior states.

C can be used to modify A, or it can be used as a noise embedding to modify B.

A can take on the state of both A and C or A and B. In fact we do both, and measure which is closest to the correct output during training.

What this *doesn't* do is give us variable length encodings or decodings.

So I thought a while and said, if we're using noise embeddings, why can't we use multiple?

And if we're doing multiple, what if we used a middle layer, let's call it the 'key', and took its mean over *many* training examples, and used it to map from the variance of an input (query) to the variance and mean of a training or inference output (value)?

But how does that tell us when to stop or continue generating tokens for the output?

Posted on pastebin if you want to read the whole thing (DR wouldn't post for some reason).

In any case I wasn't sure if I was dreaming or if I was off in left field, so I went and built the damn thing, the autoencoder part, wasn't even sure I could, but I did, and it just works. I'm still scratching my head.

https://pastebin.com/xAHRhmfH

-

There was a lady who had come for QA automation demonstration.

She was mentioning the languages used and uttered, C hash for C#...

-

Thinking back, it’s pretty terrible how long it took to create my first real development project.

When I was the ages of 13-18 I built websites on and off but I would never consider them good enough. I would literally design a bunch of images and then, using just HTML, put all the images together like a puzzle using exact pixel locations. Might be fine and dandy now but back then it would look great on my monitor but on others it would be an absolute mess.

Anyways, after that I got in college and started learning C++ and did assignments but I don’t count those as my own either. Not until I was 29 (my current age) did I finally develop a program assigned by my internship. Prior to that I always just re-learned C++ over and over again off and on because I had no clue where to go after that.

Apologies for the long intro. So, for the first development project at my internship that I feel is legit, I had to use my company's API to track the amount of time it took to encrypt a packet and then decrypt it, as well as grabbing the packet and seeing how long the hash was, which letters were used in which position, and so on. Essentially, grab a whole bunch of statistics from their software and then output them to an Excel document. It had a menu, and I had to make it work on Windows, Ubuntu, Raspbian, and some other systems on different devices.

I was actually really proud of what I ended up with, and they use it to test their new versions, compare them, and so forth.

-

My answer to their survey -->

What, if anything, do you most _dislike_ about Firebase In-App Messaging?

Come on, have you ever sat a normal dev down, completely new to this push notification thing, and asked him to get a simple app running, like the flutter firebase_messaging plugin example? For sure you did not, oh dear brain dead moron that found his college degree in a Linux magazine 'Ruby special edition'.

Every-f**kin thing about that Firebase is loose end. I read all Medium articles, your utterly soporific documentation that never ends, I am actually running the flutter plugin example firebase_messaging. Nothing works or is referenced correctly: nothing. You really go blind eyes in life... you guys; right? Oh, there is a flimsy workaround in the 100th post under the Github issue number 10 thousand... lets close the crash report. If I did not change 50 meaningless lines in gradle-what-not files to make your brick-of-puke to work, I did not changed a single one.

I dream of you, looking at all those nonsense config files, with cross side eyes and some small but constant sweat, sweat that stinks piss btw, leaving your eyes because you see the end, the absolute total fuckup coming. The day where all that thick stinky shit will become beyond salvation; blurred by infinite uncontrolled and skewed complexity; your creation, your pathetic brain exposed for us all.

For sure I am not the first one to complain... your whole thing, from the first to the last quark that constitutes it, is irrelevant; a never-ending pile of nonsense. Someone with all the sabotage determination in the world could not have done worse. Thank you for making me lose hours deep down in your shit show. So appreciated.

The setup is: servers, your crap-as-a-service and some mobile devices. For Christ sake, sending 100 bytes as a little [ beep beep + 'hello kitty' ] is not fucking rocket science. Yet you fuckin push it to be a grinding task ... for eternity!!!

You know what, you should invent and require another, new, useless key-value called 'Registration API Key Plugin ID Service' that we have to generate and sync on two machines, everyday, using something obscure shit like a 'Gradle terminal'. Maybe also you could deprecate another key, rename another one to make things worst and I propose to choose a new hash function that we have to compile ourselves. A good candidate would be a C buggy source code from some random Github hacker... who has injected some platform dependent SIMD code (he works on PowerPC and have not test on x64); you know, the guy you admire because he is so much more lowlife that you and has all the Pokemon on his desk. Well that guy just finished a really really rapid hash function... over GPU in a server less fashion... we have an API for it. Every new user will gain 3ms for every new key. WOW, Imagine the gain over millions of users!!! Push that in the official pipe fucktard!.. What are you waiting for? Wait, no, change the whole service name and infrastructure. Move everything to CLSG (cloud lambda service ... by Google); that is it, brilliant!

And Oh, yeah, to secure the whole void, bury the doc for the new hash under 3000 words, lost between v2, v1 and some other deprecated doc that also have 3000 and are still first result on Google. Finally I think about it, let go the doc, fuck it... a tutorial, for 'weak ass' right.

One last thing, rewrite all your tech in the latest new in-house language, split everything into 'femto services' => ( one assembly operation per OS process ) and finally cram all those into containers... Agile, for sure it has to be Agile. Users will really appreciate the improvements of your mandatory service.

-

Fuck you Linux! I thought user password validation would be a piece of cake, like bash one liner. How wrong could I be!

Yeah, it's already ugly to grep hash and salt from /etc/shadow, but I could accept that. But then give me a friggin' tool to generate the hash. And of course the distro I chose has the wrong makepswd, OpenSSL is too old to have the new SHA-512 built in, as it should be a minimal installation I don't want to use perl or python...

And the stupid crypto function that would do the job for me is even included in glibc. So it's only one line of C code to give me all I want, but there is no package that would provide me this dull binary? Instead I will have to compile it myself and then remove the compiler again to keep the image small?

-

Almost finished with latest preprocessor.

Why am I always working on preprocessors tho? Shit...

Anyway, almost finished ok.

Idea is, basically, that inside a C source or header you can write a perl subroutine instead of `#define ...`.

The mechanism is rather simple:

```C (wat?)
macro mymacro($expr) {
  // perl code goes here
  return "$expr;"
};
```

`$expr` is just a string holding whatever block of code comes after an invocation of `mymacro`. You can use the builtins `tokenshift` and `tokenpop` on a string to get the first and last token, respectively, and then `tokensplit` gives you *all* the tokens.

Whatever string you return is what the expression you received is replaced by:

- You can just give back the expression as-is to get the exact same thing you wrote -- so `mymacro char* wat;` gives you `char* wat;`.

- But if you return a galaxy's worth of C code, then bam. Macro expanded into it, just like that. It's a perl subroutine, so let your imagination fly. Wanna run some scripts at (pre)compile time? Then you can.

- If you return an empty string, then puff. No code. Input consumed.

- If you give the name of another macro (eg "another_macro $expr;"), the expansion recurses.

- If you return the name of the currently executing macro, no recursion happens. This lets you wrap C keywords without (too much) fear.

It's kind of cool because a separate perl module is built from the macros themselves. So then you can include those in another C file. Syntax is basically more perl because why not:

```C (yes)
package mypkg;
  use lib "path/to/myshit/";
  use pm funk qw(mymacro);
```

The `lib` bit actually translates to `-I(path)` for gcc. But for some reason the way you add an include path in perl is `use lib "path"`, so yep. I get it's confusing but just go with the ::~ f l o w ~:: ok.

Then the `pm` stuff is not valid perl (i think), but I took the easy way out and invented it to ensure there is a way to say "OK I don't give a single shit about the C stuff, just give me these qw()'d funky macros from this file." If you simply `use funk qw(mymacro)` then you also get an `#include "funk.h"`.

Speaking of which, headers are automatically generated. Yeah, fuck you, I added `public` to C, bite me. It's actually quite sexy as I defined it using the preprocessor:

```C (yes but actually perl)
macro public($expr) {
  my $dst=cmamout()->{export};\
  tokentidy $expr;
  push @$dst,$expr;
  return "$expr;";
};
```

Where `cmamout()` is a hash from which the output is generated. Oh, and `tokentidy` is just a random builtin that cleans up extra whitespace, don't mind it.

So now the bad stuff: I have to fix a few things. For instance, notice how I had to escape a new line there? Yeah. It's called dumb fix to shit parsing, of course.

But overall I'm quite satisfied with this. And the reason why may not be so obvious so I'ma spill it out: backticks, motherfucker.

That's right. Have a source emitter written in an esoteric language?

```C (yes really but not really)
macro bashit($expr) {
  my ($exe,@args)=tokensplit $expr;
  return `$exe @args`;
};
```

So now you can fork off into parallel dimensions; what can I say pass the pipe brother.

MAMmoth in the room is yes, this depends on MAM. What is MAM? MAMMI. It's the original name of my infamous picture of an ouroboros eating its own ass while stuck in limbo contemplating terrible life decisions of a build tool, avtomat (go ARSLASH <AR/> [habibi]).

So what's the deal with that? avtomat is a good build tool _for me_, not... ugh, you. I made it for *myself* baby things are not going to work out between us I'm sorry. MAM just does lots of things I wanted build tools to do in the __EXACT__ way I wanted them done. I'd say you should go use it too maybe, but actually don't and you shouldn't because I broke main some weeks ago to fix some other shit and then implement this. Yeah, pretty stupid, but what the hell. I'm the only user after all!

In conclusion, I am fully expecting to receive my mad props and street cred in the mail along with your marriage proposals en masse, effective immediately.

Further reading: https://youtube.com/watch/...

-

Trying to make a nodejs backend is pure hell. It doesn't contain much built-in functionality in the first place, so you are forced to pull in a sea of smaller packages to do things that should already be baked in. Momentjs and dayjs have taught nodejs devs nothing about the fact that the node runtime need not be as restrained as a browser JS runtime. Now we are getting the Temporal API in the browser JS runtime, and hopefully we can finally handle timezone hell without going insane. But this highlights the issue with node: why wait for it to be included in the JS standard to finally be a thing? Develop it beforehand. Why are you beholden to the Ecma standard? They write standards for web browsers, not node backends, for god's sake.

Also, authentication shouldn't be that complicated. I shouldn't be forced to create my own auth. In Laravel the scaffolding is already there, asking you to get it going. In nodejs you have to get JWT working. I understand that you can get such scaffolding online with git clone, but why? Why doesn't express provide built-in functions for authentication? Why, for god's sake, do you have to "npm install bcrypt"? I have to hash my own passwords beforehand. I mean, realistically speaking, nodejs comes with built-in cryptography libraries. A hashmap literally uses hashing. Why can't it be built in? I'd suppose any API needs auth. Instead I have to sign and verify my token and create middlewares for the job of making sure routes are protected.

I like the concept of bidirectional communication of node and the ugly thing, it's not impressive. any goddamn programming language used for web dev should realistically sustain two-way communication. It just a question of scaling, but if you have a backend that leverages usockets you can never go wrong. Because it's written in c. Just keep server running and sending data packets and responding to them, and don't finalize request and clean up after you serve it just keep waiting for new event.

Anyway, I hope out of this confused mess we call nodejs backend comes clean solutions just like Laravel came to clean the mess that was PHP backend back then.

Express is overrated by the way, and mongodb feels like a really ludicrous idea. We now need graphql in the goddamn backend because of mongodb and its cousins, the nosql databases.

-

hey devos, I got a question to ask ya'll. I have an interview tomorrow with an MNC.

I was hovering through some leetcode problems when I came across a hard question that is forcing me to use a hashmap with the key of the user-defined type. I made up my mind to make use of C++ for the coding interview. Now, the problem is C++ asks me to implement a hash function.

In case I'm asked a similar question in the interview, which of these two options would you suggest:

a) implement your own hash function

b) use pointers as key
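For what it's worth, option (a) is usually only a few lines. Below is a minimal sketch, assuming a hypothetical `Point` key type: the key needs `operator==` plus a hash functor passed as the third template argument of `std::unordered_map`. The mixing constant follows the well-known boost::hash_combine pattern and is one choice among many.

```cpp
#include <cstddef>
#include <functional>
#include <string>
#include <unordered_map>

// Hypothetical user-defined key type, purely for illustration.
struct Point {
    int x;
    int y;
    bool operator==(const Point& other) const {
        return x == other.x && y == other.y;
    }
};

// Hash functor: combine the standard hashes of the members.
struct PointHash {
    std::size_t operator()(const Point& p) const {
        std::size_t seed = std::hash<int>{}(p.x);
        // Mixing step in the style of boost::hash_combine.
        seed ^= std::hash<int>{}(p.y) + 0x9e3779b9 + (seed << 6) + (seed >> 2);
        return seed;
    }
};

using PointMap = std::unordered_map<Point, std::string, PointHash>;
```

With option (b), the map hashes the address and not the value, so two equal objects stored at different addresses count as different keys. That only works if the problem really is about object identity.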