
Search - "keras"

-

Random guy : Well I'm not tracked on the internet, I use private tabs.

Me : Well, I'm not sleeping with your mom, I use condoms.

-

2010: PHP, CSS, Vanilla JS, and a LAMP Server.

Ah, the simple life.

2016: Node.js, React, Vue, Angular, AngularJS, Polymer, Sass, Less, Gulp, Bower, Grunt.

I can't handle this, I'm shifting domains to Machine Learning.

2017: Numpy, Scipy, TensorFlow, Theano, Keras, Torch, CNNs, RNNs, GANs and LOTS AND LOTS OF MATH!

Okay, okay. Calm down there fella.

JavaScript doesn't seem that complicated now, does it? 🙈

-

Yo!

I went to see my mother a few days ago, and her TV is broken.

She told me to repair it, because "you're a programmer".

Does anybody know a Python function or a useful library that could repair a TV?

-

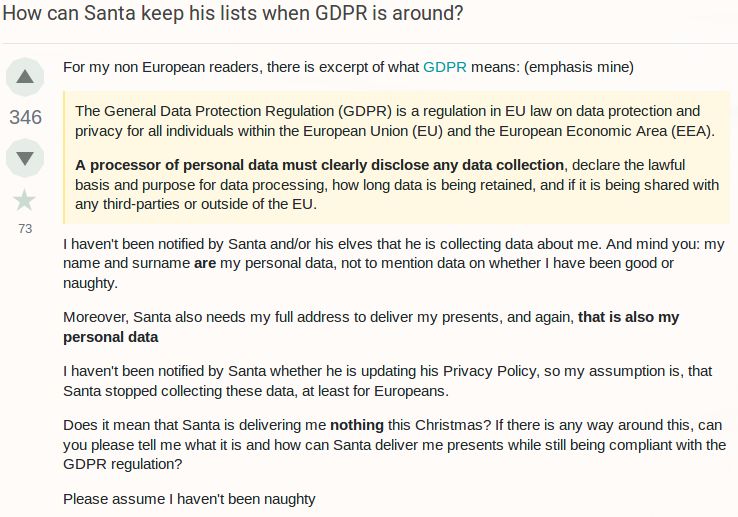

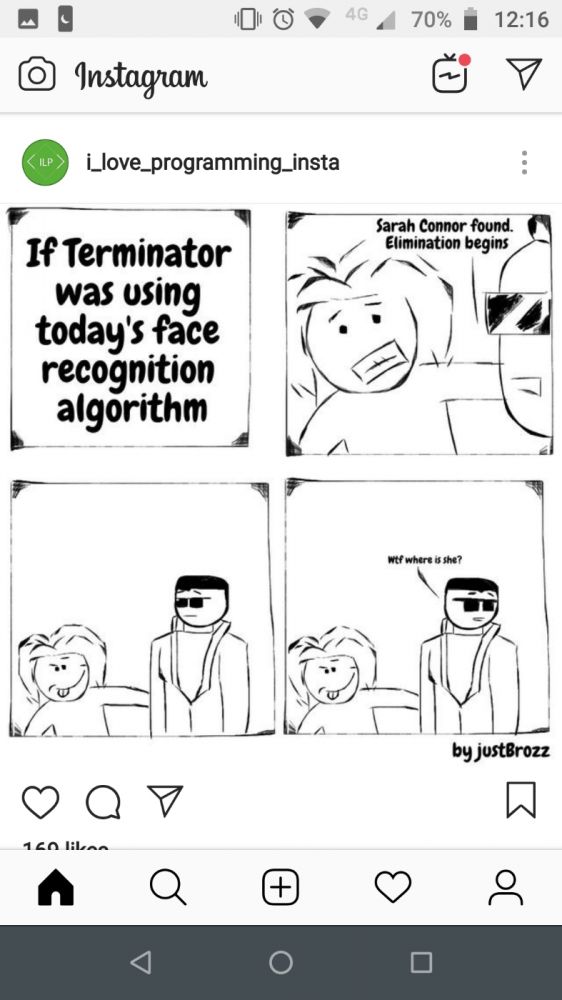

AI robo revolution 😁

joke/meme machine learning tensorflow keras ml opencv ai to overtake humanity soon ai face recognition

-

Just started the TV show "Silicon Valley". It's pretty good and funny, if you want something to watch about programmers launching their startup.

-

Me trying to show The Lord of the Rings to my girlfriend's family. I know it has nothing to do with code, but please tell me there are good people out there.

-

So I made a PR into Keras after a loooong time. It ended up being merged as the 5000th commit of the repo. I'm so happy 😭

-

Big data is like teenage sex: everyone talks about it, nobody really knows how to do it, everyone thinks everyone else is doing it, so everyone claims they are doing it… (Dan Ariely)

-

As you can see from the screenshot, it's working.

The system is actually learning the associations between the digit sequence of semiprime hidden variables and known variables.

Training loss and value loss are super high at the moment and I'm using an absurdly small training set (10k sequence pairs). I'm running on the assumption that there is a very strong correlation between the structures (and that it isn't just all ephemeral).

This initial run is just to see if training a machine learning model is a viable approach.

Won't know for a while. Training loss could get very low (that's a good thing, indicating actual learning), only for it to spike later on, and if it does, I won't know if the sample size is too small, or if I need to do more training, or if the problem is actually intractable.

If or when that happens I'll experiment with different configurations like batch sizes, and more epochs, as well as upping the training set incrementally.

Either way, once the initial model is trained, I need to test it on samples never seen before (products I want to factor) and see if it generates some or all of the digits needed for rapid factorization.

Even partial digits would be a success here.

And I expect to create multiple training sets for each semiprime product and its unknown internal variables versus derivable known variables. The intersections of the sets, and what digits they have in common, might be the best shot available for factorizing very large numbers in this approach.

Regardless, once I see that the model works at the small scale, the next step will be to increase the scope of the training data, and begin building out the distributed training platform so I can cut down the training time on a larger model.

I also want to train on random products of very large primes, just for variety and see what happens with that. But everything appears to be working. Working way better than I expected.

The model is running and learning to factorize semiprimes from the set of identities I've been exploring for the last three fucking years.

Feels like things are paying off finally.

Will post updates specifically to this rant as they come. Probably once a day.

-

Fucking professors, they think they can play ping pong with students. I started my thesis on ransomware, but the meaningless biological creature who is my advisor sent me to another professor, who sent me to another one, who sent me back to the first. After almost three weeks I had nothing done, so I switched professor and thesis topic to neural networks (TensorFlow, Theano, Keras, Caffe and others), and now they want me back, and one of them said he is offended. Fucking idiots. I have to graduate and I'm working hard to do it in September. If you had been even a little bit interested, I could have collected some material to study in August, sacrificing even the summer, but you mocked me. Then again, it's my career and my money; it doesn't matter to you. You deserve to get stuck in an infinite loop of pain.

-

I am soooooooooo fucking stupid!

I was using an np.empty instead of np.zeros to shape the Y tensor...

🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️🤦🏻♀️

No fucking wonder the poor thing kept failing to detect a pattern.
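For anyone who hasn't been bitten by this yet, here is a minimal sketch of the difference. The shapes and labels are made up for illustration and are not the ones from the rant. `np.empty` only allocates memory and leaves whatever bytes were already there, while `np.zeros` initializes every element to 0, so a target tensor that is only partially filled in by hand is only safe with the latter.

```python
import numpy as np

# Hypothetical sizes for illustration: 4 samples, 3 classes.
n_samples, n_classes = 4, 3
labels = [0, 2, 1, 2]  # hypothetical class index for each sample

# np.empty allocates without initializing: untouched cells hold arbitrary values.
y_bad = np.empty((n_samples, n_classes), dtype=np.float32)

# np.zeros allocates and sets every cell to 0.
y_good = np.zeros((n_samples, n_classes), dtype=np.float32)

# Fill in only the "hot" cell per row, as when one-hot encoding by hand.
for row, cls in enumerate(labels):
    y_bad[row, cls] = 1.0
    y_good[row, cls] = 1.0

# Every row of y_good is a clean one-hot vector; y_bad carries no such guarantee.
print(y_good)
print(y_good.sum(axis=1))
```

The nasty part is that `np.empty` often happens to return zeroed memory, so the bug can hide for a while and only show up as a model that refuses to learn.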

Let's see if it works now... *plays elevator music while we wait for Keras*

-

I've assembled enough computing power from the trash. Now I can start to build my own personal 'cloud'. Fuck I hate that word.

But I have a bunch of i7s and i5s on hand, in towers. Next is just to network them, and set up some software to receive commands.

So far I've looked at Ray and Dispy for distributed computation. If there are others that any of you are aware of, let me know. If you're familiar with any of these and know which one is the easier approach to get started with, I'd appreciate your input.

The goal is to get all these machines up and running, a cloud that's as dirt cheap as possible, and then train it on sequence prediction of the hidden variables derived from semiprimes. Right now the set is unretrievable, but there are a lot of heavily correlated known variables, so I'm hoping the network can derive better and more accurate insights than I can in a pinch.

Because any given semiprime has numerous (hundreds of known) identities which immediately yield both of its factors if, say, a certain constant or quotient is known (it isn't), knowing any *one* of them and the correct input is equivalent to knowing the factors of the semiprime.

So I can set each machine to train and attempt to predict the unknown sequence for each particular identity.

Once the machines are set up and I've figured out which distributed library to use, the next step is to set up Keras and train the model using, say, all the semiprimes under one to ten million.
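Enumerating "all the semiprimes under N" together with their factors is cheap, so the labelled data itself is not the bottleneck. A rough sketch in plain Python, assuming a simple sieve is good enough at this scale; the function names are made up for illustration, and how the triples get turned into the actual training sequences is not covered here.

```python
def primes_up_to(n):
    """Sieve of Eratosthenes: return all primes <= n."""
    if n < 2:
        return []
    sieve = bytearray([1]) * (n + 1)
    sieve[0] = sieve[1] = 0
    for i in range(2, int(n ** 0.5) + 1):
        if sieve[i]:
            # Cross out every multiple of i starting at i*i.
            sieve[i * i :: i] = bytearray(len(range(i * i, n + 1, i)))
    return [i for i, is_prime in enumerate(sieve) if is_prime]


def semiprimes_below(limit):
    """Return sorted (n, p, q) triples with n = p * q < limit, p <= q, both prime."""
    primes = primes_up_to(limit // 2)  # the larger factor is at most limit // 2
    triples = []
    for i, p in enumerate(primes):
        if p * p >= limit:
            break
        for q in primes[i:]:
            n = p * q
            if n >= limit:
                break
            triples.append((n, p, q))
    triples.sort()
    return triples


print(semiprimes_below(30))
```

Each triple gives the product plus the "hidden" factors, which is the kind of known/unknown pairing a supervised model would need.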

I'm also working on a new way of measuring information: autoregressive entropy. The idea is that the prevalence of small numbers when searching for patterns in sequences is largely ephemeral (there's no long-term pattern) and AE allows us to put a number on the density of these patterns in a partial sequence, but it's only an idea at the moment and I'm not sure what use it has.

Here's hoping the sequence prediction approach works.

-

Keras was throwing errors...

Since I thought it was a tensorflow issue, I went up and down and all the way around. Installing all tensorflow shit like a bijillion times.

... But it wasn't. It was the fucking ipykernel...

It took me a good 5-6 hours.

I pulled a 12-hour day today.

... Somebody hug me plz 😢

-

I'm reinventing the wheel by making yet another neural network library. It's not any good yet but I learn as I go along.

The only documentation that exists now is the admittedly quite comprehensive code comments. I'm doing it because Keras (using TensorFlow) requires a compute capability of 3.5 for CUDA acceleration (which I don't have), and it doesn't support OpenCL. Eventually, I will make my implementation support both, with varying levels of acceleration for different compute capabilities, the oldest supported being my hardware. If I ever get around to it.

I'd say wish me luck but determination would be infinitely more useful.

-

Dude. Tensorflow version changes are so fucking bad. It's even worse with keras because they create an echo chamber for shit. I'm trynna reset a fuckin model here, yet everything throws 99 more errors to the pile. Like, wtf?

***** For Stack Overflow enthusiasts: found a solution, don't need your groundbreaking shit either.

-

Just received my sticker and I am glad to be a part of the devRant community. Thank you for being the best community I've ever seen 😊

-

Random guy : Well, I'm a real developer, I took a 2-day training course on the internet.

Me : Well, I gave $1 to a homeless person this morning, still waiting for my Nobel Peace Prize.

-

Just installed Keras, Theano, PyTorch and TensorFlow on Windows 10 with GPU and CUDA working...

Took me 2 days to do it on my PC, and then another two days of cryptic compiler errors to do it on my laptop. It takes an hour or so on Linux... But now all of my devices are ready to train some Deep Deep Learning models :)

I don't think even here many people will understand the pain I had to go through, but I just had to share it somewhere since I am now overcome with peace and joy.

-

Just graduated, first real internship.

So basically I'm the only one doing what I'm supposed to do, and nobody can help me because they are on projects that are totally different. Even my supervisor, who hired me, doesn't know exactly what my predecessor did. He just gave me his GitLab and said "continue... whatever this shit was".

So I'm alone, and my "predecessor's" code obviously doesn't work because half of the files are missing, the code has no explanation, and he's not reachable. I have to build a deep learning algorithm from scratch and give a presentation in one month to explain to everyone why I'm not useless.

Is it really like this everywhere?? Is this the reason why devRant was created??

I read the quotes when I was in school, like "oh no, c'mon, that never really happened". Foolish boy I was..

But there's nice coffee.

-

I was learning neural networks, started with Keras, and was on the first tutorial, where they started by importing pandas.

So I switched to learning data analysis using pandas in Python, where they started by importing matplotlib, and I realized data visualization is also important, and now I'm reading the matplotlib docs...🙄

-

Reinforcement learning is going to be my end. 😩😩😩☠️

(currently stuck at how to put images as well as a bunch of other -motor- values as input... and exactly what am I getting as output again?)
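One common way to handle this, sketched under some loud assumptions: a two-branch Keras functional model, with a small conv stack for the image and a dense layer for the motor values, concatenated before the head. The 64x64 RGB frames, 8 motor readings and 4 discrete actions are hypothetical sizes picked for illustration. The output here is one estimated value per action, which only fits a value-based, discrete-action setup; a continuous-control agent would output something different.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

# Hypothetical sizes: 64x64 RGB frames, 8 motor readings, 4 discrete actions.
image_in = keras.Input(shape=(64, 64, 3), name="image")
motor_in = keras.Input(shape=(8,), name="motors")

# Convolutional branch turns the image into a flat feature vector.
x = layers.Conv2D(16, 5, strides=2, activation="relu")(image_in)
x = layers.Conv2D(32, 3, strides=2, activation="relu")(x)
x = layers.Flatten()(x)

# Small dense branch for the motor values.
m = layers.Dense(32, activation="relu")(motor_in)

# Join both branches, then map to one value per action.
h = layers.Concatenate()([x, m])
h = layers.Dense(64, activation="relu")(h)
q_values = layers.Dense(4, name="q_values")(h)

model = keras.Model(inputs=[image_in, motor_in], outputs=q_values)

# Dummy batch of 2 observations, just to check that the shapes line up.
images = np.zeros((2, 64, 64, 3), dtype="float32")
motors = np.zeros((2, 8), dtype="float32")
out = model.predict([images, motors], verbose=0)
print(out.shape)
```

Keeping the two inputs separate until after the conv layers means the motor values are not drowned out by thousands of pixel features at the input.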

Pulling my own hair out... Ooooooof

-

I can't be arsed with jobs that mention tensorflow alone as their main tech.

If your company is willing to use tf and not keras, then y'all probably didn't understand what you're dealing with to begin with.

*Red flags and sirens in the distance for bad designs*

-

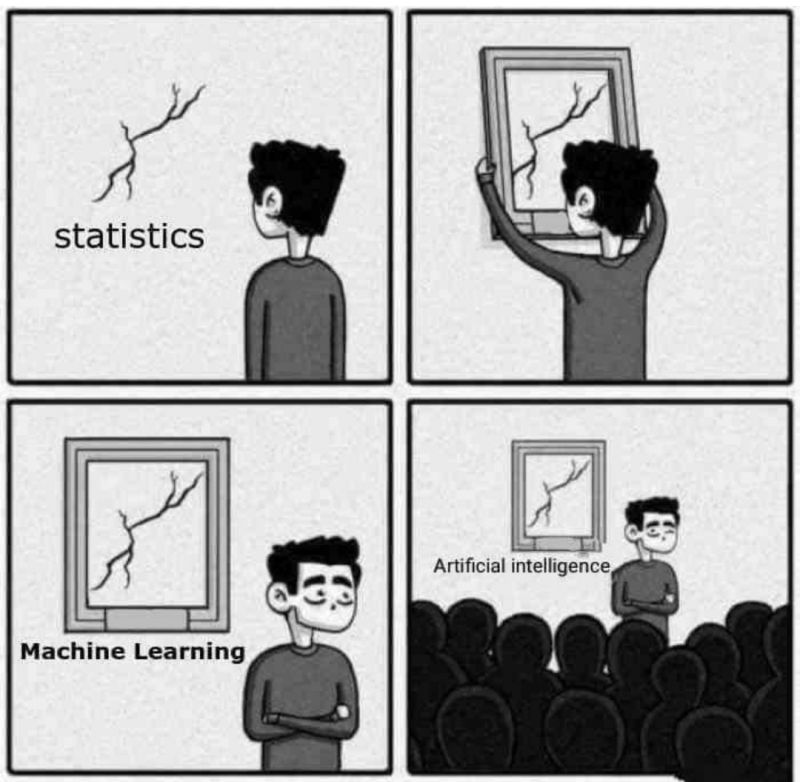

I get it — it’s not fashionable to be part of the overly enthusiastic, hype-drunk crowd of deep learning evangelists, who think import keras is the leap for every hurdle.

-

If you're an ml engineer, you must know how to hyperopt. I could recommend keras tuner tho, it's nice and saves shit on the go.

-

So I got the LSTM working in keras.

Working from a glorified tutorial.

Why the fuck do people let their github pages go down with no other backup?

Especially if it's a link in your blog?

Why would you do that and not post the full script (instead of bits and pieces interspersed with *partial* explanations)?

In any case, it's working and training on a test set and examples just to debug my own understanding of the process.

Once that's done I can generate some training data and try training on a small set. If that goes smoothly and the loss looks like it is heading in the right direction, then I'll set up the hardware for the private cloud and start writing the parallel computing component.

-

The fact that I managed to migrate the same fuckin keras model from gym to my own mujoco env and nothing broke too bad, is absolutely amazing.

Let's hope the little shit actually ends up learning some proper shit. 😒🦄

-

Just switched from Scala and its syntactic sugar to Python and it just feels like :

Scala : Mary likes "football"

Python : WTF is Mary ???

-

Epoch 2/4

777/1054 [=====================>........] - ETA: 45:31 - loss: 2.6682

Screw you Keras model

-

I watched "imitation game" for the first time.

The first machine learning was unsupervised learning? Really?

You're too crazy boys...

-

Hey all, just want to say thanks in advance. Last night, my friend and I were working on a project together (in real time), and I was teaching him about some basic Keras models in Python, running on TensorFlow. When we were at school the previous day, he was really interested in the actual concepts behind the code, but when we were actually making the model, he seemed kind of uninterested. I suspect he was just getting distracted, and it's better to write down the code and go over it at school, but is there some way I can try to make it more fun/interesting for him?

-

Hear me out:

Since Keras and TF are pretty much schema design rn, what if someone made a no-code solution where you drag and drop layers and tweak things in a UI, so those data scientists can design it there instead of writing shitty code?

-

Hey Fellows!

Just curious, what's your favorite music for programming? Personally it's Bon Jovi, but that sounds a little bit cliché.