Search - "multiple objects"


This codebase reminds me of a large, rotting, barely-alive dromedary. Parts of it function quite well, but large swaths of it are necrotic, foul-smelling, and even rotted away. Were it healthy, it would still exude a terrible stench, and its temperament would easily match: If you managed to get near enough, it would spit and try to bite you.

Swaths of code are commented out -- entire classes simply don't exist anymore, and the ghosts of several-year-old methods still linger. Despite this, large and deprecated (yet uncommented) sections of the application depend on those undefined classes/methods. Navigating the codebase is akin to walking through a minefield: if you reference the wrong method on the wrong object... fatal exception. And being very new to this project, I have no idea what's live and what isn't.

The naming scheme doesn't help, either: it's impossible to know what's still functional without asking because nothing's marked. Instead, I've been working backwards from multiple points to try to find code paths between objects/events. I'm rarely successful.

Not only can I not tell what's live code and what's interactive death, the code itself is messy and awful. Don't get me wrong: it's solid. There's virtually no way to break it. But trying to understand it ... I feel like I'm looking at a huge, sprawling MC Escher landscape through a microscope. (No exaggeration: a magnifying glass would show a larger view that included paradoxes / dubious structures, and these are not readily apparent to me.)

It's also rife with bad practices. Terrible naming choices consisting of arbitrarily-placed acronyms, bad word choices, and simply inconsistent naming (hash vs hsh vs hs vs h). The indentation is a mix of spaces and tabs. There's magic numbers galore, and variable re-use -- not just local scope, but public methods on objects as well. I've also seen countless assignments within conditionals, and these are apparently intentional! The reasoning: to ensure the code only runs with non-falsey values. While that would indeed work, an early return/next is much clearer, and reduces indentation. It's just. reading through this makes me cringe or literally throw my hands up in frustration and exasperation.
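To make the assignment-in-conditional complaint concrete, here is a minimal sketch of both styles. It is in Python rather than the project's own language, and `find_user` and the greeting functions are made-up names for illustration only:

```python
from typing import Optional


def find_user(users: dict, user_id: int) -> Optional[dict]:
    """Hypothetical lookup that returns None when the user is missing."""
    return users.get(user_id)


def greeting_nested(users: dict, user_id: int) -> Optional[str]:
    # Assignment inside the conditional: the body only runs for truthy values,
    # but every check adds a level of indentation.
    if (user := find_user(users, user_id)):
        if (name := user.get("name")):
            return f"Hello, {name}"
    return None


def greeting_guarded(users: dict, user_id: int) -> Optional[str]:
    # Early returns: same behaviour, flat structure.
    user = find_user(users, user_id)
    if not user:
        return None
    name = user.get("name")
    if not name:
        return None
    return f"Hello, {name}"
```

Both functions return the same results; the guarded version just keeps the happy path at one indentation level.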

Honestly though, I know why the code is so terrible, and I understand:

The architect/sole dev was new to coding -- I have 5-7 times his current experience -- and the project scope expanded significantly and extremely quickly, and also broke all of its foundation rules. Non-developers also dictated architecture, creating further mess. It's the stuff of nightmares. Looking at what he was able to accomplish, though, I'm impressed. Horrified at the details, but impressed with the whole.

This project is the epitome of "I wrote it quickly and just made it work."

Fortunately, he and I both agree that a rewrite is in order, but at 76k lines (without styling or configuration), it's quite the undertaking.

------

Amusing: after running the codebase through `wc`, it apparently sums to half the word count of "War and Peace"

Here are the reasons why I don't like IPv6.

Now I'll be honest, I hate IPv6 with all my heart. So I'm not supporting it until inevitably it becomes the de facto standard of the internet. In home networks on the other hand.. huehue...

The main reason why I hate it is because it looks in every way overengineered. Or rather, poorly engineered. IPv4 has 32 bits worth, which translates to about 4 billion addresses. IPv6 on the other hand has 128 bits worth of addresses.. which translates to.. some obscenely huge number that I don't even want to start translating.

That's the problem. It's too big. Anyone who's worked on the internet for any amount of time knows that the internet on this planet will likely not exceed a number of machines equal to about 1 or 2 extra bits (8.6B and 17.2B respectively). Now of course 33 or 34 bits in total is unwieldy, it doesn't go well with electronics. From 32 you essentially have to go up to 64 straight away. That's why 64-bit processors are.. well, 64 bits. The memory grew larger than the 4GB that a 32-bit processor could support, so that's what happened.
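For reference, the address counts behind those bit widths are easy to check. A quick sketch (the helper name `address_count` is just for illustration):

```python
def address_count(bits: int) -> int:
    """Number of distinct addresses representable with the given bit width."""
    return 2 ** bits


for bits in (32, 33, 34, 64, 128):
    print(f"{bits:>3} bits -> {address_count(bits):,} addresses")

# How many complete IPv4-sized address spaces fit into 64 bits.
ipv4_spaces_in_64_bits = address_count(64) // address_count(32)
```

So 64 bits already holds 2^32 copies of the entire IPv4 address space, which is the scale being argued about here.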

The internet could've grown that way too. Heck it probably could've become 64 bits in total of which 34 are assigned to the internet and the remaining bits are for whatever purposes large IP consumers would like to use the remainder for.

Whoever designed IPv6 however.. nope! Let's give everyone a /64 range, and give them quite literally an IP pool far, FAR larger than the entire current internet. What's the fucking point!?

The IPv6 standard is far larger than it should've been. It should've been 64 bits instead of 128, and it should've been separated differently. What were they thinking? A bazillion colonized planets' internetworks that would join the main internet as well? Yeah that's clearly something that the internet will develop into. The internet which is effectively just a big network that everyone leases and controls a little bit of. Just like a home network but scaled up. Imagine or even just look at the engineering challenges that interplanetary communications present. That is not going to be feasible for connecting multiple planets' internets. You can engineer however you want but you can't engineer around the hard limit of light speed. Besides, are our satellites internet-connected? Well yes but try using one. And those orbit only a few hundred to a few tens of thousands of km up. The latency involved makes it barely usable. Imagine communicating to the ISS, the moon or Mars. That is not going to happen at an internet scale. Not even close. And those are only the closest celestial objects out there.

So why was IPv6 engineered with hundreds of years of development and likely at least a stage 4 civilization in mind? No idea. Future-proofing or poor engineering? I honestly don't know. But as a stage 0 or maybe stage 1 person, I don't think that I or civilization for that matter is ready for a 128-bit internet. And we aren't even close to needing so many bits.

Going back to 64-bit processors and memory. We passed 32-bit address width about a decade ago. But even now, we're only at about twice that size on average. We're not even close to saturating 64-bit address width, and that will likely take at least a few hundred years as well. I'd say that's more than sufficient. The internet should've really become a 64-bit internet too.

ARGH. I wrote a long rant containing a bunch of gems from the codebase at @work, and lost it.

I'll summarize the few I remember.

First, the cliche:

if (x == true) { return true; } else { return false; };

Seriously written (more than once) by the "legendary" devs themselves.
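For anyone who hasn't met this one: when `x` is already a boolean, the branch adds nothing. A tiny sketch in Python (function names are made up for illustration):

```python
def is_ready_verbose(x: bool) -> bool:
    # The cliche, transliterated.
    if x == True:
        return True
    else:
        return False


def is_ready(x: bool) -> bool:
    # Same result for boolean input, without the branch.
    return x
```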

Then, lots of typos in constants (and methods, and comments, and ...) like:

SMD_AGENT_SHCEDULE_XYZ = '5-year-old-typo'

and gems like:

def hot_garbage
  magic = [nil, '']
  magic = [0, nil] if something_something
  success = other_method_that_returns_nothing(magic)
  if success == true
    return true # signal success
  end
end

^ That one is from our glorious self-proclaimed leader / "engineering director" / the junior dev thundercunt on a power trip. Good stuff.

Next up are a few of my personal favorites:

Report.run_every 4.hours # Every 6 hours

Daemon.run_at_hour 6 # Daily at 8am

LANG_ENGLISH = :en

LANG_SPANISH = :sp # because fuck standards, right?

And for design decisions...

The code was supposed to support multiple currencies, but just disregards them and sets a hardcoded 'usd' instead -- and the system stores that string on literally hundreds of millions of records, often multiple times too (e.g. for payment, display fees, etc). and! AND! IT'S ALWAYS A FUCKING VARCHAR(255)! So a single payment record uses 768 bytes to store 'usd' 'usd' 'usd'

I'd mention the design decisions that led to the 35 second minimum pay API response time (often 55 sec), but I don't remember the details well enough.

Also:

The senior devs can get pretty much anything through code review. So can the dev accountants. and ... well, pretty much everyone else. Seriously, I have absolutely no idea how all of this shit managed to get published.

But speaking of code reviews: Some security holes are allowed through because (and I quote) "they already exist elsewhere in the codebase." You can't make this up.

Oh, and another!

In a feature that merges two user objects and all their data, there's a method to generate a unique ID. It concatenates 12 random numbers (one at a time, ofc) then checks the database to see if that id already exists. It tries this 20 times, and uses the first unique one... or falls through and uses its last attempt. This ofc leads to collisions, and those collisions are messy and require a db rollback to fix. gg. This was written by the "legendary" dev himself, replete with his signature single-letter variable names. I brought it up and he laughed it off, saying the collisions have been rare enough it doesn't really matter so he won't fix it.
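For contrast, a minimal sketch of the same retry idea that refuses to fall through. It is in Python rather than the app's language, and `exists` is a hypothetical stand-in for the database lookup, not anything from the real codebase:

```python
import secrets
from typing import Callable


class IdSpaceExhausted(RuntimeError):
    """Raised when no unused id was found within the attempt budget."""


def generate_unique_id(
    exists: Callable[[str], bool],
    digits: int = 12,
    max_attempts: int = 20,
) -> str:
    for _ in range(max_attempts):
        # Build the whole candidate in one go instead of digit-by-digit appends.
        candidate = "".join(secrets.choice("0123456789") for _ in range(digits))
        if not exists(candidate):
            return candidate
    # Never hand back an id that is known to collide.
    raise IdSpaceExhausted(f"no free id after {max_attempts} attempts")
```

Even this only narrows the window: two concurrent merges can still pick the same free id between the check and the insert, so a unique constraint on the column would still be needed.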

Yep, it's garbage all the way down.

I am much too tired to go into details, probably because I left the office at 11:15pm, but I finally finished a feature. It doesn't even sound like a particularly large or complicated feature. It sounds like a simple, 1-2 day feature until you look at it closely.

It took me an entire fucking week. and all the while I was coaching a junior dev who had just picked up Rails and was building something very similar.

It's the model, controller, and UI for creating a parent object along with 0-n child objects, with default children suggestions, a fancy ui including the ability to dynamically add/remove children via buttons. and have the entire happy family save nicely and atomically on the backend. Plus a detailed-but-simple listing for non-technicals including some absolutely nontrivial css acrobatics.

After getting about 90% of everything built and working and beautiful, I learned that Rails does quite a bit of this for you, through `accepts_nested_attributes_for :collection`. But that requires very specific form input namespacing, and building that out correctly is flipping difficult. It's not like I could find good examples anywhere, either. I looked for hours. I finally found a rails tutorial video linked from a comment on a SO answer from five years ago, and mashed its oversimplified and dated examples with the newer documentation, and worked around the issues that of course arose from that disastrous pairing.

like.

I needed to store a template of the child object markup somewhere, yeah? The video had me trying to store all of the markup in a `data-fields=" "` attrib. wth? I tried storing it as a string and injecting it into javascript, but that didn't work either. parsing errors! yay! good job, you two.

So I ended up storing the markup (rendered from a rails partial) in an html comment of all things, and pulling the markup out of the comment and gsubbing its IDs on document load. This has the annoying effect of preventing me from using html comments in that partial (not that i really use them anyway, but.)

Just.

Every step of the way on building this was another mountain climb.

* singular vs plural naming and routing, and named routes. and dealing with issues arising from existing incorrect pluralization.

* reverse polymorphic relation (child -> x parent)

* The testing suite is incompatible with the new rails6. There is no fix. None. I checked. Nope. Not happening.

* Rails6 randomly and constantly crashes and/or caches random things (including arbitrary code changes) in development mode (and only development mode) when working with multiple databases.

* nested form builders

* styling a fucking checkbox

* Making that checkbox (rather, its label and container div) into a sexy animated slider

* passing data and locals to and between partials

* misleading documentation

* building the partials to be self-contained and reusable

* coercing form builders into namespacing nested html inputs the way Rails expects

* input namespacing redux, now with nested form builders too!

* Figuring out how to generate markup for an empty child when I'm no longer rendering the children myself

* Figuring out where the fuck to put the blank child template markup so it's accessible, has the right namespacing, and is not submitted with everything else

* Figuring out how the fuck to read an html comment with JS

* nested strong params

* nested strong params

* nested fucking strong params

* caching parsed children's data on parent when the whole thing is bloody atomic.

* Converting datetimes from/to milliseconds on save/load

* CSS and bootstrap collisions

* CSS and bootstrap stupidity

* Reinventing the entire multi-child / nested params / atomic creating/updating/deleting feature on my own before discovering Rails can do that for you.

Just.

I am so glad it's working.

I don't even feel relieved. I just feel exhausted.

But it's done.

finally.

and it's done well. It's all self-contained and reusable, it's easy to read, has separate styling and reusable partials, etc. It's a two line copy/paste drop-in for any other model that needs it. Two lines and it just works, and even tells you if you screwed up.

I'm incredibly proud of everything that went into this.

But mostly I'm just incredibly tired.

Time for some well-deserved sleep.

LPT: NEVER accept a freelance job without looking at the project's source first

Client: I have a project made by a company that is now abandoning it, I want you to fix some bugs

Me: Okay, can you:

1) Give me a build to test the current state of the game

2) Tell me what the bugs are

3) Show me the source

4) Tell me your budget

Client: *sends a list of 10 bugs* Here's the APK and to give you the project I'll need you to sign an NDA

Me: Sure...

*tests build*

*sees at least 20 bugs*

*still downloading source*

*bugs look quite easy to fix should be done under an hour*

Me: Okay, so, I can fix each bug for $10 and I can do 2 today

Client: Okay can you fix 8 bugs today for $40??

*sigh*

Me: No I cannot.

Client: okay then 2 today for $20 is fine, I want a refund if you can't fix them today

*sigh*

Me: Look dude, this isn't the first time I am doing this, aight? I'll fix the bugs today, you can pay me after you check they are done, savvy?

Client: okay

*source is downloaded*

*literal apes wrote the scripts, commented out code EVERYWHERE

Debug logs after every line printing every frame causing FPS drops, empty objects in the scene

multiple unused UI objects

everything is spaghetti*

*give up, after 2 hours of hell*

*tfw averted an order cancellation by not taking the order and telling client that they can pay me after I am done*

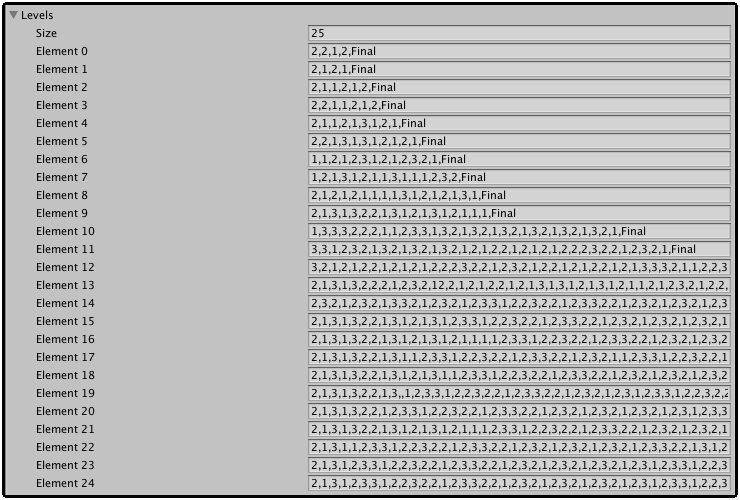

Attached is an image of a level object pool

It's an array with each element representing a level.

The numbers and "Final" are ids for objects in an object pool

The whole string is .Split(',') into an array (RIP MEMORY BTW) and then a loop goes through each element in the split array and instantiates the object from an object pool
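One cheap way to take the sting out of that pattern is to parse each level string once and reuse the result. A rough sketch in Python rather than the project's C#, with made-up level data (the real strings are only in the screenshot):

```python
from functools import lru_cache
from typing import Tuple

# Hypothetical level table: one comma-separated string of pool ids per level.
LEVELS = ["1,2,2,3,Final", "4,1,Final"]


@lru_cache(maxsize=None)
def level_object_ids(level_index: int) -> Tuple[str, ...]:
    # Split once per level; later calls return the cached tuple
    # instead of allocating a fresh array every time the level loads.
    return tuple(part.strip() for part in LEVELS[level_index].split(","))
```

Storing the levels as arrays of ids in the first place would avoid the parsing entirely; the cache is just the smallest change.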


Java. AGAIN. 😡

so, I am trying to get a csv opened and read, and then search through it based on values. Easy peasy lemon squeezy in python, right?

Well, damned be java. You need a buffered reader to read the file. Then you have to "while(has next)" the whole damn thing, then you have to do something with the data that you read one by one, right? Well, not to be disappointed, they do have json libraries, but you **have to install** the plugins for it. Aka you have to manually add the libraries or use some backwards manager like maven.

Gotta admit, jdbc is neat if you're anal about your sql statements, but bring the same jazz to csv, and all the hell will break loose.

Now, if you just read your json data into multiple objects and throw them in an array... Kiss shorthand search's ass goodbye, because this mofo can't search through lists without licking the arse of every object. And now, you have to find another way because this way, you can't group shit you just read from csv. (or, I haven't found a way after 5 hours of dealing with the godforsaken shitshow that java libraries are.)
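For comparison, this is roughly what the "easy peasy" Python version looks like using only the standard library. The sample data and the helper names (`load_rows`, `search`, `group_by`) are invented for the sketch:

```python
import csv
import io
from collections import defaultdict
from typing import Dict, List

SAMPLE_CSV = """name,team,score
ana,red,7
bo,blue,3
cy,red,9
"""


def load_rows(text: str) -> List[Dict[str, str]]:
    # DictReader yields one dict per row, keyed by the header line.
    return list(csv.DictReader(io.StringIO(text)))


def search(rows: List[Dict[str, str]], **criteria: str) -> List[Dict[str, str]]:
    # Keep rows whose columns match every given value (all values are strings).
    return [r for r in rows if all(r.get(k) == v for k, v in criteria.items())]


def group_by(rows: List[Dict[str, str]], column: str) -> Dict[str, List[Dict[str, str]]]:
    groups = defaultdict(list)
    for row in rows:
        groups[row[column]].append(row)
    return dict(groups)
```

Usage would be something like `search(load_rows(SAMPLE_CSV), team="red")` or `group_by(rows, "team")`; for a real file, `open(path, newline="")` replaces the `StringIO`.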

Like, I'm devastated. If this rant doesn't make much sense to you, blame some java library for it.

Shouldn't be too hard.

I wish my classmates didn’t know that I’m good at programming.

Recently, more and more often I am being reached out to by my classmates (and especially by one individual) about the problems they’re having issues with. For example yesterday, a guy fucked up his Git commit and made a bunch of merge conflicts, so I helped him fix this, which then led to WinForms having multiple declarations of same objects.

And I really don’t wanna be rude, and I always try to help, but for the love of god - stop bothering me every 5 minutes while I code, or at 10 PM while I wanna chill out.

Most of the things they have problems with can be solved by 2 minute Googling and I strongly believe that at the university level, you should be able to find solutions for your problems yourself - especially when you’re a programmer.

! rant

Sorry but I'm really, really angry about this.

I'm an undergrad student in the United States at a small state college. My CS department is kinda small but most of the professors are very passionate about not only CS but education and being caring mentors. All except for one.

Dr. John (fake name, of course) did not study in the US. Most professors in my department didn't. But this man is a complete and utter a****le. His first semester teaching was my first semester at the school. I knew more about basic programming than he did. There was more than one occasion where I went "prof, I was taught that x was actually x because x. Is that wrong?" knowing that what I was posing was actually the right answer. Googled to verify first. He said that my old teachings were all wrong and that everything he said was the correct information. I called BS on that, waited until after class to be polite, and showed him that I was actually correct. Denied it.

His accent was also really problematic. I'm not one of those people who feel that a good teacher needs a native accent by any standard (literally only 1 prof in the whole department doesn't), but his English was *awful*. He couldn't lecture for his life and me, a straight A student in high school, was almost bored to sleep on more than one occasion. Several others actually did fall asleep. This... wasn't a good first impression.

It got worse. Much, much worse.

I got away with not having John for another semester before the bees were buzzing again. Operating systems was the second most poorly taught class I've ever been in. Dr John hadn't gotten any better. He'd gotten worse. In my first semester he was still receptive when you asked for help, was polite about explaining things, and was generally a decent guy. This didn't last. In operating systems, his replies to people asking for help became slightly more hostile. He wouldn't answer questions with much useful information and started saying "it's in chapter x of the textbook, go take a look". I mean, sure, I can read the textbook again and many of us did, but the textbook became a default answer to everything. Sometimes it wasn't worth asking. His homework assignments became more and more confusing, irrelevant to the course material, or just downright strange. We weren't allowed to use mutexes. Only semaphores? It just didn't make much sense since we didn't need multiple threads in a critical section at any time. Lastly for that class, the lectures were absolutely useless. I understood the material more if I didn't pay attention at all and taught myself what I needed to know. Usually the class was nothing more than doing other coursework, and I wasn't alone on this. It was the general consensus. I was so happy to be done with prof John.
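For context on why the restriction felt odd: when only one thread may be inside at a time, a semaphore initialised to 1 does the same job as a mutex. A small Python sketch (not the course's actual assignment; `run_counter` is an invented helper):

```python
import threading


def run_counter(lock_like, workers: int = 4, increments: int = 2000) -> int:
    """Increment a shared counter from several threads under the given guard."""
    total = 0

    def work() -> None:
        nonlocal total
        for _ in range(increments):
            # Both Lock and Semaphore support the context-manager protocol:
            # acquire on entry, release on exit.
            with lock_like:
                total += 1

    threads = [threading.Thread(target=work) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total


with_mutex = run_counter(threading.Lock())
with_binary_semaphore = run_counter(threading.Semaphore(1))
```

A counting semaphore only earns its keep when more than one thread is allowed in at once, e.g. `threading.Semaphore(3)` for a pool of three resources.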

Until AI was listed as taught by "staff", I rolled the dice, and it came up snake eyes.

AI was the worst course I've ever been in. Our first project was converting old python 2 code to 3 and replicating the solution the professor wanted. I, no matter how much debugging I did, could never get his answer. Thankfully, he had been lazy and just grabbed some code off stack overflow from an old commit, the output and test data from the repo, and said it was an assignment. Me, being the sneaky piece of garbage I am, knew that `2to3` was a thing, and used that for most of the conversion. Then the edits we needed to make came into play for the assignment, but it wasn't all that bad. Just some CSP and backtracking. Until I couldn't replicate the answer at all. I tried over and over and *over*, trying to figure out what I was doing wrong and could find nothing. Eventually I smartened up, found the source on github, and copy pasted the solution. And... it matched mine? Now I was seriously confused, so I ran the test data on the official solution code from github. Well what do you know? My solution is right.

So now what? Well I went on a scavenger hunt to determine why. Turns out it was a shift in the way streaming happens for some data structures in py2 vs py3, and he never tested the code. He refused to accept my answer, so I made a lovely document proving I was right using the repo. Got a 100. lol.

Lectures were just plain useless. He asked us to solve multivar calculus problems that no one had seen and of course no one did it. He wasted 2 months on MDP. I'd continue but I'm running out of characters.

And now for the kicker. He becomes an a**hole, telling my friends doing research that they are terrible programmers, will never get anywhere doing this, etc. People were *crying* and the guy kept hammering the nail deeper for code that was honestly very good because "his was better". He treats women like delicate objects and it's disgusting. YOU MADE MY FRIEND CRY, GAVE HER A BOX OF TISSUES, AND THEN JUST CONTINUED.

Want to know why we have issues with women in CS? People like this a****le. Don't be prof John. Encourage, inspire, and don't suck. I hope he's fired for discrimination.

What I can figure out:

You give me a large application with multiple projects/classes/files/functions that is tens of thousands of lines long, I can debug it. I can picture the multi-dimensional data structures, objects that contain lists of other objects, all in my head.

What I can't figure out:

Should I or should I not look at the person sitting at their desk when I walk back to my desk from the bathroom?

I would like to know if anyone has created a CSV file which has 10,000,000 objects?

1) The data is received via an API call.

2) The maximum data received is 1000 objects at once. So it needs to be in some loop to retrieve and insert the data.
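One way to approach this is to stream pages straight into the file so the full 10,000,000 objects never sit in memory. A sketch under assumptions: `fetch_page(offset, limit)` is a hypothetical wrapper around the API call (the real endpoint and its paging parameters are not given), and `fake_fetch_page` only exists to make the example runnable:

```python
import csv
import io
from typing import Callable, Iterable, Iterator, List, TextIO

PAGE_SIZE = 1000  # the API's stated maximum per call


def fetch_all(
    fetch_page: Callable[[int, int], List[dict]],
    page_size: int = PAGE_SIZE,
) -> Iterator[dict]:
    """Yield objects page by page until the API returns an empty page."""
    offset = 0
    while True:
        page = fetch_page(offset, page_size)
        if not page:
            return
        yield from page
        offset += len(page)


def write_csv(rows: Iterable[dict], out: TextIO, fieldnames: List[str]) -> int:
    """Write rows as they arrive and return how many were written."""
    writer = csv.DictWriter(out, fieldnames=fieldnames)
    writer.writeheader()
    count = 0
    for row in rows:
        writer.writerow(row)
        count += 1
    return count


def fake_fetch_page(offset: int, limit: int, total: int = 2500) -> List[dict]:
    # Stand-in for the real API: serves `total` objects in slices.
    return [{"id": i, "value": i * 2} for i in range(offset, min(offset + limit, total))]


buffer = io.StringIO()
written = write_csv(fetch_all(fake_fetch_page), buffer, ["id", "value"])
```

With a real file, `open("out.csv", "w", newline="")` replaces the `StringIO`; retries and rate limiting would have to go inside the real `fetch_page`.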

the fking piece of technology which is unreal engine... you spend a lot of time on rigging and preparing a beautiful skeleton in blender, you are finally done, and you want to export it as fbx. But nooooo here are like 100 hoops you have to jump through and another 100 blender settings to set so that the mighty unreal "might" accept your humble offering of an fbx and break it 10 times in the process....

this is ridiculous.

The error messages are useless. "mimimi you have multiple roots" "mimimi same named objects". Ya sure, and when I use the older fbx 6.1 library for the export suddenly these are fine? hmmmmmmm

<.<'

Completed a python project, started as interest but completed as an academic project.

smart surveillance system for museum

Requirements

To run this you need a CUDA enabled GPU on your computer. (Highly recommended)

It will also run on computers without a GPU, i.e. on your processor, but with very poor FPS (around 0.6 to 1 FPS). You can use AWS too.

About the project

One needs to collect lots of images of the artifacts or objects for training the model.

Once the training is done you can simply use the model by editing the 'options' in webcam files and labels of your object.

Features

It continuously tracks the artifact.

Alarm triggers when artifact goes missing from the feed.

It marks the location where it was last seen.

Captures the face from the feed of suspects.

Alarm triggering when artifact is disturbed from original position.

Multiple feed tracking (if the artifact goes missing from feed 1 due to occlusion, a false alarm won't be triggered since it looks for the artifact in the other feeds)
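The multi-feed rule can be pictured as a small piece of state logic. This is only a sketch of the idea, not the project's code; the feed names, the frame threshold, and `update_alarm` are all assumptions:

```python
from typing import Dict, Tuple


def update_alarm(
    detections: Dict[str, bool],
    missing_frames: int,
    threshold: int = 30,  # assumed: frames the artifact may be unseen before alarming
) -> Tuple[bool, int]:
    """Return (alarm, updated_missing_frames) for one frame across all feeds."""
    if any(detections.values()):
        # Seen in at least one feed: occlusion in another feed is not an alarm.
        return False, 0
    missing_frames += 1
    return missing_frames >= threshold, missing_frames
```

Each frame, the caller would pass one boolean per camera (whether the detector found the artifact there) and carry `missing_frames` over to the next call.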

Project link https://github.com/globefire/...

Demo link

https://youtu.be/I3j_2NcZQds

The Zen Of Ripping Off Airtable:

(patterned after The Zen Of Python. For all those shamelessly copying Airtable's basic functionality)

* Columns can be *reordered* for visual priority and ease of use.

* Rows are purely presentational, and mostly for grouping and formatting.

* Data cells are objects in their own right, so they can control their own rendering, and formatting.

* Columns (as objects) are where linkages and other column specific data are stored.

* Rows (as objects) are where row specific data (full-row formatting) are stored.

* Rows are views or references *into* columns which hold references to the actual data cells

* Tables are meant for managing and structuring *small* amounts of data (less than 10k rows) per table.

* Just as you might do "=A1:A5" to reference a cell range in google or excel, you might do "opt(table1:columnN)" in a column header to create a 'type' for the cells in that column.

* An enumeration is a table with a single column, useful for doing the equivalent of Airtable's options and tags. You will never be able to decide if it should be stored on a specific column, on a specific table for ease of reuse, or separately where it and its brothers will visually clutter your list of tables. Take a shot if you are here.

* Typing or linking a column should be accomplishable first through a command-driven type language, held in column headers and cells as text.

* Take a shot if you somehow ended up creating any of the following: an FSM, a custom regex parser, a new programming language.

* A good structuring system gives us options or tags (multiple select), selections (single select), and many other datatypes and should be first, programmatically available through a simple command-driven language like how commands are done in datacells in excel or google sheets.

* Columns are a means to organize data cells, and set constraints and formatting on an entire range.

* Row height can be overridden by the settings of a cell. If a cell overrides the row and column render/graphics settings, then it must be drawn last--drawing over the default grid.

* The header of a column is itself a datacell.

* Columns have no order among themselves. Order is purely presentational, and stored on the table itself.

* The last statement is because this allows us to pluck individual columns out of tables for specialized views.

* *Very* fast scrolling on large datasets, with row and cell height variability is complicated. Thinking about it makes me want to drink. You should drink too before you embark on implementing it.

* Wherever possible, don't use a database.

* If you're thinking about using a database, see the previous koan.

* If you use a database, expect to pick and choose among column-oriented stores, and json, while factoring for platform support, api support, whether you want your front-end users to be forced to install and setup a full database, and if not, what file-based .so or .dll database engine is out there that also supports video, audio, images, and custom types.

* For each time you ignore one of these nuggets of wisdom, take a shot, question your sanity, quit halfway, and then write another koan about what you learned.

* If you do not have liquor on hand, for each time you would take a shot, spank yourself on the ass. For those who think this is a reward, for each time you would spank yourself on the ass, instead *don't* spank yourself on the ass.

* Take a sip if you *definitely* wildly misused terms from OOP, MVP, and spreadsheets.
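A few of the structural koans above (cells as objects, headers as cells, columns owning the cells, rows as views, order stored on the table) fit in a short sketch. The class and method names here are invented for illustration and are one possible reading, not a reference design:

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class Cell:
    value: Any = None

    def render(self) -> str:
        # Cells control their own rendering.
        return "" if self.value is None else str(self.value)


@dataclass
class Column:
    header: Cell  # the header is itself a data cell
    cells: List[Cell] = field(default_factory=list)


@dataclass
class Table:
    columns: Dict[str, Column] = field(default_factory=dict)
    order: List[str] = field(default_factory=list)  # presentational order lives here

    def add_column(self, key: str, title: str, values: List[Any]) -> None:
        self.columns[key] = Column(Cell(title), [Cell(v) for v in values])
        self.order.append(key)

    def row(self, index: int) -> List[Cell]:
        # A row is a view into the columns, not a container of its own.
        return [self.columns[key].cells[index] for key in self.order]

    def reorder(self, new_order: List[str]) -> None:
        if sorted(new_order) != sorted(self.columns):
            raise ValueError("new order must name every column exactly once")
        self.order = list(new_order)
```

Because order is kept on the table, a specialized view can pluck a column out by key without touching the others.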

Title: "Wizard of Alzheimer's: Memories of Magic"

Setting:

You play as an elderly wizard who has been diagnosed with Alzheimer's disease. As your memories fade, so does your grasp on the magical world you once knew. You must navigate the fragmented and ever-changing landscapes of your own mind, casting spells and piecing together the remnants of your magical knowledge to delay the progression of the disease and preserve your most precious memories.

Gameplay:

1. Procedurally generated memories: Each playthrough generates a unique labyrinth of memories, representing different aspects and moments of your life as a wizard.

2. Memory loss mechanic: As you progress through the game, your memories will gradually fade, affecting your abilities, available spells, and the layout of the world around you.

3. Spell crafting: Collect fragments of your magical knowledge and combine them to craft powerful spells. However, as your memory deteriorates, you'll need to adapt your spellcasting to your changing abilities.

4. Mnemonic puzzles: Solve puzzles and challenges that require you to recall specific memories or piece together fragments of your past to progress.

5. Emotional companions: Encounter manifestations of your emotions, such as Joy, Fear, or Regret. Interact with them to gain insight into your past and unlock new abilities or paths forward.

6. Boss battles against Alzheimer's: Face off against physical manifestations of Alzheimer's disease, representing the different stages of cognitive decline. Use your spells and wits to overcome these challenges and momentarily push back the progression of the disease.

7. Memory anchors: Discover and collect significant objects or mementos from your past that serve as memory anchors. These anchors help you maintain a grasp on reality and slow down the rate of memory loss.

8. Branching skill trees: Develop your wizard's abilities across multiple skill trees, focusing on different schools of magic or mental faculties, such as Concentration, Reasoning, or Creativity.

9. Lucid moments: Experience brief periods of clarity where your memories and abilities are temporarily restored. Make the most of these moments to progress further or uncover crucial secrets.

10. Bittersweet ending: As you delve deeper into your own mind, you'll confront the inevitability of your condition while celebrating the rich magical life you've lived. The game's ending will be a poignant reflection on the power of memories and the legacy you leave behind.

In "Wizard of Alzheimer's: Memories of Magic," you'll embark on a deeply personal journey through the fragmented landscapes of a once-powerful mind. As you navigate the challenges posed by Alzheimer's disease, you'll rediscover the magic you once wielded, cherish the memories you hold dear, and leave a lasting impact on the magical world you've called home.

LMAO

The company I worked for had to do deletion runs of customer data (files and database records) every year, mainly for legal reasons. Two months before the next run they found out that the next year would bring multiple times the amount of objects, because a decade ago they had introduced a new solution whose data would be eligible for deletion for the first time.

The existing process was not able to cope with those amounts of objects and froze to death gobbling up every bit of ram on the testing system. So my task was to rewrite the existing code, optimize api calls and somehow I ended up multithreading the whole process. It worked and is most probably still in production today. 💨
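The general shape of such a rewrite, as a sketch only (the real system's API and data model are not described): ids are consumed lazily in batches and handed to a small thread pool, so memory stays bounded. `delete_batch` is a hypothetical function that deletes one batch through the API and returns how many objects it removed:

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import islice
from typing import Callable, Iterable, Iterator, List


def batched(ids: Iterable[int], size: int) -> Iterator[List[int]]:
    """Yield lists of up to `size` ids without materialising the whole input."""
    it = iter(ids)
    while True:
        batch = list(islice(it, size))
        if not batch:
            return
        yield batch


def delete_all(
    ids: Iterable[int],
    delete_batch: Callable[[List[int]], int],
    batch_size: int = 500,
    workers: int = 8,
) -> int:
    deleted = 0
    batches = batched(ids, batch_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        while True:
            # Hand out one window of batches at a time so that only
            # `workers` batches are held in memory at once.
            window = list(islice(batches, workers))
            if not window:
                break
            deleted += sum(pool.map(delete_batch, window))
    return deleted
```

Threads help here because the work is I/O-bound (API calls and database round-trips); error handling and retries per batch are left out of the sketch.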

The next step for improving large language models (if not diffusion) is hot-encoding.

The idea is pretty straightforward:

Generate many prompts, or take many prompts as a training and validation set. Do partial inference, and find the intersection of best overall performance with least computation.

Then save the state of the network during partial inference, and use that for all subsequent inferences. Sort of like LoRA, but for inference, instead of fine-tuning.

Inference, after all, is what matters. And there has to be some subset of prompt-based initializations of a network that perform, regardless of the prompt, (generally) as well as a full inference step.

Likewise with diffusion, there likely exist some priors (based on the training data) that speed up reconstruction or lower the network loss, allowing us to substitute a 'snapshot' that has the correct distribution, without necessarily performing a full generation.

Another idea I had was 'semantic centering' instead of regional image labelling. The idea is to find some patch of an object within an image and ask, across all the patches that belong to that object, which one best describes it: if it were a dog, which patch of the image is "most dog-like"? I could see it as being much closer to how the human brain quickly identifies objects by shortcuts. The size of such patches could be adjusted to minimize the cross-entropy of classification relative to the tested size of each patch (pixel-sized patches, for example, might lead to too high a training loss). Of course it might allow us to do a scattershot 'at a glance' type lookup of potential image contents: even if you get multiple categories for a single pixel, it greatly narrows the total span of categories you need to do subsequent searches for.
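A rough sketch of the lookup part of that idea, assuming some classifier has already produced a score per patch and per class. The `scores` layout and both function names are made up for illustration, and nothing here covers training or choosing the patch size.

```typescript
// scores[p][c]: hypothetical classifier score of patch p for class c.
type PatchScores = readonly (readonly number[])[];

// Index of the patch that scores highest for one class (the "most dog-like" patch).
function mostRepresentativePatch(scores: PatchScores, classIndex: number): number {
  let best = -1;
  let bestScore = -Infinity;
  for (let p = 0; p < scores.length; p++) {
    const s = scores[p][classIndex];
    if (s > bestScore) {
      bestScore = s;
      best = p;
    }
  }
  return best; // -1 if there are no patches
}

// Classes that exceed `threshold` in at least one patch: the narrowed
// candidate set for any later, more expensive search.
function candidateClasses(scores: PatchScores, threshold: number): number[] {
  const found = new Set<number>();
  for (const row of scores) {
    row.forEach((s, c) => {
      if (s > threshold) found.add(c);
    });
  }
  return Array.from(found).sort((a, b) => a - b);
}
```

The second function is the 'at a glance' step: it does not say where anything is, only which categories are worth searching for afterwards.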

In other news I'm starting a new ML blackbook for various ideas. Old one is mostly outdated now, and I think I scanned it (and since buried it somewhere amongst my ten thousand other files like a digital hoarder) and lost it.

I have some other 'low-hanging fruit' type ideas for improving existing and emerging models but I'll save those for another time. -

Very long, random and pretentiously philosophical, beware:

Imagine you have an all-powerful computer, a lot of spare time and infinite curiosity.

You decide to develop an evolutionary simulation, out of pure interest and to see where things will go. You start writing your foundation, basic rules for your own "universe" which each and every thing of this simulation has to obey. You implement all kinds of objects, with different attributes and behaviour, but without any clear goal. To make things more interesting you give this newly created world a spoonful of coincidence, which can randomly alter objects at any given time, at least to some degree. To speed things up you tell some of these objects to form bonds and define an end goal for these bonds:

Make as many copies of yourself as possible.

Unlike the normal objects, these bonds now have purpose and can actively use and alter their environment. Since these bonds can change randomly, their variety is kept high enough to not end in a single type multiplying endlessly. After setting up all these rules, you hit run, sit back in your comfy chair and watch.

You see your creation struggle, a lot of the formed bonds die and disintegrate into their individual parts. Others seem to do fine. They adapt to the rules imposed on them by your universe, they consume the inanimate objects around them, as well as the leftovers of bonds which didn't make it. They grow, split and create duplicates of themselves. Content, you watch your simulation develop. Everything seems stable for now, your newly created life won't collapse anytime soon, so you speed up the time and get yourself a cup of coffee.

A few minutes later you check back in and are happy with the results. The bonds are thriving, much more active than before and some of them even joined together, creating even larger bonds. These new bonds, let's just call them animals (because that's obviously where we're going), consist of multiple different types of bonds, sometimes even dozens, which work together, help each other and seem to grow as a whole. Intrigued what will happen in the future, you speed the simulation up again and binge-watch the entire Lord of the Rings trilogy.

Nine hours passed and your world became a truly mesmerizing place. The animals grew to an insane size, consisting of millions and billions of bonds, their original makeup became opaque and confusing. Apparently the rules you set up for this universe encourage working together more than fighting each other, although fights between animals do happen.

The initial tools you created to observe this world are no longer sufficient to study the inner workings of these animals. They have become a black box to you, but that's not a problem: one of the species has caught your attention. They behave unlike any other animal. While most of the species adapt their behaviour to fit their environment, or travel to another environment which fits their behaviour, these special animals started to alter the existing environment to help their survival. They even began to use other animals in such a way that benefits themselves, which was different from the usual bonds, since this newly created symbiosis was not permanent. You watch these strange, yet fascinating animals develop, without even changing the general composition of their bonds, and are amazed at the complexity of the changes they made to their environment and their behaviour towards each other.

As you observe them build unique structures to protect them from their environment and listen to their complex way of communication (at least compared to other animals in your simulation), you start to wonder:

This might be a pretty basic simulation, these "animals" are nothing more than a few blobs on a screen, obeying their programming and sometimes getting lucky. All this complexity you created is actually nothing compared to a single insect in the real world, but at what point do you draw the line? At what point does a program become an organism?

At what point is it morally wrong to pull the plug? -

This is true, I swear... I once worked on part of a project "optimization" that required running a job on Sidekiq in the background that spawns multiple threaded RPC calls on RabbitMQ (and blocks on I/O) until the jobs are done (or have failed), so that it updates the status of the master object (the one whose associated objects get processed) and sends an email to the ops manager (just a summary email)... instead of using database locks... or dropping the email requirement...

I did it without arguing because I'd already quit the job a while ago... -

Aka... How NOT to design a build system.

I must say that the winning award in that category goes without any question to SBT.

SBT is like trying to use a claymore mine to put some nails in a wall. It most likely will work somehow, but the collateral damage is extensive.

If you ask what build tool would possibly do this... It was probably SBT. Rant applies in general, but my arch nemesis is definitely SBT.

Let's start with the simplest thing: The data format you use to store.

Well. Data format. So use something that can represent data or settings. Do *not* use a programming language, as it cannot be parsed or modified without a foreign interface or the programming language itself...

Which is painful as fuck for automatisation, scripting and thus CI/CD.

Most important regarding the data format - keep it simple and stupid, yet precise and clean. Do not try to e.g. implement complex types - pain without gain. Plain old objects / structs, arrays, primitive types, simple as that.

No (severely) nested types, no lazy evaluation, just keep it as simple as possible. Build tools are complex enough, no need to feed the nightmare.

Data formats *must* have btw a proper encoding, looking at you Mr. XML. It should be standardized, so no crazy mfucking shit eating dev gets the idea to use whatever encoding they like.

Workflows. You know, things like

- update dependency

- compile stuff

- test run

- ...

Keep. Them. Simple.

Especially regarding settings and multiprojects.

http://lihaoyi.com/post/...

If you want to know how to absolutely never ever do it.

Again - keep. it. simple.

Make stuff configurable, allow the CLI tool used for building to pass this configuration in / allow setting of env variables. As simple as that.

Allow project settings - e.g. like repositories - to be set globally vs project wide.
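One way to read the points above, sketched under assumptions: every setting has a single canonical key, the CLI flag and the environment variable name are both derived from it so they cannot drift apart, and lookup is a flat "first source that has it wins" with no merging. The key format, the `BUILD` prefix and all function names are hypothetical and do not belong to any real build tool.

```typescript
type Settings = Record<string, string>;

// Derive both external names from one canonical key, e.g. "repository.url".
function cliFlag(key: string): string {
  return "--" + key.replace(/\./g, "-");
}

function envName(key: string, prefix: string = "BUILD"): string {
  return prefix + "_" + key.replace(/\./g, "_").toUpperCase();
}

// Fixed precedence: CLI, then environment, then project file, then global file.
function resolveSetting(
  key: string,
  argv: readonly string[],
  env: Record<string, string | undefined>,
  projectSettings: Settings,
  globalSettings: Settings,
): string | undefined {
  // Only the `--flag=value` form is accepted, to keep parsing unambiguous.
  const flagPrefix = cliFlag(key) + "=";
  const arg = argv.find((a) => a.startsWith(flagPrefix));
  if (arg !== undefined) return arg.slice(flagPrefix.length);

  const fromEnv = env[envName(key)];
  if (fromEnv !== undefined) return fromEnv;

  return projectSettings[key] ?? globalSettings[key];
}
```

With this, `repository.url` can be set as `--repository-url=...` on the command line or as `BUILD_REPOSITORY_URL` in the environment, and a CI script only has to know one naming rule.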

Not simple are those tools who have...

- more knobs than documentation

- more layers than a wedding cake

- inheritance / merging of settings :(

- CLI and ENV have different names.

- CLI and ENV use different quoting

...

Which brings me to the CLI.

If your build tool has no CLI, it sucks. It just sucks. No discussion. It sucks, hmkay?

If your build tool has a CLI, but...

- it uses undocumented exit codes

- requires absurd or non-quoting (e.g. cannot parse quoted string)

- has unconfigurable logging

- output doesn't allow parsing

- CLI cannot be used for automatisation

It sucks, too... Again, no discussion.

Last point: Plugins and versioning.

I love plugins. And versioning.

Plugins can be a good choice to extend stuff, to scratch some specific itches.

Plugins are NOT an excuse to say: hey, we don't integrate any features or offer plugins by ourselves, go implement your own plugins for that.

That's just absurd.

(precondition: feature makes sense, like e.g. listing dependencies, checking for updates, etc - stuff that most likely anyone wants)

Versioning. Well. Here goes number one award to Node with its broken concept of just installing multiple versions for the fuck of it.

Another award goes to tools without a locking file.

Another award goes to tools who do not support version ranges.

Yet another award goes to tools who do not support private repositories / mirrors via global configuration - makes fun bombing public mirrors to check for new versions available and getting rate limited to death.

In case someone has read so far and wonders why this rant came to be...

I've implemented a sort of on premise bot for updating dependencies for multiple build tools.

Won't be open sourced, as it is company property - but let me tell ya... Pain and pain are two different things. That was beyond pain.

That was getting your skin peeled off while being set on fire pain.

-.- -

I need some advice to avoid stressing myself out. I'm in a situation where I feel stuck between a rock and a hard place at work, and it feels like there's no one to turn to. This is a long one, because context is needed.

I've been working on a fairly big CMS based website for a few years that's turned into multiple solutions that I'm more or less responsible for. During that time I've been optimizing the code base with proper design patterns, setting up continuous delivery, updating packaging etc. because I care that the next developer can quickly grasp what's going on, should they take over the project in the future. During that time I've been accused of over-engineering, which to an extent is true. It's something I've gotten a lot better at over the years, but I'm only human and error prone, so sometimes that's just how it is.

Anyways, after a few years of working on the project I get a new colleague that's going to help me on my CMS projects. It doesn't take long for me to realize that their code style is a mess. Inconsistent line breaks and naming conventions, really god awful anti-pattern code. There's no attempt to mimic the code style I've been using throughout the project, it's just complete chaos. The code "works", although it's not something I'd call production code. But they're new and learning, so I just sort of deal with it and remain patient, pointing out where they could optimize their code, teaching them basic object oriented design patterns like... just using freaking objects once in a while.

Fast forward a few years until now. They've learned nothing. Every time I read their code it's the same mess it's always been.

Concrete example: a part of the project uses Vue to render some common components in the frontend. Looking through the code, there is currently *no* attempt to include any air between functions, or any part of the code for that matter. Everything gets transpiled and minified so there's absolutely NO REASON to "compress" the code like this. Furthermore, they have often directly manipulated the DOM from the JavaScript code rather than rendering the component based on the model state. Completely rendering the use of Vue pointless.

And this is just the frontend part of the code. The backend is often orders of magnitude worse. They will - COMPLETELY RANDOMLY - sometimes leave in 5-10 lines of whitespace for no discernable reason. It frustrates me to no end. I keep asking them to verify their staged changes before every commit, but nothing changes. They also blatantly copy/paste bits of my code to other components without thinking about what they do. So I'll have this random bit of backend code that injects 3-5 dependencies there's simply no reason for and aren't being used. When I ask why they put them there I simply get a “I don't know, I just did it like you did it”.

I simply cannot trust this person to write production code, and the more I let them take over things, the more technical debt we accumulate. I have talked to my boss about this, and things have improved, but nowhere near where I need it to be.

On the other side of this are my project manager and my boss. They, of course, both want me to implement solutions with low estimates, and as fast and simply as possible. Which would be fine if I wasn't the only person fighting against this technical debt on my team. Add in the fact that specs are oftentimes VERY implicit, so I'm stuck guessing what we actually need and having to constantly ask if this or that feature should exist.

And then, out of nowhere, I get assigned another project after some colleague quits, during a time I’m already overbooked. The project is very complex and I'm expected to give estimates on tasks that would take me several hours just to research.

I'm super stressed and have no one I can turn to for help, hence this post. I haven't put the people in this post in the best light, but they're honestly good people that I genuinely like. I just want to write good code, but it's like I have to fight for my right to do it. -

Just spent an hour salvaging some code from an app project I abandoned so I can reuse it in the future and add what I salvaged to a portfolio of small things I've made.

It was a simple multiple player name menu that generated player objects once the user was done entering names.

Loads of potential future uses.

No point letting it sit inside an abandoned project even if it is somewhat trivial to reproduce. -

I'm reading some React/TypeScript code and I haven't worked much with any other language or paradigm where I might have a function return multiple objects that are different types.

e.g.

const { sna, foo, bar } = useWhatever();

I mean I guess it's just unpacking properties from the actual return object but that isn't apparent to a newcomer at a glance (if I remember correctly)
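To make that guess concrete, here is a small sketch. `useWhatever` is a stand-in written for this example, not a real React hook, and the property types are assumptions. The function returns one object; the destructuring line just pulls three of its properties into local constants.

```typescript
// One return value: a single object whose properties happen to have different types.
interface WhateverResult {
  sna: number;
  foo: string;
  bar: () => string;
}

// Hypothetical stand-in for a custom hook.
function useWhatever(): WhateverResult {
  return { sna: 42, foo: "hello", bar: () => "called bar" };
}

// Destructuring: shorthand for reading three properties of the returned object.
const { sna, foo, bar } = useWhatever();

// The same thing written out longhand.
const whole = useWhatever();
const snaLonghand = whole.sna;
const fooLonghand = whole.foo;
const barLonghand = whole.bar;
```

Because the return type is declared, the compiler knows `sna` is a number and `bar` is a function even though they arrive in one object, which is why this pattern reads as "multiple return values" in typed React code.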

https://softwareengineering.stackexchange.com/...

Looking for additional insights from the wise devrant community -

I love and hate javascript. I set out to do a fully ajax/state driven form interface that operates with multiple interdependent data objects which all extend a base class.

React/Angular may have been a better call but I just didn't have time so I needed to rapid prototype in jQuery/vanilla JS.

I'm in the midst of learning and refactoring all the ajax calls to promises and then to async/await, so it's a huge learning experience...
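For anyone on the same path, a minimal sketch of the two refactoring steps. `legacyLoad` is a made-up callback-style function standing in for an old ajax helper (it is not jQuery's API), and the record format is invented.

```typescript
type Callback<T> = (value: T) => void;

// Hypothetical callback-style call, standing in for a legacy ajax helper.
function legacyLoad(id: number, onSuccess: Callback<string>, onError: Callback<Error>): void {
  setTimeout(() => {
    if (id > 0) onSuccess(`record-${id}`);
    else onError(new Error("invalid id"));
  }, 0);
}

// Step 1: wrap the callback API in a Promise.
function loadRecord(id: number): Promise<string> {
  return new Promise((resolve, reject) => legacyLoad(id, resolve, reject));
}

// Step 2: consume the Promise with async/await instead of nested callbacks.
async function loadBoth(a: number, b: number): Promise<string[]> {
  const first = await loadRecord(a);
  const second = await loadRecord(b); // runs after the first load has finished
  return [first, second];
}
```

Errors passed to `onError` become rejections, so callers of `loadBoth` can use an ordinary `try`/`catch` around the `await`.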

Meanwhile I've got to build objects to represent the data on the backend, which is all legacy osCommerce/PHP.

Hell of a ride. -

Frustration is starting a very large data object with multiple child objects while a project wide refactor is underway changing the MVC architecture and introducing serialization.

-

Bubble Wrap at Home Depot: The Essential Guide for Safe and Efficient Packaging

Introduction

When it comes to packing and protecting fragile items, bubble wrap stands out as a crucial material. Its air-filled bubbles provide cushioning that guards against impacts, vibrations, and scratches. Home Depot, a leading retailer in home improvement and supplies, offers a range of bubble wrap products suited for various needs. This guide delves into the options available at Home Depot, their benefits, and tips for effectively using bubble wrap.

Types of Bubble Wrap Available at Home Depot

Home Depot stocks a diverse selection of bubble wrap, catering to different packing requirements. Here’s an overview of the types you might encounter:

Standard Bubble Wrap

Standard bubble wrap is the most common type and features 1/2-inch bubbles. This variant is ideal for protecting everyday items during moves or storage. It’s versatile, offering sufficient cushioning for most household goods like dishes, electronics, and picture frames.

Large Bubble Wrap

Large bubble wrap comes with bigger bubbles, typically 3/4 inches in diameter. It provides enhanced protection for larger and more delicate items such as televisions, mirrors, and fragile furniture. The increased bubble size offers superior cushioning and shock absorption.

Anti-Static Bubble Wrap

Anti-static bubble wrap is specially designed for electronic components and sensitive equipment. It prevents static electricity from building up, which can damage electronic circuits. This type of bubble wrap is essential for shipping or storing items like computer parts, circuit boards, and other tech gadgets.

Perforated Bubble Wrap

Perforated bubble wrap features evenly spaced perforations that make it easy to tear off sections without needing scissors. This convenience is perfect for packing smaller items or when you need to use bubble wrap in smaller quantities. It’s a practical choice for both home and office use.

Bubble Wrap Rolls and Sheets

Home Depot offers bubble wrap in various roll sizes and pre-cut sheets. Rolls are ideal for larger packing tasks, as they allow you to cut the exact length needed. Pre-cut sheets, on the other hand, are handy for quick packing and can be used directly without additional cutting.

Benefits of Using Bubble Wrap

Bubble wrap offers several benefits that make it a go-to choice for packaging and protection:

Impact Protection

The air-filled bubbles in bubble wrap absorb and disperse shock, reducing the risk of damage during transit. This impact resistance is crucial for protecting fragile items from breakage.

Scratch Prevention

Bubble wrap creates a cushion around items, preventing scratches and scuffs. This is particularly useful for protecting surfaces of electronics, glassware, and furniture.

Versatility

Bubble wrap can be used for a wide range of items and packing scenarios. Whether you’re moving house, shipping products, or storing valuables, bubble wrap adapts to various needs.

Lightweight

Despite its protective qualities, bubble wrap is lightweight and doesn’t add significant weight to your packages. This feature helps in reducing shipping costs and makes handling easier.

Reusable

Many people reuse bubble wrap for multiple packing jobs. Its durability allows it to be effective over several uses, making it an environmentally friendly option.

How to Use Bubble Wrap Effectively

To maximize the benefits of bubble wrap, follow these tips for effective use:

Wrap Items Securely

Ensure that each item is fully covered with bubble wrap. For delicate items, use multiple layers for added protection. Place the bubble wrap with the bubbles facing inward for optimal cushioning.

Fill Empty Spaces

When packing items in boxes, fill any gaps with bubble wrap to prevent movement. This helps in reducing the risk of items shifting during transit.

Seal the Package Well

Once wrapped, secure the bubble wrap with packing tape. Ensure that all edges are sealed to keep the wrap in place and provide a complete protective layer.

Use Appropriate Size

Choose the right type and size of bubble wrap based on the items you’re packing. Larger bubbles are better for big, fragile items, while smaller bubbles are suitable for everyday objects.

Avoid Over-Packing

While bubble wrap is protective, over-packing can lead to unnecessary bulk and weight. Aim for a balance where items are cushioned but the package remains manageable.

Conclusion

Bubble wrap from Home Depot is an indispensable tool for protecting fragile items during moving, shipping, or storage. With various types available, including standard, large, anti-static, and perforated options, you can find the right bubble wrap to meet your needs.

Resource:https://tycoonpackaging.com/product...