
This shit is real.

Guy comes to my desk.

Guy: Do you know Python?

Me: Yes

Guy: I want a program that reads a CSV containing IP addresses and tells which of them are valid.

Me: Sure thing. Show me the CSV file.

Guy: (Shows the file)

Me: (Writes a small function for checking whether the IP is valid)

Me: Done. Here you go.

Guy: You should be using regex.

Me: Why? This is perfect. No need for regex.

Guy: My manager wants a solution using regex only.

Me: Why so?

Guy: I don't know. Can you do it using regex?

Me: Only if you say so. (Searches Stack Overflow. Writes a humongous regex.) Done!

Me: Just out of curiosity, what is your application?

Guy: I will port it to Java. You see, regex is easy to debug.

Me: Ohhh yes. I forgot that. Good luck with your regex.
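For comparison, here is a minimal Python sketch of both approaches. It assumes the CSV holds one IPv4 address in its first column, and all function names are hypothetical. The plain version delegates to the standard-library `ipaddress` module. The "regex only" version uses one common octet-by-octet pattern.

```python
import csv
import ipaddress
import re

# One octet: 250-255, 200-249, 100-199, 10-99 or 0-9 (no leading zeros).
_OCTET = r"(?:25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)"
IPV4_RE = re.compile(rf"{_OCTET}(?:\.{_OCTET}){{3}}")


def is_valid_ipv4(text: str) -> bool:
    """Validate with the standard library instead of a regex."""
    try:
        ipaddress.IPv4Address(text.strip())
        return True
    except ValueError:
        return False


def is_valid_ipv4_regex(text: str) -> bool:
    """The 'regex only' version: the whole string must match the pattern."""
    return IPV4_RE.fullmatch(text.strip()) is not None


def check_csv(lines) -> list[tuple[str, bool]]:
    """Return (address, is_valid) for the first column of every non-empty row."""
    return [(row[0], is_valid_ipv4(row[0])) for row in csv.reader(lines) if row]
```

Both functions agree on ordinary input. The difference is that the second one is the one you have to debug.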

I am an indie game developer and I lead a team of 5 trusted individuals. After our latest release, we bought a larger office and decided to expand our team so that we could implement more features in our games and release them in a reasonable time frame. So I asked everyone to look for people they would like to hire for their respective departments. When the list was ready, I sent out a bunch of job offers for a "training trial period". The idea was that everyone would teach the newbies in their department how we do things and then, after a month, select those who seemed to be the best. Our original team was:

-Two coders

-One sound guy (because "musician" is too mainstream)

-Two artists

I did coding, concept art (and character drawings) and story design, so I decided to be a "coding mentor" (?).

We planned to recruit

-Two coders

-One sound guy

-One artist (two if we encountered a great artstyle)

When the day finally arrived, I decided to hide the fact that I am the founder and invent a phantom boss, so that they wouldn't get stressed or try flattery.

So out of 7, 5 people came for the "coding trial session": 3 guys and 2 girls. My teammate and I started by giving them a brief introduction to the workings of our engine and then gave them a few exercises to help them understand it better. Fast forward a few days, and we were teaching them how we implement multiple languages in our games using Excel. The original English text is written in the first column, and we then send the sheet to translators so that they can easily compare and translate the content side by side, with one column reserved for each language. We then break it down and convert the whole thing into an engine-friendly, CSV-like format. When we concluded, we asked them if they had any questions. There was this smartass who could not get over the fact that we were using Excel. The conversation went like this (almost word for word):

Smartass: "Why would you even use that primitive software? How stupid is that? Why don't you get some skills before teaching us about your shit logic?"

Me:*triggered* "Oh yeah? Well that's how we do stuff here. If you don't like it, you can simply leave."

Smartass: "You don't know who I am, do you? I am friends with the boss of this company. If I wanted, I could have all of you fired on a whim."

Me:"Oh, is that right?"

Smartass:"Damn right it is. Now that you know who I am, you better treat me with some respect."

Me: "What if I told you that I am not just a coder?"

Smartass:"Considering your lack of skills, I assume that you are also a janitor? What was he thinking? Hiring people like you, he must have been desperate."

Me:"What if I told you that I am the boss?"

Smartass:"Hah! You wish you were."*looks towards my teammate while pointing a thumb at me* "Calling himself the boss, who does he think he is?"

Teammate:*looks away*.

Smartass:*glances back and forth between me and my teammate while looking confused* *realizes* *starts sweating profusely* *looks at me with horror*

Me:"Ha ha ha hah, get out"

Smartass:*stands dumbfounded*

Me:"I said, get out"

Smartass:*gathers his stuff and leaves the room*

Me: "Alright, any questions?"*Smiling angrily*

Newcomers: *shake heads furiously*

Me:"Good"

For the rest of the day nobody tried to bother me. I decided to stop posing as an employee and teaching the newcomers, so that from then on I could secretly observe all sessions for incidents like this one. That guy never came back. The good news, however, is that the art and music training was going pretty well.

What really intrigues me, though, is why I keep getting stuck with these annoying people. It's like I am working in customer support or something.

A guy on another team who is regarded by non-programmers as a genius wrote a python script that goes out to thousands of our appliances, collects information, compiles it, and presents it in a kinda sorta readable, but completely non-transferable format. It takes about 25 minutes to run, and he runs it himself every morning. He comes in early to run it before his team's standup.

I wanted to use that data for apps I wrote, but his impossible format made that impractical, so I took apart his code, rewrote it in perl, replaced all the outrageous hard-coded root passwords with public keys, and added concurrency features. My script dumps the data into a memory-resident backend, and my filterable, sortable, taggable web "frontend"(very generous nomenclature) presents the data in html, csv, and json. Compared to the genius's 25 minute script that he runs himself in the morning, mine runs in about 45 seconds, and runs automatically in cron every two hours.
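The author's rewrite was in Perl. As a rough Python illustration of the same two ideas, the sketch below queries hosts in parallel instead of one after another, then emits one dataset as both CSV and JSON. `collect` is a hypothetical stand-in for the real per-appliance query, for example over SSH with public keys.

```python
import csv
import io
import json
from concurrent.futures import ThreadPoolExecutor


def collect(host: str) -> dict:
    """Hypothetical stand-in for the per-appliance query (e.g. SSH with keys)."""
    return {"host": host, "status": "ok"}


def collect_all(hosts: list[str], workers: int = 32) -> list[dict]:
    # Query appliances concurrently; pool.map keeps results in input order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(collect, hosts))


def to_csv(records: list[dict]) -> str:
    if not records:
        return ""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=sorted(records[0]))
    writer.writeheader()
    writer.writerows(records)
    return buf.getvalue()


def to_json(records: list[dict]) -> str:
    return json.dumps(records, indent=2)
```

Threads fit this job because the work is mostly waiting on the network. A cron entry can then run it every two hours without anyone coming in early.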

Optimized!

Today I discovered that we have a CSV export button for an order transaction system, on a page which is completely disconnected from the rest of the website.

It is only being called by an internal server, used by our Data department.

They run selenium to click the button.

Then they import the CSV into a database.

That database is accessed by an admin panel.

That admin panel has an excel export button.

Which is clicked by our CFO. But he got bored of clicking, so he uses IFTTT to schedule a download of the XLS and import it into Google Sheets.

That sheet uses a Salesforce data connector.

Marketing then sends email campaigns based on that Salesforce data...

😒

PM: Hey. I need this data right away so I can generate some reports!

/me runs some queries, creates some csv files, emails results

PM: Thanks! I'll look at this after I get back from vacation!

So I was applying for a research position in the linguistics department, and had the interview today.

Prof: So you know Excel, right?

Me: (shows him a project to prove I at least know CSV files)

Prof: Ok, so you know Excel.

Me: Yeah, kinda.

Prof: Ok, that's good. Because right now we are using Amazon Turk, and the data they return, which are Excel files, aren't really the way we want them.

Me: Ok sounds like a parser can fix it......

Prof: Yeah.... the students in the lab are doing it manually now

(Dead silence)

Prof: Ok, moving on to the next matter.


Website A = Website B

Script to import CSV file on Website A = works

Script to import CSV file on Website B = !works

Clock = 4am

Me = null

[Certified CMS Of Doom™ moment]

Ah yes, the good old "generate a huge CSV just to know how many rows there are"
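If the only question is "how many rows?", the database can answer it without any export. If a CSV already exists, it can be streamed rather than loaded. Below is a minimal Python sketch of both options. The `orders` table in the usage is hypothetical.

```python
import csv
import sqlite3


def count_rows_sql(conn: sqlite3.Connection, table: str) -> int:
    # Let the database count; no export needed. `table` must be a trusted name.
    (n,) = conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()
    return n


def count_rows_csv(lines, has_header: bool = True) -> int:
    # Stream records one at a time; csv.reader joins quoted multi-line fields,
    # so this counts records, not physical lines.
    total = sum(1 for _ in csv.reader(lines))
    return total - 1 if has_header and total else total
```

Usage: `count_rows_sql(sqlite3.connect("shop.db"), "orders")` (both names hypothetical) returns a single integer. No CSV is ever generated.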


*wrestling commentator voice*

"In this week's episode of encoding hell:

The iiiinnnfamous UTF-8 Byte Order Mark veeeersus PHP!"

For an online shop we developed, a CSV upload feature is currently under review by our client. Before we developed this feature, we created a very precise specification together with the client, including the file format and encoding (UTF-8).

After the first test day, the client informed us that there were invalid characters after processing the uploaded file.

We checked the code and compared the customer's file with our template.

The file was encoded in ISO-8859-1, NOT in UTF-8 as specified.

But whatever, we added an encoding check, so both encodings are accepted from now on.

Well well well welly welly fucking well...

Test day 2: We receive an email from said client saying that the CSV is not working, again.

This time: UTF-8 encoding, but some rows had more columns, with different values, than specified.

Fucking hell.

We tell the customer that.

(I was about to write a nice death threat novel to them, but my boss held me back)

Testing day 3, today:

"The uploading feature is not working with our file, please fix it."

I tried to debug it, but only got misleading errors. After about 30 minutes, at 20 stacks of hatred, I finally had the idea to check the file in a hex editor:

God fucking what!?!!?!11?!1!!!?2!!

The encoding was valid UTF-8, all columns and fields were correct, but this time the file contained something different.

Something the world does not need.

Something nearly as wasteful as driving a monster truck in first gear from NYC to LA.

It was the UTF-8 Byte Order Mark.

3 bytes of pure hell.

Fucking 0xEFBBBF.

The archenemy of PHP and sane people.

If the devil had sex with the ethernet port of a rusty Mac OS X Server, then 9 microseconds later a UTF-8 BOM would have been born.

OK, maybe if PHP would actually cope with these bytes of death without crashing, that would be great.
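All three failure modes in this story can be caught at the boundary. The sketch is in Python rather than the shop's PHP, and its function names are hypothetical. It strips a leading UTF-8 BOM (EF BB BF), falls back to ISO-8859-1 when the bytes are not valid UTF-8, and reports the first row with the wrong column count by number.

```python
import codecs
import csv
import io


def decode_upload(raw: bytes) -> str:
    """Decode an uploaded CSV: drop a UTF-8 BOM, else fall back to ISO-8859-1."""
    if raw.startswith(codecs.BOM_UTF8):
        raw = raw[len(codecs.BOM_UTF8):]  # the 3 bytes EF BB BF
    try:
        return raw.decode("utf-8")
    except UnicodeDecodeError:
        # ISO-8859-1 maps every byte to a character, so this cannot fail;
        # it is a guess, not a detection.
        return raw.decode("iso-8859-1")


def parse_upload(raw: bytes, expected_columns: int) -> list[list[str]]:
    """Parse rows and raise on the first row with the wrong column count."""
    rows = list(csv.reader(io.StringIO(decode_upload(raw), newline="")))
    for number, row in enumerate(rows, start=1):
        if len(row) != expected_columns:
            raise ValueError(
                f"row {number}: expected {expected_columns} columns, got {len(row)}"
            )
    return rows
```

The error message names the offending row, which is more useful to a client than "the upload is not working".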

Our university has a rather small gym, and it tends to get pretty crowded. They have an online counter, so I wrote a Python script that queries the current number of people every minute and logs it in a CSV (no need to get fancy). Hopefully in a week I'll have enough data to spot the quiet times 😎


I received an email from our marketing manager asking me to extract all the email addresses from all the applications, compile them into a CSV, and make sure that CSV doesn't contain duplicates. She wants to send a newsletter to all those addresses, which we don't have permission to email.

I permanently deleted that email.

Ooh this is good.

At my first job, I was hired as a C++ developer. The task seemed easy enough: it was a research project, and the previous developer had died, leaving behind a lot of documentation and some legacy Fortran code. Now, you might not know this, but Fortran can be converted to C quite easily, and then refactored to C++.

Fine, time to read the docs. The research was on pollen levels; I can't really tell more. Mostly advanced maths. I dug through 500+ pages of algebra just to realize there's no way this would ever work. Okay, don't panic, I'm a data analyst, I can handle this.

Let's take a look at the Fortran code, maybe that makes more sense. Turns out it had nothing to do with the task. It looped through some external data I couldn't find anywhere, and that's it. Yay.

So I exported everything we had to a CSV file and wrote a Java program to fit a line with linear regression and filter out the bad records. After that I spent 2 days in a hot server room, hoping that the old Intel Xeon wouldn't break down from sending Java outputs directly to Haskell, but it held its own.

C: hey mate, what's the best tool to open up this 31.1M rows x 106 cols CSV file?

M: Umh...Pandas DataFrame or R DataTable I guess?

C: all right, Excel will do, thanks!

M: erhm...yeah, anytime?

*part rant part developers are the best people in the world*

years back a friend got a job at some non-profit as a program coordinator, and his first task was to "coordinate" the work on creating the new website for the organisation. the website they had was a monster built on some custom cms: 7 languages, 5 years of almost daily content updates, etc. so he asked me if i would take on the job of creating a new website on wordpress. i wasn't really keen on doing it, but he is a good friend so i said ok. i wrote down the SOW, which clearly stated that i would not be responsible for migrating the old content to the new website. i had experience working with non-IT clients, and made sure everyone understood the SOW before the contract was signed. everyone was ok with it. after three weeks my job was done, all milestones and requirements were met. peachy! and then all hell broke loose when the president of the organisation (the most evil person i've met in my life) told my friend that she expected me to migrate the content as well. he tried explaining to her that this was not agreed, that it would cost extra, etc., but she didn't want to hear any of that, despite the fact that she was part of the entire SOW creation process, because she is a micromanaging bitch. in any other situation i wouldn't budge, because we had the contract and i kept the whole paper trail, but since my friend's job was on the line i agreed to do it. my SQL knowledge at the time, and even now, was very rudimentary, and the db organisation of their cms was confusing as fuck... so i spent two days searching tutorials and SO threads and was doing ok, until i got to a problem i couldn't solve on my own. i posted the issue on SO, some guy asked for some clarifications, we went back and forth, and we decided to move to chat. while chatting with him i realised there was no chance for me to do all the work in a few days without a lot of errors, so i offered to pay him to do it. he agreed.
i asked him for his rate. he said if this was community work he would do it for free, but if it was commercial he would charge the standard rate, $50/hr. i told him it was commercial and agreed to his rate. i asked him if he needed an advance payment; he said no need, you'll pay me when the job is done. i sent him the db dumps, after two days he sent me the csv, i checked it, all was good, and i wired him the money.

now compare this working relationship with the relationship with that bitch from the non-profit.

* we met online, on a semi-anonymous forum, and this guy's profile was empty

* he trusted me enough to say that he would do it for free if i wasn't paid either

* i wasn't enough of an asshole to take advantage of that trust

* he did the work without an advance payment

* i paid him the moment i verified the work

faith in humanity restored

Whoever implemented the data import in Numbers on Mac needs to be lined up against a wall and shot with needles until they wish they were dead.

Why on all of god's unholy green and shitty earth would I want data I import (EVEN IN CSV, FOR FUCK'S SAKE) to be delimited by an arbitrary text width? WHAT THE ACTUAL FUCK

WHY WHY why would I EVER want to delimit my carefully structured data by fucking text width instead of new line or comma? AAAAARRRHHH

And what fucking big-brain genius made this the DEFAULT SETTING for imported text AND CSV FILES? IT STANDS FOR COMMA SEPARATED VALUES, YOU FUCK BOI, MAYBE JUST MAYBE I WANT IT SEPARATED BY FUCKING COMMMMMMMAAAAASSSSSS
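When code rather than a GUI does the import, Python's `csv.Sniffer` can guess the separator from a sample instead of assuming fixed widths. The minimal sketch below restricts the guess to comma, semicolon and tab. The function name is hypothetical. `sniff` is a heuristic and raises `csv.Error` when it cannot decide.

```python
import csv
import io


def read_any_csv(text: str) -> list[list[str]]:
    """Guess the delimiter (comma, semicolon or tab) and parse the rows."""
    dialect = csv.Sniffer().sniff(text[:4096], delimiters=",;\t")
    return list(csv.reader(io.StringIO(text, newline=""), dialect))
```

Restricting `delimiters` keeps the sniffer from picking a letter or digit that happens to appear consistently on every line.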

I've been pleading for nearly 3 years with our IT department to allow the web team (me and one other guy) to access the SQL Server on location via VPN so we could query MSSQL tables directly (read-only mind you) rather than depend on them to give us a 100,000+ row CSV file every 24 hours in order to display pricing and inventory per store location on our website.

Their mindset has always been that this would be a security hole and we'd be jeopardizing the company. (Give me a break! There are about a dozen other ways our network could be compromised in comparison to this, but they're so deeply forged in M$ server and active directories that they don't even have a clue what any decent script kiddie with a port sniffer and *nix could do. I digress...)

So after three years of pleading with the old IT director, (I like the guy, but keep in mind that I had to teach him CTRL+C, CTRL+V when we first started building the initial CSV. I'm not making that up.) he retired and the new guy gave me the keys.

Worked for a week with my IT department to get an Openswan (IPsec) tunnel set up between my Ubuntu web server and their SQL Server (Microsoft). After a few days of pulling my hair out along with our web hosting admins and our IT Dept staff, we got them talking.

After that, I was able to install a DreamFactory instance on my web server, and now we have REST endpoints for all tables related to inventory, products, pricing, and availability!

Good things come to those who are patient. Now if I could get them to give us back Dropbox without having to SOCKS5-proxy through the web server, I'd be set. I'll rant about that next.

http://tapsla.sh/e0jvJck7

EXCEL YOU FUCKING PIECE OF SHIT! Don't get me wrong, it's useful and it works, usually... Buckle up, you're in for a ride. SO HERE WE FUCKING GO: TRANSLATED FORMULA NAMES? SUCKS BUT MANAGEABLE. WHAT'S REALLY FUCKED UP IS THE GERMAN VERSION!

DID YOU HEAR ABOUT .csv? It stands for MOTHERFUCKING COMMA SEPARATED VALUES! GUESS WHAT SOME GENIUS AT MICROSOFT FIGURED? Hey guys, let's use a FUCKING SEMICOLON INSTEAD OF A COMMA IN THE GERMAN VERSION! LET'S JUST FUCK EVERYONE EXPORTING ANY DATA FROM ANY WEBSITE!

The workaround is to go to your computer settings, YOU CAN'T FUCKING ADJUST THIS IN EXCEL!, change the language of the OS to English, open the file and change it back to German. I mean, come on guys, what is this shit?

AND DON'T GET ME STARTED ON ENCODING! äöü and that stuff usually works, but in Switzerland we also use French stuff, that then usually breaks the encoding for Excel if the OS language is set to German (both on Windows and Mac, at least they are consistent...)

To whoever approved, implemented or tested it: FUCK YOU, YOU STUPID SHITFUCK, with love: me
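On the exporting side, a common workaround is to write the file the way German-locale Excel expects it. The minimal Python sketch below assumes two behaviours you should verify against your own Excel: that a German locale uses `;` as the list separator, and that Excel reads a leading UTF-8 BOM (written by the `utf-8-sig` codec) as a UTF-8 signature, so accents like é and è survive. The function name is hypothetical.

```python
import csv
import io


def to_excel_de_bytes(rows: list[list[str]]) -> bytes:
    """Serialize rows as semicolon-separated CSV with a UTF-8 BOM.

    Assumptions: German-locale Excel splits on ';' and detects UTF-8 via the BOM.
    """
    buf = io.StringIO(newline="")
    csv.writer(buf, delimiter=";").writerows(rows)
    # 'utf-8-sig' prepends the BOM bytes EF BB BF before the encoded text.
    return buf.getvalue().encode("utf-8-sig")
```

A website can offer these bytes as a separate "Excel (DE)" download next to the plain comma-separated file. That avoids changing the OS language just to open it.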

The solution for this one isn't nearly as amusing as the journey.

I was working for one of the largest retailers in NA as an architect. Said retailer had over a thousand big box stores, IT maintenance budget of $200M/year. The kind of place that just reeks of waste and mismanagement at every level.

They had installed a system to distribute training and instructional videos to every store, as well as recorded daily broadcasts to all store employees, as a way of reducing the time management spent with employees in the morning. This system had cost a cool 400M USD, not including labor and upgrades, for round 1. Round 2 was another 100M to add a storage buffer to each store, because they'd failed to account for the fact that the internet connections at the stores and the outbound pipe from the DC weren't capable of running the public-facing e-commerce and streaming all the video data to every store in real time. Typical massive enterprise clusterfuck.

Then security gets involved. Each device at stores had a different address on a private megawan. The stores didn't generally phone home, home phoned them as an access control measure; stores calling the DC was verboten. This presented an obvious problem for the video system because it needed to pull updates.

The brilliant Infosys resources had a bright idea to solve this problem:

- Treat each device IP as an access key for that device (avg. 15 per store).

- Verify the request IP, then issue a redirect with ANOTHER IP unique to that device, which the firewall would allow to ingress only to the video subnet

- Do it all with the F5

A few months later, the networking team comes back and announces that after months of work and tens of person-years, they can't implement the solution, because iRules have a size limit and they would need more than 60,000 lines or 15,000 rules to implement it. Sad trombones all around.

Then, a wild DBA appears, steps up to the plate and says he can solve the problem with the power of ORACLE! A few months later he comes back with some absolutely batshit solution that stored the individual octets of an IPv4 address and used multiple nested queries to the same table to emulate subnet masking through some temp-table-spanning voodoo. Time to complete: 2-4 minutes per request. He too eventually gives up the fight, sort of, in that backhanded way DBAs tend to do everything. I wish I had paid more attention to that abortion, because its rationale and mechanics were just staggeringly Rube Goldberg and should have been documented for posterity.

So I catch wind of this sitting in a CAB meeting. I hear them talking about how there's "no way to solve this problem, it's too complex, we're going to need a lot more databases to handle this." I tune in and gather all it really needs to do, since the ingress firewall is handling the origin IP checks, is convert the request IP to video ingress IP, 302 and call it a day.

While they're all grandstanding and pontificating, I fire up Visual Studio and:

- write a method that encodes the incoming request IP into a single uint32

- write an HTTP module that keeps an in-memory dictionary of (uint32, string) entries mapping request to response, converts the request IP and 302s the call, with blackhole support

- convert all the mappings in the spreadsheet attached to the meetings into a csv, dump to disk

- write a WPF application to make managing the IP database easy in the short term

- deploy the solution to one of our stage boxes

- add a TODO to eventually move this to a database
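The core of that list fits in a few functions. The original was a .NET HTTP module; this is a Python sketch of just the lookup logic. It encodes the dotted IPv4 address as one uint32, loads the mappings from a CSV, and returns either the redirect target or `None` to blackhole the request. The two-column `request_ip,video_ip` layout with no header is a hypothetical stand-in for the spreadsheet.

```python
import csv
import ipaddress
from typing import Optional


def ip_to_uint32(ip: str) -> int:
    """Encode a dotted IPv4 address as a single unsigned 32-bit integer."""
    a, b, c, d = ipaddress.IPv4Address(ip).packed  # four bytes, big-endian
    return (a << 24) | (b << 16) | (c << 8) | d


def load_mappings(lines) -> dict[int, str]:
    """Hypothetical CSV layout: request_ip,video_ip (no header row)."""
    return {ip_to_uint32(req): video for req, video in csv.reader(lines)}


def redirect_target(mappings: dict[int, str], request_ip: str) -> Optional[str]:
    """Return the target for a 302, or None to blackhole unknown callers."""
    try:
        key = ip_to_uint32(request_ip)
    except ValueError:
        return None
    return mappings.get(key)
```

With the whole table in a dictionary, each request is a single hash lookup. That is why a flat file on one node can outlast the proposed database cluster.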

All this took about 5 minutes. I interrupt their conversation to ask them to retarget their test to the port I exposed on the stage box. Then watch them stare in stunned silence as the crow grows cold.

According to a friend who still works there, that code is still running in production on a single node to this day. And still running on the same static file database.

#TheValueOfEngineers

I just got four CSV reports sent to me by our audit team, one of them zipped because it was too large to attach to email.

I open the three smaller ones and it turns out they copied all the (comma separated) data into the first column of an Excel document.

It gets better.

I unzip the "big" one. It's just a shortcut to the report, on a network share I don't have access to.

They zipped a shortcut.

Sigh. This'll be a fun exchange.

Customer: Can you do a database query for me?

Me: Made the query and sent them the result as a CSV file.

Customer: Is it possible to send it as an Excel worksheet? The columns don't have the right width.

Me: Resized the columns to the right width, saved it as an .xlsx file and sent it back.

Let me ask you something: why do most people prefer MS Word over a simple plain-text document when writing a manual? Use Markdown!

You can search and index it (grep, ack, etc)

You don't waste time formatting it.

It's portable across operating systems.

You only need a simple text editor.

You can export it to other formats, like PDF to print it!

You can use a version control system to version it.

Please! stop using those other formats. Make everyone's life easier.

Same applies when sharing tables. Simple CSV files are enough most of the time.

Thank you!!?!

I always have a guilt complex about saying that I'm a Data Scientist, when I'm actually spending weeks scraping and annotating data into a CSV file.


I’m on this ticket, right? It’s adding some functionality to some payment file parser. The code is atrocious, but it’s getting replaced with a microservice definitely-not-soon-enough, so i don’t need to rewrite it or anything, but looking at this monstrosity of mental diarrhea … fucking UGH. The code stink is noxious.

The damn thing reads each line of a csv file, keeping track of some metadata (blah blah) and the line number (which somehow has TWO off-by-one errors, so it starts on fucking 2, and yes, the goddamn column headers on line #0 are recorded as line #2), does the same setup shit on every goddamned iteration, then calls a *second* parser on that line. That second parser in turn stores its line state, the line number, the batch number (…which is actually a huge object…), and a whole host of other large objects on itself, and uses exception throwing to communicate, catches and re-raises those exceptions as needed (instead of using, you know, if blocks to skip like 5 lines), and then writes the results of parsing that one single line to the database, and returns. The original calling parser then reads the data BACK OUT OF THE DATABASE, branches on that, and does more shit before reading the next line out of the file and calling that line-parser again.

JESUS CHRIST WHAT THE FUCK

And that’s not including the lesser crimes like duplicated code, misleading var names, and shit like defining class instance constants but … first checking to see if they’re defined yet? They obviously aren’t because they aren’t anywhere else in the fucking file!

Whoever wrote this pile of fetid muck must have been retroactively aborted for their previous crimes against intelligence, somehow survived the attempt, and is now worse off and re-offending.

Just.

Asdkfljasdklfhgasdfdah
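For contrast, here is a minimal single-pass sketch in Python. It does setup once, takes line numbers from `DictReader.line_num` (the 1-based physical line where each record ends, so the header is line 1), skips blank rows with a plain `if`, and collects errors instead of throwing them around. Results stay in memory rather than being re-read from the database. The `payee` and `amount_cents` columns are hypothetical.

```python
import csv
from dataclasses import dataclass


@dataclass
class ParsedLine:
    line_number: int  # 1-based physical line in the file where the record ends
    payee: str
    amount_cents: int


def parse_payment_file(lines) -> tuple[list[ParsedLine], list[str]]:
    """Single pass: set up once, skip with plain ifs, collect errors."""
    reader = csv.DictReader(lines)
    parsed, errors = [], []
    for row in reader:
        if not any(row.values()):  # e.g. a line of bare commas: just skip it
            continue
        try:
            amount = int(row["amount_cents"])
        except (TypeError, ValueError):  # missing field or non-numeric text
            errors.append(f"line {reader.line_num}: bad amount {row['amount_cents']!r}")
            continue
        parsed.append(ParsedLine(reader.line_num, row["payee"], amount))
    return parsed, errors
```

Whatever the caller does next (a batch insert, a summary) can branch on `parsed` and `errors` directly, with no round trip through the database.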

YOU CANNOT parse CSV by just splitting the string by commas.

YOU CANNOT generate CSV by just outputting the raw values separated by commas.

CSV is not the magical parseless data format. You need to read fields in quotations, and newlines inside of fields shouldn't prematurely end the row.

Do it fucking right, holy shit.
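The point is easy to demonstrate with Python's standard `csv` module. The short script below writes a field containing a comma and quotes, and another containing a newline. It then compares a naive comma split against a real parse. The sample data is made up for illustration.

```python
import csv
import io

rows = [["id", "comment"], ["1", 'He said "hi", then left'], ["2", "line one\nline two"]]

# Writing: the csv module quotes fields containing commas, quotes or newlines,
# and doubles embedded quote characters.
buf = io.StringIO(newline="")
csv.writer(buf).writerows(rows)
text = buf.getvalue()

# Naive parsing: splits the quoted comma and breaks the record at the newline.
naive = [line.split(",") for line in text.splitlines()]

# Proper parsing: every field round-trips unchanged.
parsed = list(csv.reader(io.StringIO(text, newline="")))
```

Here `naive` comes back with four "rows" for three records, and its second row has three fields. `parsed` equals `rows` exactly.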

My teammate pushed 2 GB worth of CSV files into our repo.

He also merged all the other branches, so it's kinda hard to revert without reworking a lot of stuff.

veekun/pokedex

https://github.com/veekun/pokedex

It's essentially all the data you need to make a Pokémon game, in CSV files.

AFAIK, they ripped the information from the original games, so you can be sure about its validity.

I love how easy it is to use, that it isn't some weird-ass formatted wiki, and that it even has scripts to load it into your database.

Me being a huge Pokémon fan, that's the ne plus ultra.
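The repository ships its own loading scripts, and those are the way to go for the full dataset. As a generic illustration of the idea only (this is not veekun's tooling), here is a Python sketch that loads any headered CSV into SQLite with the standard library. The table name and sample columns are hypothetical.

```python
import csv
import sqlite3


def load_csv_into_sqlite(conn: sqlite3.Connection, table: str, lines) -> int:
    """Create `table` from the CSV header, insert every row, return the row count.

    All columns are stored as TEXT; `table` and the header names must be trusted.
    """
    reader = csv.reader(lines)
    header = next(reader)
    columns = ", ".join(f'"{name}" TEXT' for name in header)
    conn.execute(f'CREATE TABLE "{table}" ({columns})')
    placeholders = ", ".join("?" for _ in header)
    conn.executemany(f'INSERT INTO "{table}" VALUES ({placeholders})', reader)
    conn.commit()
    (count,) = conn.execute(f'SELECT COUNT(*) FROM "{table}"').fetchone()
    return count
```

Usage, with a hypothetical file: `load_csv_into_sqlite(sqlite3.connect("dex.db"), "pokemon", open("pokemon.csv", newline=""))`.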

I wrote a prototype for a program to do some basic data cleaning tasks in Go. The idea is to just distribute the files with the executable on our shared network to our team (since it is small enough, no github bullshit needed for this) and they can go from there.

Feeling experimental, I decided to try out F#, since I have always been interested in it, and for some reason Microsoft adopted it into their core .NET framework.

I shit you not, from 185 lines of Go code, separated into proper modules etc not to mention the additional packages I downloaded (simple things for CSV reading bla bla)

To fucking 30 lines of F# that could probably be condensed more if I knew how to do PROPER functional programming. The actual code is very much procedural with very basic functional composition, so it could probably be even less, just more "dense"

I am amazed really. I do not like that namespace pollution happens all over F# since importing System.IO gives you a bunch of shit that you wouldn't know where it is coming from unless you fuck enough with Ionide and the docs. But man.....

No need for dotnet run to test this bitch, just highlight it on the IDE, alt enter and WHAM you have the repl in front of you, incremental quasi like Lisp changes on the code can be REPL changed this way, plethora of .NET BCL wonders in it, and a single point of documentation as long as you stay in standard .net

I am amazed and in love, plus finding what I wanted to do was a fucking cakewalk.

Downside: I work in a place where Python is seen as magic and PHP, VB.NET and C# are the be-all and end-all of languages. If I go away or die, there will be no one else on this side of the state to fuck with F#.

This language needs to be studied more. Shit can be so compact, but I do feel that one needs to really know enough of functional programming to be good at it. It is really not a pure language like Haskell (then again, haskell is the only "mainstream" pure functional language ain't it not?) but still, shit is really nice and I really dig what Microhard is doing in terms of the .net framework.

Will provide later findings. My entire team is on the Microsoft space, we do have Linux servers, but porting the code to generate the necessary executables for those servers if needed should be a walk in the park. I am just really intrigued by how many lines of code I was able to cut down from the Go application.

Please note that this could also mean that I am a shit Golang dev, but some of the savings do come from dropping all the nil err checks.

Well, the system is offline, links are broken and users are complaining! Developer, what did you do?

After some digging around: the designer made a "simple change" to a CSV file, adding a column for the image file name of each item...

I mean, it just shifted ALL THE COLUMNS in a CSV file, but what could go wrong? 🤦♂️
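Import code that addresses columns by header name, not by position, is immune to this kind of "simple change". Below is a minimal Python sketch using `csv.DictReader`. It also fails loudly when a required column is missing. The column names `sku`, `name` and `price` are hypothetical.

```python
import csv


def read_items(lines) -> list[dict[str, str]]:
    """Look columns up by header name, so inserting a column shifts nothing."""
    required = {"sku", "name", "price"}
    reader = csv.DictReader(lines)
    missing = required - set(reader.fieldnames or [])
    if missing:
        raise ValueError(f"missing columns: {sorted(missing)}")
    return [{key: row[key] for key in sorted(required)} for row in reader]
```

With this approach a new `image` column is simply ignored until some code actually asks for it.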

Context: we analyzed data from the past 10 years.

So the fuckface who calls himself head of research tried to put the blame on me again, what a surprise. He asked for a tool that basically adds a lot of numbers together with some tweakable stuff, doesn't really matter. Now, of course, all the data was available already, so I just grabbed it off our API and did the math thing. Then this turdnugget spends 4 literal weeks tryna feed a local CSV file into the program, because he 'wanted to change some values'. One, this isn't what we agreed on; he wanted the data from the original. When I told him this, he denied it, so I had to dig out a year-old email. Two, he never explicitly specified anything, so I didn't use a local file, because why the fuck would I do that. Three, I clearly told him that it pulls data from the server. Four, what the fuck does he wanna change past values for, getting ready for time travel? Five, he ranted for like 3 pages, when a change can be done with currentVal - changedVal + newVal; even a fucking 10-year-old could figure that out. Also, when I allowed changes through a temporary API, he bitched about how the additional info, which was calculated from yet another original dataset, doesn't get updated when he just fucking randomly changes values in the end set. Pinnacle of professionalism.

I applied for a Google STEP internship today. My CV is pretty good (people say). My university transcript is a malformed CSV, and I am forced to provide a screenshot. Wish me luck.


Imagine saving integers and floats in a MySQL table as strings containing locale-based thousands separators...

man... that's seriously fucked up!

Wait, there's more!

Imagine storing a field containing a list of object data as CSV in a single table column, instead of using JSON or a separate DB table.... and later parsing it by splitting the CSV string on ";"...
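If you inherit such a table, a one-off cleanup can at least turn the values back into real types. The minimal Python sketch below assumes German-style formatting, with `.` for thousands and `,` for decimals. It also converts a `;`-joined cell into a JSON array, as an intermediate step before the proper fix of a separate table. Both helper names are hypothetical.

```python
import json
from decimal import Decimal


def parse_de_number(text: str) -> Decimal:
    """'1.234,56' -> Decimal('1234.56'); assumes '.' thousands and ',' decimals."""
    return Decimal(text.strip().replace(".", "").replace(",", "."))


def list_column_to_json(cell: str) -> str:
    """Turn a ';'-joined cell into a JSON array, dropping empty items."""
    items = [part.strip() for part in cell.split(";") if part.strip()]
    return json.dumps(items)
```

`Decimal` avoids the float rounding that money-like values would otherwise pick up during the migration.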

This company wanted a "sample of your feed output in CSV format", so I threw together some documentation for our REST API. My project manager forwarded a link to their project manager, who forwarded it to their backend team, who forwarded it to the contractor doing their front end. I've half a mind to just put an extra field in the API responses: {

"comment": "If you're reading this, you're the person I've been trying to get through to. Email me "

}

Nothing big, but the time I felt like the most useful and awesome guy in the world was when I wrote a script creating PDF cover letters from a CSV file of contact names for my gf. A bit of LaTeX and Python, a few hours to make it resilient to special characters, but the look of relief (she would have done it by hand) and admiration in her eyes truly made me feel proud :)

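The "resilient to special characters" part usually comes down to escaping LaTeX's reserved characters before filling the template. Below is a minimal Python sketch of that step. The letter template and the `name` and `company` columns are hypothetical, and compiling each result to PDF (for example with `pdflatex`) is left out.

```python
import csv
from string import Template

# LaTeX's reserved characters, mapped to safe replacements.
LATEX_SPECIALS = {
    "\\": r"\textbackslash{}",
    "&": r"\&", "%": r"\%", "$": r"\$", "#": r"\#", "_": r"\_",
    "{": r"\{", "}": r"\}",
    "~": r"\textasciitilde{}", "^": r"\textasciicircum{}",
}

# Hypothetical letter body; $name and $company are filled from the CSV.
LETTER = Template(
    r"\documentclass{letter}\begin{document}"
    r"Dear $name,\par I am applying to $company.\end{document}"
)


def latex_escape(text: str) -> str:
    # Character by character, so replacements are never escaped a second time.
    return "".join(LATEX_SPECIALS.get(ch, ch) for ch in text)


def render_letters(lines) -> list[str]:
    """One LaTeX source per CSV row (hypothetical columns: name, company)."""
    return [
        LETTER.substitute({k: latex_escape(v) for k, v in row.items()})
        for row in csv.DictReader(lines)
    ]
```

Escaping one character at a time matters: chaining `str.replace` calls would re-escape the backslashes inserted by earlier replacements.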

Today's project was answering the question: "Can I update tables in a Microsoft Word document programmatically?"

(spoiler: YES)

My coworker got the ball rolling by showing that the docx file is just a zip archive with a bunch of XML in it.

The thing I needed to update were a pair of tables. Not knowing anything about Word's XML schema, I investigated things like:

- what tag is the table declared with?

- is the table paginated within the table?

- where is the cell background color specified?

Fortunately this wasn't too cumbersome.

For the data, CSV was the obvious choice. And I quickly confirmed that I could use OpenCSV easily within gradle.

The Word XML segments were far too verbose to put into constants, so I made a series of templates with tokens to use for replacement.

In creating the templates, I had to analyze the Word XML to see what changed between cells (thankfully, very little). This then informed the design of the CSV parsing loops, to make sure the dynamic stuff got injected properly.
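The token-template idea, sketched in Python rather than the author's Java/OpenCSV setup. The `{{NAME}}`/`{{VALUE}}` token format and the stripped-down row template are assumptions; a real `w:tr` copied from document.xml carries more formatting elements. Note the XML escaping, without which a stray `&` in the CSV corrupts the document:

```python
import csv
import io
from xml.sax.saxutils import escape

# Hypothetical row template cut out of document.xml, with {{TOKEN}} placeholders.
ROW_TEMPLATE = (
    "<w:tr>"
    "<w:tc><w:p><w:r><w:t>{{NAME}}</w:t></w:r></w:p></w:tc>"
    "<w:tc><w:p><w:r><w:t>{{VALUE}}</w:t></w:r></w:p></w:tc>"
    "</w:tr>"
)


def render_rows(csv_text, template=ROW_TEMPLATE):
    """Fill one copy of the row template per CSV record and concatenate them."""
    rows = []
    for record in csv.DictReader(io.StringIO(csv_text)):
        row = template
        for column, value in record.items():
            # Token name = upper-cased CSV header; XML-escape the data (&, <, >).
            row = row.replace("{{" + column.upper() + "}}", escape(value))
        rows.append(row)
    return "".join(rows)
```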

I got my proof of concept working in less than a day. I have some more polishing to do, but I'm pretty happy with the initial results!

Fucking PowerShell.

Just make a fucking API call and shove my JSON into a damn CSV.

How fucking hard can it be? 🤯

The story of the shittiest, FUCKING WORST day of work.

TLDR: shitty day at work, car crash to end the day.

So, let me tell you about what could possibly be the worst day I've had since I started working.

This morning my alarm didn't go off, and I woke up 30 minutes before an appointment with a client.

I arrived late at the client's, and as I started deploying, it turned out they had no way to transfer the deployment package to the secured server. Lost 45 minutes there.

Deployment goes pretty well. My client asks me to stay while they load some data into the app. Everything's pretty easy to work out: we just need to input 3 CSVs in the correct format (a format the client had defined from the beginning).

I end up watching an Excel macro called "Brigitte" (I'm not fucking kidding, I couldn't have made that up) work for 4 hours straight. The files are badly formatted and don't work.

Troubleshooting those files with a fucking loader that doesn't tell you anything about why it failed (our fault on that one).

I leave the client at 7:30pm, go back to the office, and leave at 9pm.

At this point, I just want to buy some food, go home and watch series.

But NO, A FUCKING MORON OF A BUS DRIVER had to switch lanes as I was overtaking him, and I got crushed between the bus and the concrete blocks.

The cops were fucking dickheads, being very mean even though I was still shaking from the adrenaline.

In conclusion, the day could have been worse. The devs at the client's are pretty cool guys and we actually had some fun troubleshooting. At work, one of my colleagues was still around and cheered me up by telling me about his day.

And when I think about it, I could have gotten really hurt (or even worse) in the crash.

A bad day is a bad day, tomorrow morning I'm still going to get up and go to a job I love, with people I love working with.

Very big rant (sorry about that, if anyone's still reading)

Java. AGAIN. 😡

So, I am trying to get a CSV opened and read, and then search through it based on values. Easy peasy lemon squeezy in Python, right?

Well, damned be Java. You need a buffered reader to read the file. Then you have to "while(has next)" the whole damn thing, then you have to do something with the data you read, one by one, right? Well, not to be disappointed, they do have JSON libraries, but you **have to install** the plugins for them. AKA you have to manually add the libraries or use some backwards manager like Maven.

Gotta admit, JDBC is neat if you're anal about your SQL statements, but bring the same jazz to CSV, and all hell will break loose.

Now, if you just read your JSON data into multiple objects and throw them in an array... kiss shorthand search's ass goodbye, because this mofo can't search through lists without licking the arse of every object. And now you have to find another way, because this way you can't group the shit you just read from the CSV. (Or I haven't found a way after 5 hours of dealing with the godforsaken shitshow that Java libraries are.)
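For comparison with the "easy in Python" side of the rant, a sketch of reading, grouping and searching a CSV with only the standard library; the column names and sample data are made up:

```python
import csv
import io
from collections import defaultdict


def group_rows(csv_file, key_column):
    """Read a CSV and group its rows (as dicts keyed by header) by one column's value."""
    groups = defaultdict(list)
    for row in csv.DictReader(csv_file):
        groups[row[key_column]].append(row)
    return groups


sample = io.StringIO("name,team,score\nana,red,3\nbo,blue,5\ncy,red,4\n")
by_team = group_rows(sample, "team")

# Searching is a dict lookup plus a filter over one group.
red_high = [r["name"] for r in by_team["red"] if int(r["score"]) > 3]
print(red_high)  # ['cy']
```

On the Java side, streaming the parsed rows into `Collectors.groupingBy` gives the same kind of grouping.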

Like, I'm devastated. If this rant doesn't make much sense to you, blame some Java library for it.

Shouldn't be too hard.

So, I'm a junior web dev who started a year ago working on a €200 million retail platform. Our development team consists of my senior dev, who designed the whole platform (it's basically his baby), and me. Now he's leaving, and I'm expected to do new developments, support, meetings with managers from all over Europe, roll-outs in new countries, deal with all the issues SAP has, and eat their bullshit when they can't upload a .csv file because they are too stupid to check for missing leading zeros. And listen to how important the new functions they want are, because 120% of the salespeople need them. How stupid can this company be to take that financial risk? I'm done.


"So Alecx, how did you solve the issues with the data provided to you by hr for <X> application?"

Said the VP of my institution in charge of my department.

"It was complex, sir. I couldn't figure out much of the general idea of the data schema, since it came from a bunch of people not trained in IT (HR), so I had to run some experiments on the data to find the relationships in it. That turned up 4 different relations. The program determined them for me based on the most common type of data, which the model deemed a "user". From that I just extracted the information I needed and generated the tables through Golang's gorm."

The VP, nodding and listening intently: "How did you make those relationships?" Me: "I started a simple pattern recognition module through supervised mach..." VP: "Machine learning, that sounds like A.I.!"

Me: "Yes sir, it was, but the problem was fairly easy for the schema to determ..." VP: "A.I.! At our institution, back in my day, it was a dream to have such technology. You are the director of web tech; how do you come to know about this?"

Me: "I just like to experiment with new stuff. It was the easiest route to determine these things, so I felt I should use it if I can."

VP: "This is amazing, I'll go by your office later"

The dude sings my praises. The idea was simple: read through the CSV that was provided to me, do the parsing in a notebook, have it determine the relationships in the data and spit out a bunch of JSON that I could use. Hook that up to a simple gorm Golang script and generate the tables from it. Much simpler than the bullshit we have in PHP. I used this to create a new database, since the previous application had issues. The app will still have a PHP frontend and backend, but now I don't leave the parsing of the data to PHP, which, quite frankly, PHP sucks at IMHO. The Python codebase will create the JSON files through the predictive modeling (98% accuracy), and then the Go program will populate the DB for me.

There are also some Node scripts that help test the data, since the data is JSON.

All in all, a good day of work. The VP seems scared, since he knows no one on this side of town knows about this kind of tech. Me? I am just happy I get to experiment. Y'all should have seen his face when I showed him a rather large app written in Clojure; the man just went 0.0 when he saw Lisp code.

I think I scare him.

Aaah! Another cup of stupidity on this sunny Friday! 🍵

I just received a csv file with usernames, emails and passwords in plaintext for 1500 users.

Apparently that's what it means to "integrate with our database"
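What "integrating" should have looked like at minimum: hash each password on import and never store the plaintext. A sketch with the standard library's PBKDF2; the `username,email,password` headers are assumed from the description, and the iteration count is an assumption to tune. Since the plaintext has already been shipped around in a CSV, forcing password resets is the other half of the fix.

```python
import csv
import hashlib
import hmac
import os

ITERATIONS = 600_000  # assumption: tune to your hardware and current guidance


def hash_password(password, salt=None):
    """Return (salt, derived_key) using PBKDF2-HMAC-SHA256 with a random 16-byte salt."""
    salt = salt if salt is not None else os.urandom(16)
    key = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, key


def verify_password(password, salt, expected_key):
    _, key = hash_password(password, salt)
    return hmac.compare_digest(key, expected_key)  # constant-time comparison


def import_users(csv_file):
    """Turn username,email,password rows into records that never contain the plaintext."""
    users = []
    for row in csv.DictReader(csv_file):
        salt, key = hash_password(row["password"])
        users.append({"username": row["username"], "email": row["email"],
                      "salt": salt.hex(), "hash": key.hex()})
    return users
```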

When your "product owner" just doesn't listen...

Skype conversation:

PO: What format will the dates need to be in for the csv file upload?

Me: Just tell them YYYY-MM-DD

PO: ok

Two weeks later...

PO: there is a bug in the csv file upload! The dates aren't being picked up

Me: ok, will have a look. Send me an example date they are using

PO: ok, example date 12/03/1990

FFS! 😡
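The upload can at least fail loudly instead of silently skipping dates. A sketch (`parse_upload_date` is a made-up name) that accepts only `YYYY-MM-DD`; the regex is there because `date.fromisoformat` alone accepts extra ISO variants on newer Pythons:

```python
import re
from datetime import date

ISO_DATE = re.compile(r"\d{4}-\d{2}-\d{2}")


def parse_upload_date(value):
    """Accept only YYYY-MM-DD; reject anything else with an error naming the value."""
    value = value.strip()
    if not ISO_DATE.fullmatch(value):
        raise ValueError(f"date {value!r} is not in YYYY-MM-DD format")
    return date.fromisoformat(value)  # also rejects impossible dates like 1990-02-30
```

Rejecting beats guessing here: 12/03/1990 is 12 March in most of Europe and December 3 in the US.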


So I set up a nice CSV file for the customer to fill in with the shop items for their webshop, you know, with a nice layout like

name - language - description etc.

(just temporary, because the legacy website is undergoing a (sadly frontend-only) rework, so it now also has to display different 'kinds' of products... and because the new CMS isn't done yet, they have to provide the data by other means)

My thought was to create a little import script to write the file into the database... keeping the relations in mind... etc...

Guess what? TWO MONTHS later, I get a file with a custom layout, empty cells, sometimes actual data, sometimes (in red / green text) notes for me

I mean WHY.... WHY DO YOU MAKE MY LIFE HARDER???

So now I have to put data in 6 columns and 411 rows into the database BY HAND...

Oh, and did I mention they also have relations? Yeah... I also have to do that by hand now...

ALWAYS read warnings guys.

Story time !

A client of ours has a synchronization app (we wrote it) between his in-house DB and our app. (No, no APIs on their end. It’s a scheduled task.)

Because we didn’t want to ask them for logs every single time, the app writes logs to disk (normal) and to Application Insights in Azure.

When needed, I can go into the portal and get all the logs for the last execution in a nice CSV file.

Well, recently we added more logs (some problems were impossible to track).

So the client calls us: “problem with XXX”

Me: goes to Azure, does the same manipulation as always. Dismisses a smaaaaaalish warning without reading it. Studies the logs. Conclusion: “The XXX is not even in the logs, check your DB”.

Little did I know, the warning was telling me “Results are truncated at 10.000 lines”.

So the client was right, I was wrong, and I needed to develop a small app to get logs with more than 10.000 lines. (It’s per execution, every 3 hours.)

Because sharing is caring.

For anyone who cares, I've extracted the coronavirus data for total infected / deaths from the World Health Organisation and shoved it into applicable CSV files per day.

You can find the complete data set here:

https://github.com/C0D4-101/...

It's rant time again. I was working on a project which exports data to a zipped CSV and uploads it to S3. I asked colleagues to review it; I guess that was a mistake.

Well, two of my lesser-known colleagues reviewed it, and one of their complaints was that it wasn't TypeScript. Well yes, good thing you have EYES. I'm not comfortable with TypeScript yet, so I made it in plain Node.js (which is absolutely fine).

The other guy said that I could stream to the zip file, which I didn't know was possible, so I asked: that's impossible, right? (I didn't know some zip algorithms work on streams.) And he kept brushing over it and talking about why I should use streams. I've obviously used streams before, and if he had read my code he would have seen that it streamed everything to the filesystem and afterwards to S3. He continued to behave like I was a literal child who had used Node.js for 2 seconds. (I'm probably half his age, so fair enough.) He also assumed that my code would store everything in memory, which also isn't true, as he'd have known if he had read my code...

I never got an answer out of him and had to google and research how zlib works myself, while he was sending me obvious examples of how streams work. Which annoyed me, because I had asked him a very simple question.
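For the record, the answer to the question he never answered is yes: a zip entry can be written as a stream. The post's code is Node.js (zlib); this is a Python analogue of the same idea, using `ZipFile.open(name, "w")`, which writes a member incrementally. `export_rows_to_zip` and the member name are made up:

```python
import csv
import io
import zipfile


def export_rows_to_zip(rows, zip_target, member_name="export.csv"):
    """Stream an iterable of rows into a CSV inside a zip archive, one row at a time."""
    with zipfile.ZipFile(zip_target, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        # force_zip64 lets the entry grow past 2 GiB even though its size isn't known up front.
        member = zf.open(member_name, "w", force_zip64=True)
        with io.TextIOWrapper(member, encoding="utf-8", newline="") as text:
            writer = csv.writer(text)
            for row in rows:  # rows can be a generator, so nothing is held in memory
                writer.writerow(row)
```

`zip_target` can be a path or a writable binary file object.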

Now the worst part: we had a dev meeting, and both colleagues started talking about how they want solutions to be checked and discussed beforehand, while talking about my project as if it was a failure. But it literally wasn't lol. I use streams for everything except the zipping part myself, because I didn't know that was possible.

I was super motivated for this project, but fuck this shit. I'm not sure why it annoys me so much. I wanted good feedback, not people assuming that because I'm young I can't fucking read documentation. I also hate that they brought it up pointing specifically at my project, when it could have been a general point. Fuck me.

Looks like despite 20-30 years on the market, all popular text / spreadsheet editors still load the whole file into memory.

What fucking wankers. WTF are they doing all day besides changing the menu layout and icon colors?

Clearly development today is led by a bunch of idiots from the marketing department, accompanied by HR hiring self-made social network models.

What a fucked-up world.

Let’s add AI to our software, but it fails to open a 150MB CSV file.

Great job everyone. Great job.
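Processing (as opposed to displaying) a big CSV doesn't need the whole file in memory at all. A minimal sketch (`summarize_csv` is a made-up helper) that walks a file of any size record by record:

```python
import csv


def summarize_csv(path, encoding="utf-8"):
    """Count rows and find the widest row of a CSV without loading the file into memory."""
    rows = 0
    widest = 0
    with open(path, newline="", encoding=encoding) as f:
        for row in csv.reader(f):  # the reader pulls one record at a time from the file
            rows += 1
            widest = max(widest, len(row))
    return rows, widest
```

Memory use stays roughly constant whether the file is 150 KB or 150 MB.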

Want to make someone's life a misery? Here's how.

Don't base your tech stack on any prior knowledge or what's relevant to the problem.

Instead design it around all the latest trends and badges you want to put on your resume because they're frequent key words on job postings.

Once your data goes in, you'll never get it out again. At best you'll be teased with little crumbs of data but never the whole.

I know, here's a genius idea: instead of putting data into a normal database and then using a cache, let's put it all into the cache, and by the way, it's a volatile cache.

Here's an idea. For something as simple as a single log, let's make it use a queue that goes into a queue that goes into another queue that goes into another queue, all of which are black boxes. No rhyme or reason; queues are all the rage.

Have you tried: let's use a newfangled tangle, trust me it's safe, INSERT BIG NAME HERE uses it.

Finally it all gets flushed down into this subterranean cunt of a sewerage system and good luck getting it all out again. It's like hell except it's all shitty instead of all fiery.

All I want is to export one table, a simple log table with a few GB to CSV or heck whatever generic format it supports, that's it.

So I run the export-table-to-file command and off it goes, only for timeouts to start piling up less than a minute later until it aborts. WTF. So I set the most obvious timeout setting in the client: no change. Then another timeout setting on the client: no change. Then I try putting it in the client configuration file: no change. Then I set the timeout on the export query: no change. Then finally I bump the timeouts in the server config: no change. Then I find someone has downloaded it from both Tucows and apt, but they're using the Tucows version, so its real config is in /dev/database.xml (don't even ask). I increase that from seconds to a minute, and it's still timing out after a minute.

In the end I have to make my own and this involves working out how to parse non-standard binary formatted data structures. It's the umpteenth time I have had to do this.

These aren't some no name solutions and it really terrifies me. All this is doing is taking some access logs, store them in one place then index by timestamp. These things are all meant to be blazing fast but grep is often faster. How the hell is such a trivial thing turned into a series of one nightmare after another? Things that should take a few minutes take days of screwing around. I don't have access logs any more because I can't access them anymore.

The terror of this isn't that it's so awful, it's that all the little kiddies doing all this jazz for the first time, using all these shit-wipe buzzword-driven approaches, have no fucking clue it's not meant to be this difficult. Time and time again, I'm replacing entire enterprise systems of tens of thousands to a million lines with a few hundred lines of code that's faster, more reliable and better in virtually every measurable way.

This is constant. It's not one offender, it's not one project, it's not one company, it's not one developer; it's the industry standard. It's all over open source software and all over dev shops. Everything is becoming exponentially more bloated and difficult than it needs to be. I'm seeing people spin up a hundred cloud instances for things that would be happy at home with a few minutes to a week of optimisation effort. There are queries that are N*N and would take only a few minutes to turn into LOG(N), but instead people rent a fuck-off huge-ass SQL cluster that not only costs gobs of money but takes a ton of time to maintain and configure, which isn't going to be done right either.

I think most people are bullshitting when they say they have impostor syndrome but when the trend in technology is to make every fucking little trivial thing a thousand times more complex than it has to be I can see how they'd feel that way. There's so bloody much you need to do that you don't need to do these days that you either can't get anything done right or the smallest thing takes an age.

I have no idea why some people put up with some of these appliances. If you bought a dish washer that made washing dishes even harder than it was before you'd return it to the store.

Every time I see the terms enterprise, fast, big data, scalable, cloud or anything of the like I bang my head on the table. One of these days I'm going to lose my fucking tits.

Just saw this:

Add commas to your passwords to mess with the CSV file they will be dumped in after a data breach

When you start doing machine learning on your laptop and want to take it to the next level, then realize that the dataset is even bigger than your hard disk. fuuuuuucccckkk😲😲

P.S. Even the metadata CSV file was 500 MB. It took at least a minute to open. 😭😧

#!/usr/bin/rant

So, we are a web development and marketing agency. That's fine... except now it seems that we are a marketing and web development agency. Where the head marketing guy feels it's his job to head up web development.

This is NOT what I signed up for.

When you offer web services to a client, the one meeting with the client should understand at least basic stuff, and know when to pull in a heavyweight for more questions. Instead, our web team is summarized by a guy who listens to 80's rock music in a shared office (used to be just me in there) and spends his days trying to get 30-year-olds on Facebook to click an ad.

He was on the phone yesterday with some ecommerce / CRM support, trying to tell them that they have an API, that "it's a simple thing, I'm sure you have it", and that's all we need to do business with them. Which is not his call, it's my call, but for some reason he's the one on the phone asking for API info. The last time I took someone else's word on an API, I underquoted the work and eventually found out that their "API" was nothing more than a cron job which places a CSV file on your server via FTP.

Anyway, we now have a full-time marketer and two part-time interns, with another ad out for an AdWords specialist. Meanwhile, I'm senior dev with a server admin / retired senior dev, and if we don't focus on hiring a front-end guy soon we're going to lose business.

Long story short, I'm getting sick of having a guy who does not understand basic web concepts run the show because he's the one who talks to the client.

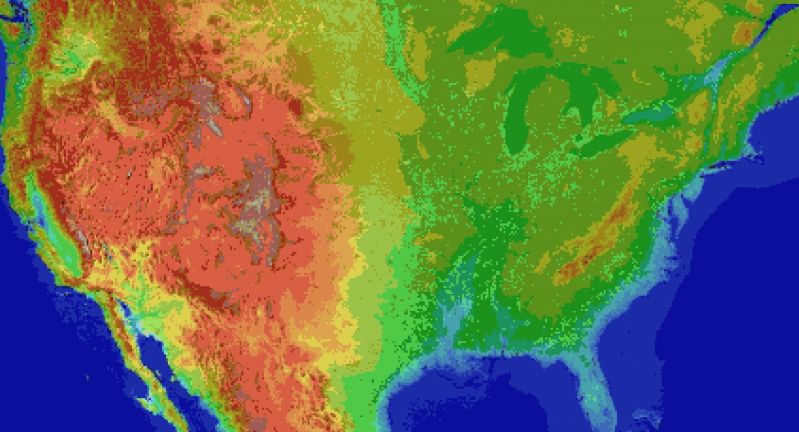

Everyone and their dog is making a game, so why can't I?

1. open world (check)

2. taking inspiration from metro and fallout (check)

3. on a map roughly the size of the u.s. (check)

So I thought what I'd do is pretend to be one of those deaf mutes. While also pretending to be a programmer. Sometimes you make-believe so hard that it comes true, apparently.

For the main map I thought I'd automate laying down the base map before hand-tweaking it. It's been a bit of a slog. Roughly 1 pixel per mile (okay, 1973 by 1067). The contiguous u.s. is about 3.1 million square miles; this would work out to 2.1 million square miles instead. Eh.

Wrote the script to filter out all the ocean pixels, based on the elevation map, and output the difference. Still had to edit around the shoreline but it sped things up a lot. Just attached the elevation map, because the actual one is an ugly cluster of death magenta to represent the ocean.
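The post doesn't say how the filter works. If it's a plain elevation threshold, that would also explain the phantom lakes mentioned below, since Death Valley sits below sea level. A variation worth trying, sketched on a plain list-of-lists heightmap with sea level 0 as an assumption: only count low pixels as ocean when they connect to the map border (a 4-neighbour flood fill).

```python
from collections import deque


def ocean_mask(elevation, sea_level=0):
    """Mark as ocean only low pixels connected to the map border (4-neighbour flood fill)."""
    h, w = len(elevation), len(elevation[0])
    ocean = [[False] * w for _ in range(h)]
    queue = deque()
    # Seed the fill with every low pixel on the map border.
    for y in range(h):
        for x in range(w):
            on_border = y in (0, h - 1) or x in (0, w - 1)
            if on_border and elevation[y][x] <= sea_level:
                ocean[y][x] = True
                queue.append((y, x))
    # Spread through neighbouring low pixels; enclosed basins are never reached.
    while queue:
        y, x = queue.popleft()
        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
            if (0 <= ny < h and 0 <= nx < w and not ocean[ny][nx]
                    and elevation[ny][nx] <= sea_level):
                ocean[ny][nx] = True
                queue.append((ny, nx))
    return ocean
```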

Consequence of filtering is, the shoreline is messy and not entirely representative of the u.s.

The preprocessing step also added a lot of in-land 'lakes' that don't exist in some areas, like death valley. Already expected that.

But the plus side is I now have map layers for both elevation and ecology biomes. Aligning them closely enough that the heightmap wasn't displaced, and didn't cut off the shoreline in the ecology layer (at export), was a royal pain, and super finicky. But thankfully that's done.

Next step is to go through the ecology map, copy each key color, and write down the biome id, courtesy of the 2017 ecoregions project.

From there, I write down the primary landscape features (water, plants, trees, terrain roughness, etc), anything easy to convey.

Main thing I'm interested in is tree types, because those, as tiles, convey a lot more information about the hex terrain than anything else.

Once the biomes are marked, and the tree types are written, the next step is to assign a tile to each tree type, and each density level of mountains (flat, hills, mountains, snowcapped peaks, etc).

The reference ids, colors, and numbers on the map will simplify the process.

After that, I'll write an exporter in Python and dump to CSV or another format.

Next steps are laying out the instances in the level editor, that'll act as the tiles in question.

There are a few naive approaches:

Spawn all the relevant instances at startup, and load the corresponding tiles.

Or set up chunks of instances, enough to cover the camera plus a buffer surrounding it. As the camera moves, reconfigure the instances to match the streamed-in tile data.

Instances make sense here, because if there's any simulation going on (and I'd like there to be), they can detect in event code when they are in the invisible buffer around the camera but not yet visible, and be activated by the camera, or deactivate themselves after leaving the camera and buffer's area.

The alternative is to let a global controller stream the data in, as a series of tile IDs, corresponding to the various tile sprites, and code global interaction like tile picking into a single event, which seems unwieldy and not at all manageable. I can see it turning into a giant switch case already.

So instances it is.

Actually, if I do 16x16-pixel chunks, it only works out to 124x67 chunks in all. A few thousand mostly inactive chunks is pretty trivial, and it simplifies spawning and serializing/deserializing.
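The chunk arithmetic, plus the "camera plus buffer" rule from the second approach above, as a small Python sketch. The map size comes from the post (1973 / 16 rounds up to 124 and 1067 / 16 to 67); camera coordinates in tile units and a one-chunk buffer are assumptions:

```python
import math

MAP_W, MAP_H = 1973, 1067  # map size in pixels (tiles), from the post
CHUNK = 16                 # chunk edge length in tiles

CHUNKS_X = math.ceil(MAP_W / CHUNK)  # 124
CHUNKS_Y = math.ceil(MAP_H / CHUNK)  # 67


def active_chunks(cam_x, cam_y, view_w, view_h, buffer=1):
    """Chunk coordinates covering the camera rectangle plus `buffer` chunks on every side."""
    first_cx = max(cam_x // CHUNK - buffer, 0)
    first_cy = max(cam_y // CHUNK - buffer, 0)
    last_cx = min((cam_x + view_w - 1) // CHUNK + buffer, CHUNKS_X - 1)
    last_cy = min((cam_y + view_h - 1) // CHUNK + buffer, CHUNKS_Y - 1)
    return {(cx, cy) for cx in range(first_cx, last_cx + 1)
                     for cy in range(first_cy, last_cy + 1)}
```

Diffing this set between frames tells you which chunk instances to activate and which can deactivate themselves.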

All of this doesn't account for

* putting lakes back in that aren't present

* lots of islands and parts of the shore that would typically have bays and parts that jut out need reworking.

* great lakes need refinement and corrections

* elevation key map too blocky. Need a higher resolution one while reducing color count

This can be solved by introducing some noise into the elevations, varying within, say, one standard deviation.

* mountains will still require refinement to match individual state geography. That's for later on

* shoreline is too smooth, and needs to be less straight-line and less blocky. less corners.

* rivers need adding, not just large ones but smaller ones too

* available tree assets need to be matched, as best and fully as possible, to the types of trees represented in the biome data, so that even if I don't have an exact match, I can still place *something* that's native or looks close enough to what you would expect in a given biome.

Ponderosa pines vs white pines for example.

This also doesn't account for 1. major and minor roads, 2. artificial and natural attractions, 3. other major features people in any given state are familiar with. 4. named places, 5. infrastructure, 6. cities and buildings and towns.

Also I'm pretty sure I cut off part of Florida.

Woops, sorry everglades.

Guess I'll just make it a death-zone from nuclear fallout.

Take that, gators!

When an analyst opens a ticket about a CSV file with wrong results, but instead of attaching the actual file, he attaches a screenshot of it

Just found an interface in an app of my company that uses the best of both worlds: csv AND xml 🤦🏻♂️

<Name>John;<Lastname>Doe;

🤣

The company considers the project manager I work with to be the best. After working with him, I consider him to be everything that is wrong with project management.

This PM injects himself into everything and has a way of completely over-complicating the smallest of things. I will give an example:

We needed to receive around 1000 rows of data from our vendor, process each row, and host an endpoint with the data in JSON. This was a pretty simple task until the PM got involved and overcomplicated the shit out of it. He asks me what file format I need to receive the data in. I say it doesn't really matter; if the vendor has the data in Excel, I can use that. After an hour-long conversation about his concerns with Excel, he decides CSV is better. I tell him that's not a problem for me, CSV works just as well. The PM then has multiple conversations with the vendor about the specific format he wants it in. Everything seems good. Then he calls me and asks how I am going to host the JSON endpoints. I tell him that because it's static data, I was probably going to simply convert each record into its own file and use `nginx`. He is concerned about how I would process each record into its own file. I then suggest I could use a database that stores the data, with an API endpoint that retrieves it and converts it into JSON. He is concerned about the complexity of adding a database and the unnecessary overhead of re-processing records every time someone hits the endpoint. No decision is made and two hours are wasted. The next day he tells me he figured out a solution: we should process each record into its own JSON file and host it with `nginx`. Literally the first thing I said. I tell him great, I will do that.

Fast forward a few days and it's time to receive the payload of 1000 records from the vendor. I receive the file and open it up. While they sent it in CSV format, the headers and column order are different. Quietly, without telling the PM, I adjust my code to fit what I received, run my unit tests to make sure it processes correctly, and output each record into its own JSON file. The job is now done and the project manager gets credit for getting everything to work on the first try.
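The whole "one JSON file per record, served by nginx" job fits in a few lines. A sketch assuming a hypothetical `id` column for the file names; `csv.DictReader` keys values by header, so a reordered column layout is harmless (renamed headers would still need a small mapping):

```python
import csv
import json
import re
from pathlib import Path

SAFE_ID = re.compile(r"[A-Za-z0-9_-]+")  # keeps ids like "../x" from escaping out_dir


def export_records(csv_path, out_dir, id_column="id"):
    """Write each CSV record to <out_dir>/<id>.json so nginx can serve it as a static file."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    count = 0
    with open(csv_path, newline="", encoding="utf-8") as f:
        for record in csv.DictReader(f):  # values keyed by header, not by position
            record_id = record[id_column]
            if not SAFE_ID.fullmatch(record_id):
                raise ValueError(f"unsafe record id {record_id!r}")
            (out / f"{record_id}.json").write_text(json.dumps(record), encoding="utf-8")
            count += 1
    return count
```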

This is absolutely ridiculous: the PM has logged an absurd 120 hours to this task! Because of all the meetings, constant interruptions, and changes of mind, I have logged 35 hours to it. In reality, the actual time I spent writing code was probably 2-3 hours, and all the rest was dealing with this PM's meetings, questions and indecisiveness. From a higher level, he appears to be a great PM because of all the hours he logs, but in reality he takes the easiest of tasks and turns them into a nightmare. This project could easily have been worked out between me and the vendor in a 30-minute conversation, but this PM makes it his business to insert himself into everything. And then he has the nerve to complain that he is so overwhelmed with all the stuff going on. It drives me crazy, because this inefficiency and unwanted help turns everything he touches into a logistical nightmare, and yet he is viewed as one of the company's top project managers.

For fucks sake! It's 2018 and MS™ Excel™ is still not able to store a file in UTF-8...

And neither can you choose the separators when opening a CSV.

Go eat a bag of corporate dicks and greedily choke on it to an agonizing death.
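The usual workaround on the producing side, not a fix for Excel itself: write the CSV as UTF-8 with a byte-order mark, which Excel uses to detect the encoding when opening the file. A sketch (`write_excel_friendly_csv` is a made-up name):

```python
import csv


def write_excel_friendly_csv(path, rows):
    """Write UTF-8 CSV prefixed with a byte-order mark so Excel picks the right encoding."""
    # "utf-8-sig" prepends the BOM (EF BB BF); newline="" lets csv control line endings.
    with open(path, "w", newline="", encoding="utf-8-sig") as f:
        csv.writer(f).writerows(rows)
```

When reading such a file back in Python, open it with `encoding="utf-8-sig"` as well, or the BOM ends up glued to the first header.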

A server application pulled some sort of listings as a table. Problem was, it crashed with some thousand data files after an hour and a half. I looked into that and couldn't stop WTFing.

A stupid server side script fetched the data in XML (WTF!) and then inserted shit node-wise (WTF!!), which was O(n^2) - in PHP and on XML! Then it converted the whole shebang into HTML for browser display although users would finally copy/paste the result into Excel anyway.

The original developer even had written a note on the application page that pulling the data "could take long". Yeah because it's so fucking STUPID that Clippy is an Einstein in comparison, that's why!

So I pulled the raw data via batch file without XML wrapping and wrote a little C program for merging the dumped stuff client-side in O(n), spitting out a final CSV for Excel import.

Instead of fucking the server for 1.5 hours and then crashing, shit is done after 7 seconds, out of which the actual data processing takes 40 bloody milliseconds!
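The post's fix was a C program and doesn't show the data layout, so here is only the general idea behind going from O(n^2) to O(n): index one side by key once, then probe that index, instead of scanning for each record. A Python sketch with made-up field names:

```python
def merge_by_key(left, right, key):
    """Join two lists of dict records on `key` in O(n + m) using a hash index."""
    index = {}
    for record in right:  # build the index once: O(m)
        index.setdefault(record[key], []).append(record)
    merged = []
    for record in left:   # one dictionary probe per record: O(n)
        for match in index.get(record[key], []):
            merged.append({**record, **match})
    return merged
```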

I think I can learn English here.

HAHAHA

I can also learn professional knowledge.

**I am a Korean.**

And...

Succeed!

Android Studio AVD powered pictures

I would like to know if anyone has created a CSV file with 10,000,000 objects?

1) The data is received via an API call.

2) The maximum data received is 1000 objects at once, so it needs to be in some loop to retrieve and insert the data.
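One way to do it without ever holding 10 million objects: write each page to the CSV as soon as it arrives. A sketch assuming offset-based paging; `fetch_page` stands in for the real API call, which isn't shown, and must return a list of dicts (empty when the data runs out):

```python
import csv

PAGE_SIZE = 1000  # the API's maximum per call, per the question


def export_all(fetch_page, out_file, fieldnames):
    """Page through an API and append each batch to a CSV, so memory stays at one page.

    fetch_page(offset, limit) is a placeholder for the real API call.
    """
    writer = csv.DictWriter(out_file, fieldnames=fieldnames)
    writer.writeheader()
    offset = total = 0
    while True:
        batch = fetch_page(offset, PAGE_SIZE)
        if not batch:
            break
        writer.writerows(batch)
        total += len(batch)
        offset += len(batch)
    return total
```

At 1000 objects per call, 10,000,000 objects is 10,000 requests, so retries and resuming from the last offset are worth adding. Open the output file with `newline=""`.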

Rant from a previous gig I just remembered that reignited my fury lol

Suddenly, CSV exports became massively critical to our product's success. "They were always part of the plan, if we don't have them the product is a failure". Plot twist, they were NOT always part of the plan. And our backend is not at all designed for querying the combinations of data you're asking for.

Never mind that we've been entirely focused these last few months on making the new user experience as slick as possible because "our customers want cake, not meat and potatoes". Forget the fact that, in order to meet the deadlines, my team coupled the backend a little too tightly to the needs of the frontend, because otherwise integrations took too long. We NEED fucking CSV exports of everything you can fucking imagine.

No. Fuck you. If you want it, it's gonna take at least 2 engineers and a month, and according to you we only have a few weeks of runway. No, I'm not compromising jack shit, this is the reality we live in. This is going to go nuclear in production if we don't do it right. Either give us the month and bankrupt the company, or fucking drop it.

Or... you could go cry to the frontend team for solutions, and convince them to page through ALL of the data and generate CSVs in the fucking browser. Sure, it sort of works in QA with the minuscule amount of data we have there, but how'd that work out for you in prod?

Jesus fucking christ, why are you people such incompetent morons, and how the fuck did you become executives??

Staring at a CSV file full of data looking for that one extra comma or stray double quote or some out of place Unicode character that might exist but you don't know which of the hundreds it could be feels like staring into a pit of despair.
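A small script can at least point at the suspects. A sketch (`find_suspect_rows` is a made-up helper) that flags rows whose field count differs from the header's (an extra comma or a stray quote usually shows up this way) and cells containing non-printable characters such as zero-width spaces or no-break spaces:

```python
import csv


def find_suspect_rows(csv_file, expected_fields=None):
    """Yield (line_number, problem, row) for rows that don't look like their neighbours."""
    reader = csv.reader(csv_file)
    for row in reader:
        if expected_fields is None:
            expected_fields = len(row)  # use the header's width as the reference
            continue
        if len(row) != expected_fields:
            yield reader.line_num, f"{len(row)} fields, expected {expected_fields}", row
        elif any(not cell.isprintable() for cell in row):
            yield reader.line_num, "non-printable character", row
```

`reader.line_num` counts physical lines read, so for records with embedded newlines it points at the line where the record ends.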


So we work on a VMware network. Besides the terrible network lag, the specs of that VM are one core (possibly one thread of a Xeon core) and 3 GB of RAM.

What do we do on it?

Develop heavy-ass Java GUI applications in Eclipse. It lags on every fucking task. We can't even use the latest versions of browsers because the VM is a fucking snail-ass piece of shit!

So, in the team meeting I proposed to my manager, Hey our productivity is down because of this POS VM. Please raise the specs!.

He said mere words won't help. He needs proof.

Oh, you need proof? Sure. I coded up a script that my whole team ran for a week. It generates a CSV with CPU usage, memory left and time, every 10 minutes. I use this data to show some motherfucking graphs, because apparently all they understand is graphs and shit.

So there you go. Have your proof! Now give me the specs I need to fucking work!

Boss at the start of a new project: "We could hire an intern to gather some data in an Excel list... You can easily implement that in the application later, right? So can you get us an Excel list to fill out?"

No... Just no...

You tell me what you wanna see and how you wanna interact with the application!

In the process we will figure out which data is necessary, I will build some tables in the database for that data, and then, !!! not a second sooner !!!, I'll be able to give you a suitable Excel list, which includes a complete list of columns for the necessary data in a form I can work with.

It's not my job to know what data an application needs to make YOUR JOB easier! I'm not a magician! I just love programming stuff!

Most successful project at work: a NodeJS utility for storing loads of measurements from an application running on various other systems and providing fast ways of getting at that data. No DB, just CSV files broken into time periods. It also has a search function written in C that can very quickly find all user sessions matching the criteria. It's not perfect, but it does the job pretty well, and I can tweak the storage engine as much as needed for our use case since it's all custom-written.
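The post doesn't describe the naming scheme, so this is only a hypothetical sketch (in Python rather than the tool's Node.js) of the "CSV files broken into time periods" idea: map each timestamp to a per-hour or per-day file, so a time-range query only has to open the buckets it overlaps. The `measurements_` prefix and UTC bucketing are assumptions.

```python
from datetime import datetime, timezone

STEP = {"hour": 3600, "day": 86400}
FORMAT = {"hour": "%Y-%m-%d_%H", "day": "%Y-%m-%d"}


def bucket_path(ts, period="hour"):
    """Map a UNIX timestamp to the CSV file holding its time period (UTC)."""
    t = datetime.fromtimestamp(ts, tz=timezone.utc)
    return f"measurements_{t.strftime(FORMAT[period])}.csv"


def paths_for_range(start_ts, end_ts, period="hour"):
    """List the bucket files a query over [start_ts, end_ts] has to open."""
    step = STEP[period]
    first = start_ts - start_ts % step  # align to the start of the first bucket
    return [bucket_path(t, period) for t in range(first, end_ts + 1, step)]
```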

Outside of work: incomplete right now, but I soldered some wires onto an old sound card and managed to get an Arduino to configure it and play some notes on its FM synthesis chip. Still quite a newbie to electronics, so this was quite an achievement for me personally.

Alright, since I have to deal with this shit in my part-time job, I really have to ask.

What is the WORST form of abusing CSV you have ever witnessed?

I for one have to deal with something like this:

```csv
foo,1,2,3,4,5
0,2,4,3,2,1
0,5,6,4,3,1
bar,,,,,
foobar,,,,,
```

The first column can either be foo or a numeric value.

If it is foo, the first number after the foo dictates how many times the content between this foo and its matching bar is going to be repeated. Mind you, this can be nested:

```csv
foo,1,,,,
1,2,3,4,5,6
foo,10,,,,
6,5,4,3,2,1,
bar,,,,,
1,2,3,4,5,6
bar,,,,,
foobar,,,,,
```

foobar means the file ends.
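Read literally, those rules describe a small nested grammar, so a recursive parser is a natural fit. The sketch below is one interpretation, not a spec: it assumes the second field of a foo row is the repeat count, that bar closes the innermost open block, that foobar must appear at top level, and that data rows contain only integers. It covers only this numeric shape, not the six flavours with their different columns; the function name is hypothetical.

```python
from __future__ import annotations

import csv
import io


def expand_repeat_csv(text: str) -> list[list[int]]:
    """Flatten the foo/bar repeat format into plain numeric rows.

    Assumed rules: a row whose first field is "foo" opens a block and its
    second field is the repeat count; "bar" closes the innermost open block;
    "foobar" ends the file; every other row is numeric data.
    """
    rows = iter(csv.reader(io.StringIO(text)))

    def parse_block():
        """Consume rows until a terminator; return (data rows, terminator or None)."""
        out = []
        for row in rows:
            if not row or row[0] == "":
                continue  # skip blank lines
            tag = row[0]
            if tag in ("bar", "foobar"):
                return out, tag  # the caller checks it is the expected terminator
            if tag == "foo":
                count = int(row[1])
                inner, end = parse_block()  # recursion handles nested blocks
                if end != "bar":
                    raise ValueError("foo block not closed by bar")
                out.extend(list(r) for _ in range(count) for r in inner)
            else:
                # Data row: drop empty padding fields, e.g. the trailing "," in "6,5,4,3,2,1,"
                out.append([int(x) for x in row if x != ""])
        return out, None

    result, end = parse_block()
    if end != "foobar":
        raise ValueError("expected foobar at top level")
    return result
```

For the nested example above this yields 12 rows: one `1,2,3,4,5,6`, ten copies of `6,5,4,3,2,1`, then `1,2,3,4,5,6` again.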

Now, since this isn't quite enough, there are also SIX DIFFERENT FLAVOURS OF THIS FILE, each of them with different columns.

I really need to know: is it me, or is this format simply utterly stupid? I was always taught (and fuck, we always did it this way) that CSV was simply a means to store flat and simple data. Meanwhile, when I explain my struggle, I get a shrug and "Just parse it, it's just CSV!!"

To top it off, I cannot use the flavours of these files interchangeably. Each and every one of them contains different data, so I essentially have to parse the same crap in different ways.

OK, this really needed to get outta the system.

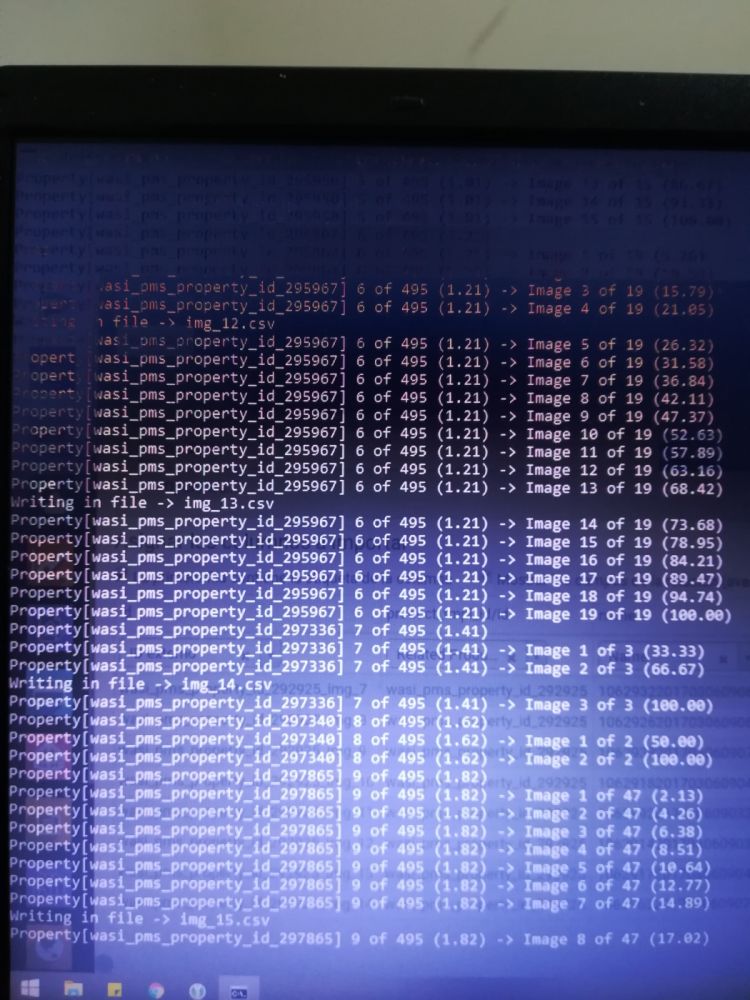

I suspected that our storage appliances were prematurely pulling disks out of their pools because of heavy I/O from triggered maintenance we've been asked to automate. So I built an application that pulls entries from the event consoles in each site, from queries it makes to their APIs. It then correlates various kinds of data, reformats them for general consumption, and produces a CSV.

From this point, I am completely useless. I was able to make some graphs with Gnumeric, LibreOffice Calc, and (after scraping out all the identifying info) Google Sheets, but the sad truth is that I'm just really bad at desktop office document apps. I wound up just sending the CSV to my boss so he can make it pretty.

Just started today with Python 3. I don't know, but I'm really starting to like the language. It's not too difficult and it has nice syntax. Currently I'm working on a little financial overview generator. It parses the CSV file from my bank and sorts it into categories. Really fun to make. Have you guys made some nice projects in Python?
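A keyword-based version of that categorizer might look like this. It's a minimal sketch, assuming a comma-separated export with a header row containing description and amount columns, and amounts written with a decimal point; the category rules and function name are hypothetical, and real bank exports often differ (semicolon delimiters, decimal commas, other column names).

```python
import csv
import io
from collections import defaultdict
from decimal import Decimal

# Hypothetical keyword -> category rules; adjust to your own statements.
RULES = {
    "supermarket": "Groceries",
    "rent": "Housing",
    "railway": "Transport",
}


def categorize(csv_text: str, rules: dict = RULES) -> dict:
    """Sum amounts per category by matching keywords in each description."""
    totals = defaultdict(Decimal)  # Decimal avoids float rounding on money
    for row in csv.DictReader(io.StringIO(csv_text)):
        description = row["description"].lower()
        # First matching keyword wins; unmatched transactions land in "Other".
        category = next((cat for key, cat in rules.items() if key in description), "Other")
        totals[category] += Decimal(row["amount"])
    return dict(totals)
```

Plain substring matching is crude (for example, "rent" also matches "current"), so word-boundary regexes are a likely next step once the rule list grows.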


!rant

If you're someone who grades code, fuck you, you probably suck. Turned in a final project for this GIS software construction class as a part of my master's degree (this class was fuck all easy, I had two weeks for each project, each of them took me two days). We had to pick the last project, so I submitted a final project proposal that performs a two-sample KS test on some point data. Not complex, but it sounds fancy, project accepted. Easy money.

I write the thing and finish it, it works, but it doesn't have a visualization, and that makes the results seem pretty lame, even though it's fully functional. SO I GO OUT OF MY FUCKING WAY to add a matplotlib chart of the distribution. To do that, at the very bottom of the workflow, I define a function to chart it out because it made the code way more readable. Reminder, I didn't have to do this, it was extra work to make my code more functional.

Then, this motherfucker takes points off because I didn't define the function at the very beginning of the code... THE FUCK, DUDE? But, noobrants, it's "considered best prac--" nope, fuck you, okay? This class was so shit that not once was code style addressed in a lesson or put on any rubric - they didn't give a shit what it looked like - in fact, the whole class only used arcpy (and the csv module once), they didn't teach us shit about anything except how to write geoprocessing scripts (in other words, how to read ArcGIS docs about arcpy) and encouraged us to write in fucking PythonWin. And now, when the class is fucking over, you decide to just randomly toss this shit in, like it was a specific expectation this whole time? AND you do this when someone has gone out of their way to add functionality? Why punish someone who does extra work because that extra work isn't perfect? Literally, my grade would have been better without the visualization.

I'm not even mad at my grade - it was fine - I just hate inconsistency in grading practices and the random raising and lowering of expectations depending on how some grader's coffee tasted that morning. I also hate punishing people for doing more - it's this kind of shit that makes people A) wanna rip their eyeballs out, and B) never do anything more than the basic minimum expectation to avoid extra unwanted attention. If you want your coders to step up and actually put work in to make things the best they can be, yell at a grader to reward extra work and not punish it.

Just finished dumping all Ethereum transactions into one big 30 GB CSV.

Only thing left is to configure an Apache Spark cluster.

CSV wouldn't import into the database despite researching the problem for the past hour. Fuck it, I'm getting drunk.


First time posting, hopefully I got this right!

So, we have that fabulous application that lets you update translations at will directly from within it. Of course, we also ship basic translations in perfectly indigestible PO files.

That particular client *really* wants to have his translations updated before going on-premise, but it would be too much of a hassle to put them in a CSV and import it into the app. Better to ask the only developer on the project to update all the PO files one after the other, just because "we don't like that word, we'd prefer to see that perfect synonym there". Also, the rest of the project - aka the actual development - should not be delayed because of this.

And of course the translations are provided in the form of screenshots of parts of the application, with words highlighted in yellow over a white background. Good luck finding the right string in the right file.

A programme I have to maintain (and am not allowed to optimise or change):

1) read input from serial connection

2) store data in MySQL database

3) every day convert to CSV

4) store on Windows file share

5) process CSV in Access 2000

6) store in MsSQL database

When it was first developed, I told the developer to store it straight from serial to MsSQL, but our boss wanted it to follow the above spec.

He has now left, and I have to maintain it.

Can we all just agree to stop actively imagining progressively harder-to-parse CSV formatting options? For fuck's sake, I’ve had to build in tolerance for quoted and unquoted data, combined data and split data, ways to split the data and recombine it, compare every data point, filter some data, only add data, only remove data, base data updates on non-Boolean fields in the file, set end-point matching based on arbitrary fields, column-number matching, header matching, manipulate malformed URLs and reassemble the file with proper ones, and it goes the fuck on. CSVs should just be simple and not hard to format. Why does everyone try so fucking hard to do bizarro shit?!


So I finished writing code to generate a report on git commits as a CSV. What my boss was saying is:


When you get to work with the analytics side of the warehouse and one of the guys wants you to learn D3.js to take a CSV and make an HTML site.

Me: Hell yeah, can't wait to make crazy circle graphs and line graphs for everyone in analytics!

Analytics: Oh, we just need you to take the CSV files and copy the same Excel format to an HTML site. So, table, table, table, table.

Me: So... no visualization graphs?

Analytics: No.

Today I found out we have 5 different customer databases, one for each product area. We don't have access to more than 2 of them, while corporate IT central has full access, but we have to beg and pay to see our own customers...

Now I'm tasked with integrating all of these into one customer DB, and the only access I get is COBOL-made, fixed-length CSV files, with a different format for each DB.
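Fixed-length records are usually parsed by slicing each line at known offsets rather than splitting on a delimiter. A minimal sketch, assuming the export is already plain text with one record per line; the layout, field names and offsets are hypothetical, and each of the databases would need its own layout list. Files pulled straight off a mainframe may be EBCDIC-encoded and need decoding first (Python ships codecs such as `cp037`).

```python
from typing import Dict, Iterable, List, Tuple

# Hypothetical layout for one database: (field name, start offset, width).
CUSTOMER_LAYOUT: List[Tuple[str, int, int]] = [
    ("customer_id", 0, 8),
    ("name", 8, 20),
    ("zip", 28, 5),
]


def parse_fixed_width(
    lines: Iterable[str], layout: List[Tuple[str, int, int]]
) -> List[Dict[str, str]]:
    """Slice each fixed-length record into named fields, trimming the space padding."""
    records = []
    for line in lines:
        line = line.rstrip("\r\n")
        if not line.strip():
            continue  # ignore blank lines
        records.append({name: line[start:start + width].strip() for name, start, width in layout})
    return records
```

Keeping one layout list per source is what lets the same parser handle all the differing formats before the records are merged into one customer table.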

FML

The fun with Slack continues (context: https://devrant.com/rants/5552410/...).

I got in touch with their support (VERY pleasant experience!). Turns out, even though I specify a `filetype` when uploading a file via Slack's API, Slack ignores it and still scans the payload and tries to determine its type itself. They say Slack needs to be absolutely certain that the file will be readable within Slack.

IDK about you, but that raises some flags for me. I again have that itch to password-zip all the files I'm sending over.

I've raised this concern to the support rep. Waiting for his comments.

A piece of software was developed over a decade ago. With critical design problems, it grew slower and buggier over time.

Since a simple change in any area could create new bugs in other parts, the development team gradually decided not to change the software anymore. Instead, to fix a bug or add a feature, a new piece of software would be developed each time that monitors the main software and tries to change its output from outside! For example: watch the inputs and outputs, and whenever this number shows up in the output after this sequence of inputs, change the output to that instead.

Because all the patchwork is done from outside, the auxiliary programs are huge. They need components to save and monitor inputs and outputs, plus algorithms to communicate with the main software and its clients.

As this architecture becomes more and more complex, the company negotiates with users to convince them to change their habits a bit: for example, instead of receiving an email with the latest notifications, they download a CSV every day from a URL that gives them their notifications! Because that is easier for the developers to build.

As the project grows, the company hires more and more developers to work on this gigantic project. Then one day a young, talented developer comes along who realizes that if the company rebuilt the software from scratch, it could become 100 times smaller: no patchwork, no monitoring of outputs and inputs, no reverse engineering to figure out why the system behaves the way it does in order to change that behavior, and finally, no arrangements with users to download weird CSV files, because there would be a fresh new code base using the latest design patterns and a modern UI.

Managers, however, don't understand technical jargon and have no time to listen to a curious kid! They look at the payroll and say: replacing something we spent millions of man-hours building is IMPOSSIBLE! Get back to work or find another job!

Most people decide to remain silent, and so the madness continues unopposed. That's why, when you buy a ticket from a public transport system, you see long delays and all kinds of unexpected behavior. That's why, when you are waiting to receive an SMS from your bank, you might end up requesting a letter by post instead!

Yet there are some rebel developers who stand and fight! They eventually get kicked out of the famous, powerful system and onto the streets. They are free to start their own startups and build their dream system. They do. But the government (the only client, most of the time) looks at the budget spending and says: "How can we replace a billion-dollar-a-year project with a toy built by a bunch of kids?" And the madness continues... Boeings crash, space programs stagnate, and banks take forever to process risks and react. This is our world.

I got pranked. I got pranked good.

My prof at my uni had given us an assignment to do in Java for a class.

Easy peasy for me, it was only a formality...

First task was normal but...

The second one included making a random-number CSV generator where each number is at least 10 digits long, a class for checking which numbers are prime, and a class that checks the numbers from that CSV and creates a new CSV with only the primes in it. I wrote the code, and only when my fans took off like a jet did I realise... I fucked up...

In that moment I realised that prime checking might... take a while...
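For scale: trial division up to √n needs on the order of 10^5 divisions per 10-digit number, which adds up quickly over a large CSV. A common faster alternative, swapped in here and not what the assignment specified, is deterministic Miller-Rabin; with the first twelve primes as bases it gives exact answers for every n < 2^64. This is a Python sketch (the assignment itself was in Java), and the function names are hypothetical.

```python
from typing import Iterable, List

# With these bases, Miller-Rabin is deterministic for every n < 2**64.
_BASES = (2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37)


def is_prime(n: int) -> bool:
    """Deterministic Miller-Rabin primality test for n < 2**64."""
    if n < 2:
        return False
    for p in _BASES:
        if n % p == 0:
            return n == p  # a small prime itself, or a multiple of one
    # Write n - 1 as d * 2**s with d odd.
    d, s = n - 1, 0
    while d % 2 == 0:
        d //= 2
        s += 1
    for a in _BASES:
        x = pow(a, d, n)
        if x in (1, n - 1):
            continue
        for _ in range(s - 1):
            x = pow(x, 2, n)
            if x == n - 1:
                break
        else:
            return False  # a is a witness that n is composite
    return True


def primes_only(numbers: Iterable[int]) -> List[int]:
    """Keep only the primes, preserving input order."""
    return [n for n in numbers if is_prime(n)]
```

Each test costs a dozen modular exponentiations instead of tens of thousands of divisions, so filtering a big CSV of 10-digit numbers stays cheap.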

There was a third task, but I didn't do it for obvious reasons. He wanted us to download a test set of a few text files and make a CSV with the frequency of every word in that test set. The problem was... the test set was a set of 200 literature books...
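Counting word frequencies is mostly a streaming job: read the text line by line and update a counter, so memory grows with the vocabulary rather than with the size of the corpus. A minimal Python sketch, assuming a "word" is a lowercase run of ASCII letters and apostrophes; the function names are hypothetical.

```python
import csv
import re
from collections import Counter
from typing import Iterable, TextIO

# Assumption: a word is a run of ASCII letters/apostrophes after lowercasing.
WORD_RE = re.compile(r"[a-z']+")


def count_words(lines: Iterable[str]) -> Counter:
    """Count words line by line; memory grows with the vocabulary, not the corpus."""
    counts: Counter = Counter()
    for line in lines:
        counts.update(WORD_RE.findall(line.lower()))
    return counts


def write_frequency_csv(counts: Counter, out: TextIO) -> None:
    """Write word,count rows, most frequent first (ties in first-seen order)."""
    writer = csv.writer(out)
    writer.writerow(["word", "count"])
    writer.writerows(counts.most_common())
```

Feed `count_words` the lines of every book in the test set (each opened with an explicit encoding), then pass the result to `write_frequency_csv` with an output file opened using `newline=""`.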

So, in Germany apprentices at companies need to file a "Berichtsheft".

It's a record where you have to write down, for each day, what you did at work or at vocational school and for how long.

Basically, every company keeps records of its employees' activities in its CRM or some other management system, and all schools use services for keeping timetables that include lesson duration and activity.

So why the fuck do we apprentices have to write that shit ourselves when we could literally just access the databases and SELECT THE SHIT FROM FILED_ACTIVITIES, I thought.