
Search - "event loop"


Before you're hired:

1. A binary tree?

2. Currying?

3. Higher-order function?

4. How does the event loop work?

5. What is a prototype?

6. What is encapsulation?

7. Can you draw an algorithm?

After you're hired:

1. Hey, can you add an auth token and login to our app?

My first experience with Swift ended in me infecting myself with a virus (kinda). I wanted to create a macOS app that would listen for a global key event, catch it and then type a word.

During development I set it up to listen for ANY key event and to type "BALLS". So what happened? I compiled the code, everything looked good, I started the app and pressed a key, which emitted a key event. The event was caught by my app and it typed "BALLS", just as expected. However, typing the word emitted NEW key events, which the app also caught. I had an infinite loop. FUCK!

I tried closing down Xcode but all I could see was "BALLS BALLS BALLS" everywhere. I tried everything I knew but it just kept typing "BALLS". I had to hold down my power button to make it stop.

I finally finished the app (which I named "The Balls App"; I kept the word "BALLS"). I solved the issue by listening only for KeyUp events and emitting only KeyDown events when typing "BALLS".
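This is a minimal simulation of why the KeyUp/KeyDown split breaks the loop. It uses Node's `EventEmitter` in place of the macOS global event tap. The `keyboard` emitter, the `typeWord` helper and the event names are hypothetical stand-ins, not the macOS APIs the app actually used.

```javascript
const { EventEmitter } = require("node:events");

// Hypothetical stand-in for the global keyboard event stream (not a macOS API).
const keyboard = new EventEmitter();
let typed = "";

// Record every key-down, whether it came from a person or from the app.
keyboard.on("keydown", (ch) => {
  typed += ch;
});

// "Type" a word by synthesizing key-down events only, as in the fix above.
function typeWord(word) {
  for (const ch of word) keyboard.emit("keydown", ch);
}

// The app reacts only to key-up events. typeWord never emits "keyup",
// so the app's own output cannot re-trigger this handler.
keyboard.on("keyup", () => typeWord("BALLS"));

// A physical key press produces a key-down followed by a key-up.
keyboard.emit("keydown", "x");
keyboard.emit("keyup", "x");
console.log(typed); // xBALLS
```

Suppose the app listened for "keydown" instead. Every synthesized key-down would then call `typeWord` again. Because `EventEmitter` dispatches synchronously, the simulation would end in a `RangeError` (stack overflow) instead of typing forever.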

Okay, story time.

Back in 2016, I decided to do a little experiment to test the viability of multithreading in a JavaScript server stack. I'm not talking about the Node.js way of queuing I/O on background threads. I'm also not talking about Web Workers that box your arguments, serialise them and deserialise them again during a simple call across two JS contexts.

I'm talking about JavaScript code running concurrently on all cores. I'm talking about replacing the god-awful single-threaded event loop of ECMAScript – the biggest bottleneck in software history – with an honest-to-god, lock-free thread-pool scheduler that executes JS code in parallel, on all cores.

I'm talking about concurrent access to shared mutable state – a big, rightfully-hated mess when done badly – in JavaScript.

This rant is about the many mistakes I made at the time, specifically the biggest – but not the first – of which: publishing some preliminary results very early on.

Every time I showed my work to a JavaScript developer, I'd get negative feedback. Like, unjustified hatred and immediate denial, or outright rejection of the entire concept. Some were even adamantly trying to discourage me from this project.

So I posted a sarcastic question to the Software Engineering Stack Exchange, which was originally worded differently to reflect my frustration, but was later edited by mods to be more serious.

You can see the responses for yourself here: https://goo.gl/poHKpK

Most of the serious answers were along the lines of "multithreading is hard". The top voted response started with this statement: "1) Multithreading is extremely hard, and unfortunately the way you've presented this idea so far implies you're severely underestimating how hard it is."

While I'll admit that my presentation was initially lacking, I later made an entire page to explain the synchronisation mechanism in place, and you can read more about it here, if you're interested:

http://nexusjs.com/architecture/

But what really shocked me was that I had never understood the mindset that all the naysayers adopted until I read that response.

Because the bottom-line of that entire response is an argument: an argument against change.

The average JavaScript developer doesn't want a multithreaded server platform for JavaScript because it means a change of the status quo.

And this is exactly why I started this project. I wanted a highly performant JavaScript platform for servers that's more suitable for real-time applications like transcoding, video streaming, and machine learning.

Nexus does not and will not hold your hand. It will not repeat Node's mistakes and give you nice ways to shoot yourself in the foot later, like `process.on('uncaughtException', ...)` for a catch-all global error handling solution.

No, an uncaught exception will be dealt with the way any other self-respecting language deals with one: by not ignoring the problem and pretending it doesn't exist. If you write bad code, your program will crash. You can't fix a bug in your code by ignoring its presence entirely and using duct tape to scrape something together.

Back on the topic of multithreading, though. Multithreading is known to be hard, that's true. But how do you deal with a difficult solution? You simplify it and break it down, not just disregard it completely; because multithreading has its great advantages, too.

Like, how about we talk performance?

How about distributed algorithms that don't waste 40% of their computing power on agent communication and pointless overhead (like the serialisation/deserialisation of messages across the execution boundary for every single call)?

How about vertical scaling without forking the entire address space (and thus multiplying your application's memory consumption by the number of cores you wish to use)?

How about utilising logical CPUs to the fullest extent, and allowing them to execute JavaScript? Something that isn't even possible with the current model implemented by Node?

Some will say that the performance gains aren't worth the risk. That the possibility of race conditions and deadlocks isn't worth it.

That's the point of cooperative multithreading. It is a way to smartly work around these issues.

If you use promises, they will execute in parallel, to the best of the scheduler's abilities, and if you chain them then they will run consecutively as planned according to their dependency graph.

If your code doesn't access global variables or shared closure variables, or your promises only deal with their provided inputs without side-effects, then no contention will *ever* occur.

If you only read and never modify globals, no contention will ever occur.
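Here is a minimal sketch of the promise pattern described above, written for plain Node.js. Independent promises are started together with `Promise.all`, and a promise that needs another's result is chained after it. On stock Node.js these promises run concurrently on one thread, not in parallel; running them in parallel is what Nexus claims to add. The `fetchUser`, `fetchOrders` and `fetchPrices` functions are hypothetical and simulated with timers.

```javascript
// Hypothetical async tasks, simulated with timers; each depends only on its inputs.
const delay = (ms, value) => new Promise((resolve) => setTimeout(resolve, ms, value));

const fetchUser = (id) => delay(20, { id, name: "user" + id });
const fetchOrders = (user) => delay(20, [user.id * 10, user.id * 10 + 1]);
const fetchPrices = () => delay(20, { base: 5 });

async function loadDashboard(id) {
  // The user -> orders chain and fetchPrices are independent, so they start together.
  // fetchOrders needs the user, so it is chained after fetchUser.
  const [orders, prices] = await Promise.all([
    fetchUser(id).then(fetchOrders),
    fetchPrices(),
  ]);
  // Pure combination of the results: no shared mutable state is touched.
  return orders.map((o) => o + prices.base);
}

loadDashboard(3).then((totals) => console.log(totals)); // [ 35, 36 ]
```

Every function here works only on its inputs, so nothing would contend even under a truly parallel scheduler. That is the point the post makes about side-effect-free promises.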

Are you seeing the same trend I'm seeing?

Good JavaScript programming practices miraculously coincide with the best practices of thread-safety.

When someone says we shouldn't use multithreading because it's hard, do you know what I like to say to that?

"To multithread, you need a pair."

Literally laughed out loud when I saw this. Found in the source of the popular event loop library libev, in ev.c line 214:


First rant: but I'm so triggered and everyone needs a break from all the EU and PC rants.

It's time to defend JavaScript. That's right, the best frikin language in the universe.

Features:

incredible async code (await/async)

universal support on almost everything connected to the internet

runs on almost all platforms including natively

dynamically interpreted but also internally compiled (like Perl)

gave birth to JSON (you're welcome ppl who remember that the X in AJAX stood for XML)

All these people ranting about JS don't understand that JS isn't frikin magic. It does what it needs to do well.

If you're using it for compute-heavy machine learning, or to maintain a 100k LOC project without TypeScript, then why'd you shoot yourself in the foot?

As a proud JS developer I gotta scroll through all these posts gushing over the other languages. Why does nobody rant about using Python for bitcoin mining or Erlang to create a media player?

Cuz if you use the wrong tool for the right job, it's of course gonna blow up in your face.

For example, there was a post claiming JS developers were "scared" of multithreading and only stick to their comfort zone. Like, WTF, when Node.js came out everything was multithreaded. It took some brave developers to step out of their comfort zone and embrace the event loop.

For a web app, things like PHP and Node should only be doing light transforms between the database information and HTML anyways. You get one thread to handle the server because you're keeping other threads open to interface with databases and the filesystem. The Nexus.js dev is ranting at all of us JS devs. He doesn't realize that nobody's actual web server is CPU-bound from writing HTML bodies; that's why we only use 1 thread. We use other worker threads to do the heavy lifting (yes, there is a C++ bridge, look it up).
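Here is a minimal sketch of "worker threads do the heavy lifting". It uses Node's built-in `worker_threads` module to move a CPU-bound loop off the main event-loop thread. Node separately uses libuv's internal thread pool for things like file system operations; `worker_threads` is the user-facing way to do something similar for your own JavaScript. The summing task is a hypothetical placeholder for real heavy work.

```javascript
const { Worker } = require("node:worker_threads");

// Run a CPU-bound task (a placeholder sum of 1..n) on a separate thread,
// leaving the main event loop free to keep serving requests.
function sumToInWorker(n) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(
      `
      const { parentPort, workerData } = require("node:worker_threads");
      let sum = 0;
      for (let i = 1; i <= workerData; i++) sum += i;
      parentPort.postMessage(sum);
      `,
      { eval: true, workerData: n } // eval: true treats the string as the worker's code
    );
    worker.once("message", resolve);
    worker.once("error", reject);
  });
}

sumToInWorker(1000).then((sum) => console.log(sum)); // 500500
```

`workerData` and the result message are copied between threads. For small inputs and outputs like these, that overhead is negligible next to the work being offloaded.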

Anyways TL;DR plz respect JS developers we're people too. ES7 is magic and please don't shit on ES3 or we'll start shitting on the Python 2-3 conversion (need to maintain an outdated binary just cuz people leave out ()'s in their print statements)

Or at least agree that VB.NET is an abomination and an insult to the beauty that is TI-84 BASIC.

I've optimised so many things in my time I can't remember most of them.

Most recently, something had to be the equivalent of `"literal" LIKE column`, with a million rows to compare. Each literal took around a second on average to look up, for a service that needs to handle high load with low latency. This isn't an easy case to optimise; many people would consider it impossible.

It took me a couple of hours to reverse engineer the data and write a few-hundred-line implementation. It looked literals up in 1 ms on average, and the worst possible case was very rare and not too far off that.
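The post doesn't describe the actual technique, so the following is only one hypothetical way to speed up a reverse `"literal" LIKE column` lookup:

- Convert each stored pattern to a regular expression once.
- Bucket the patterns by their literal prefix (the text before the first `%` or `_`).
- For a query literal, test only the buckets whose prefix is a prefix of that literal, instead of scanning every row.

Patterns that start with a wildcard land in the `""` bucket and are still tested every time. The sketch assumes case-sensitive matching and no `ESCAPE` clause; real database collations may differ.

```javascript
// Escape regex metacharacters so pattern text is matched literally.
const escapeRegex = (s) => s.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");

// Convert a SQL LIKE pattern to an anchored RegExp: % -> any run, _ -> one char.
// Assumes case-sensitive matching and no ESCAPE clause.
function likeToRegex(pattern) {
  let src = "";
  for (const ch of pattern) {
    if (ch === "%") src += "[\\s\\S]*";
    else if (ch === "_") src += "[\\s\\S]";
    else src += escapeRegex(ch);
  }
  return new RegExp("^" + src + "$");
}

class ReverseLikeIndex {
  constructor(patterns) {
    this.buckets = new Map(); // literal prefix -> [{ pattern, regex }]
    for (const pattern of patterns) {
      const cut = pattern.search(/[%_]/);
      const prefix = cut === -1 ? pattern : pattern.slice(0, cut);
      if (!this.buckets.has(prefix)) this.buckets.set(prefix, []);
      this.buckets.get(prefix).push({ pattern, regex: likeToRegex(pattern) });
    }
  }

  // Return every stored pattern P for which `literal LIKE P` is true.
  lookup(literal) {
    const matches = [];
    // Only patterns whose literal prefix is a prefix of `literal` can match,
    // so probe the map once per prefix length (including the empty prefix).
    for (let len = 0; len <= literal.length; len++) {
      const bucket = this.buckets.get(literal.slice(0, len));
      if (!bucket) continue;
      for (const { pattern, regex } of bucket) {
        if (regex.test(literal)) matches.push(pattern);
      }
    }
    return matches;
  }
}

const index = new ReverseLikeIndex(["abc%", "a_c", "%xyz", "abd%", "abc"]);
console.log(index.lookup("abc")); // [ 'a_c', 'abc%', 'abc' ]
```

A lookup costs one map probe per character of the literal plus regex tests on the candidate buckets only. How much this helps depends on how selective the prefixes are in the real data.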

In another case there was a lookup of arbitrary time spans that most people would not bother to cache. The input parameters are too short-lived and variable for caching to seem worthwhile. I replaced the 50,000+ line application acting as a middle man between the application and the database with 500 lines of code. The new code did the lookup faster and implemented a reasonable caching strategy. This dropped resource consumption by at least a factor of ten. Misses were cheaper and it was able to cache most cases. It also involved modifying the client library in C. The library was unnecessarily wrapping primitives in objects for the high-level language, which made it consume excessive amounts of memory when processing huge data streams.

Another system would constantly download a huge dataset for every point of sale, then parse and apply it. It had to reflect changes quickly, but it downloaded the whole dataset, hundreds of thousands of rows, every time. I whipped up a system where a single server (barring redundancy) downloaded it in a loop and parsed it using C, which was much faster than the traditional interpreted language. It then piped the data down to the points of sale using a custom data differential format, a TCP data streaming protocol, binary serialisation and LZMA compression. This protocol also used versioning for catch-up and combined differentials for an additional reduction in size. It went from lagging 30 seconds to a few minutes behind to keeping up within a second of changes. The old system had also been using so much bandwidth that it would hit the limit on ADSL connections and get throttled. I looked at the traffic stats afterwards: it dropped from dozens of terabytes a month to around a gigabyte a month for several hundred machines. Looking at the drop in the graphs, you'd think all the machines had been turned off. It could now happily run over GPRS or 56K.
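The actual protocol (binary serialisation, TCP streaming, LZMA) isn't shown, so here is only a minimal sketch of the underlying idea:

- The server diffs consecutive snapshots of a keyed dataset and ships only the changes, tagged with version numbers.
- A client applies a diff only if the diff is based on the version the client already has.
- Otherwise the client has to catch up first.

The row shape, `diffSnapshots` and `applyDiff` are hypothetical, and plain objects stand in for the binary format.

```javascript
// Compute the changes that turn snapshot `prev` into snapshot `next`.
// Both are Maps from row id to a row object. Rows are compared by their JSON
// encoding, which assumes rows of the same shape list their fields in the same order.
function diffSnapshots(prev, next, fromVersion) {
  const upserts = [];
  const deletes = [];
  for (const [id, row] of next) {
    const old = prev.get(id);
    if (old === undefined || JSON.stringify(old) !== JSON.stringify(row)) {
      upserts.push([id, row]);
    }
  }
  for (const id of prev.keys()) {
    if (!next.has(id)) deletes.push(id);
  }
  return { fromVersion, toVersion: fromVersion + 1, upserts, deletes };
}

// Apply a diff on the client. A diff based on a different version is rejected,
// signalling that the client must catch up first (e.g. by requesting missed diffs).
function applyDiff(client, diff) {
  if (client.version !== diff.fromVersion) return false;
  for (const [id, row] of diff.upserts) client.rows.set(id, row);
  for (const id of diff.deletes) client.rows.delete(id);
  client.version = diff.toVersion;
  return true;
}

const v1 = new Map([[1, { price: 10 }], [2, { price: 20 }], [3, { price: 30 }]]);
const v2 = new Map([[1, { price: 10 }], [2, { price: 25 }], [4, { price: 40 }]]);

const client = { version: 1, rows: new Map(v1) };
const diff = diffSnapshots(v1, v2, 1);
console.log(diff.upserts.length, diff.deletes.length); // 2 1
console.log(applyDiff(client, diff)); // true
```

Only two changed rows and one deletion cross the wire instead of the whole snapshot. This is where the bandwidth savings in a scheme like this come from, before any compression is applied.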

I was working on a project with a lot of data and noticed these huge tables and horrible queries. The tables were all the results of queries. Someone wrote terrible SQL, then, to optimise it, ran it in the background with all possible variable values and stored the results of the joins and aggregates in new tables. On top of those tables they wrote more SQL. I wrote some new queries and query generation that operated on the original tables. That immediately wiped out thousands of lines of code and took storage down from 30 GB (and rapidly climbing) to a couple of GB.

Another time, a piece of mathematics had to generate all possible permutations, and the existing solution was factorial. I worked out how to optimise it to run in n*n, which, believe it or not, made a world of difference. It went from hardly handling anything to handling anything thrown at it. It was nice trying to get people to "freeze the system now".

I've built my own frontend systems (admittedly rushed) that do what Angular/React/Vue aim for, but with higher (maximum) performance. They included an in-memory database backing the UI that had layered, event-driven indexes. It could handle referential integrity (an overlay on the database revealing only items with valid integrity) and reordering and repositioning events very rapidly, using a custom AVL tree. You could layer indexes over it (data inheritance) that could be partial and dynamic.

So many times I've optimised things on autopilot, just while cleaning up code normally. Hundreds, thousands of optimisations. It's what makes my clock tick.

Times I have run into event loop / closure related issues in my 10+ years of JavaScript app development: 0

Times I have run into event loop / closure related issues in my 10+ years of interviewing: Uncaught RangeError: Maximum call stack size exceeded

SHEESH
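For reference, the classic interview puzzle behind this is usually some variant of the one below. Callbacks scheduled with `setTimeout` inside a `var` loop all see the same, final value of the loop variable, while `let` gives each iteration its own binding. The stack-overflow punchline is a different error: the `RangeError` that unbounded recursion throws.

```javascript
const withVar = [];
const withLet = [];

for (var i = 0; i < 3; i++) {
  // All three callbacks close over the same function-scoped `i`,
  // which is already 3 by the time the timers fire.
  setTimeout(() => withVar.push(i), 0);
}

for (let j = 0; j < 3; j++) {
  // `let` creates a fresh binding per iteration, so each callback sees its own `j`.
  setTimeout(() => withLet.push(j), 0);
}

// Scheduled last with the same delay, so Node runs it after the six timers above.
setTimeout(() => {
  console.log(withVar); // [ 3, 3, 3 ]
  console.log(withLet); // [ 0, 1, 2 ]
}, 0);
```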

So happy about being able to convince management that we needed a large refactor. The requirements had changed, and the code architecture had its boundaries built from the beginning, before all the requirements were known...

Pulled the "shame on us, this is a learning lesson" card... blah blah blah.

Also explained that we need to implement an RTOS and make the system event-driven... to which a stupid programmer said "you mean interrupt-driven"... and management lost their minds (bad memories of poorly executed interrupts in the past). Had to bring everyone back down to earth. Explained that yes, it's interrupt-driven, but interrupt-driven properly, unlike in the past (before me). The fucker didn't properly prioritize the interrupts and did WAYYY too much in the interrupts.

Explained that we would be implementing interrupts alongside DMA, so literally no message could be lost in normal execution. Also explained that polling the old way, without an RTOS, wastes power and CPU resources and throws timing off.

The same fucker spoke up and said, "How the fuck are you supposed to do timing? All the timing will be further off." I said wrong: in this system, unlike yours, timing is discrete and accurate as fuck... unlike your round-robin while loop of death.

Anyway, they gave me 3 weeks... and the system outperforms the older model and is more power-efficient.

The interrupting developer now gives me way more respect...

My manager asked me why our server would get overloaded, even though I had analysed the issue and given him a technical analysis over the last N weeks.

So I used an analogy:

Imagine an empty tank. The water coming in comes through a giant hose where the output is like a tiny tap.

Then just now I was thinking about how to explain the Node event loop... and thought of this analogy:

Imagine fireball Mario running around a circular track, throwing fireballs to the side as he runs.

Maybe not entirely accurate, but it made me chuckle...

Does anyone else come up with these sorts of analogies to explain programming problems to "nonprogrammers"?

My anxiety runs on an event loop:

while(alive) { overthink(); }

What’s your callback function to escape the void?

(Mine’s () => { orderDumplings(); })

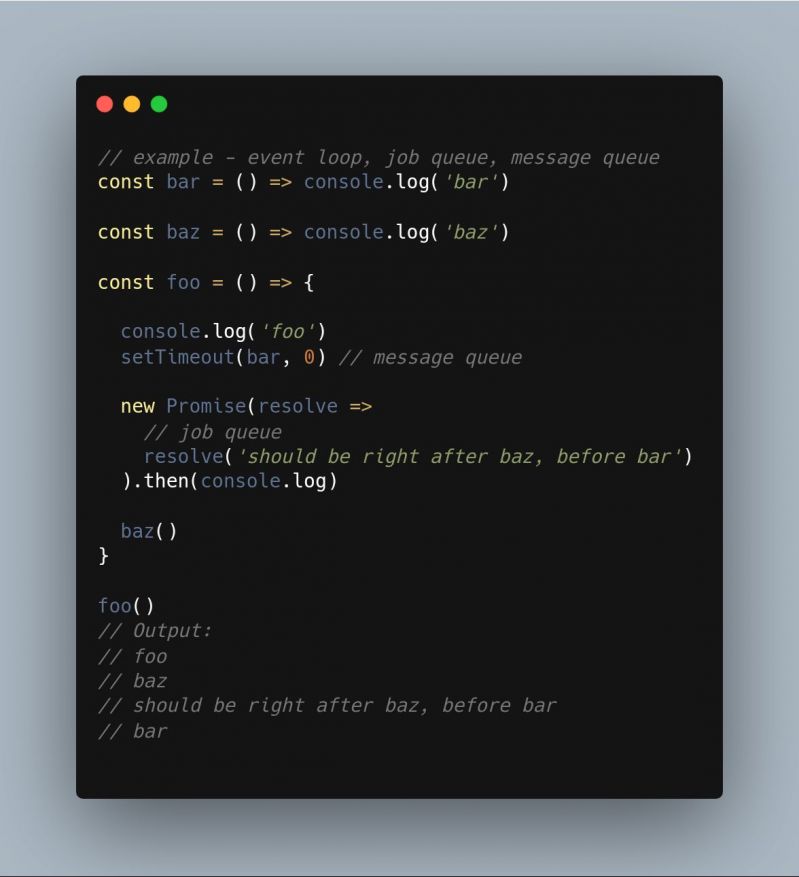

Have you been using Node.js for a while now? Are you aware of how things work internally in Node.js, like its queues? Whether your answer is yes or no, I will let you in on one little secret that will clear up all your doubts about how Node.js works asynchronously under the hood.

Read the following article to know more

https://readosapien.com/queues-in-n...
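The article link is truncated, so here is a small self-contained illustration of the two queues it refers to. After the current synchronous code finishes, Node drains the microtask queue (promise callbacks, `queueMicrotask`) before it moves on to macrotasks such as `setTimeout` callbacks. `process.nextTick` and `setImmediate` are deliberately left out. Their order relative to the others depends on context, such as CommonJS versus ES modules, or whether the code runs from the main module or from inside an I/O callback.

```javascript
const order = [];

setTimeout(() => order.push("timeout"), 0); // macrotask (timer queue)
Promise.resolve().then(() => order.push("promise")); // microtask
queueMicrotask(() => order.push("microtask")); // microtask, queued after the promise
order.push("sync"); // runs first: still part of the current script

// Scheduled with a longer delay, so it runs after the 0 ms timer above.
setTimeout(() => console.log(order.join(" -> ")), 5);
// sync -> promise -> microtask -> timeout
```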

I just came home from the opening of the fiscal year of a small drivers' club, and it was quite an amazing life experience.

I got about a five-fold "raise" for a first, small, past-deadline project.

All of the members were so relaxed during one of the most serious moments of an association. We ate, drank beer and had as much fun as possible without breaking the law or other rules.

The story goes like this:

I was an intern at a website development company, as students tend to be. In the middle of the internship my teacher asked me if I'd be willing to develop a website for the aforementioned organization.

The school would help with the money by acting as a middle-man. It wasn't going to pay much, about 120€ or so, which is really nothing for the job, but I said yes for the experience. We organized a meeting, the school provided the space, and we went straight to business.

The development went quite well:

- I got the final design requirements late (there weren't too many).
- I researched CMSs a lot and ended up with a beta-version CMS (a risk), which I learned.
- I developed some plugins (not published yet).
- I kept the copyright for most of the work, and so on.

I was done _relatively_ quickly with the project and was quite happy with it. The only things still on my mind were the bugs in the beta CMS, support for the plugins and my somewhat inexperienced graphic design.

Then it hit me: the real world. Hosting, domain transfer, certificates, registry agreements. Arrgh. Most things were fine; I knew them. I was lucky to have a technical contact at the club. It would have been a nightmare of its own otherwise.

We had problems transferring the domain, again, as you do. The other hosting company was to blame; they were the n00bs here. I went through the law, the technical guidance, etc. I was messaging heavily about it with my technical contact, who acted as a middle-man between me and the hosting firms.

After a long while loop of waiting, reconfiguring, researching and messaging, the transfer was finally over.

We had a long radio silence after some bug fixes. Then Christmas came, and I was invited to a Christmas party in a cottage, my third Christmas party that year. It was great fun. We ate, drank, talked, went to the sauna and had a playful adult stiga (sledging) competition, etc.

I updated the site yet again; a stable version of the CMS had been published. Yess!

Another radio silence came and the year changed. It was broken by an invitation to the opening of the fiscal year, held on the same day. That was today, or yesterday by now. It came just after my current company's board game night. I was really busy that day: a whole afternoon of second-hand shopping around the city by bike. I counted 35 kilometers. Yes, I go by bike; I don't own a car or have a driving license... yet.

I wasn't horribly late, around 30 minutes. I started eating and drinking. Free food and beer! They were also running late; they should have gotten through the business part before I got there, before eating. So I ate and listened, and learned more about running a business or an association in general. Then my matter came up. They thanked me for the co-operation and announced the change in my reward: I WAS GRANTED A 500€ REWARD for the work. It's still not an amazing sum in the larger scheme of things. But I can imagine it's a big deal for a small non-profit organization that was losing money. Everybody applauded, all 25 members of the club. I was greatly pleased. I will still have to update their site a bit, but they are going to pay the reward ASAP.

Did I mention that the school works around the taxes, legally? If the reward were treated as a wage, I would pay 15% tax in the worst case, just for getting the money into my hands.

I was offered another gig at the event, but didn't promise anything yet. I left before the sauna, so we didn't get to exchange contact details. He will find a way to reach me if he really wants to. I'm a busy free man.

I don't understand how Node's event loop is faster than a new PHP-FPM process per request, or similar.

Imagine you have 10 users surfing your website. In PHP there will be 10 processes, each working on one request.

Whereas in Node.js there is only 1 process, taking the requests one by one.

Now for the I/O part: it is going to take the same time to complete. Once the I/O completes and control returns to Node.js, the actual response will have taken the same total time to deliver.

Am I missing something here?
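What the question misses is that the single Node.js process doesn't sit idle during I/O. While one request waits on the database or network, the event loop picks up the next one, so the waits overlap. The sketch below simulates this with a hypothetical `handleRequest` that spends about 100 ms waiting on I/O, with a timer standing in for the real call. Awaited one at a time, ten requests take about a second; started together on one thread, they finish in roughly 100 ms. PHP-FPM also overlaps waits, but with a pool of worker processes that each need their own memory. Which approach is faster in practice depends on the workload.

```javascript
// Simulated request handler: ~100 ms of waiting on I/O (a timer stands in for a
// database or network call), with almost no CPU work.
const handleRequest = (id) =>
  new Promise((resolve) => setTimeout(() => resolve(`response ${id}`), 100));

async function sequential(n) {
  const start = Date.now();
  for (let i = 0; i < n; i++) await handleRequest(i); // one request at a time
  return Date.now() - start;
}

async function concurrent(n) {
  const start = Date.now();
  // All requests are in flight at once; their waits overlap on a single thread.
  await Promise.all(Array.from({ length: n }, (_, i) => handleRequest(i)));
  return Date.now() - start;
}

(async () => {
  console.log("sequential ms:", await sequential(10)); // roughly 1000
  console.log("concurrent ms:", await concurrent(10)); // roughly 100
})();
```

The gain disappears if each request does significant CPU work, because that work cannot overlap on one thread.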

Hello there, long time no see.

Back in the day I asked you for a book that goes deep into the C programming language. Now I'm asking the same for Node.js, especially a book that explains the event loop down to the line of code.

There are some articles on the internet, but they are all copy/paste of one another and don't even scratch the surface of what the event loop does.

Let's talk about time travel and the bootstrap paradox (look it up if you don't know what it is).

I think that I have a solution for this paradox, but it requires the many-worlds interpretation (quantum mechanics) to be true.

I'm in the many-worlds camp anyway.

So, how can an object exist in a time loop? The paradox is that it looks like it has no origin. It wasn't created. It just exists.

What if the act of time travel puts you into a different world, just like any decision puts you into a different world?

I'd argue that the object has an origin and it was created. But it was created in a different world (a different timeline, if you will). The person who observes the object in a loop is not in the same world as the person who observes the object being created.

After its creation, the object has entered the loop and by traveling in time it also traveled into a different world, where the creation event never happened.

This also solves the grandfather paradox in my opinion, because there is no contradiction when you go back in time and kill your grandfather. You are in a different world. You will never be born in that world, but so what, you are from a different world.

What do you think?

Can anyone help me with jQuery?

I'm looping over 13 triggers, and every triggered event makes an AJAX call. My problem is that the loop doesn't wait for the AJAX response. It keeps firing triggers until the last one fires.

So I only get the AJAX response for the last one.

What can I do about this?
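A common way out is to stop firing all 13 triggers in a synchronous loop. Instead, await each request before starting the next. jQuery's `$.ajax` returns a jqXHR object that implements the Promise interface, so it can be awaited directly. This assumes you can get hold of each request's promise, for example by calling the request function directly rather than through `.trigger()`, which doesn't hand back what the handler returns. To keep the sketch self-contained, a hypothetical `fakeAjax` stands in for `$.ajax`.

```javascript
// Hypothetical stand-in for $.ajax: resolves after a short random delay.
function fakeAjax(n) {
  return new Promise((resolve) =>
    setTimeout(() => resolve(`result ${n}`), Math.random() * 20)
  );
}

// Run the requests strictly one after another, collecting every response.
async function runSequentially(count) {
  const results = [];
  for (let n = 1; n <= count; n++) {
    const response = await fakeAjax(n); // wait before starting the next request
    results.push(response);
  }
  return results;
}

runSequentially(13).then((results) => {
  console.log(results.length); // 13
  console.log(results[0], results[12]); // result 1 result 13
});
```

If the requests may run at the same time and only the responses matter, `Promise.all` over the 13 calls is the faster alternative. It still returns the results in call order.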

mann... either i am dumb or my team is a bunch of excited monkeys.

for the last 6 months my senior and this contract dev (both in Android) have been fussing about adding coroutine flows to our codebase: how our codebase "needs" them and how flows will help our codebase become "better"

when i asked them why, they gave me even more shit about hot flows, cold flows, state flows, and how it's the latest "solution" from google.

So today, while going through another existential crisis in my free time, i decided to understand what these "flows" are.

and from what i understand, they are mainly for cases in which there is actively changing data and we want to get the latest updates without any event or trigger, like streaming data, chat messages, location etc.

but we are a freaking insurance app! the user presses a button and we make an api call! what is the fucking problem here that isn't being solved by good old livedata and coroutines? There isn't any "live" api in the app as far as i know, and even if there is one, only the code for that 1 api should change.

why fuck the whole codebase for a usecase that isn't applicable for 99% of APIs?

also, if a flow is going to auto trigger and call the api, how are we supposed to control it? like, say there is an offers api (there isn't) which gives us the latest offer products to show the user for 5 seconds, then refreshes. for this i will simply return

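```kotlin
import kotlinx.coroutines.delay
import kotlinx.coroutines.flow.flow

// offersApi.fetchOffers() is a hypothetical suspend function standing in for "offer api results"
fun offers() = flow {
    while (true) {
        emit(offersApi.fetchOffers())
        delay(5000) // wait 5 seconds before refreshing
    }
}
```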

but this is an infinite polling api! how do i stop it when, say, the user presses a close button or does some other interaction?

it seems useless as fuck.. i can achieve more controllable polling using the same while loop in a different location, or some other solution that won't require me to add this weird api
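On the stopping question: in Kotlin, a `flow { }` like the one above is cold. It runs only while something collects it, and `delay` is cancellable. So cancelling the coroutine `Job` that collects the flow (for example when the user presses the close button) ends the loop. Below is a JavaScript analogue of the same shape: an async generator consumed with `for await` and stopped with an `AbortController`. `fetchOffers` and the timings are hypothetical.

```javascript
// Hypothetical offers endpoint, simulated.
let calls = 0;
const fetchOffers = async () => [`offer batch ${++calls}`];

// Wait `ms`, but wake up early (without throwing) if the signal is aborted.
function sleep(ms, signal) {
  return new Promise((resolve) => {
    if (signal.aborted) return resolve();
    const onAbort = () => {
      clearTimeout(timer);
      resolve();
    };
    const timer = setTimeout(() => {
      signal.removeEventListener("abort", onAbort); // avoid piling up listeners
      resolve();
    }, ms);
    signal.addEventListener("abort", onAbort, { once: true });
  });
}

// Poll until the signal is aborted, like the while (true) flow above.
async function* pollOffers(intervalMs, signal) {
  while (!signal.aborted) {
    yield await fetchOffers();
    await sleep(intervalMs, signal);
  }
}

const controller = new AbortController();
const seen = [];

(async () => {
  for await (const offers of pollOffers(50, controller.signal)) seen.push(...offers);
  console.log("stopped after", seen.length, "polls");
})();

// Simulate the user pressing the close button after ~120 ms.
setTimeout(() => controller.abort(), 120);
```

As in Kotlin, the polling loop knows nothing about buttons. Whoever owns the cancellation handle (here the `AbortController`) decides when it ends.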

Has anyone used YouTube iFrame API?

I'm asking here first before going to SO.

I am trying to play a YouTube video in sync on 2 different computers.

Luckily, the YouTube IFrame API has an event called `onStateChange` that is fired every time a video is paused, played, stopped etc.

This is the scenario...

1. Host creates a session and sends the link to guest.

2. Guest connects with the host.

3. Host plays/pauses/goes to a specific time in the video. The video is synced on the guest session.

Now I have to figure out how to sync when the guest does an action. Every time an event is triggered on the host, it sends the command to the guest. The guest obeys, BUT THEN the `onStateChange` event is triggered on the guest and sends the command back to the host. It's an infinite loop, because I can't figure out whether onStateChange was triggered by the API or by user interaction.

What I have tried so far...

1. Global variables. No luck.

2. Disable the event handler when the guest gets data from the host, and re-enable it after syncing finishes. But the handler still triggers.

3. A time window (an ugly one). Check when the event was last triggered. If it was less than 1.5 seconds ago (or some other threshold), don't send the command to the host.
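Attempt 2 likely fails because the state-change event for a remote command arrives later, after the handler has already been re-enabled. One approach that avoids timing heuristics is echo suppression by expected state:

- When a peer applies a remote command, it records the state it expects the player to report.
- The next matching state-change event is treated as the echo of that command, not as a user action.

The `FakePlayer` class below is a hypothetical stand-in for the IFrame player. It reports changes asynchronously and without saying what caused them, which is assumed to match the real player. So the sketch shows only the bookkeeping, not the YouTube API itself.

```javascript
// Hypothetical stand-in for a player: reports state changes asynchronously and
// doesn't say whether the API or the user caused them.
class FakePlayer {
  constructor(onStateChange) {
    this.state = "paused";
    this.onStateChange = onStateChange;
  }
  setState(state) {
    this.state = state;
    setTimeout(() => this.onStateChange(state), 0);
  }
}

class SyncedPeer {
  constructor() {
    this.remote = null; // the other peer
    this.expected = []; // states we set on behalf of the remote peer
    this.sent = 0; // commands forwarded to the remote peer
    this.player = new FakePlayer((state) => this.handleStateChange(state));
  }

  // Apply a command received from the other peer.
  applyRemote(state) {
    if (this.player.state === state) return; // already in that state: leave the player alone
    this.expected.push(state);
    this.player.setState(state);
  }

  handleStateChange(state) {
    const i = this.expected.indexOf(state);
    if (i !== -1) {
      this.expected.splice(i, 1); // echo of a remote command: swallow it
      return;
    }
    this.sent++;
    this.remote.applyRemote(state); // genuine local action: forward it
  }
}

const host = new SyncedPeer();
const guest = new SyncedPeer();
host.remote = guest;
guest.remote = host;

host.player.setState("playing"); // the host's user presses play
setTimeout(() => console.log(guest.player.state, host.sent, guest.sent), 20); // playing 1 0
```

A real player can also pass through intermediate states such as buffering. A production version would probably need to ignore those, or match only on the final state.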

So I've been using Duet on my iPad Pro for a couple years now (lets me use it as an external monitor via Lightning cable) and without issue. Shit, I've been quite happy with it. Then the other day, whilst hooked up to my work laptop, there was a power fluctuation that caused my laptop to stop sending power to connected devices. Which is fine - I have it plugged into a surge protector so these fluctuations shouldn't matter. After a few seconds the laptop resumed normal operation and my connected devices were up and running again.

But the iPad Pro, for some reason, went into an infinite boot loop sequence. It reboots, gets to the white Apple logo, then reboots again.

In the end, I put the iPad into recovery mode and ran Apple's update in iTunes (as they recommend), and it proceeded to wipe all my data. Without warning. I lost more than a couple of years of notes, illustrations and photos. All in one fucking swoop.

To be clear, you get 2 options in iTunes when performing a device update:

1. UPDATE - will not mess with your data, will just update the OS (in this case iPadOS)

2. RESTORE - will delete everything, basically a factory reset

I clicked UPDATE. After the first attempt, it still kept bootlooping. So I did it again, making sure I clicked UPDATE because I had not yet backed up my data. It then proceeded to do a RESTORE even though I had clicked UPDATE.

Why, Apple? WHY.

After a solemn weekend lamenting my lost data, I've come to a conclusion: fuck you Apple for designing very shitty software. I mean, why can't I access my device data over a cabled connection in the event I can't boot into the OS? Maybe you need some form of authentication to keep out thieves. But surely the multiple times you ask me to log in with my Apple ID on iTunes upon connecting the damn thing are more than sufficient?! You keep spouting that you have a secure boot chain and shit. Surely it can verify a legitimate user using authenticated hardware without having to boot into the device OS?

And on the subject of backing up my data, you really only have 2 manual options here:

(a) open iTunes, select your device, select the installed app, then selectively download the files onto my system; or
(b) do a full device backup.

Neither of those procedures is time-efficient or straightforward. And if you want to do option (b) wirelessly, it can only be on iCloud. Which is bullshit. And you can't even access the files in the device backup; you can only get to them by restoring to your device. Even MORE bullshit.

Conversely, on my Android phone I can automate backups of individual apps, directories or files to my cloud provider of choice, or even to an external microSD card. I can schedule when the backups happen. I can access my files ANYTIME.

I got the iPad Pro because I wanted the best drawing experience, and the Apple Pencil at the time was really the best you could get. But I see now it's not worth the compromise of having shitty software. I mean, it's already 2021, but these dated piles of excrement that are iOS and iPadOS still act like it's 2011. They need to be seriously reviewed and re-engineered, because eventually they're going to end up as nothing but UI fluff hiding these extremely glaring problems.

If anyone is good with Dart (or other single-threaded programming languages), I have a small question about the inner workings of the event loop, and I would like an explanation if possible.

If you're too lazy to go to the link:

1. I have a future returned from an HTTP request.

2. A future.then is declared that prints the HTTP result.

3. A separate while(true) loop is declared that runs forever and just prints natural numbers.

4. The while loop also awaits a `Future.delayed` of 1 ms before continuing with the next iteration.

My question:

1. There's only one thread, so how does the HTTP download code run WHILE my main loop is still executing?

2. My future.then callback is not processed unless I separately await a 1 ms `Future.delayed`. Is that returning control to the event loop? I don't get how adding an event helps it process a prior event. Is it FIFO?

gist: https://gist.github.com/TheAnimatri...

discussion:

https://groups.google.com/a/...
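JavaScript's event loop can reproduce this behaviour, and it works much like Dart's here (this is an analogy, not Dart code). The runtime and the OS perform the HTTP transfer outside the single thread that runs your code. But the callback that delivers the result is a queued event, and queued events run only when the currently running code hands control back to the event loop. A `while` loop with no `await` never does that. Awaiting even a 1 ms delay suspends the loop, which lets the event loop run callbacks that are already due, in order. In the sketch, a timer stands in for the HTTP response.

```javascript
const log = [];

// Stand-in for the HTTP future: the transfer happens outside this thread,
// but its result is delivered as a queued event after about 5 ms.
const response = new Promise((resolve) => setTimeout(() => resolve("http result"), 5));
response.then((body) => log.push(`got ${body}`));

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function countUp(limit) {
  for (let n = 1; n <= limit; n++) {
    log.push(n);
    // Awaiting hands control back to the event loop, which then runs callbacks
    // that are already due. Without it, the .then above could not run until
    // this loop finished (and with while (true), never).
    await sleep(1);
  }
}

const done = countUp(20).then(() => {
  // The response callback ran in the middle of the loop, between two numbers.
  console.log(log.indexOf("got http result") > 0); // true
});
```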

Ooh, so you are a PRO JS programmer with *900000* years of bloody programming experience and don't know about the Event Loop...


SIEM: Security Information and Event Management system

Within a SIEM there is usually a reporting, alerting, and learning framework wherein you perform investigations and threat hunting. Our SIEM is connected to our data lake through a glorified elastic backend.

Today we were figuring out how to get dynamic data that we store in our SIEM to show up in the regular data lake presentation layer. All the solutions only half worked or had barriers to progress that seemed larger than the proposed solution.

So now we're going with the proposed solution: send static data back into the data lake in order to pull it out on the normal frontend with all the enriched info. We're basically turning this thing into a damn feedback loop.

I hate designing solutions within the confines of COTS products.