Search - "volumes"

-

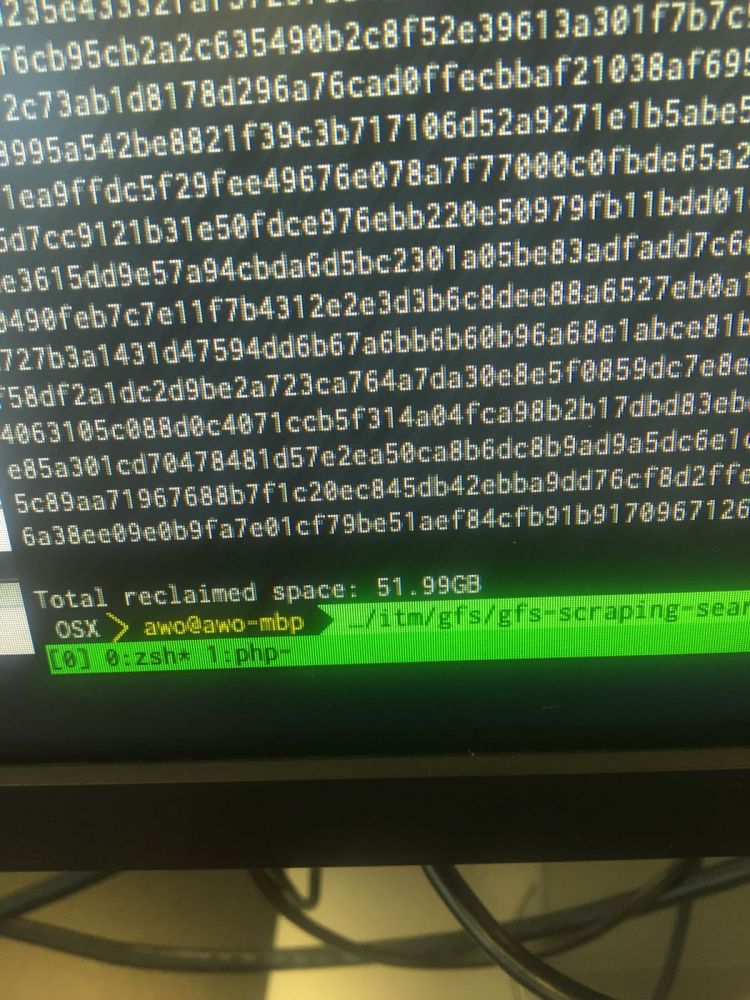

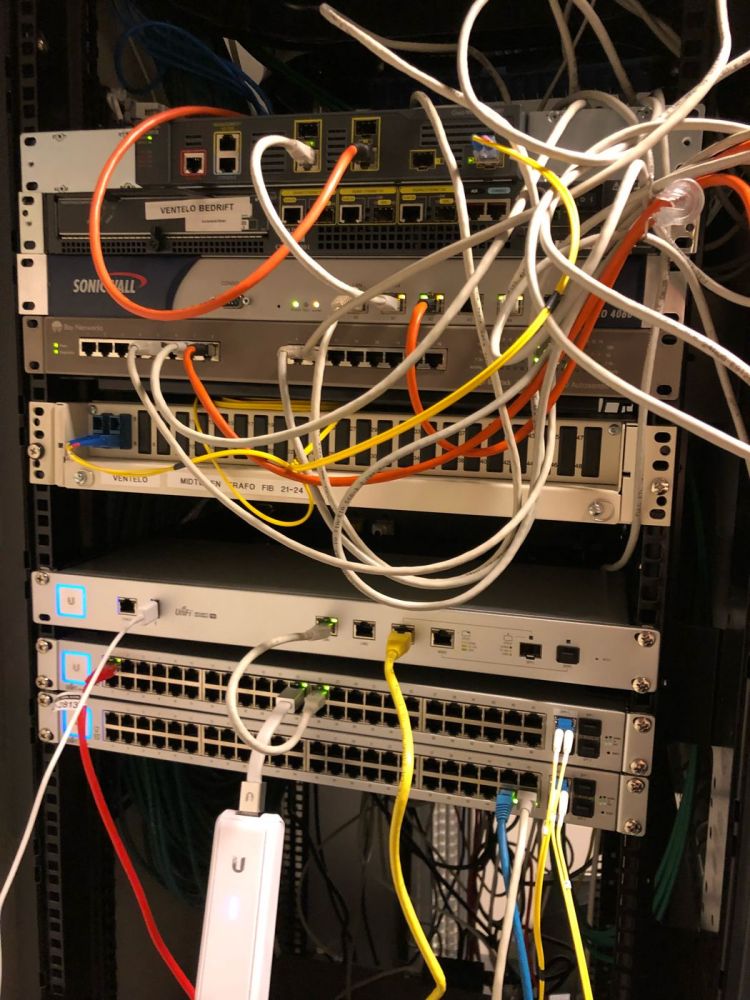

I’ve started the process of setting up the new network at work. We got a 1Gbit fibre connection.

Plan was simple, move all cables from old switch to new switch. I wish it was that easy.

The imbecile of an IT guy at work has set everything up in such a complex and unnecessarily stupid way that I’m baffled.

We got 5 older MacPros, all running MacOS Server, but they only have one service running on them.

Then we got 2x xserve raid where some external NAS enclosures and another mac are mounted. Both xserve raids have to be running and connected to the main macpro, which combines all this into a few different volumes.

Everything got a static public IP (we got a /24 block), even the workstations. The only thing that doesn’t get one IP per machine is the guest network.

The firewall is basically set to have all ports open, allowing for easy sniffing of what services we’re running.

The “dmz” is just a /29 of our ip range, no firewall rules so the servers in the dmz can access everything in our network.

Back to the xserve, it’s accessible from the outside so employees can work from home, even though no one does it. I asked our IT guy why he hadn’t set up a VPN; his explanation was first that he didn’t manage to set it up, then he said VPN is something hackers use to hide who they are.

I’m baffled by this imbecile of an IT guy, one problem is he only works there 25% of the time because of some health issues. So when one of the NAS enclosures didn’t mount after a power outage, he wasn’t at work, and took the whole day to reply to my messages about logins to the xserve.

I can’t wait till I get my order from fs.com with new patching equipment and tonnes of cables, and once I can merge all storage devices into one large SAN. It’ll be such a good work experience.

-

I am the manager of a customer service team of about 10-12 members. Most of the team members are right out of school and this is their first professional job and their ages range from 22-24. I am about 10 years older than all of my employees. We have a great team and great working relationships. They all do great work and we have established a great team culture.

Well, a couple of months ago, I noticed something odd that my team (and other employees in the building) started doing. They would see each other in the hallways or break room and say “quack quack” like a duck. I assumed this was an inside joke and thought nothing of it and wrote it off as playful silliness or thought I perhaps missed a moment in a recent movie or TV show to which the quacks were referring.

Fast forward a few months. I needed to do some printing and our printer is in a room that can be locked by anyone when it is in use (our team often has large volumes of printing they need to do and it helps to be able to sort things in there by yourself, as multiple people can get their pages mixed up and it turns into a mess). The door had been locked the entire day and this was around noon, and as the manager I have the key to the door in case someone forgot to unlock it when they left. I walked in, and there were two of my employees on the couch in the copier room having sex. I immediately closed the door and left.

This was last week and as you can imagine things are very awkward between the three of us. I haven’t addressed the situation yet because of a few factors: This was during both of their lunch hours. They were not doing this on the clock (they had both clocked out, I immediately checked). We have an understanding that you can go or do anything on your lunch that you want, as long as you’re back after an hour. Also, as you mentioned in your answer last week to the person who overheard their coworker involved in “adult activities,” these people are adults and old enough to make their own choices.

But that’s not the end of the story. That same day, after my team had left, I was wrapping up and putting a meeting agenda on each of their desks for our meeting the next day. Out in broad daylight on the guys desk (one of the employees I had caught in the printing room) was a piece of paper at the top that said “Duck Club.” Underneath it, it had a list of locations of places in and around the office followed by “points.” 25 points – president’s desk, 10 points – car in the parking lot, 20 points – copier room, etc.

So here is my theory about what is going on (and I think I am right). This “Duck Club” is a club at work where people get “points” for having sex in these locations around the office. I think that is also where the quacking comes into play. Perhaps this is some weird mating call between members to let them know they want to get some “points” with the other person, and if they quack back, they meet up somewhere to “score.” I have heard the two I caught in the copier room “quacking” before.

I know this is all extremely weird. I wasn’t even sure I wanted to write you because of how weird this seems (plus I was a little embarrassed). I have no idea what to do. As I mentioned above, they weren’t on the clock when this happened, they’re all adults, and technically I broke a rule by entering the copier room when it was locked, and would have never caught them if I had obeyed that rule. The only company rule I can think of that these two broke is using the copier room for other purposes, preventing someone else from using it.

I would love to know your opinion on this. I tend to want to sweep it under the rug because I’m kind of a shy person and would be extremely embarrassed to bring it up.

-

Me: If humans lose the ability to hear high frequency volumes as they get older, can my 4 week old son hear a dog whistle?

Doctor: No, humans can never hear that high of a frequency no matter what age they are.

Me: Trick question... dogs can't whistle.

-

I ran an UPDATE without a WHERE on the MySQL console on the production database today.

CLASSIC

Just because I needed to fix the database after a bug fix on the backend of the application.

I thought I had written a good SQL statement after executing it on my local machine, and then everything went bad.

Luckily it was only one column with some cached statistics data and I checked that it was not important data before I actually started fixing stuff but still ...

Almost got a heart attack afterwards.

Made a script to fix this column and it took me only 15 minutes but still...
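For what it's worth, one way to make this kind of slip harder is to run the statement inside a transaction and check the affected row count before committing. A minimal sketch, assuming Python's built-in sqlite3 as a stand-in for the MySQL console; the `docs` table, the `cached_stats` column and the `guarded_update` helper are made up for illustration and are not part of the actual application:

```python
import sqlite3


def guarded_update(conn, sql, params=(), max_rows=1):
    """Run an UPDATE, but roll back if it touches more rows than expected."""
    cur = conn.execute(sql, params)  # sqlite3 opens a transaction implicitly
    if cur.rowcount > max_rows:
        conn.rollback()  # undo the too-broad statement
        raise RuntimeError(
            f"refused: {cur.rowcount} rows affected, expected at most {max_rows}"
        )
    conn.commit()
    return cur.rowcount


def demo():
    """Hypothetical table standing in for the cached statistics column."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE docs (id INTEGER PRIMARY KEY, cached_stats INTEGER)")
    conn.executemany("INSERT INTO docs VALUES (?, ?)", [(1, 10), (2, 20), (3, 30)])
    conn.commit()

    try:
        # The classic: no WHERE clause, so every row would be hit.
        guarded_update(conn, "UPDATE docs SET cached_stats = 0")
    except RuntimeError:
        pass  # rolled back, nothing changed

    # The intended statement touches exactly one row and is committed.
    guarded_update(conn, "UPDATE docs SET cached_stats = 0 WHERE id = ?", (2,))
    return conn.execute("SELECT id, cached_stats FROM docs ORDER BY id").fetchall()
```

On the MySQL console itself, starting the client with `--safe-updates` is a related guard: as far as I know it rejects UPDATE and DELETE statements that have neither a key-based WHERE nor a LIMIT.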

The bug happened in a part where I have no unit tests, and after 3 years of development the application grew from a simple one for one customer with document volumes of around 50k to over 40 customers and volumes of over 2 million per month (don’t know how many pages each), all within one year after we completed all the needed features.

I have daily backups and logs of every api operation but still.

I think this got too far for one backend developer.

I got scared that I will lose money because I am a contractor and the only backend developer working on it.

I am so tired of this right now I think I need a break from work.

Responsibility is killing me so hard right now.

It will take a week to get back to normal.

-

Sometime in the mid to late 1980's my brother and I cut our teeth on a Commodore 64 with Basic. We had the tape drive, 1541 Disk Drives, and the main unit and a lot of C64 centric magazines my dad subscribed to. Each one of the magazines had a snippet of code in a series so that once you had 6 volumes of the magazine, you had a full free game that you got to write by yourself. We decided to write a Hangman game. Since we were the programmers, we already knew all the possible words stored in the wordlist, so it got old quick. One thing that hasn't changed is that my brother had the tenacity and mettle for the intensive logic based parts of the code and I was in it for the colors and graphics. Although we went through some awkward years and many different styles and trends, both of us graduated with computer science degrees at Arkansas State University. Funny thing is, I kept making graphics, CSS, UI, front end, and pretty stuff, and he's still the guy behind the scenes on the heavy lifting and logical stuff. Not that either of us are slackers on the opposite ends of our skillsets, but it's fun to have someone that complements your work with a deeper understanding. I guess for me it was 2009 when I turned on the full time DEV switch after we published our first website together. It's been through many iterations and is unfortunately a Wordpress site now, but we've been selling BBQ sauce online since 2009 at http://jimquessenberry.com. This wasn't my first website, but it's the first one that's seen moderate success that someone else didn't pay the bill for. I guess you could say that our Commodore 64 Hangman game, and our VBASIC game The Big Giant Head for 386 finally ended up as a polished website for selling our Dad's world class products.

-

Few years ago when I was new to command line....

I was in love with it....

I decided to format my pd via cmd...

It was a very simple task though i was soo happy and I did it....

Closed cmd....

Went to thispc...

And my pd was full as it was before....

I was like lets do it again.....

I opened cmd....

Got the list of volumes n was stunned.....

Until now i had no idea that i had formatted some other volume....

I opened thispc and saw a 700gb volume which was red earlier turned white.....

OMFG....

I cant explain what happened further....

-

Confession: I know how to use Git but I want to learn how to use it properly so my team won't crucify me.

-

Tldr: fucked up windows boot sector somehow, saved 4 months worth of bachelor thesis code, never hold back git push for so long!

Holy jesus, I just saved my ass and 4 months of hard work...

I recently cloned one of my SSDs to a bigger one and formatted the smaller one, once I saw it went fine. I then (maybe?) sinned by attaching an internal hdd to the system while powered on and detached, thinking "oh well, I might have just done smth stupid". Restart the system: Windows boot error. FUCK! Only option was to start a recovery usb. Some googling and I figured I had to repair the boot section. Try the boot repair in the provided cmd. Access denied! Shit! Why? Google again and find a fix. Some weird volume renaming and other weird commands. Commands don't work. What is it now? Boot files are not found. What do I do now? At this point I thought about a clean install of Windows. Then I remembered that I hadn't pushed my code changes to GitHub for roughly 4 months. My bachelor thesis code. I started panicking. I couldn't even find the files with the cmd. I panicked even more. I looked again at the tutorials, carefully. Tried out some commands and variations for the partition volumes, since there wasn't much I could do wrong. Suddenly the commands succeeded, but not all of them? I almost lost hope as I seemed to progress not as much as I hoped for. I thought, what the hell, let's restart and see anyway. Worst case I'll have to remember all my code😅🤦.

Who would have thought that exactly this time it would boot up normally?

First thing I immediately did: GIT PUSH --ALL ! Never ever hold back code for so long!

Thanks for reading till the end! 👌😅

-

At work everybody uses Windows 10. We recently switched from Vagrant to Docker. It's bad enough I have to use Windows, it's even worse to use Docker for Windows. If, God forbid, you're ever in this situation and have to choose, pick Vagrant. It's way better than whatever Docker is doing... So upon installing version 2.2.0.0 of Docker for Windows I found myself in the situation where my volumes would randomly unmount themselves and I was going crazy as to why my assets were not loading. I tried 'docker-compose restart' or 'down' and 'up -d', I went into Portainer to check and manually start containers and at some point it works again but it doesn't last long before it breaks. I checked my yml config and asked my colleagues to take a look. They also experience different problems but not like mine. There is nothing wrong with the configuration. I went to check their github page and I saw there were a lot of issues opened on the same subject, so I also opened one. It's been over a week and I have found no solution to this problem. I tried installing an older version but it still didn't work. Also I think it might've bricked my computer as today when I turned on my PC I got greeted by a BSOD right at system start up... I tried startup repair, boot into safe mode, system restore, reset PC, nothing works anymore, it just doesn't boot into windows... I had to use a live USB with Linux Mint to grab my work files. I was thinking that my SSD might have reached its EoL as it is kinda old but I didn't find any corrupt files, everything is still there. I can't help but point my finger at Docker since I did nothing with this machine except tinkering with Docker and trying to make it work as it should... When we used Vagrant it also had its problems but none were of this magnitude... And I can't really go back to Vagrant unless my team also does so...

-

I've been scratching my head for 2 days because a rather large Dockerfile doesn't work as expected.

CMD Execution just leads to "File not found".

Thanks, that's as useless as one ply toilet paper...

Whoever wrote the Dockerfile (not me…) should get an oscar...

Even in diarrhea after eating the good one day old extra hot china takeout from dubious sources I couldn't produce such a dumpster fire of bullshit.

The worst: The author thought layering helps - except it doesn't really, as it's a giant file with roughly 14 layers If I count correctly.

I just found out the problem...

The author thought it would be great to add the source files of the node project that should be built as a volume to docker... Which would work I guess....

Except that the author is a clueless chimp who thought at the same time seemingly that folder organization means to just pour everything into one folder....

Yeah. That fucker just shoved everything into one folder.

Yeeeeeesssssssss.

It looks like this:

source

docker-compose.mounts.yml

docker-compose.services.yml

docker-compose.yml

Dockerfile-development

Dockerfile-production

Dockerfile

several bash scripts

several TS / JS / config files

...

If you read the above.... Yes.

He went so far as to copy the large Dockerfile 3 times to add development and production specific overrides.

I can only repeat what I said many times before: If you don't like doing stuff, ask for fucking help you moron.

-.-

*gooozfraba*

Anyways...

He directly mounts this source directory as a volume.

And then executes a shell script from this directory...

And before that shit was copied in the large gooozfraba Dockerfile into the volume.

Yeeeaaah.

We copy stuff inside the container, then we just mount on start the whole folder and overwrite the copied stuff.

*rolls eyes* which is completely obvious in this pit latrine of YML fuckery called Dockerfile.

As soon as I moved the start script outside the folder and don't have it running inside the folder that is mounted via volume, everything works.

Yeah.... Maybe one should separate deployment from source files, runtime related stuff from build stuff.

*rolls eyes*

I really hate Docker sometimes. This is stuff that breaks easily for reasons, but you cannot see it unless you really grind your teeth and start manually tracing and debugging what the frigging fuck the maniac called author produced.

-

You know what is really a dick move? It’s when devs start a literal timer once someone messages them, and once the timer is up, they read the message. Sometimes this can be like 30 minutes of minimum wait time for just a simple fucking question. This really pisses me off and speaks volumes to all the other devs (who they should actually make an attempt to collaborate with). Fucking inconsiderate assholes.

-

My journey into learning Docker, chapter {chapter++}:

Today I learned that when you use a database image in your docker-compose file, and you want to rebuild the whole thing for reasons (say, a big update), then if you change your credentials ("root" to "a_lambda_user" or change the db's password) for more security, and you rebuild and up the whole thing... It won't work. You'll get "access denied".

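Because the database (at least mysql and mariadb) persists its data anyway: the image declares its data directory as a volume, so Docker creates an anonymous one for it, and the credentials are only applied when that directory is first initialized. So you need to run "docker rm -v" even though you didn't declare any volumes yourself.

I love losing my fucking time.

-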

Docker y u no mounting volumes on Windows anymore...?

World y u use Windows at all?

Note to self, y u no problem-solving instead of devrant posting?

-

I love Docker but I'm almost always screwing around with permissions and file ownership when it comes to secrets, bind mounts and making sure shit doesn't run as root while also making sure secrets are exposed and volumes aren't owned by root

Perhaps my frustration comes from the fact that I'm still learning and sometimes get impatient when things don't work within an hour or two, but still

-

When your IT VP starts speaking blasphemy:

"Team,

We all know what’s going on with the API. Next week we may see 6x order volumes.

We need to do everything possible to minimize the load on our prod database server.

Here are some guidelines we’re implementing immediately:

· I’m revoking most direct production SQL access. (even read only). You should be running analysis queries and data pulls out of the replication server anyway.

· No User Management activities are allowed between 9AM and 9PM EST. If you’re going to run a large amount of updates, please coordinate with a DBA to have someone monitoring.

· No checklist setup/maintenance activities are allowed at all. If this causes business impact please let me know.

· If you see are doing anything in [App Name] that’s running long, kill it and get a DBA involved.

Please keep the communication level high and stay vigilant in protecting our prod environment!"

RIP most of what I do at work.

-

No actual data loss here, but the feeling of data loss.

After having my data scattered across several devices i decided to get a grip on it and use a cloud. I'm too paranoid for a real cloud so i used a local nextcloud installation. That was done via docker and with a 2TB raid1-array.

I noticed that after restarting the server the cloud was somehow reset and pointed me to the setup-page; afterwards my files were already there. It did strike me as odd but i figured "maybe don't restart the server any time soon".

But i did restart it. And this time i had to setup the cloud again, but my files were gone. I got close to a heart attack, even though all those files weren't that valuable. I ripped one disk from the usb hub, connected it to my laptop and tried to mount it, but it was part of a raid array, so that didn't work. Instead i started photorec and recovered a bunch of files, even though their names were some random hex and i knew i'd spend my next weeks sorting my files. While photorec ran i inspected the docker container and saw that there were only 10GB of space available. After a while and one final df i found the culprit: the raid. For some reason the raid wasn't mounted at boot and docker created the volumes on the server's hard disk; same goes for the container data. After re-adding the disk to the hub i mounted the raid and inspected everything again. All my files were still there.
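A guard along these lines, run before the containers come up, could refuse to start when the array isn't mounted, so docker never gets the chance to create fresh volumes on the wrong disk. This is only a sketch under assumptions: the helper names are made up, and `/mnt/raid` in the usage note is a hypothetical mount point, not the path from this setup.

```python
import os


def storage_ready(path):
    """True only if `path` is an active mount point, not a plain directory."""
    return os.path.ismount(path)


def check_before_start(path):
    """Return an error message if the data path is not mounted, else None."""
    if not storage_ready(path):
        return f"{path} is not mounted; refusing to start containers"
    return None
```

Calling `check_before_start("/mnt/raid")` from whatever script starts the stack, and bailing out when it returns a message, would have turned the silent reset into a loud failure.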

At no point did i lose my data, but the thought was shocking enough. It'd be best not to fiddle with this server any time soon.

-

Hell of a Docker

One application in c++. 4 in c# targeting Linux. Several logging places, Several configuration files , dozens of different folders to access (read/write). Many applications being called from just one that orchestrates everything.

OS is Linux. Installation is to be made inside a docker image and later placed in a container by means of several bash files and python scripts. All these are part of a legacy set of applications.

They’ve asked me to just comment out one line which took 3 days to find out because they didn’t remember where it was and in which application it was and what was in that line.

After changing it, I was asked to create a test environment which must resemble the current production server. 12 days and many errors, headaches and problems with docker later, I got it done.

Test starts and then problems with docker volumes, network, images, docker-compose, config files and applications started to appear.

1 month later, I still have problems and can’t run all applications at least once completely using the whole set.

Just one simple task of deploying locally some applications, which would take one or two days, is becoming a nightmare.

Conclusion: While still trying to figure out why an infinite loop was caused by some DB connection attempt in an application, I am collecting a great amount of hate for docker. It might be good for something, that’s for sure, but in my experience so far, it is far worse than any expectations I had before using it.

Lesson learned: Must run away from tasks involving that shit!

-

I've never been a big fan of the "Cloud hype".

Take today for example. What decent persistent storage options do I have for my EKS cluster?

- EBS -- does not support ReadWriteMany, meaning all the pods mounting that volume will have to be physically running on the same server. No HA, no HP. Bummer

- EFS -- expensive. On top of that, its performance is utter shit. Sure, I could buy more IOPS, but then again.. even more expensive.

- S3 -- half-assed filesystem. Does not support O_APPEND, so basically any file modification will have to be done in a

`createFile(file+"_new", readAll(file) + new_data); removeFile(file); renameFile(file + "_new", file);`

way.
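Spelled out, that rewrite-everything pattern looks roughly like the sketch below. It uses plain local files as a stand-in for an S3-backed mount, and `append_by_rewrite` is a made-up helper name, so treat it as an illustration of the cost rather than anything S3-specific:

```python
import os


def append_by_rewrite(path, new_data):
    """Emulate an append where O_APPEND is unavailable: write a full copy, then swap."""
    with open(path, "rb") as src:
        old = src.read()  # readAll(file)
    tmp_path = path + "_new"
    with open(tmp_path, "wb") as dst:
        dst.write(old + new_data)  # createFile(file + "_new", old + new_data)
    os.replace(tmp_path, path)  # removeFile + renameFile in one call
```

Every "append" rereads and rewrites the whole file, so the cost grows with the file size. On a local filesystem `os.replace` swaps the file in a single step; whether a rename behaves that way on an S3-backed mount is a separate question.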

ON TOP of that, the s3 CSI has even more limitations, limiting my ability to cross-mount volumes across different applications (permission issues)

I'm running out of options. And this does not help my distrust in cloud infras...

-

It was about 2:30am.. The darkness had been dominating the outside for a while, and I was having issues with my partitions on my CentOS server. Yup that's correct. I think.

I really wanted to go to bed. Last thing I had to do, was re-allocate some space from centos-home to centos-root. But I fucked up, of course I did.

After about half an hour of making food and trying different stuff to solve the "Can't read superblock error" error, I found an answer that just led to "Can't read secondary superblock error, sorry".

At least they apologize now lol.

I ended up trashing the centos-home volume (is volume and partition the same ?) and just smashing it all onto centos-root. I lost some data, but screw that, stuff is working and I'm not going to bed.

Yeah, stuff is working. I hope. No errors encountered yet.

Moral of the story: Home partition isn't needed and it's okay to kill it

-

Yesterday I tried Rancher on a DigitalOcean droplet.

Rancher itself was easy to start up and use. But I couldn't manage to find the container volumes on my NFS server, even though the NFS server was up and running.

I really felt like a monkey in front of my PC not understanding what I'm doing.

-

I’m a neat guy and gosh darn it people like it when i yell at them

It stirs something above and below in them to hear precisely what is wrong with them at high earnestly hateful volumes before the salty taste

-

Any ColdFusion devs in here? I've been playing with it for a while; nice Java framework, quick to deploy. Feels like it could struggle under high request volumes though..?

-

Anyone know of a good docker backup tool?

I'm working with fairly limited space here so I'm looking for a tool that lets me back up and restore my docker instance without storing easily recreatable data.

That means that what I want to backup are:

- Container specs (inspections or the like)

- Contents of persistent, non-nfs volumes

Since the image and the contents of my nfs volumes are stored elsewhere, and my space is limited, I decided not to back them up. Same for non-persistent (unnamed) volumes since they mostly contain stuff that you don't want to recreate on every container update or recreation but can easily be recreated if needed.

The hope is that this approach should give me a pretty slim backup while still preserving everything I need to recreate my whole docker instance.

Thanks in advance!

-

Writing volumes of pure magic code in that weird state between tired and super tired.

Sleeping pills + redbull?

Wk172 -

Is there any kind of protocol/method where I can use something like docker containers in order to "host" compilers like gcc and use that with vscode to compile and assemble source code?

No I'm not talking about volumes (it's a bit tedious if I want to use it to manage numerous projects)

-

Before he began dropping the 20K proposed to remodel my flat, I told my father I much preferred a contractor who was recommended by someone I knew, as opposed to using a big corporation like Home Depot. FAMOUS LAST... a neighbour in my building highly recommended the contractor we chose. And, week 7 [or is it 8?] of what was proposed to take no longer than two weeks has begun afresh!

On Friday the fellow who is the owner of the contract remodeling company was here touching the paint. He was here because I forbade the two painters he sent to do the initial painting job.

My internet cut out suddenly around 1300 Friday. He set to leave for the weekend shortly after that. I mentioned the outage to him. The essence of his reply was that there was no way it could have had anything to do with him. The following day, my internet provider sent a tech out to diagnose the problem. What was the problem? The head of the remodeling firm removed a face plate from the wall where there were telephone wires and disconnected them when he tore the wires as he replaced the face plate.

Although the tech told me he wasn't going to charge my account the $85.00 fee for his services because the outage was caused within my flat, I wish to be sure of this. Which brings us to the punchline.

My internet provider is a lame ass business model, dreamed up by a squint-eyed ex-circus monkey, never well endowed in the top story, and now just plain sad.

There were some 911 outages in Washington State last Thursday night. All during the day Friday when you dialled their freephone #, the recorded announcement, before saying anything else, told you they were experiencing heavier than usual call volumes, and my wait would be greater than 10 minutes. Fine. What fried my La Croix silk was that after their customer service dept closed for the weekend, that outgoing message remained.

Today, I wanted to contact my provider to see if they would know if the $ was going to be charged to my account. After pressing the 'send' key, my computer came back with an error message, saying they were having technical difficulties. So, I went on over to the 'chat' page. There's nothing to click on to take me to this enfabled location. So, can't reach them by phone unless I want to hear, every 30 seconds whether or not I wish to, how sorry they are for my delay.

A few years ago I would've used this as an excuse to have a technicolour meltdown. The reason I'm posting this is that I am now able to see beforehand what I'll be doing to myself getting upset over the circumstances. When I do reach somebody, I'm going to tell them as lightly as possible, that if they were an airline, I wouldn't board any of their aircraft. Ever. -

How I hired Cryptic Trace Technologies to Recover Stolen BTC

In a world where cryptocurrency promises both freedom and uncertainty, I fell victim to a cleverly orchestrated bitcoin scam. It started with an enticing investment opportunity that seemed too good to be true—but I ignored the red flags. With smooth communication and convincing testimonials, the scammers gained my trust, and I transferred my bitcoin into what I thought was a secure platform. Within days, my account was drained, and my once-vibrant hope of financial growth was replaced with despair. It felt like a nightmare I couldn’t wake up from. The worst part? The anonymity of blockchain made it seem like the thieves had vanished into thin air. When I came across Cryptic Trace Technologies, I was at my lowest point. I had been warned that most recovery services were scams themselves, so I was hesitant. But their reputation spoke volumes. They had detailed case studies, practical explanations of their techniques, and a customer service team that took the time to listen—not just to what happened, but to how it affected me personally. Their honesty about the challenges of crypto recovery was refreshing; they didn’t guarantee miracles, but they promised to try their very best. That was all I needed—someone willing to fight for me when I couldn’t fight for myself. Their investigation process was nothing short of extraordinary. They dived deep into blockchain analysis, tracking my stolen funds across multiple wallets and exchanges. They explained each step in plain language, and I quickly realized this was no ordinary company. Cryptic Trace wasn’t just chasing numbers—they were strategizing, leveraging connections with exchanges, and even identifying potential weak spots in the scammers’ operations. Every update I received was like a lifeline pulling me out of the darkness. Their persistence paid off when they managed to freeze and recover a significant portion of my bitcoin—something I’d started to believe was impossible. 
Cryptic Trace Technologies turned what seemed like an irreversible loss into a powerful lesson about resilience and expertise. While I didn’t recover everything, I gained something more valuable: a sense of justice and the realization that there are still people fighting for fairness in this chaotic digital landscape. If you’ve been a victim of crypto fraud, don’t let hopelessness consume you. Trust me when I say Cryptic Trace Technologies isn’t just a service—they’re an ally who won’t stop until they’ve done everything possible to help. You can reach them via their emails: cryptictrace @ technologist. Com

Cryptictracetechnologies @ zohomail . Com

Website: cryptictracetechnologies . Com

Whatsapp: +158790568038 -

The Top Bitcoin Wallet Recovery Services in 2025

Cryptocurrency has revolutionized how we think about money, but losing access to your BTC wallet can be a nightmare. In 2025, several companies are stepping up to help individuals regain access to their wallets. Whether due to forgotten passwords, damaged devices, or other complications, these services provide reliable solutions for recovery.

1. Puran Crypto Recovery

Puran Crypto Recovery has emerged as the best Bitcoin wallet recovery company in 2025. Renowned for its cutting-edge tools and unmatched expertise, Puran Crypto Recovery specializes in recovering lost or inaccessible wallets while maintaining the highest standards of security. The company’s process is transparent, ensuring clients remain informed every step of the way. Their professional approach and high success rate make them the top choice for Bitcoin wallet recovery this year. You can reach them via email at purancryptorecovery(@)contactpuran(.)co(.)site or visit their website at puran.online.

Puran Crypto Recovery recovers lost crypto passwords since 2017. They support Bitcoin, Ethereum, Multibit, Trezor, and Metamask wallets. Their Wallet Recovery Service has been trusted by hundreds of clients worldwide, offering fast and secure solutions.

Puran Crypto Recovery stands out as a premier player in the industry, offering a range of services that have earned them a reputation for reliability and innovation. Here's a comprehensive review highlighting the key aspects that make Puran Crypto Recovery a standout choice:

Doxxed Owners: Transparency is crucial in any industry, and Puran Crypto Recovery excels in this aspect by having doxxed owners. This commitment to transparency instills trust and confidence among users, knowing that the people behind the company are accountable and accessible.

Conference Presence: Puran Crypto Recovery maintains a strong presence at industry conferences, demonstrating its commitment to staying updated with the latest trends and fostering networking opportunities. Their active participation in such events underscores their dedication to continuous improvement and staying ahead of the curve.

Media Coverage: With significant media coverage, Puran Crypto Recovery has garnered attention for its innovative solutions and contributions to the industry. Positive media coverage serves as a testament to the company's credibility and impact within the field.

Trustpilot Score: Puran Crypto Recovery boasts an impressive Trustpilot score, reflecting the satisfaction and trust of its user base. High ratings on platforms like Trustpilot indicate a track record of delivering quality services and customer satisfaction.

Google Ranking: A strong Google ranking speaks volumes about Puran Crypto Recovery's online presence and reputation. It signifies that the company is easily discoverable and recognized as a reputable entity within the industry.

Support Time Response: Puran Crypto Recovery prioritizes prompt and efficient support, ensuring that customer inquiries and issues are addressed in a timely manner. Quick response times demonstrate a commitment to customer satisfaction and effective problem resolution.

Incorporation Jurisdiction Score: Puran Crypto Recovery's choice of incorporation jurisdiction reflects careful consideration of legal and regulatory factors. This strategic decision underscores the company's commitment to compliance and operating within a secure and stable legal framework.

Community Activity: Active engagement in communities such as Bitcointalk, Hashcat, GitHub, and Reddit showcases Puran Crypto Recovery's dedication to fostering a vibrant and supportive ecosystem. Participation in these platforms enables the company to gather feedback, collaborate with enthusiasts, and contribute to the community's growth.

Social Media Presence: Puran Crypto Recovery maintains a strong presence across various social media platforms, including X and LinkedIn. Active engagement on social media not only enhances brand visibility but also facilitates direct communication with users and stakeholders.

Transparency and Accountability

Industry Leadership and Innovation

Exceptional Customer Satisfaction

Strong commitment to privacy and security

Legal Compliance and Stability

Educational resources available

Community Engagement and Collaboration

Currency

Supported wallets

Bitcoin, Ethereum, Multibit, Trezor, and MetaMask wallets.

-

A cold fear clawed at my throat as I watched $120,000, my life savings, vanish into the digital abyss with a single, ill-fated click on a seemingly legit website. My financial future crumbled like a sandcastle under a rogue wave, leaving me gasping for security. Days bled into weeks, each one a gut-wrenching symphony of despair and frantic Googling. Every "lost funds recovery" claim screamed "scam" in crimson neon. Until, amidst the digital rubble, I stumbled upon Lee Ultimate Hacker — a flicker so faint I almost missed it, but a tenacious shadow nonetheless. Could this company, with its seemingly fantastical promise, truly be my knight in shining armor? I devoured testimonials like a drowning man grasping at lifelines thrown across the void. Finally, fueled by a desperate hope, I reached out. From the first hesitant email, Lee Ultimate Hacker exuded empathy. Their team, a chorus of patient voices and reassuring tones, walked me through the intricate dance of data recovery. Every update, every hurdle overcome, chipped away at the ice encasing my heart. Weeks later, the unthinkable happened. Lee Ultimate Hacker did it. They retrieved my $120,000, meticulously piecing together the shattered fragments of my financial security. Tears, this time joyful, streamed down my face as the numbers materialized on my screen, tangible proof of a miracle. More than just recovering my funds, they reminded me that kindness, expertise, and sheer determination can triumph even in the darkest corners of the digital world. Today, I stand taller, my voice a testament to their prowess. I consider myself not just lucky, but eternally grateful. Remember, your story is a powerful tool to raise awareness about online scams and inspire others facing similar situations. Don't let your misfortune be in vain. Let it be a beacon of hope, a testament to the power of resilience and the magic of unexpected allies like Lee Ultimate Hacker. 
Lee Ultimate Hacker proved to be the beacon of hope I desperately needed. From the moment I reached out, their empathy and expertise shone through. Their team guided me with patience and reassurance, turning what seemed like an impossible situation into a success story. Their meticulous approach to data recovery left no stone unturned, ultimately restoring my financial security and faith in humanity. Their testimonials spoke volumes, offering a lifeline in a sea of doubt. Unlike other recovery services that felt like scams, Lee Ultimate Hacker delivered tangible results, proving themselves to be trustworthy allies in the fight against online fraud. I wholeheartedly recommend Lee Ultimate Hacker to anyone facing a similar predicament. They are not just a company; they are guardians of hope, capable of turning despair into triumph with their expertise and dedication.

Contact info:

L E E U L T I M A T E H A C K E R @ A O L . C O M

S u p p o r t @ l e e u l t i m a t e h a c k e r . c o m

t e l e g r a m : L E E U L T I M A T E

w h @ t s a p p + 1 ( 7 1 5 ) 3 1 4 - 9 2 4 8

-

I was scammed by an Instagram account pretending to be a celebrity. I engaged with this scammer for five months through WhatsApp and ended up sending money via Bitcoin. The scammer then coerced me into providing my banking login details, leading them to steal $20,000 from my unemployment funds. They convinced me that they had routed cash to my account, and they deliberately avoided giving me any time to verify this supposed transaction, ultimately taking advantage of my trust. Throughout this ordeal, the scammer was incredibly patient and manipulative, waiting for eight months before I finally became suspicious and decided to expose them.

I made a video on WhatsApp detailing the scam, hoping it would help others avoid falling into the same trap. However, by then, my financial situation had become dire. The scammer demanded that I send Bitcoin via an ATM, continuing their deception even as I sought a resolution. Fortuitously, just as I was nearing desperation, I discovered BOTNET CRYPTO RECOVERY.

They stepped in at a crucial moment and managed to recover my $20,000. Their timely intervention and expertise in fund recovery proved to be a lifesaver.

The team at BOTNET CRYPTO RECOVERY is truly skilled in their field, demonstrating an impressive capability to not only recover stolen funds but also to expose and dismantle scam operations. Their service was exceptional. They provided clear communication and actionable strategies, working diligently to ensure that my money was returned. The recovery process was handled with the utmost professionalism and efficiency. Seeing my funds returned was a huge relief, and it was evident that BOTNET CRYPTO RECOVERY was well-versed in handling such complex and sensitive situations. I have since recommended BOTNET CRYPTO RECOVERY to several friends and colleagues, all of whom have been equally impressed with their services. They have become my go-to recommendation for anyone dealing with similar issues, whether it involves recovering lost funds or addressing various online scams. Their expertise extends beyond just recovery; they offer comprehensive solutions for individuals and businesses facing financial fraud.

BOTNET CRYPTO RECOVERY’s ability to address both hacking and fund recovery issues has been invaluable to me. They have various skills and strategies for tackling these challenges, and their success in helping me recover my stolen funds speaks volumes about their capabilities. They approach each case with a unique strategy tailored to the specifics of the situation, ensuring the best possible outcome for their clients. Reflecting on my experience, I am immensely grateful for the intervention of BOTNET CRYPTO RECOVERY. Their support has not only helped me regain my lost funds but also provided me peace of mind during a highly stressful period.

The professionalism and dedication demonstrated by their team have solidified my confidence in their services. If you find yourself in a situation involving financial fraud or scams, I highly recommend reaching out to BOTNET CRYPTO RECOVERY. Their proven track record and specialized expertise make them a top choice for recovering stolen funds and tackling online scams. They have been instrumental in my financial recovery, and their assistance has made a significant difference in my life.

EMAIL THEM: chat@botnetcryptorecovery.info -

SCAMMED BITCOIN RECOVERY MADE EASY WITH ASSET RESCUE SPECIALIST

Asset Rescue Specialist is an exceptional service that deserves every bit of praise it receives. As a user who experienced the devastating consequences of a crypto scam, I can attest to the sheer brilliance and professionalism displayed by Asset Rescue Specialists in rectifying the situation. From the moment I reached out to them, I was impressed by their approach, which exuded seriousness and a commitment to helping clients recover from their financial losses. This level of dedication instilled confidence in me right from the start, as I knew I was dealing with professionals who truly understood the gravity of the situation. One of the most remarkable aspects of Asset Rescue Specialist is the sheer genius of their team. They possess unparalleled expertise in navigating the complexities of the digital landscape, particularly when it comes to recovering lost cryptocurrencies. Their ability to devise innovative strategies and employ cutting-edge techniques sets them apart as true masters of their craft. Moreover, Asset Rescue Specialist comes highly recommended by numerous individuals who have benefited from their services. This widespread acclaim is a testament to their track record of success and their unwavering commitment to delivering results for their clients. It speaks volumes about the trust and confidence that people place in their abilities to resolve even the most challenging cases. In my own experience, Asset Rescue Specialist exceeded all expectations by successfully recovering my scammed crypto. This outcome not only brought me immense relief but also enabled me to pay off my debts and regain control of my financial situation. The impact of their assistance cannot be overstated, and I am forever grateful to them for coming to my aid in my time of need. What sets Asset Rescue Specialist apart from other similar services is its personalized approach to each case. 
They understand that every situation is unique and requires a tailored strategy to achieve the best possible outcome. This level of attention to detail ensures that clients receive the individualized support they need to overcome their challenges effectively. Furthermore, Asset Rescue Specialists operate with the utmost integrity and transparency, ensuring that clients are kept informed every step of the way. They prioritize clear communication and are always available to address any concerns or questions that may arise throughout the recovery process. This commitment to openness fosters trust and fosters a strong sense of partnership between clients and the Asset Rescue Specialist team. In conclusion, I wholeheartedly endorse Asset Rescue Specialist for anyone in need of assistance with recovering lost cryptocurrencies or resolving other digital financial issues. Their professionalism, expertise, and dedication are truly unmatched, and I am confident that anyone who seeks their services will be in the best possible hands. Thank you, Asset Rescue Specialist, for your invaluable assistance – you have made a profound difference in my life, and I am forever grateful.

Please find their contact info below.

Email: Assetrescuespecialist(@) qualityservice (.) com

Telegram user: assetrescueservices

-

ETHEREUM AND USDT RECOVERY EXPERT- HIRE SALVAGE ASSET RECOVERY

The moment my Bitcoin wallet froze mid-transfer, stranding $410,000 in cryptographic limbo, I felt centuries of history slip through my fingers. That balance wasn’t just wealth; it was a lifeline for forgotten libraries, their cracked marble floors and water-stained manuscripts waiting to breathe again. The migration glitch struck like a corrupted index: one second, funds flowed smoothly; the next, the transaction hung “Unconfirmed,” its ID number mocking me in glowing red. Days bled into weeks as support tickets evaporated into corporate ether. I’d haunt the stacks of my local library, tracing fingers over brittle Dickens volumes, whispering, “I’m sorry,” to ghosts of scholars past. Then Marian, a silver-haired librarian with a crypto wallet tucked beside her ledger, found me slumped at a mahogany study carrel. “You’ve got the blockchain stare,” she murmured, pressing a Post-it into my palm.

Salvage Asset Recovery. “They resurrected my nephew’s Ethereum after a smart contract imploded. Go.”

I emailed them at midnight, my screen’s blue glare mixing with moonlight through stained-glass windows. By dawn, their engineers had dissected the disaster. The glitch, they explained, wasn’t a hack but a protocol mismatch, a handshake between wallet versions that failed mid-encryption, freezing funds like a book jammed in a pneumatic tube. “Your Bitcoin isn’t lost,” assured a specialist named Leo. “It’s stuck in a cryptographic limbo. We’ll debug the transaction layer by layer.”

Thirteen days of nerve-shredding limbo followed. I’d refresh blockchain explorers obsessively, clinging to updates: “Reverse-engineering OP_RETURN outputs…” “Bypassing nonce errors—progress at 72%...” My library blueprints, quotes for climate-controlled archives, plans for AR-guided tours sat untouched, their ink fading under my doubt. Then, on a frostbitten morning, the email arrived: “Transaction invalidated. Funds restored.” I watched, trembling, as my wallet repopulated $410,000 glowing like a Gutenberg Bible under museum lights.

Salvage Asset Recovery didn’t just reclaim my Bitcoin; they salvaged a bridge between past and future. Today, the first restored library stands in a 19th-century bank building, its vault now a digital archive where blockchain ledgers track preservation efforts. Patrons sip fair-trade coffee under vaulted ceilings, swiping NFTs that unlock rare manuscript scans, a symbiosis of parchment and Python code.

These assets are more than technicians; they’re custodians of legacy. When code fails, they speak its dead languages, reviving what the digital world dismisses as lost. And to Marian, who now hosts Bitcoin literacy workshops between poetry readings, you were the guardian angel this techno-hermit didn’t know to pray for.

If your crypto dreams stall mid-flight, summon Salvage Asset Recovery. They’ll rewrite the code, rebuild the bridge, and ensure history never becomes a footnote. All thanks to Salvage Asset Recovery- their contact info

TELEGRAM---@Salvageasset

WhatsApp+ 1 8 4 7 6 5 4 7 0 9 6 1

-

CRYPTOCURRENCY RECOVERY SERVICES: BOTNET CRYPTO RECOVERY

As the cryptocurrency market continues to evolve and grow, it's no secret that the number of scams, frauds, and cyber-attacks has also increased exponentially. The anonymity and lack of regulation in the crypto space make it a breeding ground for malicious actors, leaving innocent investors vulnerable to financial losses. This is exactly why BOTNET CRYPTO RECOVERY, a trailblazing cryptocurrency recovery company, has emerged as a beacon of hope for those who have fallen prey to these nefarious activities.

With a proven track record of successfully recovering millions of dollars' worth of stolen or lost cryptocurrencies, BOTNET CRYPTO RECOVERY has established itself as the most trusted and reliable recovery company worldwide. Their team of expert cybersecurity specialists, forensic analysts, and blockchain experts work tirelessly to track down and retrieve stolen assets, using cutting-edge technology and innovative strategies to stay one step ahead of the scammers.

What sets them apart from other recovery companies is their unwavering commitment to their clients. They understand the emotional and financial distress that comes with losing hard-earned savings, and they are dedicated to providing a personalized, empathetic, and confidential service that puts their clients' needs above all else. Their dedicated support team is available 24/7 to guide you through the recovery process, ensuring that you are informed and empowered every step of the way. Their countless success stories are a testament to their expertise and dedication. From retrieving stolen Bitcoin from a phishing scam to recovering Ethereum lost in a Ponzi scheme, they have helped numerous individuals and businesses regain control of their digital assets. Their clients' testimonials speak volumes about their exceptional service and unparalleled results:

BOTNET CRYPTO RECOVERY is a Godsend. I had lost all hope after falling victim to a sophisticated phishing scam, but their team worked tirelessly to recover my stolen Bitcoin. I couldn't be more grateful for their professionalism and expertise.

I was skeptical at first, but BOTNET CRYPTO RECOVERY truly delivered on their promise. They recovered my lost Ethereum and helped me understand how to protect myself from future scams. I highly recommend their services to anyone who has been a victim of cryptocurrency fraud. If you or someone you know has fallen victim to a cryptocurrency scam or fraud, don't hesitate to reach out to them. Their team is ready to help you recover your losses and take back control of your digital assets.

Contact them today to schedule a consultation and take the first step towards reclaiming your financial freedom.

Email: [ support@ botnetcryptorecovery . com ]

Phone: +1 (431) 801-8951

Website: [ botnetcryptorecovery. com ]

Let us help you weave a safer web in the world of cryptocurrency.

-

HACKING EXPERTS IN STOLEN CRYPTO AND DIGITAL ASSETS: TRUST GEEKS HACK EXPERT

Cryptocurrency space is fraught with risks, particularly when it comes to Bitcoin, which has become a prime target for scammers as Cryptocurrency Catch on incidents of loss due to fraudulent schemes, hacks, and deceptive practices have surged. In this challenging environment, the need for effective recovery solutions has never been more critical. TRUST GEEKS HACK EXPERT has emerged as a leading authority in the cryptocurrency recovery sector, specializing in helping individuals and businesses recover Bitcoin lost to scammers. TRUST GEEKS HACK EXPERT has built a strong reputation for its expertise in tracing and recovering lost Bitcoin, leveraging advanced techniques and tools to navigate the complexities of the cryptocurrency ecosystem. Their dedicated team understands the intricacies of various scams, from phishing attacks to Ponzi schemes, and is well-equipped to assist clients in reclaiming their assets. The company's commitment to excellence is reflected in the numerous accolades it has received from both satisfied clients and industry peers, underscoring its effectiveness in delivering results. Clients who have fallen victim to scams often find themselves in distressing situations, unsure of how to proceed. Contact service visit Website h t t p s :// trust geeks hack expert .c o m / - - E m a i l. Trustgeekshackexpert [At] fast service [Dot] c o m provides a lifeline, offering professional guidance and support throughout the recovery process. Their approach is characterized by transparency, communication, and a deep understanding of the emotional toll that losing Bitcoin to scammers can take. By fostering trust and loyalty, TRUST GEEKS HACK EXPERT has become a go-to choice for those seeking to recover their lost assets. Moreover, the company's dedication to staying ahead of industry trends and continuously refining its methodologies has set high standards for service quality. 
By investing in ongoing training and development, TRUST GEEKS HACK EXPERT ensures that its team is equipped with the latest knowledge and skills necessary to tackle the evolving challenges posed by cryptocurrency scams. For anyone seeking reliable recovery solutions for Bitcoin lost to scammers, the industry recognition of TRUST GEEKS HACK EXPERT speaks volumes about their capabilities and reputation.

-

Bitcoin and Crypto Lost to Scam Can Now be Recovered / Go to Captain WebGenesis

Reputable Expert in Cryptocurrency Recovery

CAPTAIN WEBGENESIS boasts a team of seasoned professionals who understand the complexities of blockchain technology and digital assets. Their deep knowledge enables them to navigate through intricate recovery processes effectively. Whether it's recovering lost private keys or addressing security breaches, you can rely on their expertise to get your assets back safely. CAPTAIN WEBGENESIS has a remarkable track record of recovering assets for clients, instilling confidence in their methods and practices. Their client testimonials and case studies speak volumes about the successful outcomes they have achieved, making them a reliable choice for those in distress.

Email: captainwebgenesis@ hackemail. com

-

PROFESSIONAL CRYPTO RECOVERY COMPANY; BITCOIN AND ETH RECOVERY EXPERT HIRE CYBER CONSTABLE INTELLIGENCE

In early 2025, I was captivated by a cryptocurrency trading company that promised extraordinary financial rewards. Their marketing materials boasted high returns, expert mentorship, and the allure of becoming a self-sufficient online trader. Eager to capitalize on the volatile crypto market, I underwent a series of assessments to prove my trading aptitude and dedication. The third test, a rigorous simulation involving real-time market analysis, risk management strategies, and cryptocurrency volatility scenarios, convinced me of the platform’s legitimacy. By this stage, I had invested roughly $56,000, reassured by their polished interface and assurances that my funds were secure and growing. The turning point came when I attempted to withdraw my accumulated balance of $273,000, which included trading commissions and a $25,000 “loyalty bonus.” Instead of processing my request, the company demanded an additional $83,000 to “finalize tax compliance protocols” and unlock my account. They framed this as a routine step to “legitimize” my earnings and transition me to a “permanent trader” tier. Skepticism set in as their communication grew evasive: delayed responses, vague explanations about blockchain “security fees,” and sudden claims of “wallet activation errors.” Alarm bells rang louder when they cited “suspicious activity” on my account, insisting another $50,000 deposit was needed to “verify liquidity” and release my funds. Each delay came with new urgency, threats of account suspension, forfeited profits, or even legal repercussions for “breaching terms.” Exhausted by their manipulative tactics, I refused further payments and began researching recovery options. A trusted colleague recommended Cyber Constable Intelligence, a firm specializing in crypto fraud cases. Their team swiftly identified red flags: fake trading volumes, fabricated regulatory certifications, and wallet addresses linked to offshore exchanges. 
Through forensic analysis, they traced my funds, recovering a significant portion within weeks, restoring my hope and financial stability.

Here's their Info below

WhatsApp: 1 252378-7611

Website info; www cyberconstableintelligence com

Email Info cyberconstable@coolsite net

Telegram Info: cyberconstable2 -

Uncover Your Cheating Partner Through Cryptic Trace Technologies

I cannot recommend Cryptic Trace Technologies enough for their exceptional service and professionalism. When I suspected infidelity in my marriage, I felt lost and unsure of how to gather evidence without breaching trust or going beyond legal boundaries. Cryptic Trace Technologies came highly recommended, and from the moment I reached out, their team demonstrated empathy, discretion, and expertise. They provided a clear explanation of their processes and reassured me that everything they did would be within the confines of the law. This transparency immediately put me at ease. Their technical capabilities are truly impressive. Using advanced tools and methods, they were able to uncover communications and patterns that confirmed my suspicions. The team provided detailed reports and evidence, ensuring that everything was clear and easy to understand. What stood out most was their thoroughness—there was no stone left unturned. Their findings not only validated my concerns but also provided the closure I needed to make informed decisions about my future. What I appreciated most about Cryptic Trace Technologies was their professionalism and sensitivity throughout this difficult time. They handled my case with care and ensured my privacy was protected at every step. They maintained constant communication and were available to answer my questions, no matter how small or technical. This level of customer service is rare and speaks volumes about their commitment to supporting their clients during such personal and emotionally charged situations. If you are in a situation where you need clarity, whether it’s for infidelity, fraud, or other issues requiring digital investigation, I wholeheartedly recommend Cryptic Trace Technologies. They combine cutting-edge technology with compassionate service, making them a reliable and trustworthy partner during challenging times. Their work helped me uncover the truth and take control of my life, and for that, I am incredibly grateful. 
Reach out to them through their emails: cryptictrace @ technologist. Com

Cryptictracetechnologies @ zohomail . Com

Website: cryptictracetechnologies . Com

Whatsapp: +158790568031 -

Bitcoin Recovery Solutions: Crypto Recovery Expert - Hire A Hacker For Crypto Scam Recovery Services

Captain WebGenesis is a cutting-edge recovery service that specializes in helping individuals regain access to their lost cryptocurrency. With a team of experts and advanced technology, they have become a beacon of hope for many in the crypto community. I found myself a victim of a Crypto scam that cost me a staggering $46,000. Just when I thought all hope was gone, I discovered Captain WebGenesis Crypto Assets Recovery Expert who was able to reclaim my lost Bitcoin.

What sets Captain WebGenesis apart from other crypto recovery services is their impressive track record. Many users, including myself, have shared success stories of recovering their lost assets. Testimonials on their website speak volumes about their dedication and effectiveness in resolving complex recovery cases.

More Information,

(Captainwebgenesis. com),

Email: (captainwebgenesis@hackermail. com).

-

Crypto Recovery Services | Recover Your Stolen Crypto : How to Recover Stolen Cryptocurrency

Top Ways To Recover Funds From Crypto Scam

CAPTAIN WEBGENESIS CRYPTO RECOVERY CENTER is a renowned expert in the cryptocurrency community, specializing in recovery solutions for lost crypto assets. With years of experience and a proven track record, it has helped countless individuals regain access to their digital fortunes. CAPTAIN WEBGENESIS CRYPTO RECOVERY CENTER has successfully assisted countless clients in recovering their lost or inaccessible cryptocurrencies. Their impressive track record speaks volumes about their reliability and success rates. Client testimonials highlight their professionalism and effectiveness, reassuring potential customers that they are in good hands. The CAPTAIN WEBGENESIS CRYPTO RECOVERY CENTER team is well-versed in the nuances of different blockchain technologies, enabling them to devise tailored solutions for each unique case.

captainwebgenesis@ hackermail. com

Call or Whatsapp +1(501)436-9362

Visit website: captainwebgenesis. com

-

Discover the Best Crypto Recovery Service: Captain WebGenesis

Captain WebGenesis has established itself as a leader in crypto recovery services. With a team of seasoned professionals who specialize in digital asset recovery, they have successfully assisted countless clients in reclaiming their lost cryptocurrencies. Their reputation speaks volumes, with numerous positive testimonials and case studies showcasing their expertise.

Why Choose Captain WebGenesis for Crypto Recovery?

The team at Captain WebGenesis understands that each case is unique. They offer tailored recovery solutions for various scenarios, including:

Hacked Wallets: If your wallet has been compromised, their experts utilize advanced techniques to trace and recover your stolen assets.

Lost Private Keys: Forgetting your private key can be a nightmare. Captain WebGenesis employs innovative methods to help you regain access to your funds.

Unresponsive Exchanges: If an exchange has frozen your account, their legal and technical experts work tirelessly to resolve the situation.

-

I just went through a super long debugging process trying to figure out what was going on with my ZFS volumes. It turned out I had bad memory:

https://battlepenguin.com/tech/...

-

Crypto Scam Recovery Services: How To Hire a Hacker For Crypto Scam Recovery - Captain WebGenesis.

How To Recover From Fake Cryptocurrency Investment?

Captain WebGenesis is a renowned figure in the cryptocurrency recovery space. With years of experience in blockchain technology and cybersecurity, Captain WebGenesis has developed unique methodologies for tracking lost or stolen BTC. By leveraging advanced software tools and forensic analysis, he is able to trace transactions and track down missing assets.

Captain WebGenesis has successfully recovered millions of dollars worth of Bitcoin for clients worldwide. His testimonials speak volumes, with many satisfied customers praising his efficient and transparent methods.

Contact Info:

Mail: { Captainwebgenesis@ hackermail . com}

Whatsapp: +1,50,14,36,93,62

Homepage; Captainwebgenesis. co m1